Commit

•

e1f3b59

1

Parent(s):

d192fe8

Update README.md


README.md

CHANGED

|

@@ -2,57 +2,106 @@

|

|

| 2 |

license: cc-by-nc-4.0

|

| 3 |

base_model: adept/fuyu-8b

|

| 4 |

tags:

|

| 5 |

-

-

|

|

| 6 |

model-index:

|

| 7 |

-

- name:

|

| 8 |

results: []

|

|

|

|

|

|

|

|

|

|

|

|

|

| 9 |

---

|

| 10 |

|

| 11 |

-

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

|

| 12 |

-

should probably proofread and complete it, then remove this comment. -->

|

| 13 |

|

| 14 |

-

#

|

| 15 |

|

| 16 |

-

|

| 17 |

|

| 18 |

-

|

| 19 |

|

| 20 |

-

More information needed

|

| 21 |

|

| 22 |

-

##

|

| 23 |

|

| 24 |

-

|

|

|

|

|

|

|

| 25 |

|

| 26 |

-

##

|

| 27 |

|

| 28 |

-

|

| 29 |

|

| 30 |

-

##

|

| 31 |

|

| 32 |

-

|

| 33 |

|

| 34 |

-

|

| 35 |

-

- learning_rate: 1e-05

|

| 36 |

-

- train_batch_size: 1

|

| 37 |

-

- eval_batch_size: 1

|

| 38 |

-

- seed: 42

|

| 39 |

-

- distributed_type: multi-GPU

|

| 40 |

-

- num_devices: 16

|

| 41 |

-

- gradient_accumulation_steps: 8

|

| 42 |

-

- total_train_batch_size: 128

|

| 43 |

-

- total_eval_batch_size: 16

|

| 44 |

-

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

|

| 45 |

-

- lr_scheduler_type: cosine

|

| 46 |

-

- lr_scheduler_warmup_ratio: 0.03

|

| 47 |

-

- num_epochs: 1.0
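The batch-size and scheduler values listed above are related by simple arithmetic, which the sketch below works through. It is an illustration only: `effective_batch_size` and `cosine_lr` are hypothetical helper names, `total_steps=1000` is an assumed value (the step count is not stated in the card), and the schedule shown is the common "linear warmup, then cosine decay to zero" form, which may differ in detail from what the Trainer actually ran.

```python
import math


def effective_batch_size(per_device: int, num_devices: int, grad_accum: int) -> int:
    """Samples contributing to one optimizer step across all devices."""
    return per_device * num_devices * grad_accum


def cosine_lr(step: int, total_steps: int, base_lr: float = 1e-5,
              warmup_ratio: float = 0.03) -> float:
    """Linear warmup followed by cosine decay to zero (assumed schedule shape)."""
    warmup_steps = int(total_steps * warmup_ratio)
    if step < warmup_steps:
        # Ramp linearly from 0 up to base_lr during warmup.
        return base_lr * step / max(1, warmup_steps)
    # Fraction of the post-warmup phase that has elapsed, in [0, 1].
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return base_lr * 0.5 * (1.0 + math.cos(math.pi * progress))


# train_batch_size=1, num_devices=16, gradient_accumulation_steps=8
print(effective_batch_size(1, 16, 8))  # 128, matching total_train_batch_size

# Learning rate at a few points of an assumed 1000-step run.
for step in (0, 500, 1000):
    print(step, cosine_lr(step, total_steps=1000))
```

The same product with `grad_accum=1` gives 16, which matches `total_eval_batch_size`, since evaluation does not accumulate gradients.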

|

| 48 |

|

| 49 |

-

###

|

|

|

|

|

|

|

|

|

|

| 50 |

|

|

| 51 |

|

| 52 |

|

| 53 |

-

|

|

|

|

|

|

|

| 54 |

|

| 55 |

-

|

| 56 |

-

|

| 57 |

-

|

| 58 |

-

|

|

| 2 |

license: cc-by-nc-4.0

|

| 3 |

base_model: adept/fuyu-8b

|

| 4 |

tags:

|

| 5 |

+

- multimodal

|

| 6 |

+

- fuyu

|

| 7 |

+

- mfuyu

|

| 8 |

+

- mantis

|

| 9 |

+

- lmm

|

| 10 |

+

- vlm

|

| 11 |

model-index:

|

| 12 |

+

- name: Mantis-8B-Fuyu

|

| 13 |

results: []

|

| 14 |

+

datasets:

|

| 15 |

+

- TIGER-Lab/Mantis-Instruct

|

| 16 |

+

language:

|

| 17 |

+

- en

|

| 18 |

---

|

| 19 |

|

|

|

|

|

|

|

| 20 |

|

| 21 |

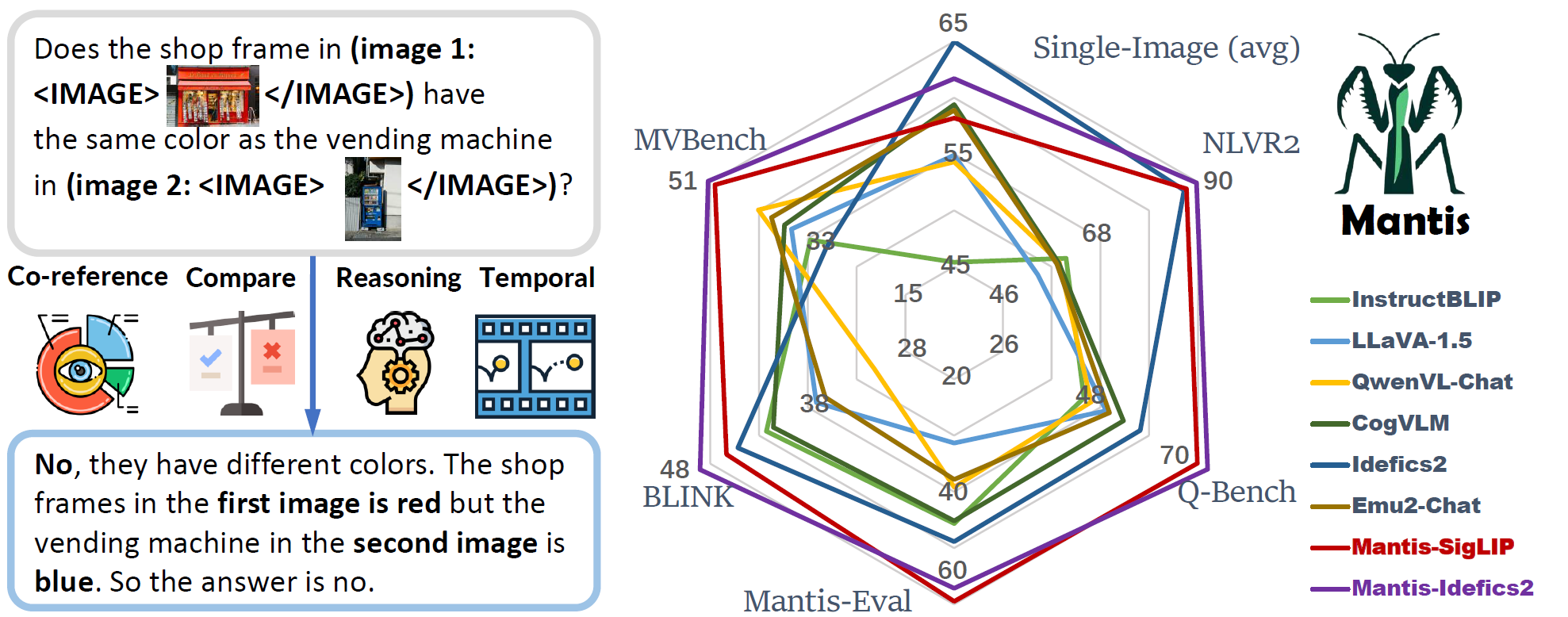

+

# 🔥 Mantis

|

| 22 |

|

| 23 |

+

[Paper](https://arxiv.org/abs/2405.01483) | [Website](https://tiger-ai-lab.github.io/Mantis/) | [Github](https://github.com/TIGER-AI-Lab/Mantis) | [Models](https://huggingface.co/collections/TIGER-Lab/mantis-6619b0834594c878cdb1d6e4) | [Demo](https://huggingface.co/spaces/TIGER-Lab/Mantis)

|

| 24 |

|

| 25 |

+

|

| 26 |

|

|

|

|

| 27 |

|

| 28 |

+

## Summary

|

| 29 |

|

| 30 |

+

- Mantis is a LLaMA-3 based LMM that takes **interleaved text and images as inputs**, trained on Mantis-Instruct under academic-level resources (i.e., 36 hours on 16xA100-40G).

|

| 31 |

+

- Mantis is trained to have multi-image skills including co-reference, reasoning, comparison, and temporal understanding.

|

| 32 |

+

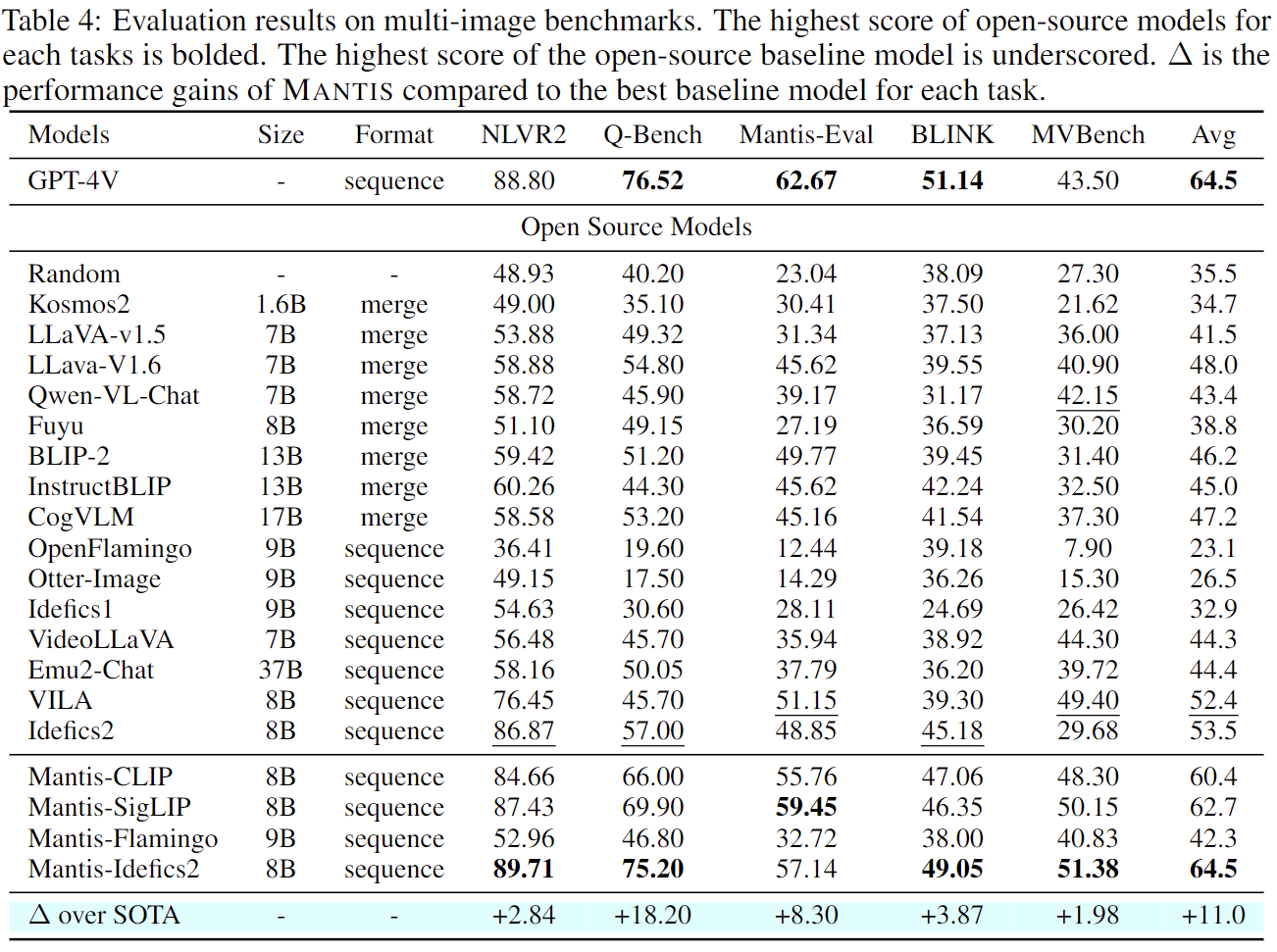

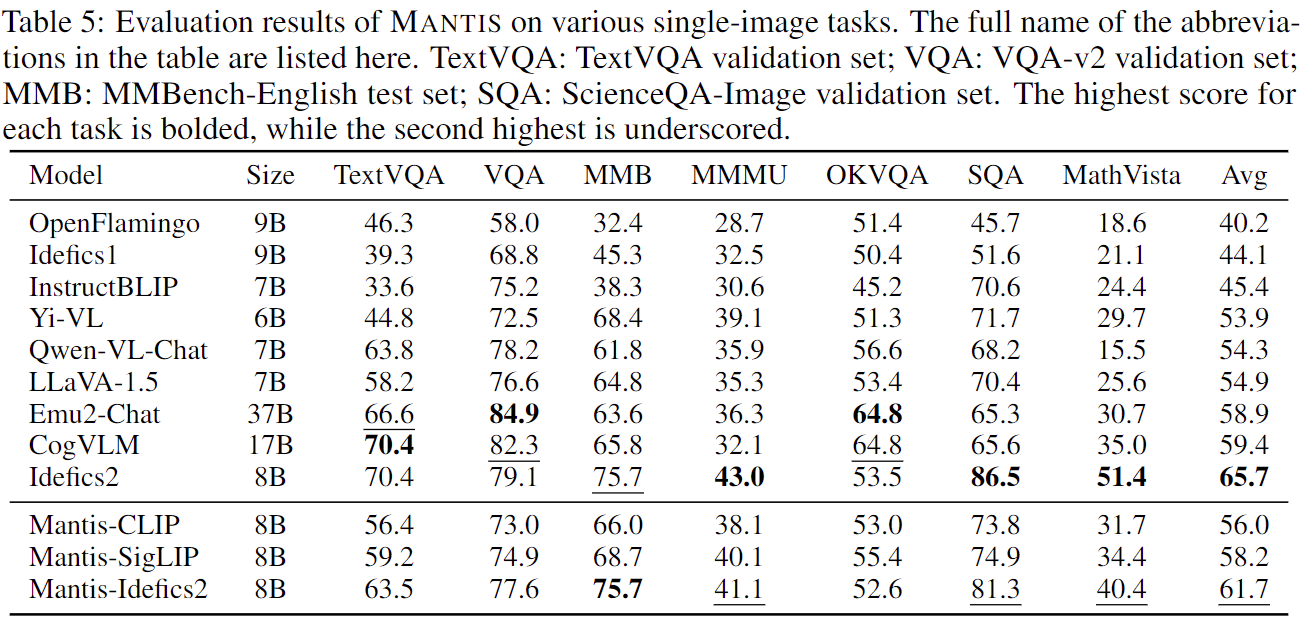

- Mantis reaches state-of-the-art performance on five multi-image benchmarks (NLVR2, Q-Bench, BLINK, MVBench, Mantis-Eval), and also maintains strong single-image performance on par with CogVLM and Emu2.

|

| 33 |

|

| 34 |

+

## Multi-Image Performance

|

| 35 |

|

| 36 |

+

|

| 37 |

|

| 38 |

+

## Single-Image Performance

|

| 39 |

|

| 40 |

+

|

| 41 |

|

| 42 |

+

## How to use

|

|

| 43 |

|

| 44 |

+

### Installation

|

| 45 |

+

```bash

|

| 46 |

+

pip install git+https://github.com/TIGER-AI-Lab/Mantis.git

|

| 47 |

+

```

|

| 48 |

|

| 49 |

+

### Run example inference:

|

| 50 |

+

```python

|

| 51 |

+

from mantis.models.mllava import chat_mllava

|

| 52 |

+

from PIL import Image

|

| 53 |

+

import torch

|

| 54 |

|

| 55 |

|

| 56 |

+

image1 = "image1.jpg"

|

| 57 |

+

image2 = "image2.jpg"

|

| 58 |

+

images = [Image.open(image1), Image.open(image2)]

|

| 59 |

|

| 60 |

+

# load processor and model

|

| 61 |

+

from mantis.models.mllava import MLlavaProcessor, LlavaForConditionalGeneration

|

| 62 |

+

processor = MLlavaProcessor.from_pretrained("TIGER-Lab/Mantis-8B-siglip-llama3")

|

| 63 |

+

attn_implementation = None # or "flash_attention_2"

|

| 64 |

+

model = LlavaForConditionalGeneration.from_pretrained("TIGER-Lab/Mantis-8B-siglip-llama3", device_map="cuda", torch_dtype=torch.bfloat16, attn_implementation=attn_implementation)

|

| 65 |

+

|

| 66 |

+

generation_kwargs = {

|

| 67 |

+

"max_new_tokens": 1024,

|

| 68 |

+

"num_beams": 1,

|

| 69 |

+

"do_sample": False

|

| 70 |

+

}

|

| 71 |

+

|

| 72 |

+

# chat

|

| 73 |

+

text = "Describe the difference of <image> and <image> as much as you can."

|

| 74 |

+

response, history = chat_mllava(text, images, model, processor, **generation_kwargs)

|

| 75 |

+

|

| 76 |

+

print("USER: ", text)

|

| 77 |

+

print("ASSISTANT: ", response)

|

| 78 |

+

|

| 79 |

+

text = "How many wallets are there in image 1 and image 2 respectively?"

|

| 80 |

+

response, history = chat_mllava(text, images, model, processor, history=history, **generation_kwargs)

|

| 81 |

+

|

| 82 |

+

print("USER: ", text)

|

| 83 |

+

print("ASSISTANT: ", response)

|

| 84 |

+

|

| 85 |

+

"""

|

| 86 |

+

USER: Describe the difference of <image> and <image> as much as you can.

|

| 87 |

+

ASSISTANT: The second image has more variety in terms of colors and designs. While the first image only shows two brown leather pouches, the second image features four different pouches in various colors and designs, including a purple one with a gold coin, a red one with a gold coin, a black one with a gold coin, and a brown one with a gold coin. This variety makes the second image more visually interesting and dynamic.

|

| 88 |

+

USER: How many wallets are there in image 1 and image 2 respectively?

|

| 89 |

+

ASSISTANT: There are two wallets in image 1, and four wallets in image 2.

|

| 90 |

+

"""

|

| 91 |

+

```
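In the first turn of the example above, the prompt contains two `<image>` placeholders and two images are passed. Assuming each placeholder is meant to line up with one image in order (an assumption, not something the card states), a small pre-flight check can catch mismatches before a slow model call. `IMAGE_TOKEN`, `count_image_placeholders`, and `placeholders_match` are hypothetical helpers and are not part of the `mantis` package.

```python
IMAGE_TOKEN = "<image>"  # placeholder string used in the example prompt


def count_image_placeholders(text: str) -> int:
    """Count non-overlapping occurrences of the image placeholder."""
    return text.count(IMAGE_TOKEN)


def placeholders_match(text: str, num_images: int) -> bool:
    """True when the prompt has exactly one placeholder per image."""
    return count_image_placeholders(text) == num_images


prompt = "Describe the difference of <image> and <image> as much as you can."
print(placeholders_match(prompt, 2))  # True
```

Follow-up turns in the example reuse the earlier images through `history` and contain no placeholders, so a check like this would only apply to the first turn.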

|

| 92 |

+

|

| 93 |

+

### Training

|

| 94 |

+

See [mantis/train](https://github.com/TIGER-AI-Lab/Mantis/tree/main/mantis/train) for details.

|

| 95 |

+

|

| 96 |

+

### Evaluation

|

| 97 |

+

See [mantis/benchmark](https://github.com/TIGER-AI-Lab/Mantis/tree/main/mantis/benchmark) for details.

|

| 98 |

+

|

| 99 |

+

## Citation

|

| 100 |

+

```

|

| 101 |

+

@inproceedings{Jiang2024MANTISIM,

|

| 102 |

+

title={MANTIS: Interleaved Multi-Image Instruction Tuning},

|

| 103 |

+

author={Dongfu Jiang and Xuan He and Huaye Zeng and Cong Wei and Max W.F. Ku and Qian Liu and Wenhu Chen},

|

| 104 |

+

publisher={arXiv2405.01483},

|

| 105 |

+

year={2024},

|

| 106 |

+

}

|

| 107 |

+

```

|