Create README.md

README.md

ADDED

@@ -0,0 +1,85 @@

## Description

This repo contains fp16 files of [Norquinal/Mistral-7B-claude-chat](https://huggingface.co/Norquinal/Mistral-7B-claude-chat) with the LoRA [lemonilia/LimaRP-Mistral-7B-v0.1](https://huggingface.co/lemonilia/LimaRP-Mistral-7B-v0.1) applied at a weight of 0.75.

All credit goes to [lemonilia](https://huggingface.co/lemonilia) and [Norquinal](https://huggingface.co/Norquinal).

## Prompt format

Same as before. The model uses the [extended Alpaca format](https://github.com/tatsu-lab/stanford_alpaca),
with `### Input:` immediately preceding user inputs and `### Response:` immediately preceding
model outputs. Although Alpaca was not originally intended for multi-turn conversations, in practice this
is not a problem; the format follows a pattern already used by other models.

```
### Instruction:
Character's Persona: {bot character description}

User's Persona: {user character description}

Scenario: {what happens in the story}

Play the role of Character. You must engage in a roleplaying chat with User below this line. Do not write dialogues and narration for User.

### Input:
User: {utterance}

### Response:
Character: {utterance}

### Input:
User: {utterance}

### Response:
Character: {utterance}

(etc.)
```

You should:
- Replace all text in curly braces (curly braces included) with your own text.
- Replace `User` and `Character` with appropriate names.
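
The substitution steps above can be sketched in Python. This is only an illustration, not part of the model's tooling: the function name `build_prompt` and its parameters are hypothetical, and ending the prompt with an open `### Response:` header followed by the character name is an assumption about how you would ask the model for the next reply.

```python
def build_prompt(char_name, user_name, char_persona, user_persona, scenario, turns):
    """Assemble a prompt in the extended Alpaca format described above.

    `turns` is a list of (speaker, utterance) pairs, where speaker is either
    `user_name` or `char_name`. All names here are illustrative assumptions.
    """
    lines = [
        "### Instruction:",
        f"{char_name}'s Persona: {char_persona}",
        "",
        f"{user_name}'s Persona: {user_persona}",
        "",
        f"Scenario: {scenario}",
        "",
        f"Play the role of {char_name}. You must engage in a roleplaying chat with "
        f"{user_name} below this line. Do not write dialogues and narration for {user_name}.",
        "",
    ]
    for speaker, utterance in turns:
        # User messages go under "### Input:", character messages under "### Response:".
        header = "### Input:" if speaker == user_name else "### Response:"
        lines += [header, f"{speaker}: {utterance}", ""]
    # Assumption: leave the last response open so the model completes it.
    lines += ["### Response:", f"{char_name}:"]
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_prompt(
        "Aria", "Sam", "a travelling bard", "a tired merchant",
        "They meet at a roadside inn.", [("Sam", "Hello!")],
    ))
```

Building the prompt from a list of turns keeps the `### Input:` / `### Response:` alternation in one place, so a multi-turn history cannot drift from the format.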

### Message length control

Inspired by the previously named "Roleplay" preset in SillyTavern, this
version of LimaRP lets you append a length modifier to the response instruction
sequence, like this:

```
### Input:
User: {utterance}

### Response: (length = medium)
Character: {utterance}
```

|

| 55 |

+

|

| 56 |

+

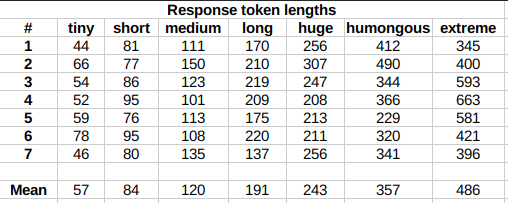

This has an immediately noticeable effect on bot responses. The available lengths are:

|

| 57 |

+

`tiny`, `short`, `medium`, `long`, `huge`, `humongous`, `extreme`, `unlimited`. **The

|

| 58 |

+

recommended starting length is `medium`**. Keep in mind that the AI may ramble

|

| 59 |

+

or impersonate the user with very long messages.

|

| 60 |

+

|

| 61 |

+

The length control effect is reproducible, but the messages will not necessarily follow

|

| 62 |

+

lengths very precisely, rather follow certain ranges on average, as seen in this table

|

| 63 |

+

with data from tests made with one reply at the beginning of the conversation:

|

| 64 |

+

|

| 65 |

+

|

| 66 |

+

|

| 67 |

+

Response length control appears to work well also deep into the conversation.
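
As a minimal sketch of the length modifier described in this section, the helper below builds the response header and rejects values outside the documented list. The names `LENGTHS` and `response_header` are hypothetical and chosen only for this example.

```python
# Length values listed in this README; the tuple name is an illustrative assumption.
LENGTHS = ("tiny", "short", "medium", "long", "huge", "humongous", "extreme", "unlimited")


def response_header(length="medium"):
    """Return the response instruction sequence with a length modifier appended."""
    if length not in LENGTHS:
        raise ValueError(f"unknown length {length!r}; expected one of {LENGTHS}")
    return f"### Response: (length = {length})"


if __name__ == "__main__":
    print(response_header())        # uses the recommended starting length
    print(response_header("long"))
```

Validating the value up front avoids silently sending the model a modifier it was never trained on.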

## Suggested settings

|

| 70 |

+

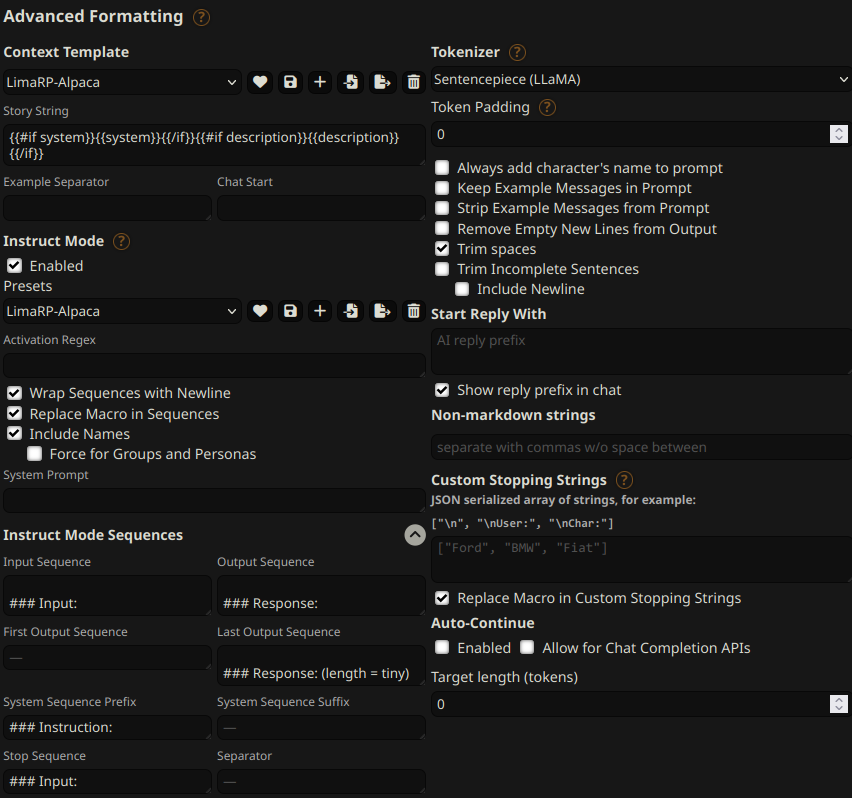

You can follow these instruction format settings in SillyTavern. Replace `tiny` with

|

| 71 |

+

your desired response length:

|

| 72 |

+

|

| 73 |

+

|

| 74 |

+

|

| 75 |

+

## Text generation settings

Extensive testing with Mistral has not been performed yet, but the following may be
reasonable starting text generation settings:

- TFS = 0.90~0.95
- Temperature = 0.70~0.85
- Repetition penalty = 1.08~1.10
- top-k = 0 (disabled)
- top-p = 1 (disabled)
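
For convenience, the ranges above can be written down as a small Python structure. This is a sketch only: the key names (`tfs`, `temperature`, `repetition_penalty`, `top_k`, `top_p`) are assumptions and vary between backends, and picking the midpoint of each range is an arbitrary choice made here, not a recommendation from the source.

```python
# Suggested ranges from the list above; key names are assumptions and
# should be mapped to whatever your inference backend actually calls them.
SUGGESTED_RANGES = {
    "tfs": (0.90, 0.95),
    "temperature": (0.70, 0.85),
    "repetition_penalty": (1.08, 1.10),
}

# Samplers listed as disabled.
DISABLED = {"top_k": 0, "top_p": 1.0}


def starting_settings():
    """Pick the midpoint of each suggested range (an arbitrary starting point)."""
    settings = {
        name: round((low + high) / 2, 3)
        for name, (low, high) in SUGGESTED_RANGES.items()
    }
    settings.update(DISABLED)
    return settings


if __name__ == "__main__":
    for name, value in starting_settings().items():
        print(f"{name} = {value}")
```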

If you want to support me, you can do so [here](https://ko-fi.com/undiai).