update readme


README.md


@@ -7,99 +7,118 @@ language:


---


<!-- ## **HunyuanDiT** -->


</p>


# Hunyuan-DiT: A Multi-Resolution Diffusion Transformer with Fine-Grained Chinese Understanding


Please install PyTorch first, following the instructions at [https://pytorch.org](https://pytorch.org).


Then install the latest GitHub version of 🤗 Diffusers with `pip`:


```


pip install git+https://github.com/huggingface/diffusers.git


```


```
# prompt = "An astronaut riding a horse"
prompt = "一个宇航员在骑马"  # Chinese for "An astronaut riding a horse"
image = pipe(prompt).images[0]


```


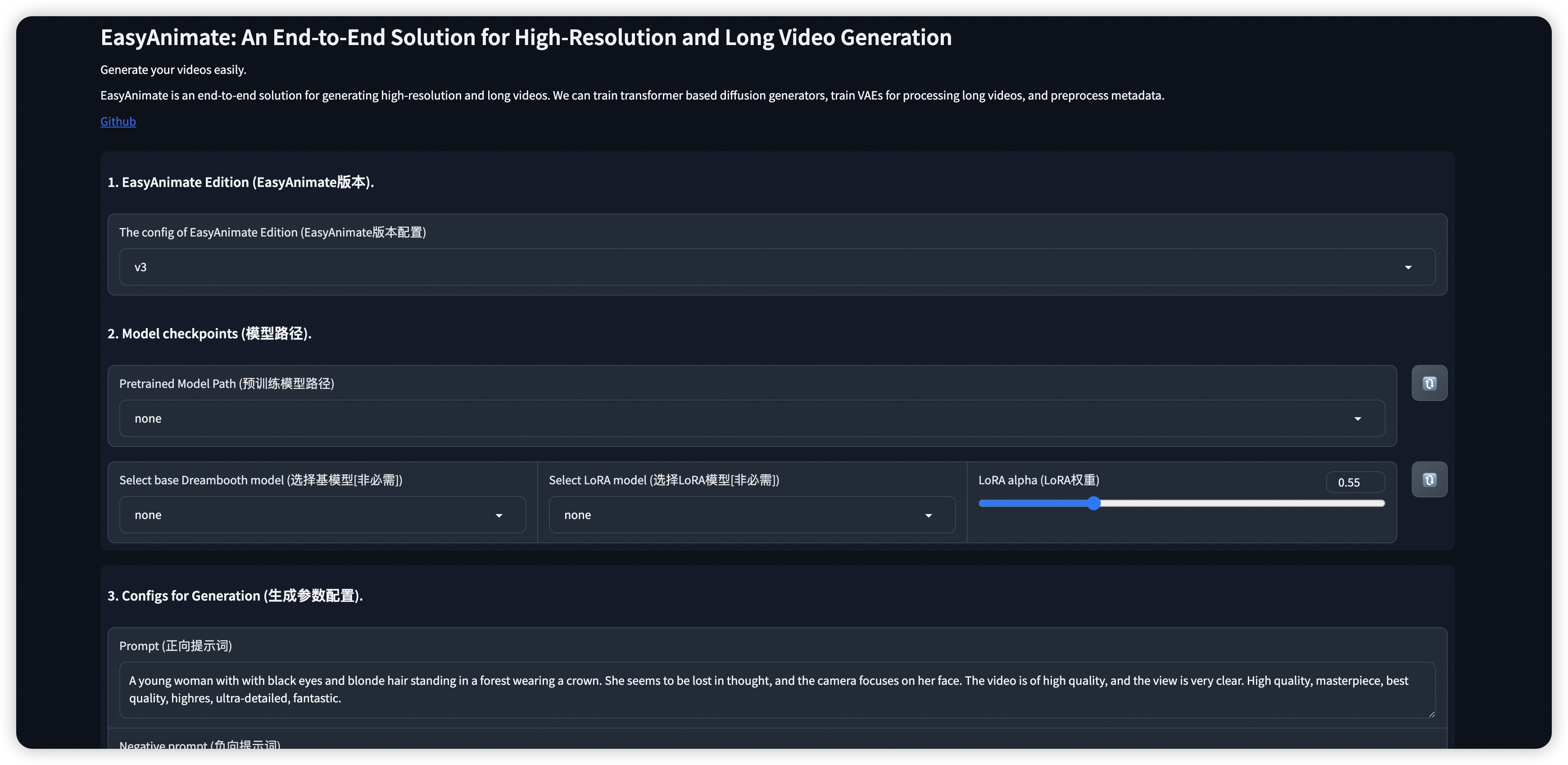

# 📷 EasyAnimate | An End-to-End Solution for High-Resolution and Long Video Generation (V4)


😊 EasyAnimate is an end-to-end solution for generating high-resolution and long videos. We can train transformer-based diffusion generators, train VAEs for processing long videos, and preprocess metadata.


😊 Based on a Sora-like structure and DiT, we use a transformer as the diffuser for video generation. We built EasyAnimate on a motion module, U-ViT, and Slice VAE. In the future, we will try more training schemes to improve the results.


😊 Welcome!


- Updated to version 4, which supports video generation at up to 1024x1024 resolution with 144 frames (6 seconds at 24 fps), as well as larger resolutions of 1280x1280 with 96 frames. It supports text-to-video, image-to-video, and video-to-video generation. A single model supports arbitrary resolutions from 512 to 1280 and bilingual prediction in Chinese and English. [2024.08.15]


# Table of Contents

- [Result Gallery](#result-gallery)
- [How to use](#how-to-use)
- [Model zoo](#model-zoo)
- [Algorithm Detailed](#algorithm-detailed)
- [TODO List](#todo-list)
- [Contact Us](#contact-us)
- [Reference](#reference)
- [License](#license)

# Result Gallery


These are our generated results:


<video controls src="https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/asset/v3/EasyAnimate-v3-DemoShow.mp4" title="movie"></video>


Our UI is as follows:


# How to use

```
# clone the code
git clone https://github.com/aigc-apps/EasyAnimate.git

# enter EasyAnimate's directory
cd EasyAnimate

# download the weights
mkdir -p models/Diffusion_Transformer
mkdir -p models/Motion_Module
mkdir -p models/Personalized_Model

cd models/Diffusion_Transformer/
git lfs install
git clone https://huggingface.co/alibaba-pai/EasyAnimateV4-XL-2-InP

cd ../../
```


# Model zoo


EasyAnimateV3:

| Name | Type | Storage Space | Url | Hugging Face | Description |
|--|--|--|--|--|--|
| EasyAnimateV3-XL-2-InP-512x512.tar | EasyAnimateV3 | 16.2 GB | [Download](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/

EasyAnimateV4:


We attempted to implement EasyAnimate with 3D full attention, but this structure performed only moderately with Slice VAE and incurred considerable training costs. As a result, V4 did not significantly outperform V3. Due to limited resources, we are migrating EasyAnimate to a retrained 16-channel MagViT to pursue better model performance.


| Name | Type | Storage Space | Url | Hugging Face | Description |
|--|--|--|--|--|--|
| EasyAnimateV4-XL-2-InP.tar.gz | EasyAnimateV4 | Before extraction: 8.9 GB / After extraction: 14.0 GB | [Download](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/Diffusion_Transformer/EasyAnimateV4-XL-2-InP.tar.gz) | [🤗Link](https://huggingface.co/alibaba-pai/EasyAnimateV4-XL-2-InP) | Our official image-to-video model. It can predict videos at multiple resolutions (512, 768, 1024, 1280) and was trained on 144 frames at 24 frames per second. |


# Algorithm Detailed


### 1. Data Preprocessing


**Video Cut**


For cutting long videos, EasyAnimate uses PySceneDetect to detect scene changes and cuts the video where the change exceeds a threshold, so that each segment stays thematically consistent. After cutting, only segments between 3 and 10 seconds long are kept for model training.
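
A minimal Python sketch of the cut-then-filter step, assuming a per-frame content-change score is already available (PySceneDetect's `ContentDetector` computes a comparable score internally). The function names, the threshold, and the toy scores are hypothetical; only the 3 to 10 second window comes from the text above.

```python
from typing import List, Tuple

Segment = Tuple[float, float]  # (start_sec, end_sec)


def cut_scenes(frame_diffs: List[float], fps: float, threshold: float) -> List[Segment]:
    """Cut wherever the change between consecutive frames exceeds `threshold`.

    frame_diffs[i] is a (hypothetical) content-change score between frame i and
    frame i + 1, so a video with n frames has n - 1 scores.
    """
    n_frames = len(frame_diffs) + 1
    segments: List[Segment] = []
    start = 0
    for i, diff in enumerate(frame_diffs):
        if diff > threshold:
            # Frame i + 1 opens a new scene, so the current one ends there.
            segments.append((start / fps, (i + 1) / fps))
            start = i + 1
    segments.append((start / fps, n_frames / fps))  # last scene runs to the end
    return segments


def keep_for_training(segments: List[Segment], min_sec: float = 3.0,
                      max_sec: float = 10.0) -> List[Segment]:
    """Keep only segments whose duration lies within [min_sec, max_sec]."""
    return [(s, e) for s, e in segments if min_sec <= e - s <= max_sec]


# Toy example: 40 frames at 2 fps (20 s) with two sharp content changes.
diffs = [0.0] * 39
diffs[3] = diffs[13] = 0.9
scenes = cut_scenes(diffs, fps=2.0, threshold=0.5)
print(scenes)                     # [(0.0, 2.0), (2.0, 7.0), (7.0, 20.0)]
print(keep_for_training(scenes))  # [(2.0, 7.0)]
```

The 2 s and 13 s scenes fall outside the window and are dropped; in a real pipeline the scores would come from the scene detector rather than a hand-written list.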


**Video Cleaning and Description**


Following SVD's data preparation process, EasyAnimate provides a simple yet effective data processing pipeline for high-quality data filtering and labeling. It also supports distributed processing to speed up data preprocessing. The overall process is as follows:


- Duration filtering: Analyze the basic information of each video to filter out low-quality videos that are short or low in resolution.
- Aesthetic filtering: Filter out videos with poor content (blurry, dim, etc.) by computing the average aesthetic score of 4 uniformly sampled frames.
- Text filtering: Use EasyOCR to compute the proportion of text in the middle frames and filter out videos with a large proportion of text.
- Motion filtering: Compute inter-frame optical flow differences to filter out videos that move too slowly or too quickly.
- Text description: Recaption video frames using VideoChat2 and VILA. PAI is also developing a higher-quality video recaptioning model, which will be released as soon as possible.
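
The filters above can be sketched as a single predicate over precomputed per-clip statistics. This is an illustration under stated assumptions, not EasyAnimate's code: the `ClipStats` fields are hypothetical stand-ins for the outputs of metadata probing, an aesthetic predictor, EasyOCR, and an optical-flow model, and every threshold is a placeholder.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class ClipStats:
    # Hypothetical precomputed statistics for one video clip.
    duration_sec: float
    height: int
    frame_aesthetics: List[float]  # aesthetic score for every frame
    text_ratio: float              # share of the middle frame(s) covered by detected text
    mean_flow: float               # mean optical-flow magnitude between frames


def sample_uniform(values: Sequence[float], k: int = 4) -> List[float]:
    """Pick k evenly spaced entries, including the first and last."""
    if k < 2:
        raise ValueError("k must be at least 2")
    n = len(values)
    if n <= k:
        return list(values)
    return [values[round(i * (n - 1) / (k - 1))] for i in range(k)]


def passes_filters(c: ClipStats, min_sec: float = 3.0, min_height: int = 512,
                   min_aesthetic: float = 4.5, max_text_ratio: float = 0.05,
                   min_flow: float = 0.5, max_flow: float = 20.0) -> bool:
    # All default thresholds are illustrative placeholders.
    if c.duration_sec < min_sec or c.height < min_height:    # duration / resolution
        return False
    scores = sample_uniform(c.frame_aesthetics, k=4)
    if not scores or sum(scores) / len(scores) < min_aesthetic:  # aesthetic
        return False
    if c.text_ratio > max_text_ratio:                          # text
        return False
    return min_flow <= c.mean_flow <= max_flow                 # motion: not too slow or too fast


good = ClipStats(6.0, 720, [5.0] * 10, 0.01, 3.0)
blurry = ClipStats(6.0, 720, [2.0] * 10, 0.01, 3.0)
static = ClipStats(6.0, 720, [5.0] * 10, 0.01, 0.1)
print([passes_filters(c) for c in (good, blurry, static)])  # [True, False, False]
```

Ordering the checks from cheapest to most expensive means a real pipeline could skip the costly models for clips already rejected by metadata.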


### 2. Model Architecture


We have adopted [PixArt-alpha](https://github.com/PixArt-alpha/PixArt-alpha) as the base model and modified the VAE and DiT model structures on this basis to better support video generation. The overall structure of EasyAnimate is as follows:


The diagram below outlines the pipeline of EasyAnimate. It includes the Text Encoder, Video VAE (video encoder and decoder), and Diffusion Transformer (DiT). The T5 Encoder is used as the text encoder. Other components are detailed in the sections below.


<img src="https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/asset/pipeline_v2.jpg" alt="ui" style="zoom:50%;" />


To introduce feature information along the temporal axis, EasyAnimate incorporates a Motion Module that extends the model from 2D images to 3D videos. For better generation quality, it jointly fine-tunes the backbone together with the Motion Module, so that image generation and video generation are handled within a single pipeline.
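
To make "features along the temporal axis" concrete, here is a minimal NumPy sketch of temporal self-attention in the spirit of AnimateDiff-style motion modules: each spatial token attends to the same position in the other frames, and a residual connection adds the result to the per-frame features. The shapes, random weights, and function names are hypothetical; this is not EasyAnimate's Motion Module implementation.

```python
import numpy as np


def temporal_self_attention(x: np.ndarray, w_q: np.ndarray, w_k: np.ndarray,
                            w_v: np.ndarray) -> np.ndarray:
    """Single-head self-attention along the frame axis.

    x has shape (batch, frames, tokens, channels). Every spatial token position is
    treated as an independent sequence over frames.
    """
    b, f, n, c = x.shape
    # (b, f, n, c) -> (b * n, f, c): one length-f sequence per (clip, token) pair.
    seq = x.transpose(0, 2, 1, 3).reshape(b * n, f, c)
    q, k, v = seq @ w_q, seq @ w_k, seq @ w_v
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(c)   # (b * n, f, f)
    scores -= scores.max(axis=-1, keepdims=True)     # numerically stable softmax
    attn = np.exp(scores)
    attn /= attn.sum(axis=-1, keepdims=True)
    out = attn @ v                                   # (b * n, f, c)
    # Back to (b, f, n, c).
    return out.reshape(b, n, f, c).transpose(0, 2, 1, 3)


def motion_block(x: np.ndarray, w_q, w_k, w_v, w_out) -> np.ndarray:
    # Residual: temporal information is added on top of the existing per-frame features.
    return x + temporal_self_attention(x, w_q, w_k, w_v) @ w_out


rng = np.random.default_rng(0)
x = rng.standard_normal((2, 8, 16, 32))  # 2 clips, 8 frames, 16 tokens per frame, 32 channels
w_q, w_k, w_v, w_out = (0.1 * rng.standard_normal((32, 32)) for _ in range(4))
y = motion_block(x, w_q, w_k, w_v, w_out)
print(y.shape)  # (2, 8, 16, 32)
```

Because attention only mixes frames at a fixed token position, the module adds motion information without changing how each frame is processed spatially.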


Additionally, following U-ViT, it introduces a skip connection structure into EasyAnimate that refines deep features by incorporating shallow ones. Each skip connection also includes a zero-initialized fully connected layer, which allows the structure to be added as a plug-in module to previously trained, well-performing DiTs.
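
Why zero initialization makes the skip connection a safe plug-in can be shown with a small NumPy sketch. It assumes one plausible wiring (shallow and deep features concatenated, projected by a zero-initialized linear layer, and added to the deep path); the toy blocks and the `ZeroInitSkip` and `forward` names are hypothetical. Because the projection starts at zero, the network's output is unchanged until training updates the new weights.

```python
import numpy as np


class ZeroInitSkip:
    """Long skip connection with a zero-initialized projection (illustrative sketch).

    Shallow and deep features are concatenated, projected back to the model width,
    and added to the deep features. With zero weights the block is an identity on
    the deep path, so inserting it does not change a pretrained network's output.
    """

    def __init__(self, width: int):
        self.weight = np.zeros((2 * width, width))
        self.bias = np.zeros(width)

    def __call__(self, shallow: np.ndarray, deep: np.ndarray) -> np.ndarray:
        fused = np.concatenate([shallow, deep], axis=-1) @ self.weight + self.bias
        return deep + fused


def forward(x, blocks, skips=None):
    """Run an even number of blocks; with skips, block i in the first half feeds its mirror in the second half."""
    half = len(blocks) // 2
    saved = []
    for i, block in enumerate(blocks):
        if skips is not None and i >= half:
            x = skips[i - half](saved.pop(), x)  # most recent shallow feature first
        x = block(x)
        if i < half:
            saved.append(x)
    return x


rng = np.random.default_rng(0)
width = 8
# Stand-ins for pretrained DiT blocks: fixed random weights with a tanh nonlinearity.
weights = [0.5 * rng.standard_normal((width, width)) for _ in range(4)]
blocks = [lambda h, w=w: np.tanh(h @ w) for w in weights]
tokens = rng.standard_normal((3, width))

baseline = forward(tokens, blocks)
plugged = forward(tokens, blocks, skips=[ZeroInitSkip(width), ZeroInitSkip(width)])
print(np.allclose(baseline, plugged))  # True: zero-initialized skips leave the output unchanged
```

Once the skip weights are trained away from zero, the shallow features start to influence the deep path, which is the intended effect of the U-ViT-style connection.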


Moreover, it proposes Slice VAE, which addresses the memory difficulties MagViT encounters with long and large videos, while also achieving greater temporal compression than MagViT during video encoding and decoding.
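
The memory argument behind slicing can be illustrated with a toy NumPy sketch: the video is encoded a fixed number of frames at a time, so the encoder never sees the whole clip at once. The encoder here is a hypothetical stand-in that averages groups of frames; a real Slice VAE uses learned layers, and the slice length and temporal compression factor below are illustrative assumptions. For this toy encoder, slicing gives exactly the same latents as a single pass; a learned encoder with temporal context would generally not be exactly slice-invariant.

```python
import numpy as np


def toy_encode(clip: np.ndarray, t_factor: int) -> np.ndarray:
    """Hypothetical stand-in for a VAE encoder: averages every t_factor frames.

    clip has shape (frames, height, width, channels); frames must be divisible by t_factor.
    """
    f, h, w, c = clip.shape
    return clip.reshape(f // t_factor, t_factor, h, w, c).mean(axis=1)


def slice_encode(video: np.ndarray, slice_len: int, t_factor: int) -> np.ndarray:
    """Encode a long video slice by slice along time and concatenate the latents.

    The encoder only ever sees slice_len frames at once, so its peak activation memory
    depends on slice_len rather than on the full video length.
    """
    if slice_len % t_factor or video.shape[0] % t_factor:
        raise ValueError("slice_len and the frame count must be multiples of t_factor")
    latents = [toy_encode(video[s:s + slice_len], t_factor)
               for s in range(0, video.shape[0], slice_len)]
    return np.concatenate(latents, axis=0)


rng = np.random.default_rng(0)
video = rng.standard_normal((144, 8, 8, 3))  # 144 frames of tiny 8x8 RGB images
latents = slice_encode(video, slice_len=16, t_factor=2)
print(latents.shape)                               # (72, 8, 8, 3)
print(np.allclose(latents, toy_encode(video, 2)))  # True for this toy encoder
```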


For more details, please refer to the paper on [arXiv](https://arxiv.org/abs/2405.18991).


# TODO List


- Support models with larger resolutions.
- Support a video inpainting model.


# Contact Us


1. Use Dingding to search for group 77450006752, or scan the QR code below to join.
2. Scan the QR code below to join the WeChat group. If the code has expired, first add the person below as a friend, and they will invite you.


<img src="https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/asset/group/dd.png" alt="ding group" width="30%"/>


<img src="https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/asset/group/wechat.jpg" alt="Wechat group" width="30%"/>


<img src="https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/asset/group/person.jpg" alt="Person" width="30%"/>


# Reference


- MagViT: https://github.com/google-research/magvit
- PixArt: https://github.com/PixArt-alpha/PixArt-alpha
- Open-Sora-Plan: https://github.com/PKU-YuanGroup/Open-Sora-Plan
- Open-Sora: https://github.com/hpcaitech/Open-Sora
- AnimateDiff: https://github.com/guoyww/AnimateDiff


# License


This project is licensed under the [Apache License (Version 2.0)](https://github.com/modelscope/modelscope/blob/master/LICENSE).
