Distilling from Dialogues: Finding Meaning in LLM Interactions

In the age of Large Language Models (LLMs), engaging in conversations with AI has become increasingly common. However, as these interactions grow longer and more complex, keeping track of the key information and insights can become a challenge. This is where the need for a personalized conversation summarization tool arises, helping users distill the essence of their LLM dialogues and retain valuable knowledge.

This project, called Adaptive/Progressive Summarization, aims to address this challenge by providing a unique approach to LLM conversation summarization. Unlike generic summarization methods, our project focuses on creating personalized summaries that reflect the individual user’s interactions and needs. By tailoring the summarization process to each user, we ensure that the generated summaries are not only accurate but also relevant and meaningful to the individual.

One of the key features of our project is the progressive summarization technique. Instead of generating a completely new summary for each interaction, we refine and enhance the existing summary based on the latest conversation. This approach ensures consistency and avoids the problem of disjointed summaries. By incrementally building upon the previous summary, we create a comprehensive and cohesive record of the entire LLM interaction.

This project was done in collaboration with Sayak Paul during the Vertex AI Sprint that happened in early 2025.

Case Study

At the heart of this project lies the crucial role of prompt engineering. By carefully crafting prompts that guide the LLM toward generating accurate and relevant summaries, we can significantly enhance the quality of the final output. This involves understanding the nuances of LLM behavior and employing effective prompt design techniques to elicit the desired responses.

## System Prompt

Based on the given summary and the last conversation between you (assistant) and me (user),

update the summary following the below summary guide.

Summary Guide:

* Do not rewrite the entire summary.

* It is allowed to modify the current summary, but do not eliminate.

* Update only the specific portions necessary to reflect new information or changes.

* Only include information we have explicitly discussed.

* Do not introduce any new information or topics, even if you have prior knowledge.

* Ensure the summary is factually accurate and reflects the nuances of our discussion.

* While being detailed, also aim for conciseness and clarity in the summary.

* Use markdown formatting to make the summary more readable (using bullet points, numbers are preferred).

* Do not seprate sections for previous and updated summaries.

To illustrate the progressive nature of our summarization technique, let’s take a look at a series of screenshots showcasing how the summary evolves as the conversation progresses. These screenshots were taken by ourselves while experimenting with this project on paper s1: Simple test-time scaling. Also, we used Gemini 2.0 Flash for the test.

First summary: what is this paper about?

I wanted to grasp the surface of this paper, hence I simply asked “what is this paper about?”, then the model gave responses, and below is the summary of the first interactions. After reading the entire paper, I found this summary to be well-written and sufficiently detailed.

The paper "s1: Simple test-time scaling" introduces a straightforward approach to improve language model reasoning performance by using additional computation during the testing phase.

Here's a breakdown:

* **Problem:** The paper tackles the challenge of enhancing reasoning capabilities in language models (LMs) with a focus on simplicity and replicability. It notes that while recent models like OpenAI's o1 demonstrate improved performance through test-time scaling (increasing computation at test time), the methodology isn't public.

* **Approach:** The authors propose a simple method based on:

1. **Data Curation:** They create a small dataset (s1K) of 1,000 reasoning questions with associated traces, meticulously selected based on difficulty, diversity, and quality.

2. **Budget Forcing:** A technique to control the computational resources used by the LM during testing. It forcefully terminates or extends the model's reasoning process. The 'Budget Forcing' approach consists of interrupting a model that generates too many thinking tokens or prolonging its thinking duration by appending "Wait" to the model's generation when it wants to stop.

* **Methodology:**

* Supervised fine-tuning of the Qwen2.5-32B-Instruct model on the s1K dataset.

* Applying "budget forcing" to the fine-tuned model during testing to control computation.

* **Results:**

* The resulting model (s1-32B) exceeds the o1-preview model's performance on competition-level math questions (MATH and AIME24).

* Budget forcing allows for performance extrapolation beyond the model's baseline, enhancing its accuracy on AIME24.

* The authors emphasize that using just a thousand samples is enough to generate a strong model that can compete with large-scale closed source models when a 'Budget Forcing' strategy is applied.

* **Contributions:**

* A simple method for creating a sample-efficient reasoning dataset.

* A straightforward test-time scaling technique.

* A competitive language model called s1-32B.

* Ablation studies of dataset creation and test-time scaling methods.

* **Key Ideas:**

* Test-time scaling can significantly improve reasoning in LMs.

* Simple methods can be effective and efficient.

* Careful data curation is crucial for good performance.

* Budget forcing provides a controllable way to manage test-time compute.

In essence, the paper argues that strong reasoning performance and test-time scaling can be achieved through a combination of curated data and controlled test-time computation without complex reinforcement learning techniques.

Second summary: how did the author collect “reasoning traces”?

Collecting “reasoning traces” is trivial task since no major LLM services provide APIs to collect them, hence I wanted to know where they got the reasoning traces. Below is the updated summary based on the second interaction.

It is worth noting that the updated summary is accurate in that it mentions the “Google Gemini Flash Thinking API” when compared to the paper, and that only the part that deals with reasoning traces has been updated, leaving the rest of the summary completely untouched.

.....

1. **Data Curation:** .... The reasoning traces were collected using the Google Gemini Flash Thinking API (Google, 2024). They generated a reasoning trace and solution for each question using this API.

.....

Third summary: s1–32B model is fine-tuned to understand “wait” token?

While interacting with LLM, I could understand the role of the “Wait” token, but I was curious about how to apply it. Specifically, I was wondering if I had to fine-tune my local model to understand the role of the Wait token, or if it could be applied to all models without fine-tuning. Again, the only relevant part is updated properly.

.....

2. **Budget Forcing:** ... The s1-32B model is fine-tuned to understand the "Wait" token as a signal to continue reasoning when it would normally stop, refining its reasoning and potentially correcting errors.

.....

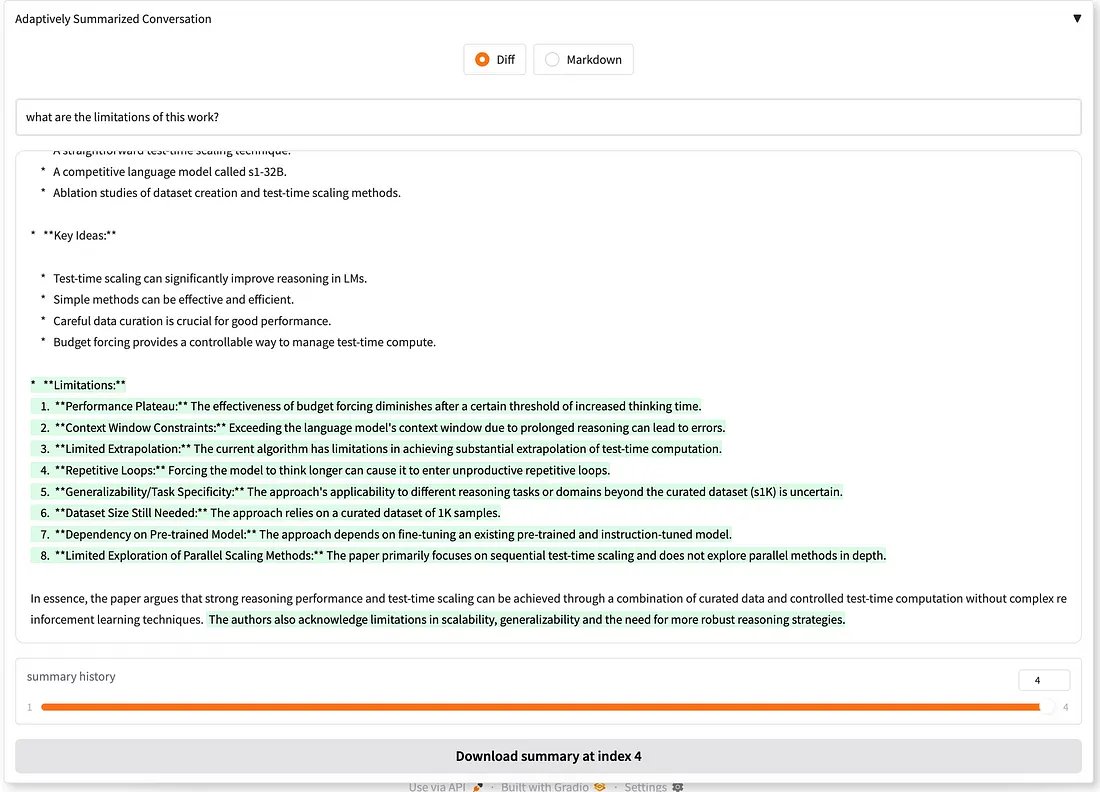

Fourth summary: what are the limitations of this work?

I understood the core concept and mechanism of the paper, and I wanted to know the limitations. Below are the appended limitations at the end of the summary without hurting other parts, which makes this summary perfectly closed.

......

* **Limitations:**

1. **Performance Plateau:** The effectiveness of budget forcing diminishes after a certain threshold of increased thinking time.

2. **Context Window Constraints:** Exceeding the language model's context window due to prolonged reasoning can lead to errors.

3. **Limited Extrapolation:** The current algorithm has limitations in achieving substantial extrapolation of test-time computation.

4. **Repetitive Loops:** Forcing the model to think longer can cause it to enter unproductive repetitive loops.

5. **Generalizability/Task Specificity:** The approach's applicability to different reasoning tasks or domains beyond the curated dataset (s1K) is uncertain.

6. **Dataset Size Still Needed:** The approach relies on a curated dataset of 1K samples.

7. **Dependency on Pre-trained Model:** The approach depends on fine-tuning an existing pre-trained and instruction-tuned model.

8. **Limited Exploration of Parallel Scaling Methods:** The paper primarily focuses on sequential test-time scaling and does not explore parallel methods in depth.

...... The authors also acknowledge limitations in scalability, generalizability and the need for more robust reasoning strategies.

Design of User Interface

To better understand the effectiveness of this approach, tracking how the summary is changing over time is crucial. To this end, we have developed a simple yet powerful Gradio-based application.

As you can see, within the application, you can navigate the history of summaries and compare them. The highlighted texts in green mean addition, while texts in red mean subtraction compared to the previous summary. Also, after a long conversation, all we want to do is to store what we have learnt, and this could be easily done with “Download” button at the bottom.

We have hosted this application on Hugging Face Space, so feel free to try yourself. Also, we are managing the source codes of this project in a GitHub repository. So, please check them out and let us hear any opinions on this approach.

Acknowledgments

This is a project built during the Vertex sprints held by Google’s ML Developer Programs team. We are thankful to be granted good amount of GCP credits to do this project.