Brain-Inspired Efficient Pruning: Exploiting Criticality in Spiking Neural Networks

Overview

- Novel brain-inspired pruning method for Spiking Neural Networks (SNNs)

- Uses criticality theory from neuroscience to identify important neurons

- Achieves up to 90% network compression while maintaining accuracy

- Introduces adaptive pruning schedule based on network dynamics

- Demonstrates effectiveness across multiple SNN architectures

Plain English Explanation

The brain naturally optimizes itself by strengthening important connections and pruning away less useful ones. The paper borrows this biological process to make artificial neural networks more efficient.

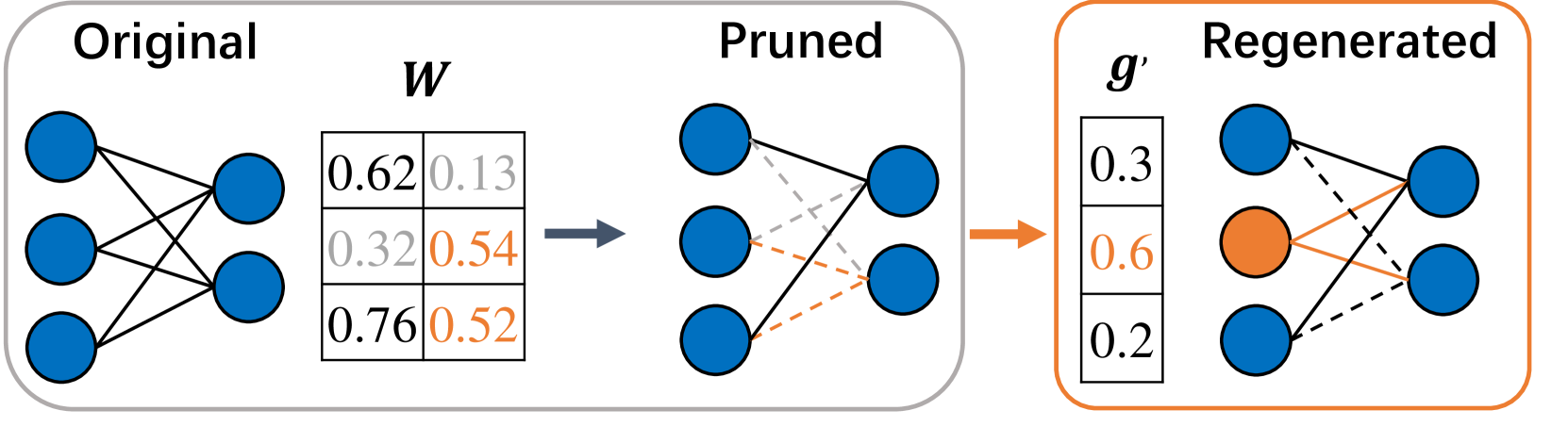

The researchers developed a method to identify which artificial neurons matter most by measuring how much each one influences overall network behavior, much as critical neurons in the brain have outsized effects on brain function. By removing the less important neurons and preserving the critical ones, they can dramatically reduce the size of a network without hurting its performance.

Think of it like pruning a tree: removing smaller branches while preserving the main structure keeps the tree healthy and makes it more manageable. The method also adapts the pace of pruning to how the network responds, just as a gardener adjusts their cuts based on how the tree reacts.

Key Findings

- Spiking neural networks can be compressed by up to 90% while maintaining accuracy

- Neurons flagged as critical by avalanche analysis matter more to network performance than the neurons that get pruned

- Adaptive pruning schedule outperforms fixed schedules

- Method works across different network architectures and datasets

- Pruned networks show improved energy efficiency

Technical Explanation

The research introduces a criticality-based pruning approach that identifies important neurons through neuronal avalanche analysis. The method monitors how spiking activity propagates through the network to determine which neurons trigger the largest cascades of downstream spikes.
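To make the idea of avalanche-based scoring concrete, here is a minimal Python sketch. It is not the paper's implementation, and every definition in it is an assumption made for illustration: the recorded activity is a binary spike raster of shape (time bins, neurons), an avalanche is a maximal run of consecutive time bins that each contain at least one spike, and a neuron's score is the mean size of the avalanches it helps initiate. The function names `avalanche_scores` and `prune_mask` are hypothetical.

```python
import numpy as np


def avalanche_scores(spikes):
    """Score neurons by the mean size of the avalanches they initiate.

    spikes: binary array of shape (T, N), one row per time bin.
    Assumption: an avalanche is a maximal run of consecutive non-silent bins,
    and its size is the total number of spikes inside that run.
    """
    spikes = np.asarray(spikes, dtype=bool)
    n_bins, n_neurons = spikes.shape
    active = spikes.any(axis=1)          # True where a bin holds any spike
    total = np.zeros(n_neurons)          # summed avalanche sizes per initiator
    count = np.zeros(n_neurons)          # number of avalanches initiated

    t = 0
    while t < n_bins:
        if not active[t]:
            t += 1
            continue
        start = t
        while t < n_bins and active[t]:  # extend until the next silent bin
            t += 1
        size = spikes[start:t].sum()
        initiators = spikes[start]       # neurons firing in the first bin
        total[initiators] += size
        count[initiators] += 1

    # Neurons that never initiate an avalanche get a score of 0.
    return np.divide(total, count, out=np.zeros(n_neurons), where=count > 0)


def prune_mask(scores, keep_fraction):
    """Return a boolean mask that keeps the highest-scoring neurons."""
    scores = np.asarray(scores, dtype=float)
    n_keep = max(1, int(round(keep_fraction * scores.size)))
    keep = np.argsort(-scores, kind="stable")[:n_keep]
    mask = np.zeros(scores.size, dtype=bool)
    mask[keep] = True
    return mask
```

Calling `avalanche_scores` on a raster recorded from one layer gives one score per neuron, and `prune_mask(scores, 0.1)` would then keep roughly the top 10% of neurons. Scoring only the initiating neurons is one simple reading of "triggering a cascade"; a real criterion may weight participation differently.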

The pruning schedule adapts dynamically based on network stability measures. When the network shows signs of instability, pruning slows down to give it time to recover. This helps prevent catastrophic forgetting while still allowing aggressive compression.
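The feedback loop described here can be sketched as a simple rate controller. The Python below is an illustration under stated assumptions, not the schedule used in the paper: the stability measure is taken to be a single non-negative number per pruning step (for example, the drop in validation accuracy since the previous step), and the threshold, shrink factor, growth factor, and rate limits are all hypothetical parameters, as are the function names.

```python
def next_prune_rate(rate, instability, threshold=0.02,
                    shrink=0.5, grow=1.1, min_rate=0.0, max_rate=0.2):
    """Choose the fraction of remaining neurons to prune in the next step.

    Assumption: `instability` is a scalar such as the recent accuracy drop.
    Above `threshold` the rate is cut; otherwise it grows slowly.
    """
    if instability > threshold:
        return max(min_rate, rate * shrink)   # back off so the network can recover
    return min(max_rate, rate * grow)         # stable: prune slightly faster


def run_schedule(instabilities, initial_rate=0.1, target_sparsity=0.9):
    """Simulate sparsity over a sequence of observed instability values."""
    rate, sparsity, history = initial_rate, 0.0, []
    for instability in instabilities:
        rate = next_prune_rate(rate, instability)
        # Each step removes `rate` of whatever is still unpruned.
        sparsity = min(target_sparsity, sparsity + rate * (1.0 - sparsity))
        history.append((rate, sparsity))
        if sparsity >= target_sparsity:
            break
    return history
```

For instance, `run_schedule([0.0, 0.05, 0.0, 0.0])` halves the pruning rate on the second step, where the instability exceeds the threshold, and then lets it grow again. In a real training loop the instability values would be measured after each pruning and fine-tuning round rather than supplied up front.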

Experiments covered multiple architectures, including feed-forward and convolutional SNNs. The method was tested on standard datasets such as MNIST and CIFAR-10 and showed consistent improvements over existing pruning approaches.

Critical Analysis

The research has limitations. The computational overhead of avalanche analysis could be significant for larger networks, and the method requires careful parameter tuning for each architecture.

While the biological inspiration is compelling, the paper could benefit from deeper analysis of how closely the artificial pruning process mirrors actual neural development. Additional research is needed to validate the approach on more complex tasks and network architectures.

Conclusion

This brain-inspired approach is a promising step in neural network optimization. By combining biological principles with engineering pragmatism, it compresses networks by up to 90% while maintaining accuracy. The method has potential applications in resource-constrained environments such as mobile devices and IoT systems.

The success of this biologically motivated approach suggests that further insights from neuroscience could lead to additional improvements in artificial neural networks. Future work could explore other biological mechanisms for network optimization.