url

stringlengths 58

61

| repository_url

stringclasses 1

value | labels_url

stringlengths 72

75

| comments_url

stringlengths 67

70

| events_url

stringlengths 65

68

| html_url

stringlengths 46

51

| id

int64 600M

2.05B

| node_id

stringlengths 18

32

| number

int64 2

6.51k

| title

stringlengths 1

290

| user

dict | labels

listlengths 0

4

| state

stringclasses 2

values | locked

bool 1

class | assignee

dict | assignees

listlengths 0

4

| milestone

dict | comments

sequencelengths 0

30

| created_at

unknown | updated_at

unknown | closed_at

unknown | author_association

stringclasses 3

values | active_lock_reason

float64 | draft

float64 0

1

⌀ | pull_request

dict | body

stringlengths 0

228k

⌀ | reactions

dict | timeline_url

stringlengths 67

70

| performed_via_github_app

float64 | state_reason

stringclasses 3

values | is_pull_request

bool 2

classes |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

https://api.github.com/repos/huggingface/datasets/issues/4829 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/4829/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/4829/comments | https://api.github.com/repos/huggingface/datasets/issues/4829/events | https://github.com/huggingface/datasets/issues/4829 | 1,336,068,068 | I_kwDODunzps5Posfk | 4,829 | Misalignment between card tag validation and docs | {

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova"

} | [

{

"color": "d73a4a",

"default": true,

"description": "Something isn't working",

"id": 1935892857,

"name": "bug",

"node_id": "MDU6TGFiZWwxOTM1ODkyODU3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/bug"

}

] | open | false | null | [] | null | [

"(Note that the doc is aligned with the hub validation rules, and the \"ground truth\" is the hub validation rules given that they apply to all datasets, not just the canonical ones)",

"Instead of our own implementation, we now use `huggingface_hub`'s `DatasetCardData`, which has the correct type hint, so I think we can close this issue."

] | "2022-08-11T14:44:45Z" | "2023-07-21T15:38:02Z" | null | MEMBER | null | null | null | ## Describe the bug

As pointed out in other issue: https://github.com/huggingface/datasets/pull/4827#discussion_r943536284

the validation of the dataset card tags is not aligned with its documentation: e.g.

- implementation: `license: List[str]`

- docs: `license: Union[str, List[str]]`

They should be aligned.

CC: @julien-c

| {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/4829/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/4829/timeline | null | null | false |

https://api.github.com/repos/huggingface/datasets/issues/41 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/41/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/41/comments | https://api.github.com/repos/huggingface/datasets/issues/41/events | https://github.com/huggingface/datasets/pull/41 | 611,739,219 | MDExOlB1bGxSZXF1ZXN0NDEyODQzNDQy | 41 | [Load module] allow kwargs into load module | {

"avatar_url": "https://avatars.githubusercontent.com/u/23423619?v=4",

"events_url": "https://api.github.com/users/patrickvonplaten/events{/privacy}",

"followers_url": "https://api.github.com/users/patrickvonplaten/followers",

"following_url": "https://api.github.com/users/patrickvonplaten/following{/other_user}",

"gists_url": "https://api.github.com/users/patrickvonplaten/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/patrickvonplaten",

"id": 23423619,

"login": "patrickvonplaten",

"node_id": "MDQ6VXNlcjIzNDIzNjE5",

"organizations_url": "https://api.github.com/users/patrickvonplaten/orgs",

"received_events_url": "https://api.github.com/users/patrickvonplaten/received_events",

"repos_url": "https://api.github.com/users/patrickvonplaten/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/patrickvonplaten/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/patrickvonplaten/subscriptions",

"type": "User",

"url": "https://api.github.com/users/patrickvonplaten"

} | [] | closed | false | null | [] | null | [] | "2020-05-04T09:42:11Z" | "2020-05-04T19:39:07Z" | "2020-05-04T19:39:06Z" | MEMBER | null | 0 | {

"diff_url": "https://github.com/huggingface/datasets/pull/41.diff",

"html_url": "https://github.com/huggingface/datasets/pull/41",

"merged_at": "2020-05-04T19:39:06Z",

"patch_url": "https://github.com/huggingface/datasets/pull/41.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/41"

} | Currenly it is not possible to force a re-download of the dataset script.

This simple change allows to pass ``force_reload=True`` as ``builder_kwargs`` in the ``load.py`` function. | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/41/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/41/timeline | null | null | true |

https://api.github.com/repos/huggingface/datasets/issues/3577 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3577/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3577/comments | https://api.github.com/repos/huggingface/datasets/issues/3577/events | https://github.com/huggingface/datasets/issues/3577 | 1,102,598,241 | I_kwDODunzps5BuFBh | 3,577 | Add The Mexican Emotional Speech Database (MESD) | {

"avatar_url": "https://avatars.githubusercontent.com/u/4755430?v=4",

"events_url": "https://api.github.com/users/omarespejel/events{/privacy}",

"followers_url": "https://api.github.com/users/omarespejel/followers",

"following_url": "https://api.github.com/users/omarespejel/following{/other_user}",

"gists_url": "https://api.github.com/users/omarespejel/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/omarespejel",

"id": 4755430,

"login": "omarespejel",

"node_id": "MDQ6VXNlcjQ3NTU0MzA=",

"organizations_url": "https://api.github.com/users/omarespejel/orgs",

"received_events_url": "https://api.github.com/users/omarespejel/received_events",

"repos_url": "https://api.github.com/users/omarespejel/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/omarespejel/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/omarespejel/subscriptions",

"type": "User",

"url": "https://api.github.com/users/omarespejel"

} | [

{

"color": "e99695",

"default": false,

"description": "Requesting to add a new dataset",

"id": 2067376369,

"name": "dataset request",

"node_id": "MDU6TGFiZWwyMDY3Mzc2MzY5",

"url": "https://api.github.com/repos/huggingface/datasets/labels/dataset%20request"

},

{

"color": "d93f0b",

"default": false,

"description": "",

"id": 2725241052,

"name": "speech",

"node_id": "MDU6TGFiZWwyNzI1MjQxMDUy",

"url": "https://api.github.com/repos/huggingface/datasets/labels/speech"

}

] | open | false | null | [] | null | [] | "2022-01-13T23:49:36Z" | "2022-01-27T14:14:38Z" | null | NONE | null | null | null | ## Adding a Dataset

- **Name:** *The Mexican Emotional Speech Database (MESD)*

- **Description:** *Contains 864 voice recordings with six different prosodies: anger, disgust, fear, happiness, neutral, and sadness. Furthermore, three voice categories are included: female adult, male adult, and child. *

- **Paper:** *[Paper](https://ieeexplore.ieee.org/abstract/document/9629934/authors#authors)*

- **Data:** *[link to the Github repository or current dataset location](https://data.mendeley.com/datasets/cy34mh68j9/3)*

- **Motivation:** *Would add Spanish speech data to the HF datasets :) *

Instructions to add a new dataset can be found [here](https://github.com/huggingface/datasets/blob/master/ADD_NEW_DATASET.md).

| {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/3577/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/3577/timeline | null | null | false |

https://api.github.com/repos/huggingface/datasets/issues/642 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/642/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/642/comments | https://api.github.com/repos/huggingface/datasets/issues/642/events | https://github.com/huggingface/datasets/pull/642 | 704,397,499 | MDExOlB1bGxSZXF1ZXN0NDg5MzMwMDAx | 642 | Rename wnut fields | {

"avatar_url": "https://avatars.githubusercontent.com/u/42851186?v=4",

"events_url": "https://api.github.com/users/lhoestq/events{/privacy}",

"followers_url": "https://api.github.com/users/lhoestq/followers",

"following_url": "https://api.github.com/users/lhoestq/following{/other_user}",

"gists_url": "https://api.github.com/users/lhoestq/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/lhoestq",

"id": 42851186,

"login": "lhoestq",

"node_id": "MDQ6VXNlcjQyODUxMTg2",

"organizations_url": "https://api.github.com/users/lhoestq/orgs",

"received_events_url": "https://api.github.com/users/lhoestq/received_events",

"repos_url": "https://api.github.com/users/lhoestq/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/lhoestq/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/lhoestq/subscriptions",

"type": "User",

"url": "https://api.github.com/users/lhoestq"

} | [] | closed | false | null | [] | null | [] | "2020-09-18T13:51:31Z" | "2020-09-18T17:18:31Z" | "2020-09-18T17:18:30Z" | MEMBER | null | 0 | {

"diff_url": "https://github.com/huggingface/datasets/pull/642.diff",

"html_url": "https://github.com/huggingface/datasets/pull/642",

"merged_at": "2020-09-18T17:18:30Z",

"patch_url": "https://github.com/huggingface/datasets/pull/642.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/642"

} | As mentioned in #641 it would be cool to have it follow the naming of the other NER datasets | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/642/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/642/timeline | null | null | true |

https://api.github.com/repos/huggingface/datasets/issues/4778 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/4778/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/4778/comments | https://api.github.com/repos/huggingface/datasets/issues/4778/events | https://github.com/huggingface/datasets/pull/4778 | 1,324,928,750 | PR_kwDODunzps48dRPh | 4,778 | Update local loading script docs | {

"avatar_url": "https://avatars.githubusercontent.com/u/59462357?v=4",

"events_url": "https://api.github.com/users/stevhliu/events{/privacy}",

"followers_url": "https://api.github.com/users/stevhliu/followers",

"following_url": "https://api.github.com/users/stevhliu/following{/other_user}",

"gists_url": "https://api.github.com/users/stevhliu/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/stevhliu",

"id": 59462357,

"login": "stevhliu",

"node_id": "MDQ6VXNlcjU5NDYyMzU3",

"organizations_url": "https://api.github.com/users/stevhliu/orgs",

"received_events_url": "https://api.github.com/users/stevhliu/received_events",

"repos_url": "https://api.github.com/users/stevhliu/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/stevhliu/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/stevhliu/subscriptions",

"type": "User",

"url": "https://api.github.com/users/stevhliu"

} | [

{

"color": "0075ca",

"default": true,

"description": "Improvements or additions to documentation",

"id": 1935892861,

"name": "documentation",

"node_id": "MDU6TGFiZWwxOTM1ODkyODYx",

"url": "https://api.github.com/repos/huggingface/datasets/labels/documentation"

}

] | closed | false | null | [] | null | [

"The docs for this PR live [here](https://moon-ci-docs.huggingface.co/docs/datasets/pr_4778). All of your documentation changes will be reflected on that endpoint.",

"I would rather have a section in the docs that explains how to modify the script of an existing dataset (`inspect_dataset` + modification + `load_dataset`) instead of focusing on the GH datasets bundled with the source (only applicable for devs).",

"Good idea! I went with @mariosasko's suggestion to use `inspect_dataset` instead of cloning a dataset repository since it's a good opportunity to show off more of the library's lesser-known functions if that's ok with everyone :)",

"One advantage of cloning the repo is that it fetches potential data files referenced inside a script using relative paths, so if we decide to use `inspect_dataset`, we should at least add a tip to explain this limitation and how to circumvent it.",

"Oh you're right. Calling `load_dataset` on the modified script without having the files that come with it is not ideal. I agree it should be `git clone` instead - and inspect is for inspection only ^^'"

] | "2022-08-01T20:21:07Z" | "2022-08-23T16:32:26Z" | "2022-08-23T16:32:22Z" | MEMBER | null | 0 | {

"diff_url": "https://github.com/huggingface/datasets/pull/4778.diff",

"html_url": "https://github.com/huggingface/datasets/pull/4778",

"merged_at": "2022-08-23T16:32:22Z",

"patch_url": "https://github.com/huggingface/datasets/pull/4778.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/4778"

} | This PR clarifies the local loading script section to include how to load a dataset after you've modified the local loading script (closes #4732). | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/4778/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/4778/timeline | null | null | true |

https://api.github.com/repos/huggingface/datasets/issues/5969 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/5969/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/5969/comments | https://api.github.com/repos/huggingface/datasets/issues/5969/events | https://github.com/huggingface/datasets/pull/5969 | 1,765,529,905 | PR_kwDODunzps5Tcgq4 | 5,969 | Add `encoding` and `errors` params to JSON loader | {

"avatar_url": "https://avatars.githubusercontent.com/u/47462742?v=4",

"events_url": "https://api.github.com/users/mariosasko/events{/privacy}",

"followers_url": "https://api.github.com/users/mariosasko/followers",

"following_url": "https://api.github.com/users/mariosasko/following{/other_user}",

"gists_url": "https://api.github.com/users/mariosasko/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/mariosasko",

"id": 47462742,

"login": "mariosasko",

"node_id": "MDQ6VXNlcjQ3NDYyNzQy",

"organizations_url": "https://api.github.com/users/mariosasko/orgs",

"received_events_url": "https://api.github.com/users/mariosasko/received_events",

"repos_url": "https://api.github.com/users/mariosasko/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/mariosasko/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/mariosasko/subscriptions",

"type": "User",

"url": "https://api.github.com/users/mariosasko"

} | [] | closed | false | null | [] | null | [

"_The documentation is not available anymore as the PR was closed or merged._",

"<details>\n<summary>Show benchmarks</summary>\n\nPyArrow==8.0.0\n\n<details>\n<summary>Show updated benchmarks!</summary>\n\n### Benchmark: benchmark_array_xd.json\n\n| metric | read_batch_formatted_as_numpy after write_array2d | read_batch_formatted_as_numpy after write_flattened_sequence | read_batch_formatted_as_numpy after write_nested_sequence | read_batch_unformated after write_array2d | read_batch_unformated after write_flattened_sequence | read_batch_unformated after write_nested_sequence | read_col_formatted_as_numpy after write_array2d | read_col_formatted_as_numpy after write_flattened_sequence | read_col_formatted_as_numpy after write_nested_sequence | read_col_unformated after write_array2d | read_col_unformated after write_flattened_sequence | read_col_unformated after write_nested_sequence | read_formatted_as_numpy after write_array2d | read_formatted_as_numpy after write_flattened_sequence | read_formatted_as_numpy after write_nested_sequence | read_unformated after write_array2d | read_unformated after write_flattened_sequence | read_unformated after write_nested_sequence | write_array2d | write_flattened_sequence | write_nested_sequence |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.006770 / 0.011353 (-0.004583) | 0.004143 / 0.011008 (-0.006865) | 0.098928 / 0.038508 (0.060420) | 0.044893 / 0.023109 (0.021783) | 0.302630 / 0.275898 (0.026732) | 0.368173 / 0.323480 (0.044693) | 0.005631 / 0.007986 (-0.002354) | 0.003397 / 0.004328 (-0.000931) | 0.075748 / 0.004250 (0.071497) | 0.062582 / 0.037052 (0.025530) | 0.329586 / 0.258489 (0.071097) | 0.362625 / 0.293841 (0.068784) | 0.033250 / 0.128546 (-0.095296) | 0.008880 / 0.075646 (-0.066766) | 0.329683 / 0.419271 (-0.089588) | 0.054426 / 0.043533 (0.010893) | 0.297940 / 0.255139 (0.042801) | 0.319796 / 0.283200 (0.036597) | 0.023296 / 0.141683 (-0.118387) | 1.462142 / 1.452155 (0.009987) | 1.495796 / 1.492716 (0.003079) |\n\n### Benchmark: benchmark_getitem\\_100B.json\n\n| metric | get_batch_of\\_1024\\_random_rows | get_batch_of\\_1024\\_rows | get_first_row | get_last_row |\n|--------|---|---|---|---|\n| new / old (diff) | 0.201771 / 0.018006 (0.183765) | 0.454514 / 0.000490 (0.454024) | 0.003333 / 0.000200 (0.003133) | 0.000081 / 0.000054 (0.000027) |\n\n### Benchmark: benchmark_indices_mapping.json\n\n| metric | select | shard | shuffle | sort | train_test_split |\n|--------|---|---|---|---|---|\n| new / old (diff) | 0.028084 / 0.037411 (-0.009327) | 0.109452 / 0.014526 (0.094926) | 0.119200 / 0.176557 (-0.057357) | 0.180302 / 0.737135 (-0.556834) | 0.125653 / 0.296338 (-0.170686) |\n\n### Benchmark: benchmark_iterating.json\n\n| metric | read 5000 | read 50000 | read_batch 50000 10 | read_batch 50000 100 | read_batch 50000 1000 | read_formatted numpy 5000 | read_formatted pandas 5000 | read_formatted tensorflow 5000 | read_formatted torch 5000 | read_formatted_batch numpy 5000 10 | read_formatted_batch numpy 5000 1000 | shuffled read 5000 | shuffled read 50000 | shuffled read_batch 50000 10 | shuffled read_batch 50000 100 | shuffled read_batch 50000 1000 | shuffled read_formatted numpy 5000 | shuffled read_formatted_batch numpy 5000 10 | shuffled read_formatted_batch numpy 5000 1000 |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.409819 / 0.215209 (0.194610) | 4.055117 / 2.077655 (1.977462) | 1.855279 / 1.504120 (0.351159) | 1.655281 / 1.541195 (0.114086) | 1.687938 / 1.468490 (0.219448) | 0.528352 / 4.584777 (-4.056425) | 3.750250 / 3.745712 (0.004538) | 3.386741 / 5.269862 (-1.883121) | 1.572036 / 4.565676 (-2.993640) | 0.065125 / 0.424275 (-0.359150) | 0.011259 / 0.007607 (0.003652) | 0.513449 / 0.226044 (0.287405) | 5.139421 / 2.268929 (2.870492) | 2.316973 / 55.444624 (-53.127651) | 1.984109 / 6.876477 (-4.892368) | 2.127915 / 2.142072 (-0.014158) | 0.653238 / 4.805227 (-4.151989) | 0.142686 / 6.500664 (-6.357978) | 0.063666 / 0.075469 (-0.011803) |\n\n### Benchmark: benchmark_map_filter.json\n\n| metric | filter | map fast-tokenizer batched | map identity | map identity batched | map no-op batched | map no-op batched numpy | map no-op batched pandas | map no-op batched pytorch | map no-op batched tensorflow |\n|--------|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 1.185174 / 1.841788 (-0.656614) | 14.790282 / 8.074308 (6.715974) | 13.089222 / 10.191392 (2.897830) | 0.146055 / 0.680424 (-0.534369) | 0.017835 / 0.534201 (-0.516366) | 0.399598 / 0.579283 (-0.179685) | 0.425296 / 0.434364 (-0.009068) | 0.478552 / 0.540337 (-0.061786) | 0.579702 / 1.386936 (-0.807234) |\n\n</details>\nPyArrow==latest\n\n<details>\n<summary>Show updated benchmarks!</summary>\n\n### Benchmark: benchmark_array_xd.json\n\n| metric | read_batch_formatted_as_numpy after write_array2d | read_batch_formatted_as_numpy after write_flattened_sequence | read_batch_formatted_as_numpy after write_nested_sequence | read_batch_unformated after write_array2d | read_batch_unformated after write_flattened_sequence | read_batch_unformated after write_nested_sequence | read_col_formatted_as_numpy after write_array2d | read_col_formatted_as_numpy after write_flattened_sequence | read_col_formatted_as_numpy after write_nested_sequence | read_col_unformated after write_array2d | read_col_unformated after write_flattened_sequence | read_col_unformated after write_nested_sequence | read_formatted_as_numpy after write_array2d | read_formatted_as_numpy after write_flattened_sequence | read_formatted_as_numpy after write_nested_sequence | read_unformated after write_array2d | read_unformated after write_flattened_sequence | read_unformated after write_nested_sequence | write_array2d | write_flattened_sequence | write_nested_sequence |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.006750 / 0.011353 (-0.004603) | 0.004156 / 0.011008 (-0.006853) | 0.074948 / 0.038508 (0.036440) | 0.043368 / 0.023109 (0.020259) | 0.355389 / 0.275898 (0.079491) | 0.429167 / 0.323480 (0.105687) | 0.003911 / 0.007986 (-0.004075) | 0.004340 / 0.004328 (0.000012) | 0.075940 / 0.004250 (0.071689) | 0.054293 / 0.037052 (0.017241) | 0.400317 / 0.258489 (0.141827) | 0.432001 / 0.293841 (0.138160) | 0.032340 / 0.128546 (-0.096206) | 0.008876 / 0.075646 (-0.066770) | 0.082284 / 0.419271 (-0.336987) | 0.050819 / 0.043533 (0.007286) | 0.351994 / 0.255139 (0.096855) | 0.375917 / 0.283200 (0.092717) | 0.022466 / 0.141683 (-0.119217) | 1.538824 / 1.452155 (0.086669) | 1.563995 / 1.492716 (0.071279) |\n\n### Benchmark: benchmark_getitem\\_100B.json\n\n| metric | get_batch_of\\_1024\\_random_rows | get_batch_of\\_1024\\_rows | get_first_row | get_last_row |\n|--------|---|---|---|---|\n| new / old (diff) | 0.227330 / 0.018006 (0.209323) | 0.446380 / 0.000490 (0.445890) | 0.000408 / 0.000200 (0.000208) | 0.000058 / 0.000054 (0.000003) |\n\n### Benchmark: benchmark_indices_mapping.json\n\n| metric | select | shard | shuffle | sort | train_test_split |\n|--------|---|---|---|---|---|\n| new / old (diff) | 0.028534 / 0.037411 (-0.008878) | 0.113467 / 0.014526 (0.098941) | 0.123590 / 0.176557 (-0.052966) | 0.174309 / 0.737135 (-0.562827) | 0.130631 / 0.296338 (-0.165707) |\n\n### Benchmark: benchmark_iterating.json\n\n| metric | read 5000 | read 50000 | read_batch 50000 10 | read_batch 50000 100 | read_batch 50000 1000 | read_formatted numpy 5000 | read_formatted pandas 5000 | read_formatted tensorflow 5000 | read_formatted torch 5000 | read_formatted_batch numpy 5000 10 | read_formatted_batch numpy 5000 1000 | shuffled read 5000 | shuffled read 50000 | shuffled read_batch 50000 10 | shuffled read_batch 50000 100 | shuffled read_batch 50000 1000 | shuffled read_formatted numpy 5000 | shuffled read_formatted_batch numpy 5000 10 | shuffled read_formatted_batch numpy 5000 1000 |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.441020 / 0.215209 (0.225811) | 4.386564 / 2.077655 (2.308909) | 2.100704 / 1.504120 (0.596584) | 1.901484 / 1.541195 (0.360289) | 1.963494 / 1.468490 (0.495004) | 0.536838 / 4.584777 (-4.047939) | 3.739071 / 3.745712 (-0.006642) | 3.278981 / 5.269862 (-1.990881) | 1.515476 / 4.565676 (-3.050201) | 0.066388 / 0.424275 (-0.357887) | 0.011857 / 0.007607 (0.004250) | 0.545507 / 0.226044 (0.319463) | 5.441479 / 2.268929 (3.172550) | 2.602144 / 55.444624 (-52.842480) | 2.235583 / 6.876477 (-4.640894) | 2.293458 / 2.142072 (0.151385) | 0.658535 / 4.805227 (-4.146692) | 0.141327 / 6.500664 (-6.359337) | 0.063726 / 0.075469 (-0.011743) |\n\n### Benchmark: benchmark_map_filter.json\n\n| metric | filter | map fast-tokenizer batched | map identity | map identity batched | map no-op batched | map no-op batched numpy | map no-op batched pandas | map no-op batched pytorch | map no-op batched tensorflow |\n|--------|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 1.247819 / 1.841788 (-0.593968) | 15.234524 / 8.074308 (7.160216) | 14.592700 / 10.191392 (4.401308) | 0.141952 / 0.680424 (-0.538472) | 0.017747 / 0.534201 (-0.516454) | 0.396819 / 0.579283 (-0.182465) | 0.415902 / 0.434364 (-0.018462) | 0.464619 / 0.540337 (-0.075718) | 0.560866 / 1.386936 (-0.826070) |\n\n</details>\n</details>\n\n\n",

"<details>\n<summary>Show benchmarks</summary>\n\nPyArrow==8.0.0\n\n<details>\n<summary>Show updated benchmarks!</summary>\n\n### Benchmark: benchmark_array_xd.json\n\n| metric | read_batch_formatted_as_numpy after write_array2d | read_batch_formatted_as_numpy after write_flattened_sequence | read_batch_formatted_as_numpy after write_nested_sequence | read_batch_unformated after write_array2d | read_batch_unformated after write_flattened_sequence | read_batch_unformated after write_nested_sequence | read_col_formatted_as_numpy after write_array2d | read_col_formatted_as_numpy after write_flattened_sequence | read_col_formatted_as_numpy after write_nested_sequence | read_col_unformated after write_array2d | read_col_unformated after write_flattened_sequence | read_col_unformated after write_nested_sequence | read_formatted_as_numpy after write_array2d | read_formatted_as_numpy after write_flattened_sequence | read_formatted_as_numpy after write_nested_sequence | read_unformated after write_array2d | read_unformated after write_flattened_sequence | read_unformated after write_nested_sequence | write_array2d | write_flattened_sequence | write_nested_sequence |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.008278 / 0.011353 (-0.003075) | 0.005044 / 0.011008 (-0.005964) | 0.123382 / 0.038508 (0.084874) | 0.054039 / 0.023109 (0.030929) | 0.382338 / 0.275898 (0.106440) | 0.453287 / 0.323480 (0.129807) | 0.006342 / 0.007986 (-0.001644) | 0.003930 / 0.004328 (-0.000398) | 0.094039 / 0.004250 (0.089789) | 0.076525 / 0.037052 (0.039472) | 0.394066 / 0.258489 (0.135577) | 0.445600 / 0.293841 (0.151759) | 0.039348 / 0.128546 (-0.089199) | 0.010485 / 0.075646 (-0.065161) | 0.433730 / 0.419271 (0.014459) | 0.082671 / 0.043533 (0.039138) | 0.375250 / 0.255139 (0.120111) | 0.416269 / 0.283200 (0.133070) | 0.038397 / 0.141683 (-0.103286) | 1.864834 / 1.452155 (0.412680) | 2.010453 / 1.492716 (0.517737) |\n\n### Benchmark: benchmark_getitem\\_100B.json\n\n| metric | get_batch_of\\_1024\\_random_rows | get_batch_of\\_1024\\_rows | get_first_row | get_last_row |\n|--------|---|---|---|---|\n| new / old (diff) | 0.240008 / 0.018006 (0.222002) | 0.470975 / 0.000490 (0.470485) | 0.004001 / 0.000200 (0.003801) | 0.000097 / 0.000054 (0.000042) |\n\n### Benchmark: benchmark_indices_mapping.json\n\n| metric | select | shard | shuffle | sort | train_test_split |\n|--------|---|---|---|---|---|\n| new / old (diff) | 0.031107 / 0.037411 (-0.006304) | 0.129371 / 0.014526 (0.114846) | 0.141559 / 0.176557 (-0.034997) | 0.205571 / 0.737135 (-0.531564) | 0.144611 / 0.296338 (-0.151728) |\n\n### Benchmark: benchmark_iterating.json\n\n| metric | read 5000 | read 50000 | read_batch 50000 10 | read_batch 50000 100 | read_batch 50000 1000 | read_formatted numpy 5000 | read_formatted pandas 5000 | read_formatted tensorflow 5000 | read_formatted torch 5000 | read_formatted_batch numpy 5000 10 | read_formatted_batch numpy 5000 1000 | shuffled read 5000 | shuffled read 50000 | shuffled read_batch 50000 10 | shuffled read_batch 50000 100 | shuffled read_batch 50000 1000 | shuffled read_formatted numpy 5000 | shuffled read_formatted_batch numpy 5000 10 | shuffled read_formatted_batch numpy 5000 1000 |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.506972 / 0.215209 (0.291763) | 5.055951 / 2.077655 (2.978296) | 2.397438 / 1.504120 (0.893318) | 2.170435 / 1.541195 (0.629240) | 2.240296 / 1.468490 (0.771806) | 0.641559 / 4.584777 (-3.943218) | 4.644772 / 3.745712 (0.899060) | 4.064200 / 5.269862 (-1.205662) | 1.946991 / 4.565676 (-2.618685) | 0.086413 / 0.424275 (-0.337862) | 0.015082 / 0.007607 (0.007475) | 0.670413 / 0.226044 (0.444369) | 6.331346 / 2.268929 (4.062418) | 2.965813 / 55.444624 (-52.478812) | 2.547952 / 6.876477 (-4.328524) | 2.718390 / 2.142072 (0.576318) | 0.796657 / 4.805227 (-4.008571) | 0.173229 / 6.500664 (-6.327435) | 0.079606 / 0.075469 (0.004137) |\n\n### Benchmark: benchmark_map_filter.json\n\n| metric | filter | map fast-tokenizer batched | map identity | map identity batched | map no-op batched | map no-op batched numpy | map no-op batched pandas | map no-op batched pytorch | map no-op batched tensorflow |\n|--------|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 1.568761 / 1.841788 (-0.273026) | 18.485432 / 8.074308 (10.411124) | 15.758513 / 10.191392 (5.567121) | 0.170427 / 0.680424 (-0.509997) | 0.021421 / 0.534201 (-0.512780) | 0.518623 / 0.579283 (-0.060660) | 0.525887 / 0.434364 (0.091523) | 0.640331 / 0.540337 (0.099993) | 0.766748 / 1.386936 (-0.620188) |\n\n</details>\nPyArrow==latest\n\n<details>\n<summary>Show updated benchmarks!</summary>\n\n### Benchmark: benchmark_array_xd.json\n\n| metric | read_batch_formatted_as_numpy after write_array2d | read_batch_formatted_as_numpy after write_flattened_sequence | read_batch_formatted_as_numpy after write_nested_sequence | read_batch_unformated after write_array2d | read_batch_unformated after write_flattened_sequence | read_batch_unformated after write_nested_sequence | read_col_formatted_as_numpy after write_array2d | read_col_formatted_as_numpy after write_flattened_sequence | read_col_formatted_as_numpy after write_nested_sequence | read_col_unformated after write_array2d | read_col_unformated after write_flattened_sequence | read_col_unformated after write_nested_sequence | read_formatted_as_numpy after write_array2d | read_formatted_as_numpy after write_flattened_sequence | read_formatted_as_numpy after write_nested_sequence | read_unformated after write_array2d | read_unformated after write_flattened_sequence | read_unformated after write_nested_sequence | write_array2d | write_flattened_sequence | write_nested_sequence |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.007680 / 0.011353 (-0.003673) | 0.005289 / 0.011008 (-0.005719) | 0.093773 / 0.038508 (0.055265) | 0.054997 / 0.023109 (0.031888) | 0.456277 / 0.275898 (0.180379) | 0.500642 / 0.323480 (0.177162) | 0.005935 / 0.007986 (-0.002050) | 0.004375 / 0.004328 (0.000047) | 0.094131 / 0.004250 (0.089881) | 0.063399 / 0.037052 (0.026347) | 0.470546 / 0.258489 (0.212057) | 0.504989 / 0.293841 (0.211148) | 0.038541 / 0.128546 (-0.090006) | 0.010403 / 0.075646 (-0.065244) | 0.102469 / 0.419271 (-0.316802) | 0.063105 / 0.043533 (0.019572) | 0.466005 / 0.255139 (0.210866) | 0.458677 / 0.283200 (0.175477) | 0.028407 / 0.141683 (-0.113276) | 1.893829 / 1.452155 (0.441675) | 1.917954 / 1.492716 (0.425238) |\n\n### Benchmark: benchmark_getitem\\_100B.json\n\n| metric | get_batch_of\\_1024\\_random_rows | get_batch_of\\_1024\\_rows | get_first_row | get_last_row |\n|--------|---|---|---|---|\n| new / old (diff) | 0.272760 / 0.018006 (0.254754) | 0.476159 / 0.000490 (0.475669) | 0.008467 / 0.000200 (0.008267) | 0.000146 / 0.000054 (0.000091) |\n\n### Benchmark: benchmark_indices_mapping.json\n\n| metric | select | shard | shuffle | sort | train_test_split |\n|--------|---|---|---|---|---|\n| new / old (diff) | 0.035755 / 0.037411 (-0.001656) | 0.145038 / 0.014526 (0.130512) | 0.148322 / 0.176557 (-0.028235) | 0.210193 / 0.737135 (-0.526943) | 0.156547 / 0.296338 (-0.139792) |\n\n### Benchmark: benchmark_iterating.json\n\n| metric | read 5000 | read 50000 | read_batch 50000 10 | read_batch 50000 100 | read_batch 50000 1000 | read_formatted numpy 5000 | read_formatted pandas 5000 | read_formatted tensorflow 5000 | read_formatted torch 5000 | read_formatted_batch numpy 5000 10 | read_formatted_batch numpy 5000 1000 | shuffled read 5000 | shuffled read 50000 | shuffled read_batch 50000 10 | shuffled read_batch 50000 100 | shuffled read_batch 50000 1000 | shuffled read_formatted numpy 5000 | shuffled read_formatted_batch numpy 5000 10 | shuffled read_formatted_batch numpy 5000 1000 |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.541204 / 0.215209 (0.325995) | 5.382746 / 2.077655 (3.305091) | 2.704229 / 1.504120 (1.200109) | 2.468422 / 1.541195 (0.927227) | 2.522672 / 1.468490 (1.054182) | 0.644899 / 4.584777 (-3.939878) | 4.654401 / 3.745712 (0.908689) | 2.159223 / 5.269862 (-3.110638) | 1.280098 / 4.565676 (-3.285578) | 0.080053 / 0.424275 (-0.344222) | 0.014383 / 0.007607 (0.006776) | 0.662770 / 0.226044 (0.436725) | 6.617651 / 2.268929 (4.348722) | 3.234347 / 55.444624 (-52.210277) | 2.861417 / 6.876477 (-4.015059) | 2.888928 / 2.142072 (0.746856) | 0.792854 / 4.805227 (-4.012374) | 0.172553 / 6.500664 (-6.328111) | 0.078402 / 0.075469 (0.002933) |\n\n### Benchmark: benchmark_map_filter.json\n\n| metric | filter | map fast-tokenizer batched | map identity | map identity batched | map no-op batched | map no-op batched numpy | map no-op batched pandas | map no-op batched pytorch | map no-op batched tensorflow |\n|--------|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 1.565351 / 1.841788 (-0.276436) | 18.681916 / 8.074308 (10.607608) | 17.264473 / 10.191392 (7.073081) | 0.168461 / 0.680424 (-0.511963) | 0.021353 / 0.534201 (-0.512848) | 0.517843 / 0.579283 (-0.061440) | 0.519907 / 0.434364 (0.085543) | 0.623687 / 0.540337 (0.083350) | 0.761796 / 1.386936 (-0.625140) |\n\n</details>\n</details>\n\n\n",

"<details>\n<summary>Show benchmarks</summary>\n\nPyArrow==8.0.0\n\n<details>\n<summary>Show updated benchmarks!</summary>\n\n### Benchmark: benchmark_array_xd.json\n\n| metric | read_batch_formatted_as_numpy after write_array2d | read_batch_formatted_as_numpy after write_flattened_sequence | read_batch_formatted_as_numpy after write_nested_sequence | read_batch_unformated after write_array2d | read_batch_unformated after write_flattened_sequence | read_batch_unformated after write_nested_sequence | read_col_formatted_as_numpy after write_array2d | read_col_formatted_as_numpy after write_flattened_sequence | read_col_formatted_as_numpy after write_nested_sequence | read_col_unformated after write_array2d | read_col_unformated after write_flattened_sequence | read_col_unformated after write_nested_sequence | read_formatted_as_numpy after write_array2d | read_formatted_as_numpy after write_flattened_sequence | read_formatted_as_numpy after write_nested_sequence | read_unformated after write_array2d | read_unformated after write_flattened_sequence | read_unformated after write_nested_sequence | write_array2d | write_flattened_sequence | write_nested_sequence |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.006750 / 0.011353 (-0.004603) | 0.004268 / 0.011008 (-0.006741) | 0.098644 / 0.038508 (0.060136) | 0.044643 / 0.023109 (0.021534) | 0.309420 / 0.275898 (0.033522) | 0.379294 / 0.323480 (0.055815) | 0.005729 / 0.007986 (-0.002256) | 0.003615 / 0.004328 (-0.000714) | 0.076086 / 0.004250 (0.071835) | 0.068994 / 0.037052 (0.031942) | 0.325653 / 0.258489 (0.067164) | 0.375187 / 0.293841 (0.081347) | 0.032546 / 0.128546 (-0.096000) | 0.009089 / 0.075646 (-0.066557) | 0.329905 / 0.419271 (-0.089366) | 0.066832 / 0.043533 (0.023300) | 0.299247 / 0.255139 (0.044108) | 0.323460 / 0.283200 (0.040260) | 0.034226 / 0.141683 (-0.107457) | 1.475659 / 1.452155 (0.023505) | 1.556234 / 1.492716 (0.063518) |\n\n### Benchmark: benchmark_getitem\\_100B.json\n\n| metric | get_batch_of\\_1024\\_random_rows | get_batch_of\\_1024\\_rows | get_first_row | get_last_row |\n|--------|---|---|---|---|\n| new / old (diff) | 0.292305 / 0.018006 (0.274299) | 0.542584 / 0.000490 (0.542094) | 0.003047 / 0.000200 (0.002847) | 0.000082 / 0.000054 (0.000027) |\n\n### Benchmark: benchmark_indices_mapping.json\n\n| metric | select | shard | shuffle | sort | train_test_split |\n|--------|---|---|---|---|---|\n| new / old (diff) | 0.030096 / 0.037411 (-0.007315) | 0.112341 / 0.014526 (0.097815) | 0.124965 / 0.176557 (-0.051591) | 0.183159 / 0.737135 (-0.553976) | 0.131885 / 0.296338 (-0.164453) |\n\n### Benchmark: benchmark_iterating.json\n\n| metric | read 5000 | read 50000 | read_batch 50000 10 | read_batch 50000 100 | read_batch 50000 1000 | read_formatted numpy 5000 | read_formatted pandas 5000 | read_formatted tensorflow 5000 | read_formatted torch 5000 | read_formatted_batch numpy 5000 10 | read_formatted_batch numpy 5000 1000 | shuffled read 5000 | shuffled read 50000 | shuffled read_batch 50000 10 | shuffled read_batch 50000 100 | shuffled read_batch 50000 1000 | shuffled read_formatted numpy 5000 | shuffled read_formatted_batch numpy 5000 10 | shuffled read_formatted_batch numpy 5000 1000 |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.426437 / 0.215209 (0.211228) | 4.260984 / 2.077655 (2.183330) | 2.078358 / 1.504120 (0.574238) | 1.877644 / 1.541195 (0.336449) | 2.044036 / 1.468490 (0.575546) | 0.532980 / 4.584777 (-4.051797) | 3.749573 / 3.745712 (0.003860) | 1.944155 / 5.269862 (-3.325706) | 1.090307 / 4.565676 (-3.475370) | 0.065445 / 0.424275 (-0.358830) | 0.011237 / 0.007607 (0.003630) | 0.521448 / 0.226044 (0.295403) | 5.213118 / 2.268929 (2.944189) | 2.507829 / 55.444624 (-52.936795) | 2.177179 / 6.876477 (-4.699297) | 2.351161 / 2.142072 (0.209088) | 0.656775 / 4.805227 (-4.148452) | 0.141207 / 6.500664 (-6.359457) | 0.063286 / 0.075469 (-0.012183) |\n\n### Benchmark: benchmark_map_filter.json\n\n| metric | filter | map fast-tokenizer batched | map identity | map identity batched | map no-op batched | map no-op batched numpy | map no-op batched pandas | map no-op batched pytorch | map no-op batched tensorflow |\n|--------|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 1.190281 / 1.841788 (-0.651506) | 15.327424 / 8.074308 (7.253116) | 13.300695 / 10.191392 (3.109303) | 0.190484 / 0.680424 (-0.489939) | 0.017984 / 0.534201 (-0.516217) | 0.405714 / 0.579283 (-0.173569) | 0.435915 / 0.434364 (0.001551) | 0.494083 / 0.540337 (-0.046254) | 0.600616 / 1.386936 (-0.786320) |\n\n</details>\nPyArrow==latest\n\n<details>\n<summary>Show updated benchmarks!</summary>\n\n### Benchmark: benchmark_array_xd.json\n\n| metric | read_batch_formatted_as_numpy after write_array2d | read_batch_formatted_as_numpy after write_flattened_sequence | read_batch_formatted_as_numpy after write_nested_sequence | read_batch_unformated after write_array2d | read_batch_unformated after write_flattened_sequence | read_batch_unformated after write_nested_sequence | read_col_formatted_as_numpy after write_array2d | read_col_formatted_as_numpy after write_flattened_sequence | read_col_formatted_as_numpy after write_nested_sequence | read_col_unformated after write_array2d | read_col_unformated after write_flattened_sequence | read_col_unformated after write_nested_sequence | read_formatted_as_numpy after write_array2d | read_formatted_as_numpy after write_flattened_sequence | read_formatted_as_numpy after write_nested_sequence | read_unformated after write_array2d | read_unformated after write_flattened_sequence | read_unformated after write_nested_sequence | write_array2d | write_flattened_sequence | write_nested_sequence |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.006740 / 0.011353 (-0.004613) | 0.004289 / 0.011008 (-0.006719) | 0.076532 / 0.038508 (0.038024) | 0.043305 / 0.023109 (0.020196) | 0.356111 / 0.275898 (0.080213) | 0.434121 / 0.323480 (0.110641) | 0.005599 / 0.007986 (-0.002387) | 0.003461 / 0.004328 (-0.000868) | 0.077097 / 0.004250 (0.072847) | 0.055369 / 0.037052 (0.018317) | 0.367093 / 0.258489 (0.108604) | 0.418801 / 0.293841 (0.124960) | 0.032057 / 0.128546 (-0.096489) | 0.009048 / 0.075646 (-0.066599) | 0.082897 / 0.419271 (-0.336374) | 0.050287 / 0.043533 (0.006754) | 0.352060 / 0.255139 (0.096921) | 0.376278 / 0.283200 (0.093078) | 0.023924 / 0.141683 (-0.117759) | 1.522780 / 1.452155 (0.070626) | 1.578938 / 1.492716 (0.086222) |\n\n### Benchmark: benchmark_getitem\\_100B.json\n\n| metric | get_batch_of\\_1024\\_random_rows | get_batch_of\\_1024\\_rows | get_first_row | get_last_row |\n|--------|---|---|---|---|\n| new / old (diff) | 0.287317 / 0.018006 (0.269311) | 0.508490 / 0.000490 (0.508000) | 0.000431 / 0.000200 (0.000231) | 0.000056 / 0.000054 (0.000002) |\n\n### Benchmark: benchmark_indices_mapping.json\n\n| metric | select | shard | shuffle | sort | train_test_split |\n|--------|---|---|---|---|---|\n| new / old (diff) | 0.031139 / 0.037411 (-0.006272) | 0.113927 / 0.014526 (0.099401) | 0.128147 / 0.176557 (-0.048409) | 0.179712 / 0.737135 (-0.557424) | 0.134364 / 0.296338 (-0.161975) |\n\n### Benchmark: benchmark_iterating.json\n\n| metric | read 5000 | read 50000 | read_batch 50000 10 | read_batch 50000 100 | read_batch 50000 1000 | read_formatted numpy 5000 | read_formatted pandas 5000 | read_formatted tensorflow 5000 | read_formatted torch 5000 | read_formatted_batch numpy 5000 10 | read_formatted_batch numpy 5000 1000 | shuffled read 5000 | shuffled read 50000 | shuffled read_batch 50000 10 | shuffled read_batch 50000 100 | shuffled read_batch 50000 1000 | shuffled read_formatted numpy 5000 | shuffled read_formatted_batch numpy 5000 10 | shuffled read_formatted_batch numpy 5000 1000 |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.452834 / 0.215209 (0.237625) | 4.507944 / 2.077655 (2.430289) | 2.287758 / 1.504120 (0.783638) | 2.091145 / 1.541195 (0.549951) | 2.196228 / 1.468490 (0.727738) | 0.539306 / 4.584777 (-4.045471) | 3.838941 / 3.745712 (0.093228) | 1.908801 / 5.269862 (-3.361060) | 1.139235 / 4.565676 (-3.426442) | 0.066677 / 0.424275 (-0.357599) | 0.011422 / 0.007607 (0.003815) | 0.562966 / 0.226044 (0.336921) | 5.633712 / 2.268929 (3.364784) | 2.788622 / 55.444624 (-52.656002) | 2.438465 / 6.876477 (-4.438012) | 2.523479 / 2.142072 (0.381407) | 0.668730 / 4.805227 (-4.136498) | 0.143977 / 6.500664 (-6.356687) | 0.064661 / 0.075469 (-0.010808) |\n\n### Benchmark: benchmark_map_filter.json\n\n| metric | filter | map fast-tokenizer batched | map identity | map identity batched | map no-op batched | map no-op batched numpy | map no-op batched pandas | map no-op batched pytorch | map no-op batched tensorflow |\n|--------|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 1.291708 / 1.841788 (-0.550080) | 15.573316 / 8.074308 (7.499008) | 14.435099 / 10.191392 (4.243707) | 0.147745 / 0.680424 (-0.532679) | 0.017602 / 0.534201 (-0.516599) | 0.401560 / 0.579283 (-0.177723) | 0.429861 / 0.434364 (-0.004502) | 0.469800 / 0.540337 (-0.070538) | 0.567515 / 1.386936 (-0.819421) |\n\n</details>\n</details>\n\n\n"

] | "2023-06-20T14:28:35Z" | "2023-06-21T13:39:50Z" | "2023-06-21T13:32:22Z" | CONTRIBUTOR | null | 0 | {

"diff_url": "https://github.com/huggingface/datasets/pull/5969.diff",

"html_url": "https://github.com/huggingface/datasets/pull/5969",

"merged_at": "2023-06-21T13:32:22Z",

"patch_url": "https://github.com/huggingface/datasets/pull/5969.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/5969"

} | "Requested" in https://discuss.huggingface.co/t/utf-16-for-datasets/43828/3.

`pd.read_json` also has these parameters, so it makes sense to be consistent. | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/5969/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/5969/timeline | null | null | true |

https://api.github.com/repos/huggingface/datasets/issues/3604 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3604/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3604/comments | https://api.github.com/repos/huggingface/datasets/issues/3604/events | https://github.com/huggingface/datasets/issues/3604 | 1,108,477,316 | I_kwDODunzps5CEgWE | 3,604 | Dataset Viewer not showing Previews for Private Datasets | {

"avatar_url": "https://avatars.githubusercontent.com/u/1778297?v=4",

"events_url": "https://api.github.com/users/abidlabs/events{/privacy}",

"followers_url": "https://api.github.com/users/abidlabs/followers",

"following_url": "https://api.github.com/users/abidlabs/following{/other_user}",

"gists_url": "https://api.github.com/users/abidlabs/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/abidlabs",

"id": 1778297,

"login": "abidlabs",

"node_id": "MDQ6VXNlcjE3NzgyOTc=",

"organizations_url": "https://api.github.com/users/abidlabs/orgs",

"received_events_url": "https://api.github.com/users/abidlabs/received_events",

"repos_url": "https://api.github.com/users/abidlabs/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/abidlabs/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/abidlabs/subscriptions",

"type": "User",

"url": "https://api.github.com/users/abidlabs"

} | [

{

"color": "a2eeef",

"default": true,

"description": "New feature or request",

"id": 1935892871,

"name": "enhancement",

"node_id": "MDU6TGFiZWwxOTM1ODkyODcx",

"url": "https://api.github.com/repos/huggingface/datasets/labels/enhancement"

},

{

"color": "E5583E",

"default": false,

"description": "Related to the dataset viewer on huggingface.co",

"id": 3470211881,

"name": "dataset-viewer",

"node_id": "LA_kwDODunzps7O1zsp",

"url": "https://api.github.com/repos/huggingface/datasets/labels/dataset-viewer"

}

] | closed | false | {

"avatar_url": "https://avatars.githubusercontent.com/u/1676121?v=4",

"events_url": "https://api.github.com/users/severo/events{/privacy}",

"followers_url": "https://api.github.com/users/severo/followers",

"following_url": "https://api.github.com/users/severo/following{/other_user}",

"gists_url": "https://api.github.com/users/severo/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/severo",

"id": 1676121,

"login": "severo",

"node_id": "MDQ6VXNlcjE2NzYxMjE=",

"organizations_url": "https://api.github.com/users/severo/orgs",

"received_events_url": "https://api.github.com/users/severo/received_events",

"repos_url": "https://api.github.com/users/severo/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/severo/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/severo/subscriptions",

"type": "User",

"url": "https://api.github.com/users/severo"

} | [

{

"avatar_url": "https://avatars.githubusercontent.com/u/1676121?v=4",

"events_url": "https://api.github.com/users/severo/events{/privacy}",

"followers_url": "https://api.github.com/users/severo/followers",

"following_url": "https://api.github.com/users/severo/following{/other_user}",

"gists_url": "https://api.github.com/users/severo/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/severo",

"id": 1676121,

"login": "severo",

"node_id": "MDQ6VXNlcjE2NzYxMjE=",

"organizations_url": "https://api.github.com/users/severo/orgs",

"received_events_url": "https://api.github.com/users/severo/received_events",

"repos_url": "https://api.github.com/users/severo/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/severo/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/severo/subscriptions",

"type": "User",

"url": "https://api.github.com/users/severo"

}

] | null | [

"Sure, it's on the roadmap.",

"Closing in favor of https://github.com/huggingface/datasets-server/issues/39."

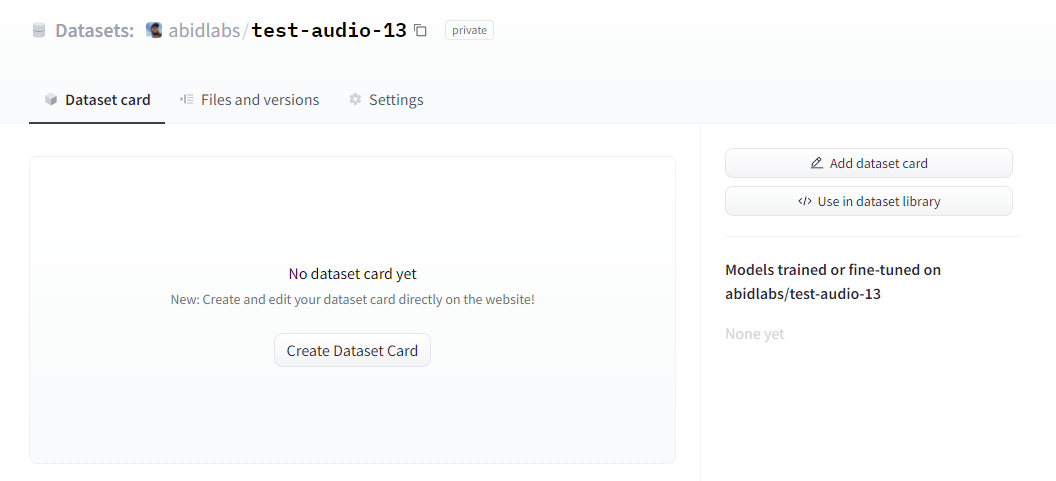

] | "2022-01-19T19:29:26Z" | "2022-09-26T08:04:43Z" | "2022-09-26T08:04:43Z" | MEMBER | null | null | null | ## Dataset viewer issue for 'abidlabs/test-audio-13'

It seems that the dataset viewer does not show previews for `private` datasets, even for the user who's private dataset it is. See [1] for example. If I change the visibility to public, then it does show, but it would be useful to have the viewer even for private datasets.

**Link:**

[1] https://huggingface.co/datasets/abidlabs/test-audio-13

**Am I the one who added this dataset?**

Yes

| {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/3604/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/3604/timeline | null | completed | false |

https://api.github.com/repos/huggingface/datasets/issues/6117 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/6117/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/6117/comments | https://api.github.com/repos/huggingface/datasets/issues/6117/events | https://github.com/huggingface/datasets/pull/6117 | 1,835,213,848 | PR_kwDODunzps5XHktw | 6,117 | Set dev version | {

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova"

} | [] | closed | false | null | [] | null | [

"The docs for this PR live [here](https://moon-ci-docs.huggingface.co/docs/datasets/pr_6117). All of your documentation changes will be reflected on that endpoint.",

"<details>\n<summary>Show benchmarks</summary>\n\nPyArrow==8.0.0\n\n<details>\n<summary>Show updated benchmarks!</summary>\n\n### Benchmark: benchmark_array_xd.json\n\n| metric | read_batch_formatted_as_numpy after write_array2d | read_batch_formatted_as_numpy after write_flattened_sequence | read_batch_formatted_as_numpy after write_nested_sequence | read_batch_unformated after write_array2d | read_batch_unformated after write_flattened_sequence | read_batch_unformated after write_nested_sequence | read_col_formatted_as_numpy after write_array2d | read_col_formatted_as_numpy after write_flattened_sequence | read_col_formatted_as_numpy after write_nested_sequence | read_col_unformated after write_array2d | read_col_unformated after write_flattened_sequence | read_col_unformated after write_nested_sequence | read_formatted_as_numpy after write_array2d | read_formatted_as_numpy after write_flattened_sequence | read_formatted_as_numpy after write_nested_sequence | read_unformated after write_array2d | read_unformated after write_flattened_sequence | read_unformated after write_nested_sequence | write_array2d | write_flattened_sequence | write_nested_sequence |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.012516 / 0.011353 (0.001163) | 0.004725 / 0.011008 (-0.006283) | 0.112245 / 0.038508 (0.073736) | 0.079146 / 0.023109 (0.056037) | 0.386415 / 0.275898 (0.110517) | 0.420441 / 0.323480 (0.096961) | 0.005682 / 0.007986 (-0.002304) | 0.004169 / 0.004328 (-0.000160) | 0.077847 / 0.004250 (0.073597) | 0.055763 / 0.037052 (0.018711) | 0.385529 / 0.258489 (0.127040) | 0.422711 / 0.293841 (0.128870) | 0.047212 / 0.128546 (-0.081334) | 0.013711 / 0.075646 (-0.061935) | 0.342856 / 0.419271 (-0.076416) | 0.066788 / 0.043533 (0.023255) | 0.380728 / 0.255139 (0.125589) | 0.416241 / 0.283200 (0.133041) | 0.034676 / 0.141683 (-0.107007) | 1.679661 / 1.452155 (0.227506) | 1.838014 / 1.492716 (0.345297) |\n\n### Benchmark: benchmark_getitem\\_100B.json\n\n| metric | get_batch_of\\_1024\\_random_rows | get_batch_of\\_1024\\_rows | get_first_row | get_last_row |\n|--------|---|---|---|---|\n| new / old (diff) | 0.219556 / 0.018006 (0.201550) | 0.524728 / 0.000490 (0.524238) | 0.005045 / 0.000200 (0.004845) | 0.000124 / 0.000054 (0.000069) |\n\n### Benchmark: benchmark_indices_mapping.json\n\n| metric | select | shard | shuffle | sort | train_test_split |\n|--------|---|---|---|---|---|\n| new / old (diff) | 0.025475 / 0.037411 (-0.011936) | 0.085937 / 0.014526 (0.071412) | 0.099245 / 0.176557 (-0.077311) | 0.158995 / 0.737135 (-0.578141) | 0.101504 / 0.296338 (-0.194835) |\n\n### Benchmark: benchmark_iterating.json\n\n| metric | read 5000 | read 50000 | read_batch 50000 10 | read_batch 50000 100 | read_batch 50000 1000 | read_formatted numpy 5000 | read_formatted pandas 5000 | read_formatted tensorflow 5000 | read_formatted torch 5000 | read_formatted_batch numpy 5000 10 | read_formatted_batch numpy 5000 1000 | shuffled read 5000 | shuffled read 50000 | shuffled read_batch 50000 10 | shuffled read_batch 50000 100 | shuffled read_batch 50000 1000 | shuffled read_formatted numpy 5000 | shuffled read_formatted_batch numpy 5000 10 | shuffled read_formatted_batch numpy 5000 1000 |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.582200 / 0.215209 (0.366991) | 5.794340 / 2.077655 (3.716685) | 2.473635 / 1.504120 (0.969515) | 2.168135 / 1.541195 (0.626941) | 2.215886 / 1.468490 (0.747396) | 0.855599 / 4.584777 (-3.729178) | 5.003067 / 3.745712 (1.257354) | 4.503566 / 5.269862 (-0.766295) | 2.912248 / 4.565676 (-1.653428) | 0.103267 / 0.424275 (-0.321008) | 0.012114 / 0.007607 (0.004507) | 0.712240 / 0.226044 (0.486196) | 7.131946 / 2.268929 (4.863017) | 3.280052 / 55.444624 (-52.164573) | 2.583472 / 6.876477 (-4.293004) | 2.820758 / 2.142072 (0.678686) | 1.132097 / 4.805227 (-3.673131) | 0.232191 / 6.500664 (-6.268473) | 0.082966 / 0.075469 (0.007497) |\n\n### Benchmark: benchmark_map_filter.json\n\n| metric | filter | map fast-tokenizer batched | map identity | map identity batched | map no-op batched | map no-op batched numpy | map no-op batched pandas | map no-op batched pytorch | map no-op batched tensorflow |\n|--------|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 1.581125 / 1.841788 (-0.260662) | 22.723878 / 8.074308 (14.649570) | 19.969347 / 10.191392 (9.777955) | 0.234365 / 0.680424 (-0.446059) | 0.030245 / 0.534201 (-0.503956) | 0.470843 / 0.579283 (-0.108440) | 0.558069 / 0.434364 (0.123705) | 0.534878 / 0.540337 (-0.005460) | 0.801025 / 1.386936 (-0.585911) |\n\n</details>\nPyArrow==latest\n\n<details>\n<summary>Show updated benchmarks!</summary>\n\n### Benchmark: benchmark_array_xd.json\n\n| metric | read_batch_formatted_as_numpy after write_array2d | read_batch_formatted_as_numpy after write_flattened_sequence | read_batch_formatted_as_numpy after write_nested_sequence | read_batch_unformated after write_array2d | read_batch_unformated after write_flattened_sequence | read_batch_unformated after write_nested_sequence | read_col_formatted_as_numpy after write_array2d | read_col_formatted_as_numpy after write_flattened_sequence | read_col_formatted_as_numpy after write_nested_sequence | read_col_unformated after write_array2d | read_col_unformated after write_flattened_sequence | read_col_unformated after write_nested_sequence | read_formatted_as_numpy after write_array2d | read_formatted_as_numpy after write_flattened_sequence | read_formatted_as_numpy after write_nested_sequence | read_unformated after write_array2d | read_unformated after write_flattened_sequence | read_unformated after write_nested_sequence | write_array2d | write_flattened_sequence | write_nested_sequence |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.008524 / 0.011353 (-0.002829) | 0.005083 / 0.011008 (-0.005925) | 0.078054 / 0.038508 (0.039546) | 0.082025 / 0.023109 (0.058915) | 0.458027 / 0.275898 (0.182129) | 0.498232 / 0.323480 (0.174752) | 0.005938 / 0.007986 (-0.002048) | 0.003776 / 0.004328 (-0.000553) | 0.080413 / 0.004250 (0.076163) | 0.060485 / 0.037052 (0.023433) | 0.462816 / 0.258489 (0.204327) | 0.513970 / 0.293841 (0.220129) | 0.047574 / 0.128546 (-0.080973) | 0.013424 / 0.075646 (-0.062222) | 0.087707 / 0.419271 (-0.331565) | 0.065007 / 0.043533 (0.021474) | 0.465844 / 0.255139 (0.210705) | 0.498474 / 0.283200 (0.215274) | 0.033518 / 0.141683 (-0.108164) | 1.737507 / 1.452155 (0.285352) | 1.848291 / 1.492716 (0.355574) |\n\n### Benchmark: benchmark_getitem\\_100B.json\n\n| metric | get_batch_of\\_1024\\_random_rows | get_batch_of\\_1024\\_rows | get_first_row | get_last_row |\n|--------|---|---|---|---|\n| new / old (diff) | 0.316710 / 0.018006 (0.298703) | 0.504415 / 0.000490 (0.503925) | 0.042128 / 0.000200 (0.041928) | 0.000171 / 0.000054 (0.000117) |\n\n### Benchmark: benchmark_indices_mapping.json\n\n| metric | select | shard | shuffle | sort | train_test_split |\n|--------|---|---|---|---|---|\n| new / old (diff) | 0.032097 / 0.037411 (-0.005314) | 0.099371 / 0.014526 (0.084845) | 0.109311 / 0.176557 (-0.067246) | 0.177373 / 0.737135 (-0.559762) | 0.110753 / 0.296338 (-0.185585) |\n\n### Benchmark: benchmark_iterating.json\n\n| metric | read 5000 | read 50000 | read_batch 50000 10 | read_batch 50000 100 | read_batch 50000 1000 | read_formatted numpy 5000 | read_formatted pandas 5000 | read_formatted tensorflow 5000 | read_formatted torch 5000 | read_formatted_batch numpy 5000 10 | read_formatted_batch numpy 5000 1000 | shuffled read 5000 | shuffled read 50000 | shuffled read_batch 50000 10 | shuffled read_batch 50000 100 | shuffled read_batch 50000 1000 | shuffled read_formatted numpy 5000 | shuffled read_formatted_batch numpy 5000 10 | shuffled read_formatted_batch numpy 5000 1000 |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.688060 / 0.215209 (0.472851) | 6.255219 / 2.077655 (4.177564) | 2.696845 / 1.504120 (1.192725) | 2.395424 / 1.541195 (0.854230) | 2.414870 / 1.468490 (0.946380) | 0.865704 / 4.584777 (-3.719073) | 5.086828 / 3.745712 (1.341116) | 4.648107 / 5.269862 (-0.621754) | 3.091119 / 4.565676 (-1.474558) | 0.101787 / 0.424275 (-0.322489) | 0.008829 / 0.007607 (0.001222) | 0.772398 / 0.226044 (0.546354) | 7.700366 / 2.268929 (5.431438) | 3.608632 / 55.444624 (-51.835992) | 2.923309 / 6.876477 (-3.953168) | 2.952141 / 2.142072 (0.810069) | 1.093006 / 4.805227 (-3.712221) | 0.224363 / 6.500664 (-6.276301) | 0.074927 / 0.075469 (-0.000542) |\n\n### Benchmark: benchmark_map_filter.json\n\n| metric | filter | map fast-tokenizer batched | map identity | map identity batched | map no-op batched | map no-op batched numpy | map no-op batched pandas | map no-op batched pytorch | map no-op batched tensorflow |\n|--------|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 1.638414 / 1.841788 (-0.203374) | 23.486781 / 8.074308 (15.412473) | 21.129104 / 10.191392 (10.937712) | 0.259955 / 0.680424 (-0.420469) | 0.027305 / 0.534201 (-0.506895) | 0.464448 / 0.579283 (-0.114835) | 0.553737 / 0.434364 (0.119373) | 0.571318 / 0.540337 (0.030981) | 0.772917 / 1.386936 (-0.614019) |\n\n</details>\n</details>\n\n\n",

"<details>\n<summary>Show benchmarks</summary>\n\nPyArrow==8.0.0\n\n<details>\n<summary>Show updated benchmarks!</summary>\n\n### Benchmark: benchmark_array_xd.json\n\n| metric | read_batch_formatted_as_numpy after write_array2d | read_batch_formatted_as_numpy after write_flattened_sequence | read_batch_formatted_as_numpy after write_nested_sequence | read_batch_unformated after write_array2d | read_batch_unformated after write_flattened_sequence | read_batch_unformated after write_nested_sequence | read_col_formatted_as_numpy after write_array2d | read_col_formatted_as_numpy after write_flattened_sequence | read_col_formatted_as_numpy after write_nested_sequence | read_col_unformated after write_array2d | read_col_unformated after write_flattened_sequence | read_col_unformated after write_nested_sequence | read_formatted_as_numpy after write_array2d | read_formatted_as_numpy after write_flattened_sequence | read_formatted_as_numpy after write_nested_sequence | read_unformated after write_array2d | read_unformated after write_flattened_sequence | read_unformated after write_nested_sequence | write_array2d | write_flattened_sequence | write_nested_sequence |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.009093 / 0.011353 (-0.002260) | 0.005283 / 0.011008 (-0.005725) | 0.112299 / 0.038508 (0.073791) | 0.081341 / 0.023109 (0.058232) | 0.363799 / 0.275898 (0.087901) | 0.409261 / 0.323480 (0.085781) | 0.006400 / 0.007986 (-0.001586) | 0.003965 / 0.004328 (-0.000363) | 0.074389 / 0.004250 (0.070139) | 0.060654 / 0.037052 (0.023602) | 0.391046 / 0.258489 (0.132557) | 0.430514 / 0.293841 (0.136673) | 0.054900 / 0.128546 (-0.073646) | 0.017972 / 0.075646 (-0.057675) | 0.410875 / 0.419271 (-0.008396) | 0.067405 / 0.043533 (0.023873) | 0.371468 / 0.255139 (0.116329) | 0.435061 / 0.283200 (0.151861) | 0.038063 / 0.141683 (-0.103620) | 1.733509 / 1.452155 (0.281354) | 1.833899 / 1.492716 (0.341182) |\n\n### Benchmark: benchmark_getitem\\_100B.json\n\n| metric | get_batch_of\\_1024\\_random_rows | get_batch_of\\_1024\\_rows | get_first_row | get_last_row |\n|--------|---|---|---|---|\n| new / old (diff) | 0.243230 / 0.018006 (0.225224) | 0.605636 / 0.000490 (0.605146) | 0.004890 / 0.000200 (0.004690) | 0.000098 / 0.000054 (0.000043) |\n\n### Benchmark: benchmark_indices_mapping.json\n\n| metric | select | shard | shuffle | sort | train_test_split |\n|--------|---|---|---|---|---|\n| new / old (diff) | 0.027624 / 0.037411 (-0.009787) | 0.084799 / 0.014526 (0.070273) | 0.104405 / 0.176557 (-0.072152) | 0.165383 / 0.737135 (-0.571752) | 0.102083 / 0.296338 (-0.194255) |\n\n### Benchmark: benchmark_iterating.json\n\n| metric | read 5000 | read 50000 | read_batch 50000 10 | read_batch 50000 100 | read_batch 50000 1000 | read_formatted numpy 5000 | read_formatted pandas 5000 | read_formatted tensorflow 5000 | read_formatted torch 5000 | read_formatted_batch numpy 5000 10 | read_formatted_batch numpy 5000 1000 | shuffled read 5000 | shuffled read 50000 | shuffled read_batch 50000 10 | shuffled read_batch 50000 100 | shuffled read_batch 50000 1000 | shuffled read_formatted numpy 5000 | shuffled read_formatted_batch numpy 5000 10 | shuffled read_formatted_batch numpy 5000 1000 |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.578334 / 0.215209 (0.363125) | 5.369520 / 2.077655 (3.291866) | 2.294174 / 1.504120 (0.790055) | 2.054195 / 1.541195 (0.513000) | 2.007304 / 1.468490 (0.538814) | 0.839283 / 4.584777 (-3.745494) | 5.262288 / 3.745712 (1.516576) | 4.363346 / 5.269862 (-0.906516) | 2.854903 / 4.565676 (-1.710773) | 0.096975 / 0.424275 (-0.327300) | 0.008237 / 0.007607 (0.000630) | 0.646746 / 0.226044 (0.420702) | 6.250621 / 2.268929 (3.981693) | 2.900377 / 55.444624 (-52.544247) | 2.283238 / 6.876477 (-4.593239) | 2.443785 / 2.142072 (0.301713) | 0.991719 / 4.805227 (-3.813508) | 0.189755 / 6.500664 (-6.310909) | 0.067906 / 0.075469 (-0.007563) |\n\n### Benchmark: benchmark_map_filter.json\n\n| metric | filter | map fast-tokenizer batched | map identity | map identity batched | map no-op batched | map no-op batched numpy | map no-op batched pandas | map no-op batched pytorch | map no-op batched tensorflow |\n|--------|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 1.515563 / 1.841788 (-0.326225) | 21.956499 / 8.074308 (13.882191) | 19.161750 / 10.191392 (8.970358) | 0.238199 / 0.680424 (-0.442225) | 0.026771 / 0.534201 (-0.507430) | 0.450195 / 0.579283 (-0.129088) | 0.585168 / 0.434364 (0.150804) | 0.522945 / 0.540337 (-0.017393) | 0.776244 / 1.386936 (-0.610693) |\n\n</details>\nPyArrow==latest\n\n<details>\n<summary>Show updated benchmarks!</summary>\n\n### Benchmark: benchmark_array_xd.json\n\n| metric | read_batch_formatted_as_numpy after write_array2d | read_batch_formatted_as_numpy after write_flattened_sequence | read_batch_formatted_as_numpy after write_nested_sequence | read_batch_unformated after write_array2d | read_batch_unformated after write_flattened_sequence | read_batch_unformated after write_nested_sequence | read_col_formatted_as_numpy after write_array2d | read_col_formatted_as_numpy after write_flattened_sequence | read_col_formatted_as_numpy after write_nested_sequence | read_col_unformated after write_array2d | read_col_unformated after write_flattened_sequence | read_col_unformated after write_nested_sequence | read_formatted_as_numpy after write_array2d | read_formatted_as_numpy after write_flattened_sequence | read_formatted_as_numpy after write_nested_sequence | read_unformated after write_array2d | read_unformated after write_flattened_sequence | read_unformated after write_nested_sequence | write_array2d | write_flattened_sequence | write_nested_sequence |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.007997 / 0.011353 (-0.003356) | 0.005021 / 0.011008 (-0.005988) | 0.087308 / 0.038508 (0.048800) | 0.077760 / 0.023109 (0.054650) | 0.425313 / 0.275898 (0.149415) | 0.451470 / 0.323480 (0.127990) | 0.006848 / 0.007986 (-0.001137) | 0.004812 / 0.004328 (0.000484) | 0.071198 / 0.004250 (0.066947) | 0.058325 / 0.037052 (0.021273) | 0.427411 / 0.258489 (0.168922) | 0.466069 / 0.293841 (0.172228) | 0.048686 / 0.128546 (-0.079861) | 0.011841 / 0.075646 (-0.063806) | 0.086225 / 0.419271 (-0.333047) | 0.060500 / 0.043533 (0.016967) | 0.435580 / 0.255139 (0.180441) | 0.456919 / 0.283200 (0.173719) | 0.035094 / 0.141683 (-0.106588) | 1.582805 / 1.452155 (0.130650) | 1.717838 / 1.492716 (0.225122) |\n\n### Benchmark: benchmark_getitem\\_100B.json\n\n| metric | get_batch_of\\_1024\\_random_rows | get_batch_of\\_1024\\_rows | get_first_row | get_last_row |\n|--------|---|---|---|---|\n| new / old (diff) | 0.283967 / 0.018006 (0.265960) | 0.517496 / 0.000490 (0.517006) | 0.014747 / 0.000200 (0.014547) | 0.000099 / 0.000054 (0.000045) |\n\n### Benchmark: benchmark_indices_mapping.json\n\n| metric | select | shard | shuffle | sort | train_test_split |\n|--------|---|---|---|---|---|\n| new / old (diff) | 0.027870 / 0.037411 (-0.009541) | 0.083835 / 0.014526 (0.069309) | 0.099157 / 0.176557 (-0.077400) | 0.173210 / 0.737135 (-0.563925) | 0.094212 / 0.296338 (-0.202127) |\n\n### Benchmark: benchmark_iterating.json\n\n| metric | read 5000 | read 50000 | read_batch 50000 10 | read_batch 50000 100 | read_batch 50000 1000 | read_formatted numpy 5000 | read_formatted pandas 5000 | read_formatted tensorflow 5000 | read_formatted torch 5000 | read_formatted_batch numpy 5000 10 | read_formatted_batch numpy 5000 1000 | shuffled read 5000 | shuffled read 50000 | shuffled read_batch 50000 10 | shuffled read_batch 50000 100 | shuffled read_batch 50000 1000 | shuffled read_formatted numpy 5000 | shuffled read_formatted_batch numpy 5000 10 | shuffled read_formatted_batch numpy 5000 1000 |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.535720 / 0.215209 (0.320511) | 5.273730 / 2.077655 (3.196075) | 2.422560 / 1.504120 (0.918440) | 2.131416 / 1.541195 (0.590222) | 2.192000 / 1.468490 (0.723510) | 0.708469 / 4.584777 (-3.876308) | 4.758092 / 3.745712 (1.012380) | 3.940729 / 5.269862 (-1.329133) | 2.553093 / 4.565676 (-2.012583) | 0.084895 / 0.424275 (-0.339380) | 0.008730 / 0.007607 (0.001123) | 0.646975 / 0.226044 (0.420930) | 6.294811 / 2.268929 (4.025883) | 3.293964 / 55.444624 (-52.150660) | 2.568985 / 6.876477 (-4.307492) | 2.743786 / 2.142072 (0.601713) | 0.899733 / 4.805227 (-3.905494) | 0.193484 / 6.500664 (-6.307181) | 0.070012 / 0.075469 (-0.005457) |\n\n### Benchmark: benchmark_map_filter.json\n\n| metric | filter | map fast-tokenizer batched | map identity | map identity batched | map no-op batched | map no-op batched numpy | map no-op batched pandas | map no-op batched pytorch | map no-op batched tensorflow |\n|--------|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 1.502255 / 1.841788 (-0.339532) | 20.690234 / 8.074308 (12.615926) | 18.375791 / 10.191392 (8.184399) | 0.200135 / 0.680424 (-0.480289) | 0.029434 / 0.534201 (-0.504767) | 0.477267 / 0.579283 (-0.102016) | 0.566869 / 0.434364 (0.132505) | 0.543756 / 0.540337 (0.003418) | 0.700476 / 1.386936 (-0.686460) |\n\n</details>\n</details>\n\n\n"

] | "2023-08-03T14:46:04Z" | "2023-08-03T14:56:59Z" | "2023-08-03T14:46:18Z" | MEMBER | null | 0 | {

"diff_url": "https://github.com/huggingface/datasets/pull/6117.diff",

"html_url": "https://github.com/huggingface/datasets/pull/6117",

"merged_at": "2023-08-03T14:46:18Z",

"patch_url": "https://github.com/huggingface/datasets/pull/6117.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/6117"

} | null | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/6117/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/6117/timeline | null | null | true |

https://api.github.com/repos/huggingface/datasets/issues/5451 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/5451/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/5451/comments | https://api.github.com/repos/huggingface/datasets/issues/5451/events | https://github.com/huggingface/datasets/issues/5451 | 1,552,336,300 | I_kwDODunzps5chsWs | 5,451 | ImageFolder BadZipFile: Bad offset for central directory | {

"avatar_url": "https://avatars.githubusercontent.com/u/1524208?v=4",

"events_url": "https://api.github.com/users/hmartiro/events{/privacy}",

"followers_url": "https://api.github.com/users/hmartiro/followers",

"following_url": "https://api.github.com/users/hmartiro/following{/other_user}",

"gists_url": "https://api.github.com/users/hmartiro/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/hmartiro",

"id": 1524208,

"login": "hmartiro",

"node_id": "MDQ6VXNlcjE1MjQyMDg=",

"organizations_url": "https://api.github.com/users/hmartiro/orgs",

"received_events_url": "https://api.github.com/users/hmartiro/received_events",

"repos_url": "https://api.github.com/users/hmartiro/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/hmartiro/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/hmartiro/subscriptions",

"type": "User",

"url": "https://api.github.com/users/hmartiro"

} | [] | closed | false | null | [] | null | [

"Hi ! Could you share the full stack trace ? Which dataset did you try to load ?\r\n\r\nit may be related to https://github.com/huggingface/datasets/pull/5640",

"The `BadZipFile` error means the ZIP file is corrupted, so I'm closing this issue as it's not directly related to `datasets`.",

"For others that find this issue following a `BadZipFile` error, I had the same problem because I had a file in a folder dataset `my-image.target` and the datasets library was incorrectly determining that the (PNG) file was a zip archive. When it tried to extract the file, this error occurred. \r\n\r\nUpdating to `datasets==2.12.0` fixed the problem for me."

] | "2023-01-22T23:50:12Z" | "2023-05-23T10:35:48Z" | "2023-02-10T16:31:36Z" | NONE | null | null | null | ### Describe the bug

I'm getting the following exception:

```

lib/python3.10/zipfile.py:1353 in _RealGetContents │

│ │

│ 1350 │ │ # self.start_dir: Position of start of central directory │

│ 1351 │ │ self.start_dir = offset_cd + concat │

│ 1352 │ │ if self.start_dir < 0: │

│ ❱ 1353 │ │ │ raise BadZipFile("Bad offset for central directory") │

│ 1354 │ │ fp.seek(self.start_dir, 0) │

│ 1355 │ │ data = fp.read(size_cd) │

│ 1356 │ │ fp = io.BytesIO(data) │

╰──────────────────────────────────────────────────────────────────────────────────────────────────╯

BadZipFile: Bad offset for central directory

Extracting data files: 35%|█████████████████▊ | 38572/110812 [00:10<00:20, 3576.26it/s]

```

### Steps to reproduce the bug

```

load_dataset(

args.dataset_name,

args.dataset_config_name,

cache_dir=args.cache_dir,

),

```

### Expected behavior

loads the dataset

### Environment info

datasets==2.8.0

Python 3.10.8

Linux 129-146-3-202 5.15.0-52-generic #58~20.04.1-Ubuntu SMP Thu Oct 13 13:09:46 UTC 2022 x86_64 x86_64 x86_64 GNU/Linux | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/5451/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/5451/timeline | null | completed | false |

https://api.github.com/repos/huggingface/datasets/issues/4810 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/4810/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/4810/comments | https://api.github.com/repos/huggingface/datasets/issues/4810/events | https://github.com/huggingface/datasets/pull/4810 | 1,333,038,702 | PR_kwDODunzps484C9l | 4,810 | Add description to hellaswag dataset | {

"avatar_url": "https://avatars.githubusercontent.com/u/326577?v=4",

"events_url": "https://api.github.com/users/julien-c/events{/privacy}",

"followers_url": "https://api.github.com/users/julien-c/followers",

"following_url": "https://api.github.com/users/julien-c/following{/other_user}",

"gists_url": "https://api.github.com/users/julien-c/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/julien-c",

"id": 326577,

"login": "julien-c",

"node_id": "MDQ6VXNlcjMyNjU3Nw==",

"organizations_url": "https://api.github.com/users/julien-c/orgs",

"received_events_url": "https://api.github.com/users/julien-c/received_events",

"repos_url": "https://api.github.com/users/julien-c/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/julien-c/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/julien-c/subscriptions",

"type": "User",