Upload folder using huggingface_hub

This view is limited to 50 files because it contains too many changes.

- .gitattributes +6 -0

- adetailer/.github/ISSUE_TEMPLATE/bug_report.yaml +53 -0

- adetailer/.github/ISSUE_TEMPLATE/feature_request.yaml +24 -0

- adetailer/.github/ISSUE_TEMPLATE/question.yaml +10 -0

- adetailer/.github/workflows/stale.yml +13 -0

- adetailer/.gitignore +196 -0

- adetailer/.pre-commit-config.yaml +20 -0

- adetailer/.vscode/extensions.json +8 -0

- adetailer/.vscode/settings.json +8 -0

- adetailer/CHANGELOG.md +377 -0

- adetailer/LICENSE.md +662 -0

- adetailer/README.md +97 -0

- adetailer/Taskfile.yml +27 -0

- adetailer/adetailer/__init__.py +18 -0

- adetailer/adetailer/__version__.py +1 -0

- adetailer/adetailer/args.py +236 -0

- adetailer/adetailer/common.py +132 -0

- adetailer/adetailer/mask.py +256 -0

- adetailer/adetailer/mediapipe.py +168 -0

- adetailer/adetailer/traceback.py +161 -0

- adetailer/adetailer/ui.py +640 -0

- adetailer/adetailer/ultralytics.py +51 -0

- adetailer/controlnet_ext/__init__.py +7 -0

- adetailer/controlnet_ext/controlnet_ext.py +167 -0

- adetailer/controlnet_ext/restore.py +43 -0

- adetailer/install.py +76 -0

- adetailer/preload.py +9 -0

- adetailer/pyproject.toml +42 -0

- adetailer/scripts/!adetailer.py +1000 -0

- kohya-sd-scripts-webui/.gitignore +9 -0

- kohya-sd-scripts-webui/README.md +22 -0

- kohya-sd-scripts-webui/built-in-presets.json +126 -0

- kohya-sd-scripts-webui/install.py +116 -0

- kohya-sd-scripts-webui/kohya-sd-scripts-webui-colab.ipynb +157 -0

- kohya-sd-scripts-webui/launch.py +79 -0

- kohya-sd-scripts-webui/main.py +14 -0

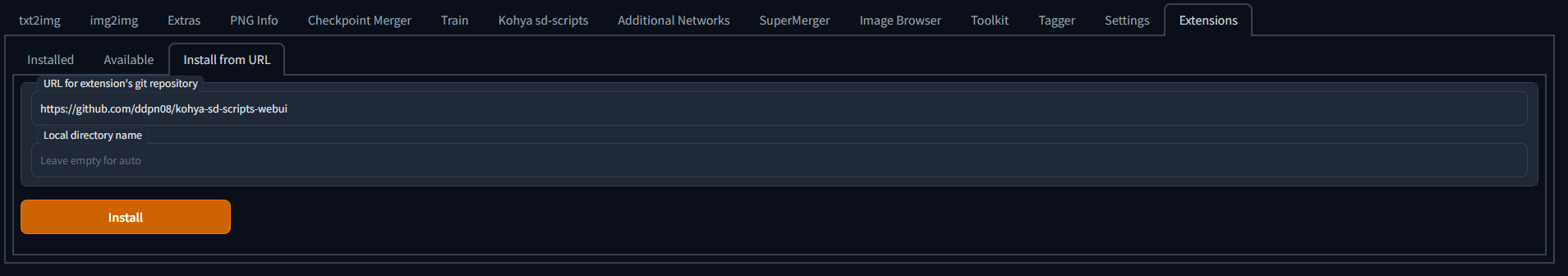

- kohya-sd-scripts-webui/screenshots/installation-extension.png +0 -0

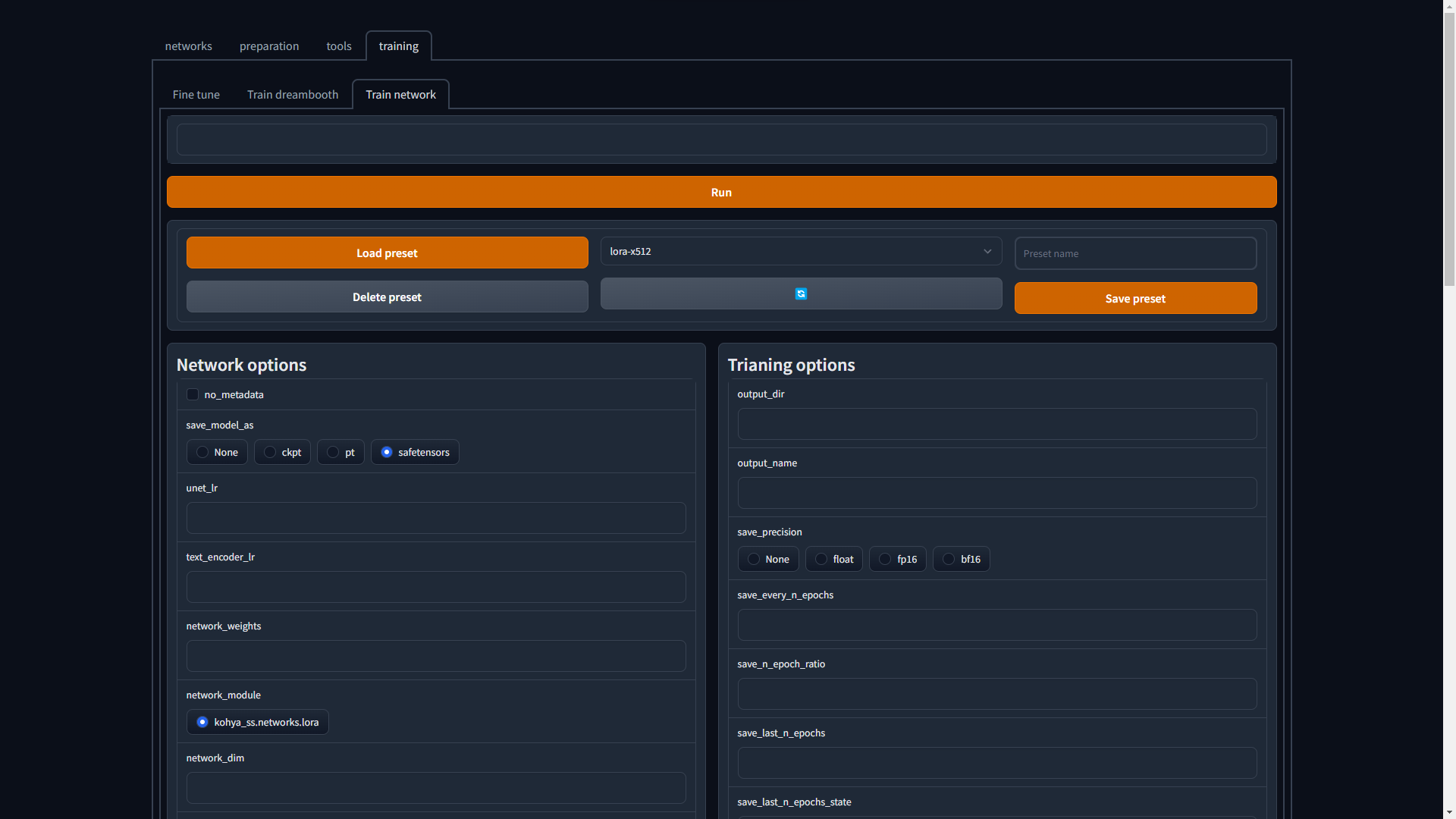

- kohya-sd-scripts-webui/screenshots/webui-01.png +0 -0

- kohya-sd-scripts-webui/script.js +87 -0

- kohya-sd-scripts-webui/scripts/main.py +98 -0

- kohya-sd-scripts-webui/scripts/ngrok.py +28 -0

- kohya-sd-scripts-webui/scripts/presets.py +179 -0

- kohya-sd-scripts-webui/scripts/runner.py +113 -0

- kohya-sd-scripts-webui/scripts/shared.py +32 -0

- kohya-sd-scripts-webui/scripts/tabs/networks/check_lora_weights.py +23 -0

- kohya-sd-scripts-webui/scripts/tabs/networks/extract_lora_from_models.py +25 -0

- kohya-sd-scripts-webui/scripts/tabs/networks/lora_interrogator.py +23 -0

- kohya-sd-scripts-webui/scripts/tabs/networks/merge_lora.py +23 -0

- kohya-sd-scripts-webui/scripts/tabs/networks/resize_lora.py +23 -0

- kohya-sd-scripts-webui/scripts/tabs/networks/svd_merge_lora.py +23 -0

.gitattributes

ADDED

@@ -0,0 +1,6 @@
+sd-civitai-browser-plus/aria2/lin/aria2 filter=lfs diff=lfs merge=lfs -text
+sd-civitai-browser-plus/aria2/win/aria2.exe filter=lfs diff=lfs merge=lfs -text
+sd-webui-controlnet/annotator/oneformer/oneformer/data/bpe_simple_vocab_16e6.txt.gz filter=lfs diff=lfs merge=lfs -text
+sd-webui-inpaint-anything/images/inpaint_anything_ui_image_1.png filter=lfs diff=lfs merge=lfs -text
+stable-diffusion-webui-aesthetic-gradients/ss.png filter=lfs diff=lfs merge=lfs -text
+stable-diffusion-webui-rembg/preview.png filter=lfs diff=lfs merge=lfs -text
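Each `.gitattributes` line above pairs a path with the attributes `filter=lfs diff=lfs merge=lfs -text`, which is how Git LFS marks a file as stored outside the regular object database. As a minimal sketch (the function name `lfs_tracked_paths` is hypothetical, and quoted patterns containing spaces are not handled), the tracked paths could be listed like this:

```python
from __future__ import annotations


def lfs_tracked_paths(gitattributes_text: str) -> list[str]:
    """Return the path patterns whose attribute list includes filter=lfs."""
    tracked = []
    for line in gitattributes_text.splitlines():
        line = line.strip()
        # Skip blank lines and comments.
        if not line or line.startswith("#"):
            continue
        # First token is the pattern, the rest are attributes.
        pattern, *attrs = line.split()
        if "filter=lfs" in attrs:
            tracked.append(pattern)
    return tracked


SAMPLE = """\
sd-civitai-browser-plus/aria2/lin/aria2 filter=lfs diff=lfs merge=lfs -text
stable-diffusion-webui-rembg/preview.png filter=lfs diff=lfs merge=lfs -text
"""

print(lfs_tracked_paths(SAMPLE))
```

The sketch only reads the file; it does not check whether the listed paths were actually uploaded as LFS pointers.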

adetailer/.github/ISSUE_TEMPLATE/bug_report.yaml

ADDED

@@ -0,0 +1,53 @@
+name: Bug report
+description: Create a report
+title: "[Bug]: "
+labels:
+  - bug
+
+body:
+  - type: textarea
+    attributes:
+      label: Describe the bug
+      description: A clear and concise description of what the bug is.
+      placeholder: |
+        Any language accepted
+        아무 언어 사용가능
+        すべての言語に対応
+        接受所有语言
+        Se aceptan todos los idiomas
+        Alle Sprachen werden akzeptiert
+        Toutes les langues sont acceptées
+        Принимаются все языки
+
+  - type: textarea
+    attributes:
+      label: Screenshots
+      description: Screenshots related to the issue.
+
+  - type: textarea
+    attributes:
+      label: Console logs, from start to end.
+      description: |
+        The full console log of your terminal.
+      placeholder: |
+        Python ...
+        Version: ...
+        Commit hash: ...
+        Installing requirements
+        ...
+
+        Launching Web UI with arguments: ...
+        [-] ADetailer initialized. version: ...
+        ...
+        ...
+
+        Traceback (most recent call last):
+        ...
+        ...
+      render: Shell
+    validations:
+      required: true
+
+  - type: textarea
+    attributes:
+      label: List of installed extensions

adetailer/.github/ISSUE_TEMPLATE/feature_request.yaml

ADDED

@@ -0,0 +1,24 @@
+name: Feature request
+description: Suggest an idea for this project
+title: "[Feature Request]: "
+
+body:
+  - type: textarea
+    attributes:
+      label: Is your feature request related to a problem? Please describe.
+      description: A clear and concise description of what the problem is. Ex. I'm always frustrated when [...]
+
+  - type: textarea
+    attributes:
+      label: Describe the solution you'd like
+      description: A clear and concise description of what you want to happen.
+
+  - type: textarea
+    attributes:
+      label: Describe alternatives you've considered
+      description: A clear and concise description of any alternative solutions or features you've considered.
+
+  - type: textarea
+    attributes:
+      label: Additional context
+      description: Add any other context or screenshots about the feature request here.

adetailer/.github/ISSUE_TEMPLATE/question.yaml

ADDED

@@ -0,0 +1,10 @@
+name: Question
+description: Write a question
+labels:
+  - question
+
+body:
+  - type: textarea
+    attributes:
+      label: Question
+      description: Please do not write bug reports or feature requests here.

adetailer/.github/workflows/stale.yml

ADDED

@@ -0,0 +1,13 @@
+name: 'Close stale issues and PRs'
+on:
+  schedule:
+    - cron: '30 1 * * *'
+
+jobs:
+  stale:
+    runs-on: ubuntu-latest
+    steps:
+      - uses: actions/stale@v8
+        with:
+          days-before-stale: 23
+          days-before-close: 3
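The workflow above marks an issue or PR stale after 23 idle days and closes it after 3 more idle days. The following sketch works out the earliest dates for a given last-activity date; the function name `stale_timeline` is hypothetical, and the calculation ignores labels, exemptions, and the exact time of the daily cron run, so real dates may land slightly later.

```python
from datetime import date, timedelta

DAYS_BEFORE_STALE = 23  # value of `days-before-stale` in the workflow
DAYS_BEFORE_CLOSE = 3   # value of `days-before-close` in the workflow


def stale_timeline(last_activity: date) -> tuple:
    """Return the earliest (stale_on, close_on) dates if no further activity occurs."""
    stale_on = last_activity + timedelta(days=DAYS_BEFORE_STALE)
    close_on = stale_on + timedelta(days=DAYS_BEFORE_CLOSE)
    return stale_on, close_on


stale_on, close_on = stale_timeline(date(2024, 1, 1))
print(stale_on, close_on)
```

With these settings, an untouched item is closed roughly 26 days after its last activity.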

adetailer/.gitignore

ADDED

@@ -0,0 +1,196 @@
+# Created by https://www.toptal.com/developers/gitignore/api/python,visualstudiocode
+# Edit at https://www.toptal.com/developers/gitignore?templates=python,visualstudiocode
+
+### Python ###
+# Byte-compiled / optimized / DLL files
+__pycache__/
+*.py[cod]
+*$py.class
+
+# C extensions
+*.so
+
+# Distribution / packaging
+.Python
+build/
+develop-eggs/
+dist/
+downloads/
+eggs/
+.eggs/
+lib/
+lib64/
+parts/
+sdist/
+var/
+wheels/
+share/python-wheels/
+*.egg-info/
+.installed.cfg
+*.egg
+MANIFEST
+
+# PyInstaller
+# Usually these files are written by a python script from a template
+# before PyInstaller builds the exe, so as to inject date/other infos into it.
+*.manifest
+*.spec
+
+# Installer logs
+pip-log.txt
+pip-delete-this-directory.txt
+
+# Unit test / coverage reports
+htmlcov/
+.tox/
+.nox/
+.coverage
+.coverage.*
+.cache
+nosetests.xml
+coverage.xml
+*.cover
+*.py,cover
+.hypothesis/
+.pytest_cache/
+cover/
+
+# Translations
+*.mo
+*.pot
+
+# Django stuff:
+*.log
+local_settings.py
+db.sqlite3
+db.sqlite3-journal
+
+# Flask stuff:
+instance/
+.webassets-cache
+
+# Scrapy stuff:
+.scrapy
+
+# Sphinx documentation
+docs/_build/
+
+# PyBuilder
+.pybuilder/
+target/
+
+# Jupyter Notebook
+.ipynb_checkpoints
+
+# IPython
+profile_default/
+ipython_config.py
+
+# pyenv
+# For a library or package, you might want to ignore these files since the code is
+# intended to run in multiple environments; otherwise, check them in:
+# .python-version
+
+# pipenv
+# According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.
+# However, in case of collaboration, if having platform-specific dependencies or dependencies
+# having no cross-platform support, pipenv may install dependencies that don't work, or not
+# install all needed dependencies.
+#Pipfile.lock
+
+# poetry
+# Similar to Pipfile.lock, it is generally recommended to include poetry.lock in version control.
+# This is especially recommended for binary packages to ensure reproducibility, and is more
+# commonly ignored for libraries.
+# https://python-poetry.org/docs/basic-usage/#commit-your-poetrylock-file-to-version-control
+#poetry.lock
+
+# pdm
+# Similar to Pipfile.lock, it is generally recommended to include pdm.lock in version control.
+#pdm.lock
+# pdm stores project-wide configurations in .pdm.toml, but it is recommended to not include it
+# in version control.
+# https://pdm.fming.dev/#use-with-ide
+.pdm.toml
+
+# PEP 582; used by e.g. github.com/David-OConnor/pyflow and github.com/pdm-project/pdm
+__pypackages__/
+
+# Celery stuff
+celerybeat-schedule
+celerybeat.pid
+
+# SageMath parsed files
+*.sage.py
+
+# Environments
+.env
+.venv
+env/
+venv/
+ENV/
+env.bak/
+venv.bak/
+
+# Spyder project settings
+.spyderproject
+.spyproject
+
+# Rope project settings
+.ropeproject
+
+# mkdocs documentation
+/site
+
+# mypy
+.mypy_cache/
+.dmypy.json
+dmypy.json
+
+# Pyre type checker
+.pyre/
+
+# pytype static type analyzer
+.pytype/
+
+# Cython debug symbols
+cython_debug/
+
+# PyCharm
+# JetBrains specific template is maintained in a separate JetBrains.gitignore that can
+# be found at https://github.com/github/gitignore/blob/main/Global/JetBrains.gitignore
+# and can be added to the global gitignore or merged into this file. For a more nuclear
+# option (not recommended) you can uncomment the following to ignore the entire idea folder.
+#.idea/
+
+### Python Patch ###
+# Poetry local configuration file - https://python-poetry.org/docs/configuration/#local-configuration
+poetry.toml
+
+# ruff
+.ruff_cache/
+
+# LSP config files
+pyrightconfig.json
+
+### VisualStudioCode ###
+.vscode/*
+!.vscode/settings.json
+!.vscode/tasks.json
+!.vscode/launch.json
+!.vscode/extensions.json
+!.vscode/*.code-snippets
+
+# Local History for Visual Studio Code
+.history/
+
+# Built Visual Studio Code Extensions
+*.vsix
+
+### VisualStudioCode Patch ###
+# Ignore all local history of files
+.history
+.ionide
+
+# End of https://www.toptal.com/developers/gitignore/api/python,visualstudiocode
+*.ipynb
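The `### VisualStudioCode ###` rules above ignore everything under `.vscode/` and then re-include a few files with `!` patterns, which is why `adetailer/.vscode/extensions.json` and `adetailer/.vscode/settings.json` can still be part of this commit. The sketch below illustrates the "last matching rule wins" idea for just these rules. It is a deliberate simplification built on `fnmatch`, not Git's real matcher (for example, it does not handle directory-only patterns, anchoring, or `**`), and the helper name `is_ignored` is hypothetical.

```python
from fnmatch import fnmatchcase

# The VS Code rules copied from the .gitignore above.
VSCODE_RULES = [
    ".vscode/*",
    "!.vscode/settings.json",
    "!.vscode/tasks.json",
    "!.vscode/launch.json",
    "!.vscode/extensions.json",
    "!.vscode/*.code-snippets",
]


def is_ignored(path: str, rules: list) -> bool:
    """Simplified check: the last rule that matches decides; `!` re-includes."""
    ignored = False
    for rule in rules:
        negated = rule.startswith("!")
        pattern = rule[1:] if negated else rule
        if fnmatchcase(path, pattern):
            ignored = not negated
    return ignored


print(is_ignored(".vscode/settings.json", VSCODE_RULES))
print(is_ignored(".vscode/cache.db", VSCODE_RULES))
```

For authoritative answers on a real repository, `git check-ignore -v <path>` reports which rule applies.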

adetailer/.pre-commit-config.yaml

ADDED

@@ -0,0 +1,20 @@
+repos:
+  - repo: https://github.com/pre-commit/pre-commit-hooks
+    rev: v4.5.0
+    hooks:
+      - id: check-ast
+      - id: trailing-whitespace
+        args: [--markdown-linebreak-ext=md]
+      - id: end-of-file-fixer
+      - id: mixed-line-ending
+
+  - repo: https://github.com/astral-sh/ruff-pre-commit
+    rev: v0.1.14
+    hooks:
+      - id: ruff
+        args: [--fix, --exit-non-zero-on-fix]
+
+  - repo: https://github.com/psf/black-pre-commit-mirror
+    rev: 23.12.1
+    hooks:
+      - id: black

adetailer/.vscode/extensions.json

ADDED

@@ -0,0 +1,8 @@
+{
+  "recommendations": [
+    "ms-python.black-formatter",
+    "kevinrose.vsc-python-indent",
+    "charliermarsh.ruff",
+    "shardulm94.trailing-spaces"
+  ]
+}

adetailer/.vscode/settings.json

ADDED

@@ -0,0 +1,8 @@
+{
+  "explorer.fileNesting.enabled": true,
+  "explorer.fileNesting.patterns": {
+    "pyproject.toml": ".env, .gitignore, .pre-commit-config.yaml, Taskfile.yml",
+    "README.md": "LICENSE.md, CHANGELOG.md",
+    "install.py": "preload.py"
+  }
+}

adetailer/CHANGELOG.md

ADDED

@@ -0,0 +1,377 @@
+# Changelog
+
+## 2024-01-23
+
+- v24.1.2
+- Added a `Passthrough` option for the controlnet model. It uses the ControlNet options passed in as input unchanged
+- Added fastapi endpoints
+
+## 2024-01-10
+
+- v24.1.1
+- SDNext compatibility update (issue #466)
+- Added initial values to the settings state
+- The state now changes whenever a widget value changes (previously it was applied when the Generate button was pressed)
+- The `inpaint_depth_hand` ControlNet model is now recognized as a depth model (issue #463)
+
+## 2024-01-04
+
+- v24.1.0
+- Added the `depth_hand_refiner` ControlNet (PR #460)
+
+## 2023-12-30
+
+- v23.12.0
+- Changed how script_args is copied to avoid deepcopy errors with some scripts that add files as arguments
+- When the skip img2img feature is used, width and height are fixed to 128, which slightly improves the skip step
+- adetailer is automatically disabled in img2img inpainting mode
+- The params.txt file that was created first is now always kept
+
+## 2023-11-19
+
+- v23.11.1
+- Added negpip to the default script list
+  - Not applied retroactively to existing installations
+- Fixed the skip img2img option not being applied correctly with 2 or more steps
+- Fixed the case where SD.Next passes the image as np.ndarray
+- Added the ControlNet path to sys.path so that no import error occurs even when options such as --data-dir are set.
+
+## 2023-10-30
+
+- v23.11.0
+- Changed how the image index is calculated
+- adetailer can no longer run on webui versions below 1.1.0
+- Added more ControlNet preprocessor choices
+- Added an option to set an additional yolo model directory
+- Fixed infotext entries containing `/` not being restored from exif
+  - Images generated with earlier versions still cannot be restored
+- Added an option to always apply the same seed within the same tab
+- When ControlNet 1.1.411 (f2aafcf2beb99a03cbdf7db73852228ccd6bd1d6) is in use,
+  prints a message that it cannot be used with webui versions below 1.6.0
+
+## 2023-10-15
+
+- v23.10.1
+- Added prompt S/R to the xyz grid
+- The sampler name is now changed as well, to handle samplers that raise an error in img2img when steps is 1
+
+## 2023-10-07
+
+- v23.10.0
+- No longer keeps retrying when a Hugging Face model download fails
+- Added a feature to skip the img2img step in img2img
+- Shows the detection step in live preview (PR #352)
+
+## 2023-09-20
+
+- v23.9.3
+- Updated ultralytics to version 8.0.181 (https://github.com/ultralytics/ultralytics/pull/4891)
+- Lazy import of mediapipe and ultralytics
+
+## 2023-09-10
+
+- v23.9.2
+- (Experimental) VAE selection
+
+## 2023-09-01
+
+- v23.9.1
+- Fixed a backward-compatibility problem caused by using an argument added in webui 1.6.0
+
+## 2023-08-31
+
+- v23.9.0
+- (Experimental) checkpoint selection
+  - The refresh button was left out of the implementation because of a bug
+- Following the 1.6.0 update, no longer switches to Euler when a sampler that is unavailable in img2img is selected
+- When invalid arguments are passed, adetailer is disabled instead of raising an error
+
+
+## 2023-08-25
+
+- v23.8.1
+- Fixed adetailer being disabled after the model is set to `None` in the xyz grid
+- Processing stops when skip is pressed
+- cpu is now also used when `--medvram-sdxl` is set
+
+## 2023-08-14
+
+- v23.8.0
+- Added the `[PROMPT]` keyword. When used in `ad_prompt` or `ad_negative_prompt`, it is replaced with the input prompt (PR #243)
+- Added the Only top k largest option (PR #264)
+- Updated the ultralytics version
+
+
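The `[PROMPT]` keyword in the v23.8.0 entry is a plain substitution: wherever it appears in `ad_prompt` or `ad_negative_prompt`, the main input prompt is spliced in. A minimal sketch of that behaviour follows. The function name `apply_prompt_keyword` is hypothetical and this is not the extension's actual code; the fallback for an empty `ad_prompt` follows the v23.6.4 entry, which states that a blank field uses the input prompt.

```python
def apply_prompt_keyword(ad_prompt: str, input_prompt: str) -> str:
    """Resolve the prompt ADetailer would use for its inpainting pass (sketch)."""
    if not ad_prompt:
        # Blank ad_prompt: fall back to the main prompt (per the v23.6.4 entry).
        return input_prompt
    # Otherwise splice the main prompt in wherever [PROMPT] appears.
    return ad_prompt.replace("[PROMPT]", input_prompt)


print(apply_prompt_keyword("[PROMPT], detailed face", "a portrait of a woman"))
```

The same helper would apply unchanged to `ad_negative_prompt` with the main negative prompt as `input_prompt`.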

+## 2023-07-31
+
+- v23.7.11
+- Added the separate clip skip option
+- Cleaned up install requirements (new ultralytics version, mediapipe~=3.20)
+
+## 2023-07-28
+
+- v23.7.10
+- Cleaned up the ultralytics and mediapipe import statements
+- Removed colors from the traceback (because of the api) and made it show library versions as well.
+- Removed huggingface_hub and pydantic from install.py
+- Deleted unused ControlNet-related code
+
+
+## 2023-07-23
+
+- v23.7.9
+- Resolved the `ultralytics.utils` ModuleNotFoundError (https://github.com/ultralytics/ultralytics/issues/3856)
+- Prevented `pydantic` 2.0 or later from being installed
+- Fixed the `controlnet_dir` cmd args problem (PR #107)
+
+## 2023-07-20
+
+- v23.7.8
+- Reverted the addition of `paste_field_names`
+
+## 2023-07-19
+
+- v23.7.7
+- Added an option to choose a separate sampler for the inpainting step (also added to the xyz grid)
+- Fixed the batch index problem on webui 1.0.0-pre and earlier
+- Added `paste_field_names` to the script. Not sure whether it is actually used
+
+## 2023-07-16
+
+- v23.7.6
+- `py-cpuinfo` is now installed in advance for the cpuinfo feature added in `ultralytics 8.0.135`. (Without it, a restart is required when using cpu or mps)
+- init_image is converted to RGB when it is not in RGB mode.
+
+## 2023-07-07
+
+- v23.7.4
+- Fixed the prompt index problem when batch count > 1
+
+- v23.7.5
+- Made i2i's `cached_uc` and `cached_c` separate instances from p's `cached_uc` and `cached_c`
+
+## 2023-07-05
+
+- v23.7.3
+- Bug fixes
+  - `object()` not being JSON-serializable
+  - all_prompts becoming fixed when batch count is 2 or more, as a result of calling `process`
+  - `ad-before` and `ad-preview` image filenames differing from the actual filenames
+  - pydantic 2.0 compatibility problem
+
+## 2023-07-04
+
+- v23.7.2
+- Added the `mediapipe_face_mesh_eyes_only` model: detects with `mediapipe_face_mesh`, then uses only the eyes.
+- Calls `scripts.postprocess` before each batch starts and `scripts.process` after it.
+  - Takes slightly longer when ControlNet is used, but helps resolve some problems.
+- Added `lora_block_weight` to the script whitelist.
+  - Anyone who has used ADetailer even once must add it manually.
+
+## 2023-07-03
+
+- v23.7.1
+- Calls close on the `StableDiffusionProcessing` object after running `process_images`
+- Added an attribute for checking whether it was invoked through the api
+- When a `NansException` occurs, the remaining steps continue instead of stopping
+
+## 2023-07-02
+
+- v23.7.0
+- When a `NansException` occurs, it is logged and the original image is returned
+- Error tracing using `rich`
+  - Added `rich` to install.py
+- Fixed args values changing along with a component when its value is changed during generation (issue #180)
+- The actual prompts applied to ad_prompt and ad_negative_prompt can be checked in the terminal log (only when they differ from the input)
+
+## 2023-06-28
+
+- v23.6.4
+- Maximum number of models 5 -> 10
+- Added text noting that the input prompt is used when ad_prompt and ad_negative_prompt are left blank
+- Logs when a huggingface model download fails
+- Fixed the remaining inputs being ignored when the 1st model is `None`
+- When `adetailer` is passed to `--use-cpu`, the yolo model runs on cpu
+
+## 2023-06-20
+
+- v23.6.3
+- Three modules can now be used with the ControlNet inpaint model
+- Added the Noise Multiplier option (PR #149)
+- Set the minimum pydantic version to 1.10.8 (Issue #146)
+
+## 2023-06-05
+
+- v23.6.2
+- ADetailer can now be used in xyz_grid.
+  - Only 8 options, applied to the 1st tab.
+
+## 2023-06-01
+
+- v23.6.1
+- Support for five ControlNet models: `inpaint, scribble, lineart, openpose, tile` (PR #107)
+- Added controlnet guidance start, end arguments (PR #107)
+- Changed to use `modules.extensions` to load the ControlNet extension and find its path
+- Split ControlNet into a separate function in the ui
+
+## 2023-05-30
+
+- v23.6.0
+- Renamed the script from `After Detailer` to `ADetailer`
+  - API users need to update
+- Changed some settings
+  - `ad_conf` → `ad_confidence`. int between 0 and 100 → float between 0.0 and 1.0
+  - `ad_inpaint_full_res` → `ad_inpaint_only_masked`
+  - `ad_inpaint_full_res_padding` → `ad_inpaint_only_masked_padding`
+- Added the mediapipe face mesh model
+  - Minimum mediapipe version `0.10.0`
+
+- Removed rich traceback
+- When a huggingface download fails, no error is raised and the model is removed instead
+
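The v23.6.0 entry renames three settings and changes the scale of the confidence value, so API payloads written for earlier versions need to be adapted. The sketch below shows one way a client could migrate an old argument dict. The helper `migrate_args` and the sample `ad_model` value are hypothetical; only the key names and the 0~100 → 0.0~1.0 conversion come from the changelog.

```python
# Old key -> new key, as listed in the v23.6.0 changelog entry.
RENAMED_KEYS = {
    "ad_conf": "ad_confidence",
    "ad_inpaint_full_res": "ad_inpaint_only_masked",
    "ad_inpaint_full_res_padding": "ad_inpaint_only_masked_padding",
}


def migrate_args(old_args: dict) -> dict:
    """Return a copy of old_args using the post-v23.6.0 names and value ranges."""
    new_args = {}
    for key, value in old_args.items():
        new_key = RENAMED_KEYS.get(key, key)
        if key == "ad_conf":
            # int in 0..100 becomes float in 0.0..1.0
            value = value / 100
        new_args[new_key] = value
    return new_args


old = {"ad_model": "example_model.pt", "ad_conf": 30, "ad_inpaint_full_res": True}
print(migrate_args(old))
```

Keys that were not renamed pass through unchanged, so the helper is safe to run on payloads that already use the new names.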

| 232 |

+

## 2023-05-26

|

| 233 |

+

|

| 234 |

+

- v23.5.19

|

| 235 |

+

- 1번째 탭에도 `None` 옵션을 추가함

|

| 236 |

+

- api로 ad controlnet model에 inpaint가 아닌 다른 컨트롤넷 모델을 사용하지 못하도록 막음

|

| 237 |

+

- adetailer 진행중에 total tqdm 진행바 업데이트를 멈춤

|

| 238 |

+

- state.inturrupted 상태에서 adetailer 과정을 중지함

|

| 239 |

+

- 컨트롤넷 process를 각 batch가 끝난 순간에만 호출하도록 변경

|

| 240 |

+

|

| 241 |

+

### 2023-05-25

|

| 242 |

+

|

| 243 |

+

- v23.5.18

|

| 244 |

+

- 컨트롤넷 관련 수정

|

| 245 |

+

- unit의 `input_mode`를 `SIMPLE`로 모두 변경

|

| 246 |

+

- 컨트롤넷 유넷 훅과 하이잭 함수들을 adetailer를 실행할 때에만 되돌리는 기능 추가

|

| 247 |

+

- adetailer 처리가 끝난 뒤 컨트롤넷 스크립트의 process를 다시 진행함. (batch count 2 이상일때의 문제 해결)

|

| 248 |

+

- 기본 활성 스크립트 목록에서 컨트롤넷을 뺌

|

| 249 |

+

|

| 250 |

+

### 2023-05-22

|

| 251 |

+

|

| 252 |

+

- v23.5.17

|

| 253 |

+

- 컨트롤넷 확장이 있으면 컨트롤넷 스크립트를 활성화함. (컨트롤넷 관련 문제 해결)

|

| 254 |

+

- 모든 컴포넌트에 elem_id 설정

|

| 255 |

+

- ui에 버전을 표시함

|

| 256 |

+

|

| 257 |

+

|

| 258 |

+

### 2023-05-19

|

| 259 |

+

|

| 260 |

+

- v23.5.16

|

| 261 |

+

- 추가한 옵션

|

| 262 |

+

- Mask min/max ratio

|

| 263 |

+

- Mask merge mode

|

| 264 |

+

- Restore faces after ADetailer

|

| 265 |

+

- 옵션들을 Accordion으로 묶음

|

| 266 |

+

|

| 267 |

+

### 2023-05-18

|

| 268 |

+

|

| 269 |

+

- v23.5.15

|

| 270 |

+

- Changed to import only what is needed (fixes the VAE loading error and speeds up loading)

|

| 271 |

+

|

| 272 |

+

### 2023-05-17

|

| 273 |

+

|

| 274 |

+

- v23.5.14

|

| 275 |

+

- Added `[SKIP]` to skip part of the ad prompt

|

| 276 |

+

- Added a bbox sorting option

|

| 277 |

+

- Created type hints for sd_webui

|

| 278 |

+

- Fixed an API error related to the enable checker?

|

| 279 |

+

|

| 280 |

+

### 2023-05-15

|

| 281 |

+

|

| 282 |

+

- v23.5.13

|

| 283 |

+

- Added `[SEP]` to split the ad prompt and apply each part separately

|

| 284 |

+

- Switched the enable checker back to pydantic

|

| 285 |

+

- Moved UI-related functions into the adetailer.ui folder

|

| 286 |

+

- Disabled all controlnet units when controlnet is used

|

| 287 |

+

- Created the adetailer folder if it does not exist

|

| 288 |

+

|

| 289 |

+

### 2023-05-13

|

| 290 |

+

|

| 291 |

+

- v23.5.12

|

| 292 |

+

- Changed inputs other than `ad_enable` to be passed as dict

|

| 293 |

+

- Especially convenient when used through the web API

|

| 294 |

+

- web api breaking change

|

| 295 |

+

- Fixed a bug where the `mask_preprocess` argument was not passed (PR #47)

|

| 296 |

+

- Added the `--ad-no-huggingface` option to skip downloading models from huggingface

|

| 297 |

+

|

| 298 |

+

### 2023-05-12

|

| 299 |

+

|

| 300 |

+

- v23.5.11

|

| 301 |

+

- Removed the `ultralytics` warning

|

| 302 |

+

- Removed more unnecessary exif parameters

|

| 303 |

+

- Added the `use separate steps` option

|

| 304 |

+

- Adjusted the UI layout

|

| 305 |

+

|

| 306 |

+

### 2023-05-09

|

| 307 |

+

|

| 308 |

+

- v23.5.10

|

| 309 |

+

- Added an option to apply only selected scripts to ADetailer, default `True`. Configurable in the Settings tab.

|

| 310 |

+

- Default: `dynamic_prompting,dynamic_thresholding,wildcards,wildcard_recursive`

|

| 311 |

+

- Added the `person_yolov8s-seg.pt` model

|

| 312 |

+

- Set the minimum `ultralytics` version to `8.0.97` (the version that fixes the C:\\ issue)

|

| 313 |

+

|

| 314 |

+

### 2023-05-08

|

| 315 |

+

|

| 316 |

+

- v23.5.9

|

| 317 |

+

- Two or more models can be used. Default: 2, max: 5

|

| 318 |

+

- Enabled segment models. Added `person_yolov8n-seg.pt`

|

| 319 |

+

|

| 320 |

+

### 2023-05-07

|

| 321 |

+

|

| 322 |

+

- v23.5.8

|

| 323 |

+

- Arrow key support in the prompt and negative prompt (PR #24)

|

| 324 |

+

- Added `mask_preprocess`. The seed value may differ from previous versions!

|

| 325 |

+

- Saved the before image only when the image was actually processed

|

| 326 |

+

- Changed the labels in the settings panel to something more appropriate than ADetailer

|

| 327 |

+

|

| 328 |

+

### 2023-05-06

|

| 329 |

+

|

| 330 |

+

- v23.5.7

|

| 331 |

+

- Added the `ad_use_cfg_scale` option. Determines whether to use a separate cfg scale.

|

| 332 |

+

- Changed the default of `ad_enable` from `True` to `False`

|

| 333 |

+

- Changed the default of `ad_model` from `None` to the first model

|

| 334 |

+

- Changed to work with as few as two inputs (ad_enable, ad_model).

|

| 335 |

+

|

| 336 |

+

- v23.5.7.post0

|

| 337 |

+

- Ran `init_controlnet_ext` only when controlnet_exists == True

|

| 338 |

+

- Showed an `ultralytics` warning to users who installed webui directly under the C drive

|

| 339 |

+

|

| 340 |

+

### 2023-05-05 (Children's Day)

|

| 341 |

+

|

| 342 |

+

- v23.5.5

|

| 343 |

+

- Added the `Save images before ADetailer` option

|

| 344 |

+

- Showed an error message if the number of input arguments differs from the length of ALL_ARGS

|

| 345 |

+

- Added installation instructions to README.md

|

| 346 |

+

|

| 347 |

+

- v23.5.6

|

| 348 |

+

- A detailed error message is shown when IndexError occurs in get_args

|

| 349 |

+

- Built extra_params into AdetailerArgs

|

| 350 |

+

- Deep-copied scripts_args

|

| 351 |

+

- Split postprocess_image up slightly

|

| 352 |

+

|

| 353 |

+

- v23.5.6.post0

|

| 354 |

+

- A detailed error message is shown in `init_controlnet_ext`

|

| 355 |

+

|

| 356 |

+

### 2023-05-04

|

| 357 |

+

|

| 358 |

+

- v23.5.4

|

| 359 |

+

- use pydantic for arguments validation

|

| 360 |

+

- revert: ad_model to `None` as default

|

| 361 |

+

- revert: `__future__` imports

|

| 362 |

+

- lazily import yolo and mediapipe

|

| 363 |

+

|

| 364 |

+

### 2023-05-03

|

| 365 |

+

|

| 366 |

+

- v23.5.3.post0

|

| 367 |

+

- remove `__future__` imports

|

| 368 |

+

- change to copy scripts and scripts args

|

| 369 |

+

|

| 370 |

+

- v23.5.3.post1

|

| 371 |

+

- change default ad_model from `None`

|

| 372 |

+

|

| 373 |

+

### 2023-05-02

|

| 374 |

+

|

| 375 |

+

- v23.5.3

|

| 376 |

+

- Remove `None` from model list and add `Enable ADetailer` checkbox.

|

| 377 |

+

- install.py `skip_install` fix.

|

adetailer/LICENSE.md

ADDED

|

@@ -0,0 +1,662 @@

|
