Update README.md

Browse files

README.md

CHANGED

|

@@ -1,9 +1,7 @@

|

|

| 1 |

-

#

|

| 2 |

<a href='https://junzhan2000.github.io/AnyGPT.github.io/'><img src='https://img.shields.io/badge/Project-Page-Green'></a> <a href='https://arxiv.org/pdf/2402.12226.pdf'><img src='https://img.shields.io/badge/Paper-Arxiv-red'></a> [](https://huggingface.co/datasets/fnlp/AnyInstruct)

|

| 3 |

|

| 4 |

-

|

| 5 |

-

<img src="static/images/logo.png" width="16%"> <br>

|

| 6 |

-

</p>

|

| 7 |

|

| 8 |

## Introduction

|

| 9 |

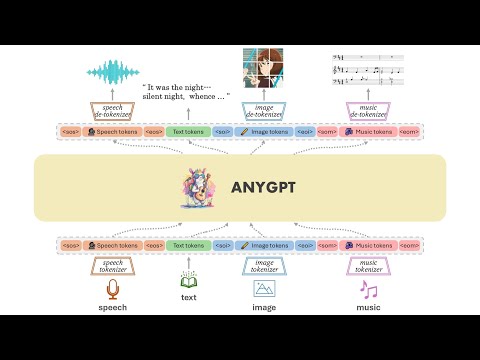

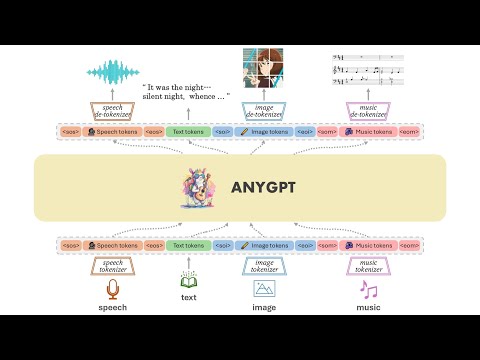

We introduce AnyGPT, an any-to-any multimodal language model that utilizes discrete representations for the unified processing of various modalities, including speech, text, images, and music. The [base model](https://huggingface.co/fnlp/AnyGPT-base) aligns the four modalities, allowing for intermodal conversions between different modalities and text. Furthermore, we constructed the [AnyInstruct](https://huggingface.co/datasets/fnlp/AnyInstruct) dataset based on various generative models, which contains instructions for arbitrary modal interconversion. Trained on this dataset, our [chat model](https://huggingface.co/fnlp/AnyGPT-chat) can engage in free multimodal conversations, where multimodal data can be inserted at will.

|

|

@@ -15,12 +13,6 @@ Demos are shown in [project page](https://junzhan2000.github.io/AnyGPT.github.io

|

|

| 15 |

[](https://www.youtube.com/watch?v=oW3E3pIsaRg)

|

| 16 |

|

| 17 |

|

| 18 |

-

## Open-Source Checklist

|

| 19 |

-

- [x] Base Model

|

| 20 |

-

- [ ] Chat Model

|

| 21 |

-

- [x] Inference Code

|

| 22 |

-

- [x] Instruction Dataset

|

| 23 |

-

|

| 24 |

## Inference

|

| 25 |

|

| 26 |

### Installation

|

|

|

|

| 1 |

+

# Base model for paper "AnyGPT: Unified Multimodal LLM with Discrete Sequence Modeling"

|

| 2 |

<a href='https://junzhan2000.github.io/AnyGPT.github.io/'><img src='https://img.shields.io/badge/Project-Page-Green'></a> <a href='https://arxiv.org/pdf/2402.12226.pdf'><img src='https://img.shields.io/badge/Paper-Arxiv-red'></a> [](https://huggingface.co/datasets/fnlp/AnyInstruct)

|

| 3 |

|

| 4 |

+

|

|

|

|

|

|

|

| 5 |

|

| 6 |

## Introduction

|

| 7 |

We introduce AnyGPT, an any-to-any multimodal language model that utilizes discrete representations for the unified processing of various modalities, including speech, text, images, and music. The [base model](https://huggingface.co/fnlp/AnyGPT-base) aligns the four modalities, allowing for intermodal conversions between different modalities and text. Furthermore, we constructed the [AnyInstruct](https://huggingface.co/datasets/fnlp/AnyInstruct) dataset based on various generative models, which contains instructions for arbitrary modal interconversion. Trained on this dataset, our [chat model](https://huggingface.co/fnlp/AnyGPT-chat) can engage in free multimodal conversations, where multimodal data can be inserted at will.

|

|

|

|

| 13 |

[](https://www.youtube.com/watch?v=oW3E3pIsaRg)

|

| 14 |

|

| 15 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 16 |

## Inference

|

| 17 |

|

| 18 |

### Installation

|