End of training

Files changed:

- README.md +2 -1
- all_results.json +12 -0
- eval_results.json +7 -0
- train_results.json +8 -0
- trainer_state.json +318 -0
- training_eval_loss.png +0 -0
- training_loss.png +0 -0

README.md

CHANGED

@@ -4,6 +4,7 @@ license: apache-2.0
 base_model: Qwen/Qwen2.5-7B
 tags:
 - llama-factory
+- full
 - generated_from_trainer
 model-index:
 - name: Bespoke-Stratos-17k
@@ -15,7 +16,7 @@ should probably proofread and complete it, then remove this comment. -->
 
 # Bespoke-Stratos-17k
 
-This model is a fine-tuned version of [Qwen/Qwen2.5-7B](https://huggingface.co/Qwen/Qwen2.5-7B) on
+This model is a fine-tuned version of [Qwen/Qwen2.5-7B](https://huggingface.co/Qwen/Qwen2.5-7B) on the bespokelabs/Bespoke-Stratos-17k dataset.
 It achieves the following results on the evaluation set:
 - Loss: 0.5591
 

all_results.json

ADDED

@@ -0,0 +1,12 @@

{
    "epoch": 2.9876543209876543,
    "eval_loss": 0.55907142162323,
    "eval_runtime": 31.1269,
    "eval_samples_per_second": 26.215,
    "eval_steps_per_second": 0.418,
    "total_flos": 190042236518400.0,
    "train_loss": 0.506568502460301,
    "train_runtime": 5156.7482,
    "train_samples_per_second": 9.019,
    "train_steps_per_second": 0.07
}
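As a rough consistency check on these metrics, each throughput can be multiplied by its runtime. The Python sketch below does this with the values copied from all_results.json; because the per-second rates are rounded, the results are approximate (about 816 evaluation samples per pass and about 46.5k training samples over the run). Two points are assumptions about the tooling rather than facts stated in this commit: if LLaMA-Factory reports these metrics through the Hugging Face `Trainer`, then `train_samples_per_second` counts examples × `num_train_epochs` (suggesting roughly 15.5k training examples per epoch), and `train_loss` is the mean loss over all optimization steps, not the last logged loss (0.4216 at step 360).

```python
# Values copied verbatim from all_results.json above.
metrics = {
    "epoch": 2.9876543209876543,
    "eval_loss": 0.55907142162323,
    "eval_runtime": 31.1269,
    "eval_samples_per_second": 26.215,
    "eval_steps_per_second": 0.418,
    "total_flos": 190042236518400.0,
    "train_loss": 0.506568502460301,
    "train_runtime": 5156.7482,
    "train_samples_per_second": 9.019,
    "train_steps_per_second": 0.07,
}


def derived_stats(m: dict) -> dict:
    """Back out approximate quantities by multiplying each rate by its runtime.

    The per-second rates are rounded in the JSON, so every result is approximate.
    """
    return {
        # Evaluation samples processed in one eval pass.
        "eval_samples": m["eval_samples_per_second"] * m["eval_runtime"],
        # Training samples counted by the trainer across all epochs
        # (assumption: examples x num_train_epochs, as in the HF Trainer).
        "train_samples": m["train_samples_per_second"] * m["train_runtime"],
        # Final eval loss minus the run-averaged training loss.
        "loss_gap": m["eval_loss"] - m["train_loss"],
        # Wall-clock training time in hours.
        "train_hours": m["train_runtime"] / 3600,
    }


stats = derived_stats(metrics)
for name, value in stats.items():
    print(f"{name}: {value:,.4f}")
```

Note that `loss_gap` compares a final-step evaluation loss with a whole-run training average, so it overstates nothing but also understates how far the late-epoch training loss sits below the evaluation loss.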

eval_results.json

ADDED

@@ -0,0 +1,7 @@

{
    "epoch": 2.9876543209876543,
    "eval_loss": 0.55907142162323,
    "eval_runtime": 31.1269,
    "eval_samples_per_second": 26.215,
    "eval_steps_per_second": 0.418
}

train_results.json

ADDED

@@ -0,0 +1,8 @@

{
    "epoch": 2.9876543209876543,
    "total_flos": 190042236518400.0,
    "train_loss": 0.506568502460301,
    "train_runtime": 5156.7482,
    "train_samples_per_second": 9.019,
    "train_steps_per_second": 0.07
}

trainer_state.json

ADDED

@@ -0,0 +1,318 @@

{
  "best_metric": null,
  "best_model_checkpoint": null,
  "epoch": 2.9876543209876543,
  "eval_steps": 500,
  "global_step": 363,
  "is_hyper_param_search": false,
  "is_local_process_zero": true,
  "is_world_process_zero": true,
  "log_history": [
    {
      "epoch": 0.0823045267489712,
      "grad_norm": 2.3707054376650794,
      "learning_rate": 5e-06,
      "loss": 0.8018,
      "step": 10
    },
    {
      "epoch": 0.1646090534979424,
      "grad_norm": 0.9189518749553744,
      "learning_rate": 5e-06,
      "loss": 0.6615,
      "step": 20
    },
    {
      "epoch": 0.24691358024691357,
      "grad_norm": 0.7181231203254296,
      "learning_rate": 5e-06,
      "loss": 0.6226,
      "step": 30
    },
    {
      "epoch": 0.3292181069958848,
      "grad_norm": 0.8098373994551952,
      "learning_rate": 5e-06,
      "loss": 0.5947,
      "step": 40
    },
    {
      "epoch": 0.411522633744856,
      "grad_norm": 0.6752478716308398,
      "learning_rate": 5e-06,
      "loss": 0.5731,
      "step": 50
    },
    {
      "epoch": 0.49382716049382713,
      "grad_norm": 0.6563536956259921,
      "learning_rate": 5e-06,
      "loss": 0.5748,
      "step": 60
    },
    {
      "epoch": 0.5761316872427984,
      "grad_norm": 0.7032292694552905,
      "learning_rate": 5e-06,
      "loss": 0.5696,
      "step": 70
    },
    {
      "epoch": 0.6584362139917695,
      "grad_norm": 0.7003547749235107,
      "learning_rate": 5e-06,
      "loss": 0.5579,
      "step": 80
    },
    {
      "epoch": 0.7407407407407407,
      "grad_norm": 0.6613096075940125,
      "learning_rate": 5e-06,
      "loss": 0.563,
      "step": 90
    },
    {
      "epoch": 0.823045267489712,
      "grad_norm": 0.5810433314696346,
      "learning_rate": 5e-06,
      "loss": 0.5506,
      "step": 100
    },
    {
      "epoch": 0.9053497942386831,
      "grad_norm": 0.6666365832464547,
      "learning_rate": 5e-06,
      "loss": 0.5526,
      "step": 110
    },
    {
      "epoch": 0.9876543209876543,
      "grad_norm": 0.7058806830798787,
      "learning_rate": 5e-06,
      "loss": 0.5415,
      "step": 120
    },
    {
      "epoch": 0.9958847736625515,
      "eval_loss": 0.5658594369888306,
      "eval_runtime": 31.1396,
      "eval_samples_per_second": 26.205,
      "eval_steps_per_second": 0.417,
      "step": 121
    },
    {
      "epoch": 1.0699588477366255,
      "grad_norm": 0.6935479068001943,
      "learning_rate": 5e-06,
      "loss": 0.5334,
      "step": 130
    },
    {
      "epoch": 1.1522633744855968,
      "grad_norm": 0.760550165297717,
      "learning_rate": 5e-06,
      "loss": 0.5067,
      "step": 140
    },
    {
      "epoch": 1.2345679012345678,
      "grad_norm": 0.643311514826356,
      "learning_rate": 5e-06,
      "loss": 0.4975,
      "step": 150
    },
    {
      "epoch": 1.316872427983539,
      "grad_norm": 0.7180631408695722,
      "learning_rate": 5e-06,
      "loss": 0.4907,
      "step": 160
    },
    {
      "epoch": 1.3991769547325104,
      "grad_norm": 0.6268855948646844,
      "learning_rate": 5e-06,
      "loss": 0.4846,
      "step": 170
    },
    {
      "epoch": 1.4814814814814814,
      "grad_norm": 0.6205279314065159,
      "learning_rate": 5e-06,
      "loss": 0.4857,
      "step": 180
    },
    {
      "epoch": 1.5637860082304527,
      "grad_norm": 0.6432594466375224,
      "learning_rate": 5e-06,
      "loss": 0.4922,
      "step": 190
    },
    {
      "epoch": 1.646090534979424,
      "grad_norm": 0.5837924660934569,
      "learning_rate": 5e-06,
      "loss": 0.485,
      "step": 200
    },
    {
      "epoch": 1.7283950617283952,
      "grad_norm": 0.5800173415658518,
      "learning_rate": 5e-06,
      "loss": 0.4899,
      "step": 210
    },
    {
      "epoch": 1.8106995884773662,
      "grad_norm": 0.5452696697421119,
      "learning_rate": 5e-06,
      "loss": 0.4794,
      "step": 220
    },
    {
      "epoch": 1.8930041152263375,
      "grad_norm": 0.6589276092411267,
      "learning_rate": 5e-06,
      "loss": 0.4925,
      "step": 230
    },
    {
      "epoch": 1.9753086419753085,
      "grad_norm": 0.7099238180212905,
      "learning_rate": 5e-06,
      "loss": 0.486,
      "step": 240
    },
    {
      "epoch": 2.0,
      "eval_loss": 0.552381157875061,
      "eval_runtime": 31.0974,
      "eval_samples_per_second": 26.24,
      "eval_steps_per_second": 0.418,
      "step": 243
    },
    {
      "epoch": 2.05761316872428,
      "grad_norm": 0.6173968011853433,
      "learning_rate": 5e-06,
      "loss": 0.4643,
      "step": 250
    },
    {
      "epoch": 2.139917695473251,
      "grad_norm": 0.6515589754644828,
      "learning_rate": 5e-06,
      "loss": 0.4264,
      "step": 260
    },
    {
      "epoch": 2.2222222222222223,
      "grad_norm": 0.6329003562540839,
      "learning_rate": 5e-06,
      "loss": 0.427,
      "step": 270
    },
    {
      "epoch": 2.3045267489711936,
      "grad_norm": 0.61321586370113,
      "learning_rate": 5e-06,
      "loss": 0.4284,
      "step": 280
    },
    {
      "epoch": 2.386831275720165,
      "grad_norm": 0.5986219957694312,
      "learning_rate": 5e-06,
      "loss": 0.4298,
      "step": 290
    },
    {
      "epoch": 2.4691358024691357,
      "grad_norm": 0.5797625936229965,
      "learning_rate": 5e-06,
      "loss": 0.4332,
      "step": 300
    },
    {
      "epoch": 2.551440329218107,
      "grad_norm": 0.6606165599990808,
      "learning_rate": 5e-06,
      "loss": 0.432,
      "step": 310
    },
    {
      "epoch": 2.633744855967078,
      "grad_norm": 0.6055776178246806,
      "learning_rate": 5e-06,
      "loss": 0.4297,
      "step": 320
    },
    {
      "epoch": 2.7160493827160495,
      "grad_norm": 0.5792227650214353,
      "learning_rate": 5e-06,
      "loss": 0.4301,
      "step": 330
    },
    {
      "epoch": 2.7983539094650207,
      "grad_norm": 0.5678659977310005,
      "learning_rate": 5e-06,
      "loss": 0.4256,
      "step": 340
    },
    {
      "epoch": 2.8806584362139915,
      "grad_norm": 0.5959535146183862,
      "learning_rate": 5e-06,
      "loss": 0.4252,
      "step": 350
    },
    {
      "epoch": 2.962962962962963,
      "grad_norm": 0.624214333713647,
      "learning_rate": 5e-06,
      "loss": 0.4216,
      "step": 360
    },
    {
      "epoch": 2.9876543209876543,
      "eval_loss": 0.55907142162323,
      "eval_runtime": 30.0336,
      "eval_samples_per_second": 27.17,
      "eval_steps_per_second": 0.433,
      "step": 363
    },
    {
      "epoch": 2.9876543209876543,
      "step": 363,
      "total_flos": 190042236518400.0,
      "train_loss": 0.506568502460301,
      "train_runtime": 5156.7482,
      "train_samples_per_second": 9.019,
      "train_steps_per_second": 0.07
    }
  ],
  "logging_steps": 10,
  "max_steps": 363,
  "num_input_tokens_seen": 0,
  "num_train_epochs": 3,
  "save_steps": 500,
  "stateful_callbacks": {
    "TrainerControl": {
      "args": {
        "should_epoch_stop": false,
        "should_evaluate": false,
        "should_log": false,
        "should_save": true,
        "should_training_stop": true
      },
      "attributes": {}
    }
  },
  "total_flos": 190042236518400.0,
  "train_batch_size": 8,
  "trial_name": null,
  "trial_params": null
}
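The `log_history` above mixes periodic training logs (every `logging_steps` = 10 steps), one evaluation entry at the end of each epoch (steps 121, 243 and 363) and a closing summary entry. The Python sketch below separates the two kinds of log and picks the step with the lowest evaluation loss; it is a minimal illustration that relies only on the key names visible in this file, and `load_log_history` and `split_history` are hypothetical helper names, not part of LLaMA-Factory or `transformers`. On these numbers the evaluation loss bottoms out at the end of epoch 2 (0.5524, step 243) and rises slightly to 0.5591 by step 363 while the training loss keeps falling. Since `best_model_checkpoint` is null, the 0.5591 reported in the README presumably corresponds to the final weights rather than to the epoch-2 minimum.

```python
import json
from pathlib import Path


def load_log_history(path: str) -> list[dict]:
    """Read the log_history list from a trainer_state.json file."""
    return json.loads(Path(path).read_text())["log_history"]


def split_history(history: list[dict]):
    """Split log entries into (step, loss) and (step, eval_loss) pairs.

    The end-of-run summary entry carries "train_loss" rather than "loss",
    so it lands in neither list.
    """
    train = [(e["step"], e["loss"]) for e in history if "loss" in e]
    evals = [(e["step"], e["eval_loss"]) for e in history if "eval_loss" in e]
    return train, evals


# Trimmed copy of the log_history above: first and last training logs,
# all three evaluation logs, and the end-of-run summary entry.
sample_history = [
    {"epoch": 0.0823045267489712, "loss": 0.8018, "step": 10},
    {"epoch": 0.9958847736625515, "eval_loss": 0.5658594369888306, "step": 121},
    {"epoch": 2.0, "eval_loss": 0.552381157875061, "step": 243},
    {"epoch": 2.962962962962963, "loss": 0.4216, "step": 360},
    {"epoch": 2.9876543209876543, "eval_loss": 0.55907142162323, "step": 363},
    {"epoch": 2.9876543209876543, "step": 363, "train_loss": 0.506568502460301},
]

train_points, eval_points = split_history(sample_history)
best_step, best_eval = min(eval_points, key=lambda p: p[1])
print(f"lowest eval_loss {best_eval:.4f} at step {best_step}")
# prints: lowest eval_loss 0.5524 at step 243
```

To run it on the real file, pass `load_log_history("trainer_state.json")` to `split_history` instead of the trimmed list.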

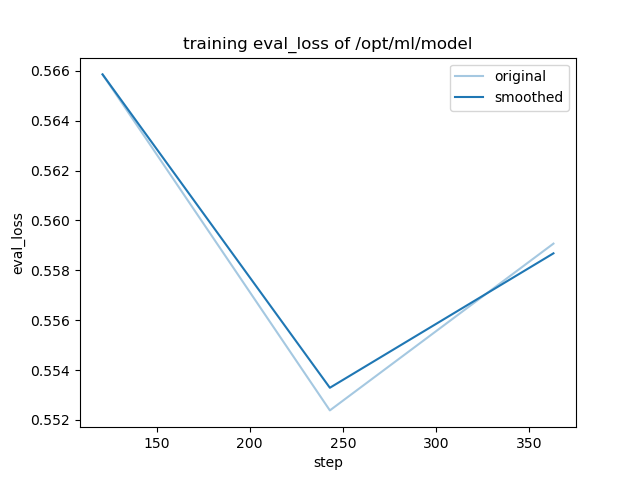

training_eval_loss.png

ADDED


training_loss.png

ADDED
