Update README.md


README.md

CHANGED


@@ -76,54 +76,31 @@ I created this model to make the hands as beautiful as possible.<br>

76   ぜひお試しください (Please try it!) <br>
77   <br>
78   <br>
79 -
80 -
81 -
82 -
83 -

84 - さらに早い生成のテストです<br>
85 -
86 - [設定] (Settings) CFG:4, STEP:6, HiresFix-Denoise:0.5 (GeForce users) <br>
87 - 上の設定で試してみてください(参考プロンプトはファイルの PNG 情報からどうぞ)<br>
88 - Here's a test of even faster generation.<br>
89 - Try the settings above (for reference prompts, see the PNG info embedded in the files).<br>
90 -

91 - [説明] このテストは LCM LORA を組み込んだモデルです[LCM(LORA) 0.5]<br>
92 - その他、epi_Noize-(LORA) などをマイナス適用し生成時のノイズを減じてます<br>
93 - ※FLAT(LORA) をネガティブに入れると精細化しキレイな画像を得られます<br>
94 - ※西洋系モデルを学習元にしたノイズ LORA をマイナス適用すると西洋系の顔立ちを薄められました<br>
95 - ※東洋系モデルを学習元にしたノイズ LORA をプラス適用すると東洋系の顔立ちを濃くできました<br>
96 - ※FreeU によるチャンネル分けもマージ時の層別適用に効果的と思います<br>
97 - 皆さんの「マージ」の参考になれば幸いです<br>

98 - [Description] This test model incorporates LCM LORA [LCM(LORA) 0.5].<br>
99 - In addition, epi_Noize-(LORA) and similar LORAs are applied with negative weight to reduce noise during generation [epi_Noize-(LORA) -1.0].<br>
100 - ※Putting FLAT(LORA) in the negative prompt gives a more detailed, cleaner image.<br>
101 - ※Applying, with negative weight, a noise LORA trained on Western models reduced Western facial features.<br>
102 - ※Applying, with positive weight, a noise LORA trained on East Asian models strengthened East Asian facial features.<br>
103 - ※I think channel separation via FreeU is also effective for layer-wise application during merging.<br>
104 - I hope this is helpful for your own "merging"!<br>
105   <br>
106 -


| 107 |

<br>

|

| 108 |

-

[

|

| 109 |

-

LCM (LORA) 全層適用の高速化は最大 62% になりましたが OUT 層への影響で消えないノイズも残りました<br>

|

| 110 |

-

そこで MID 層のみ LCM (LORA) 適用します、高速化は 25% に留まりますがノイズはキレイに消えます<br>

|

| 111 |

-

結果的にこれが最適解かなと思います ※LCM 0.5 適用の場合です強度を上げればさらに高速化するはずです<br>

|

| 112 |

-

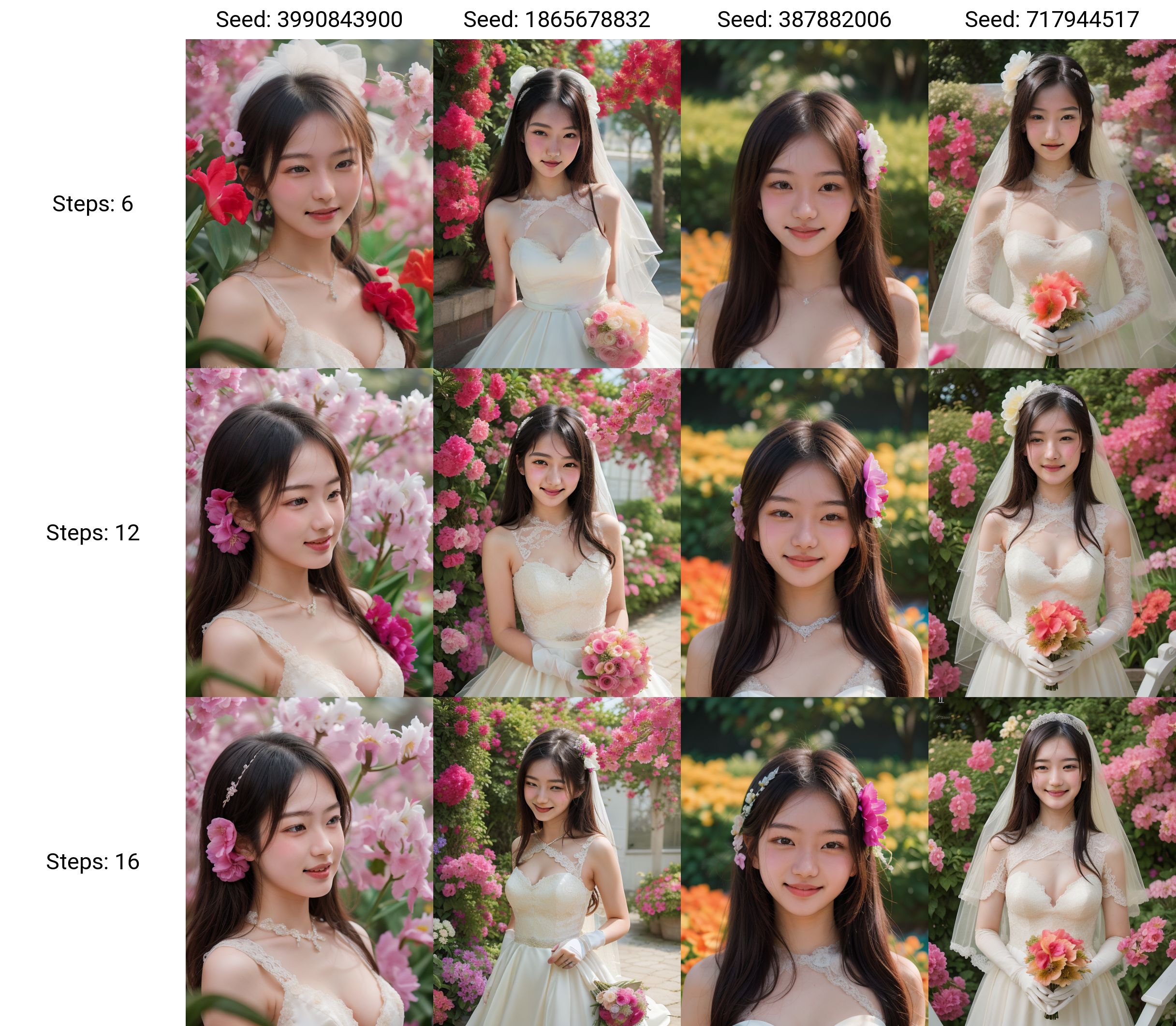

(<<NON LCM STEP:16<<rev 020 LCM ALL 層 STEP:6>>LCM MID Layer STEP:12>>)<br>

|

113 - [LCM Considerations] What we learned from the rev020 test<br>
114 - Applying LCM (LORA) to all layers gave a speedup of up to 62%, but some noise that would not go away remained because of the effect on the OUT layer.<br>
115 - Therefore, LCM (LORA) is applied only to the MID layer. The speedup is limited to 25%, but the noise disappears cleanly.<br>
116 - In the end, I think this is the optimal solution.<br>
117 - ※This is with LCM applied at 0.5; increasing the strength should speed things up further.<br>
118 - (<<NON LCM STEP:16<<rev 020 LCM ALL Layer STEP:6>>LCM MID Layer STEP:12>>)<br>
119   <br>

120 - [おすすめ]
121 - LCM(LORA) をモデルにマージする場合 bM1280ch (FreeU 相当) にすると STEP
122 - 今回のテスト版では STEP:10 以上の場合に破綻のない画像を高頻度で得られました<br>
123   ※bM1280ch = 0,0,0,0,0,0,0,0,1,1,1,1,1,1,1,1,1,1,1,0,0,0,0,0<br>
124   [Recommendation] Efficient LCM usage<br>
125   When merging LCM(LORA) into a model, using bM1280ch (equivalent to FreeU) gives more leeway in the STEP setting.<br>
126 - In this test version, images without breakdowns were obtained at a high rate when STEP:10 or higher was used.<br>
127   ※bM1280ch = 0,0,0,0,0,0,0,0,1,1,1,1,1,1,1,1,1,1,1,0,0,0,0,0<br>
128   <br>
129   <br>


76   ぜひお試しください (Please try it!) <br>
77   <br>
78   <br>
79 + |修正| マージモデル破損と修正<br>

80   <br>
81 + 以前のモデルデータに「Unet 破損」を見つけたため修正しました<br>
82 + rev01A、rev010A、TEST-rev020A、それぞれ非公開とします(ComfyUI 等で生成不能)<br>
83 + <br>
84 + ※上の3モデルについては「マージ不適格」であることを連絡します<br>
85 + <br>
86 + rev010A を修正し、新たに、rev011A、rev011B-LCM、として公開します<br>
87 + <br>

88 + |Fixes| Merge model corruption and fixes<br>
89 + Fixed the "Unet corruption" found in previous model data.<br>
90 + rev01A, rev010A, and TEST-rev020A are now private (they cannot generate images in ComfyUI, etc.).<br>
91 + ※Please note that the three models above are "unsuitable for merging".<br>
92 + rev010A has been fixed and is newly released as rev011A and rev011B-LCM1-5.<br>
93 + <br>
94 +
95 +
96   <br>

97 + [Easy settings] //rev011A// STEP:16~ CFG:5 //rev011B-LCM// STEP:12~ CFG:5 あたりがオススメです (settings around these values are recommended)<br>
98   <br>

99 + [おすすめ] 私的 LCM 活用<br>
100 + LCM(LORA) をモデルにマージする場合 bM1280ch (FreeU 相当) にすると STEP 設定に余裕が生まれました<br>
101   ※bM1280ch = 0,0,0,0,0,0,0,0,1,1,1,1,1,1,1,1,1,1,1,0,0,0,0,0<br>
102   [Recommendation] Efficient LCM usage<br>
103   When merging LCM(LORA) into a model, using bM1280ch (equivalent to FreeU) gives more leeway in the STEP setting.<br>

104   ※bM1280ch = 0,0,0,0,0,0,0,0,1,1,1,1,1,1,1,1,1,1,1,0,0,0,0,0<br>
105   <br>
106   <br>
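The removed rev020 notes report two speedups: 62% with LCM on all layers and 25% with LCM on the MID layer only. The image caption lists STEP:16 without LCM, STEP:6 with LCM on all layers, and STEP:12 with LCM on the MID layer. The README does not define "speedup". If it is read as the share of sampling steps saved against the 16-step baseline, the numbers line up: 10/16 = 62.5% and 4/16 = 25%. This reading is an assumption, and it treats the cost of each step as constant. The same 16 to 12 ratio reappears in the new [Easy settings] for rev011A vs. rev011B-LCM. A minimal sketch of that arithmetic, using a hypothetical helper `step_reduction_pct`:

```python
def step_reduction_pct(baseline_steps: int, steps: int) -> float:
    """Percentage of sampling steps saved relative to a baseline run.

    Assumes (as a simplification) that generation time scales with step count.
    """
    if baseline_steps <= 0:
        raise ValueError("baseline_steps must be positive")
    return (baseline_steps - steps) / baseline_steps * 100


if __name__ == "__main__":
    baseline = 16  # NON LCM STEP:16, taken from the image caption
    for label, steps in [("LCM ALL layers", 6), ("LCM MID layer", 12)]:
        pct = step_reduction_pct(baseline, steps)
        print(f"{label}: STEP:{steps} -> {pct:.1f}% fewer steps")
```

Running it prints 62.5% and 25.0%, consistent with the 62% and 25% quoted in the notes.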

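The README gives bM1280ch only as a 24-value list of 0s and 1s. It does not say which UNet block each position maps to, so the sketch below treats the positions as anonymous indices 0 to 23. It illustrates the generic idea of a block-weighted merge: each block's LoRA delta is scaled by the merge strength times that block's mask value, so blocks marked 0 keep their base weights. Here the strength is 0.5, matching "LCM 0.5". The toy one-number-per-block "model" and the helpers `parse_mask` and `merge_block_weighted` are hypothetical. Real merge tools operate on actual UNet tensors and have their own block layouts.

```python
from typing import Dict, List

# The bM1280ch mask exactly as listed in the README (24 positions).
BM1280CH = "0,0,0,0,0,0,0,0,1,1,1,1,1,1,1,1,1,1,1,0,0,0,0,0"


def parse_mask(text: str) -> List[float]:
    """Turn a comma-separated block-weight string into a list of floats."""
    return [float(v) for v in text.split(",")]


def merge_block_weighted(
    base: Dict[int, List[float]],
    lora_delta: Dict[int, List[float]],
    mask: List[float],
    strength: float,
) -> Dict[int, List[float]]:
    """Hypothetical toy merge: merged = base + strength * mask[i] * delta.

    Keys are block indices 0..len(mask)-1; each value is a flat list of
    weights standing in for that block's tensors. Blocks without a delta
    are left unchanged.
    """
    merged: Dict[int, List[float]] = {}
    for idx, weights in base.items():
        scale = strength * mask[idx]
        delta = lora_delta.get(idx, [0.0] * len(weights))
        merged[idx] = [w + scale * d for w, d in zip(weights, delta)]
    return merged


if __name__ == "__main__":
    mask = parse_mask(BM1280CH)
    active = [i for i, m in enumerate(mask) if m]
    print(f"{len(mask)} positions, active indices: {active}")

    # Toy model: one weight per block, base value 1.0, LoRA delta 0.2.
    base = {i: [1.0] for i in range(len(mask))}
    delta = {i: [0.2] for i in range(len(mask))}
    merged = merge_block_weighted(base, delta, mask, strength=0.5)
    print(merged[0], merged[8])  # a masked-out block vs. an active block
```

With this mask, positions 8 through 18 receive half of the LoRA delta and every other position keeps its base value. This also shows how applying LCM to only a subset of blocks, as the removed MID-layer notes describe, differs from applying it to all of them.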