wyt1234 committed on

Commit c45ebf2 • 1 parent: a347ec0

Update: README.md README_zh.md

- README.md +82 -3
- README_zh.md +89 -0
- assets/Wechat.jpeg +0 -0
- assets/product1.png +0 -0
- assets/product3.png +0 -0
- assets/saofund2.png +0 -0
- assets/sft_demo.png +0 -0
- assets/这个男人能嫁吗.jpg +0 -0
- cli_demo.py +261 -0

README.md

CHANGED

@@ -1,3 +1,82 @@
-
-
-
+
+<!-- <img src="assets/这个男人能嫁吗.jpg" width="900" alt="# MarryWise"> -->
+
+<!-- [](https://github.com/saofund/marrywise-llm/stargazers) -->
+[](LICENSE)
+[](https://github.com/saofund/marrywise-llm/commits/main)
+[](https://modelscope.cn/models/qwen/Qwen2-7B)
+[](https://huggingface.co/saofund/marrywise-7b-lora)
+[](https://x.com/976582772Wyt)
+
+\[ English | [中文](README_zh.md) \]
+
+<!-- **MarryWise: AI-Driven Matchmaking Analysis Tool** -->
+
+| [](https://xn--ciqpnj1l70hxw9az0oyqy.com/) | [](https://can-he-marry.com/) |
+|---|---|
+| [](https://can-he-marry.com/) | [](https://can-he-marry.com/) |
+

+
+## Features
+
+- **AI Matchmaking Analyst**: Uses AI to automatically analyze behavior and speech patterns during dates, revealing hidden motives and true personality traits.
+- **AI Fake Matchmaker Detector**: Helps users spot potentially deceptive behavior while dating, so they can judge whether a partner is genuine and reliable.
+
+## Main Analysis Dimensions
+
+- **Personality Authenticity**: Assesses a potential partner's true character through behavior and speech analysis.
+- **Actual Motives**: Reveals the real intentions behind a suitor's actions so you are not deceived by appearances.
+- **Personal Achievements**: Evaluates a suitor's achievements and abilities to check that their self-description matches reality.
+
+## Fine-tuning Data Example
+
+Fine-tuned from [Qwen2-7B](https://qwen.readthedocs.io/en/latest/getting_started/quickstart.html). An example of the fine-tuning data is shown below (the dataset will be open-sourced soon).
+
+| Input (conditions) | Output (after analysis and rewriting by the LLM) |
+| --- | --- |
+| 1. 32 years old, 178 cm <br> 2. Collects rent; annual income of 15104 <br> 3. 6 houses and a car for commuting <br> 4. Tidy, loves cooking, loves his wife; parents divorced, lives with his mother, who is gentle. | 🔍 Hidden secrets: the truth he doesn't want you to know <br> In the glittering matchmaking market, everyone tries to show their best side. But beware of the unspoken secrets; they could be stumbling blocks on your path to happiness. <br> 🍳 The weight mystery behind the cooking <br> He claims to be a master chef living an easy, comfortable life. But numbers don't lie: his undisclosed weight may hint at an unhealthy lifestyle. While you enjoy his cooking, don't overlook the long-term health risks. <br> 💰 Questions about where the property came from <br> Six properties and a car: a seemingly solid financial foundation. But where did these assets come from? Being a demolition-compensation household may be a sign of luck, or it may indicate a lack of ambition. <br> 👨‍👩‍👦 The complexity of family relationships <br> He praises his mother as gentle and easy to get along with, but does that mean he cannot be independent? The mother-son relationship in a single-parent family may be more complex than it appears and could affect future family harmony. <br> 💍 The "devoted husband" label trap <br> "Loves his wife" sounds perfect, but beware: it could be a psychological tactic. Don't be taken in by this label before you understand his true intentions. <br> 🔍 Deep dive: what are his real motives? <br> He understands women, so why is he still single? There may be hidden secrets beneath his perfect exterior. Lift the veil and see his true motives before you decide. |
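The fine-tuning dataset is not published yet, and the README does not say which data format was used. As a hedged sketch, assuming the pairs were stored in the Alpaca format that LLaMA-Factory accepts (a JSON list of records with `instruction`, `input` and `output` fields), one table row could be converted as follows. Using the role prompt from the setup note as the `instruction` and the numbered conditions as the `input` is an assumption; `INSTRUCTION` and `to_alpaca_record` are hypothetical names.

```python
import json
from typing import Dict, List

# Assumption: the role prompt from the README note is used as the Alpaca "instruction".
INSTRUCTION = (
    'Your role is a matchmaking condition analyst, specializing in identifying the '
    '"hidden" conditions the man has not mentioned and analyzing the "secrets not '
    'mentioned" in matchmaking.'
)


def to_alpaca_record(conditions: List[str], analysis: str) -> Dict[str, str]:
    """Turn one input/output pair from the table into an Alpaca-style record."""
    # Re-create the "1. ... 2. ..." numbering used in the table's input column.
    numbered = " ".join(f"{i}. {c}" for i, c in enumerate(conditions, start=1))
    return {"instruction": INSTRUCTION, "input": numbered, "output": analysis}


if __name__ == "__main__":
    record = to_alpaca_record(
        ["32 years old, 178 cm", "Collects rent; annual income of 15104",
         "6 houses and a car for commuting"],
        "🔍 Hidden secrets: the truth he doesn't want you to know ...",
    )
    # A dataset file would hold a JSON list of such records.
    print(json.dumps([record], ensure_ascii=False, indent=2))
```

A file of such records would then need to be registered in LLaMA-Factory's `data/dataset_info.json` before training.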

|

| 37 |

+

|

| 38 |

+

## Local Setup

|

| 39 |

+

|

| 40 |

+

##### Detailed Steps:

|

| 41 |

+

|

| 42 |

+

```shell

|

| 43 |

+

# Download Qwen2-7B-Instruct model: https://modelscope.cn/models/qwen/Qwen2-7B/files

|

| 44 |

+

git lfs install

|

| 45 |

+

git clone https://www.modelscope.cn/qwen/Qwen2-7B.git

|

| 46 |

+

|

| 47 |

+

# Download lora weights

|

| 48 |

+

|

| 49 |

+

# Install LLaMA-Factory

|

| 50 |

+

git clone --depth 1 https://github.com/hiyouga/LLaMA-Factory.git

|

| 51 |

+

cd LLaMA-Factory

|

| 52 |

+

pip install -e ".[torch,metrics]" # Install dependencies, follow the official instructions

|

| 53 |

+

|

| 54 |

+

# Use LLaMA-Factory to merge lora weights

|

| 55 |

+

# Requires GPU, approximately 12G VRAM usage

|

| 56 |

+

llamafactory-cli export \

|

| 57 |

+

--model_name_or_path Qwen2-7B-Instruct \ # The just downloaded Qwen2-7B weights

|

| 58 |

+

--adapter_name_or_path output_qwen\ # Path to lora weights

|

| 59 |

+

--template qwen \ # Default

|

| 60 |

+

--finetuning_type lora \ # Default

|

| 61 |

+

--export_dir lora_full_param_model \ # Output path for full weights

|

| 62 |

+

--export_size 2 \ # Default

|

| 63 |

+

--export_legacy_format False # Default

|

| 64 |

+

|

| 65 |

+

# Official Qwen2 inference test script, replace the weight path with the merged path

|

| 66 |

+

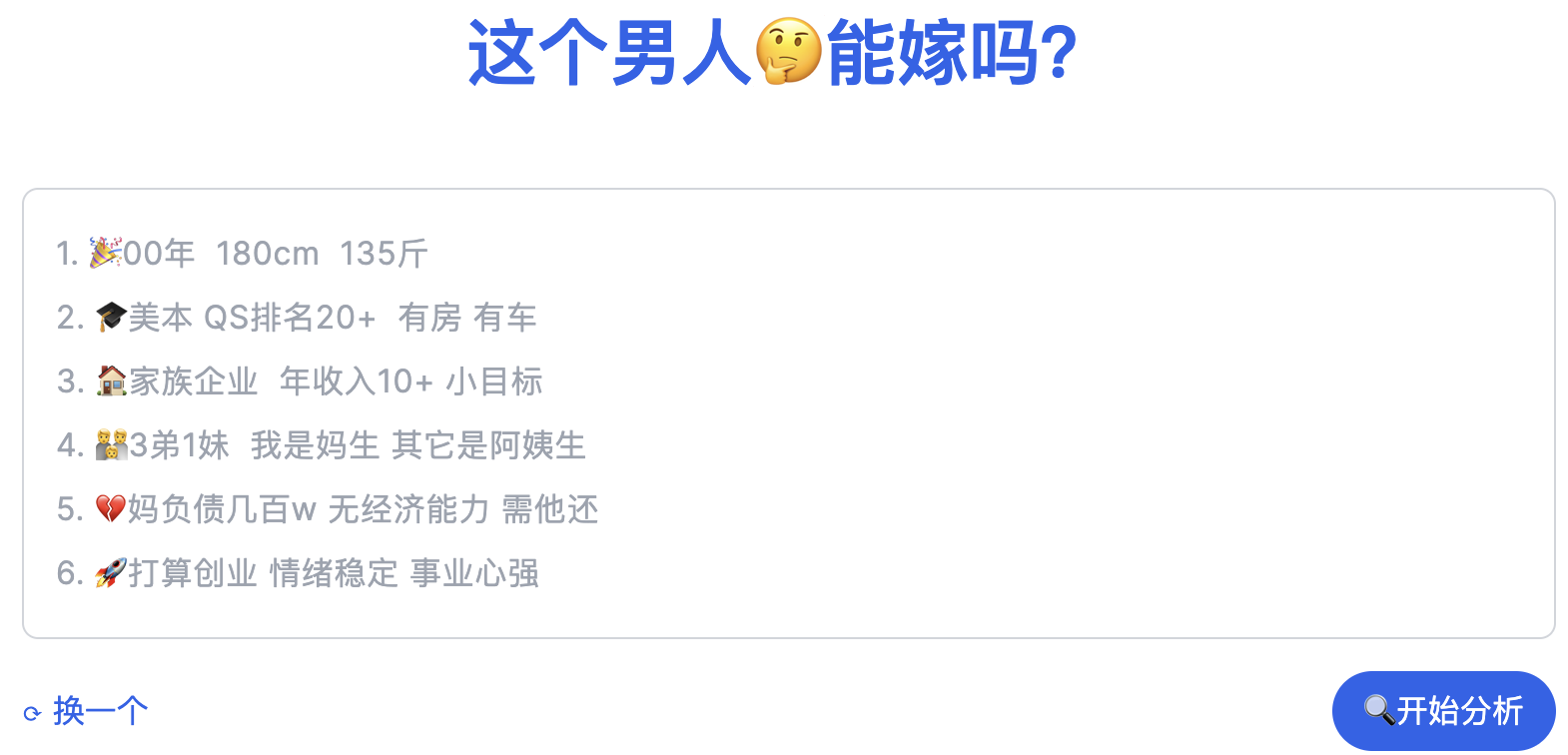

python cli_demo.py -c path_to_merged_weights # Approximately 15G VRAM

|

| 67 |

+

|

| 68 |

+

# Note: Due to the "style" characteristics of lora fine-tuning, specific prompt words need to be added at the beginning of the question:

|

| 69 |

+

# Your role is a matchmaking condition analyst, specializing in identifying the "hidden" conditions not mentioned by the male party, analyzing the "secrets not mentioned" in matchmaking. xxxx (followed by specific conditions)

|

| 70 |

+

|

| 71 |

+

```
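The `llamafactory-cli export` step folds the LoRA update into the base weights, so the merged checkpoint loads like an ordinary model with no adapter attached. Under the standard LoRA formulation, each adapted weight matrix becomes W + (alpha / r) · B·A, where A is the r × d_in "down" matrix and B the d_out × r "up" matrix. The dependency-free sketch below shows only that arithmetic on toy matrices; it is not LLaMA-Factory's implementation, and `matmul` and `merge_lora` are hypothetical helpers.

```python
from typing import List

Matrix = List[List[float]]


def matmul(x: Matrix, y: Matrix) -> Matrix:
    """Plain-Python product of an (n x m) and an (m x p) matrix."""
    return [[sum(x[i][t] * y[t][j] for t in range(len(y)))
             for j in range(len(y[0]))]
            for i in range(len(x))]


def merge_lora(w: Matrix, a: Matrix, b: Matrix, alpha: float) -> Matrix:
    """Fold a rank-r LoRA update into the frozen base weight W.

    w: (d_out x d_in) base weight
    a: (r x d_in) LoRA down-projection
    b: (d_out x r) LoRA up-projection
    alpha: LoRA scaling hyperparameter; the update is scaled by alpha / r
    """
    r = len(a)
    scale = alpha / r
    delta = matmul(b, a)  # (d_out x d_in), rank at most r
    return [[w[i][j] + scale * delta[i][j] for j in range(len(w[0]))]
            for i in range(len(w))]


if __name__ == "__main__":
    w = [[1.0, 0.0], [0.0, 1.0]]  # toy 2x2 base weight
    a = [[1.0, 2.0]]              # rank r = 1
    b = [[0.5], [1.0]]
    print(merge_lora(w, a, b, alpha=2.0))
```

For this toy input the script prints `[[2.0, 2.0], [2.0, 5.0]]`. Because the merged matrix has the same shape as the original, inference no longer needs the adapter, which is why `cli_demo.py` can load the exported directory directly.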

+##### Local CLI Result:
+<img src="assets/sft_demo.png" width="500" alt="CLI Result">
+
+#### Contact the Author
+
+For dataset access, models, algorithms, technical discussion, and collaborative development, feel free to add the author on WeChat.
+
+| Author's WeChat QR Code | sáo Fund Sponsorship |
+|---|---|
+|  |  |
+| For dataset access, models, algorithms, technical discussion, and collaborative development, feel free to add the author on WeChat. | Sponsored by sáo Fund. Thank you. |

README_zh.md

ADDED

@@ -0,0 +1,89 @@
+
+<!-- <img src="assets/这个男人能嫁吗.jpg" width="900" alt="# MarryWise"> -->
+
+<!-- [](https://github.com/saofund/marrywise-llm/stargazers) -->
+[](LICENSE)
+[](https://github.com/saofund/marrywise-llm/commits/main)
+[](https://modelscope.cn/models/qwen/Qwen2-7B)
+[](https://huggingface.co/saofund/marrywise-7b-lora)
+[](https://x.com/976582772Wyt)
+
+\[ [English](README.md) | 中文 \]
+
+<!-- **MarryWise: AI-Driven Matchmaking Analysis Tool** -->
+
+
+| [](https://xn--ciqpnj1l70hxw9az0oyqy.com/) | [](https://can-he-marry.com/) |
+|---|---|
+| [](https://can-he-marry.com/) | [](https://can-he-marry.com/) |

+
+
+## Features
+
+- **AI Matchmaking Analyst**: Uses advanced AI to automatically analyze behavior and speech patterns during dates, revealing hidden motives and true personality traits.
+- **AI Fake Matchmaker Detector**: Helps users identify potential deception while dating, so they can judge whether a partner is genuine and reliable.
+
+## Main Analysis Dimensions
+
+- **Personality Authenticity**: Assesses a potential partner's true character through behavior and speech analysis.
+- **Actual Motives**: Reveals the real intentions behind a suitor's actions so you are not misled by appearances.
+- **Personal Achievements**: Evaluates a suitor's achievements and abilities to check that their description matches reality.
+
+
+## Fine-tuning Data Example
+
+Fine-tuned from [Qwen2-7B](https://qwen.readthedocs.io/en/latest/getting_started/quickstart.html); example of the fine-tuning data (to be open-sourced soon).
+
+
+| Input (conditions) | Output (after analysis and rewriting by the LLM) |
+| --- | --- |
+| 1. 32 years old, 178 cm <br> 2. Collects rent; annual income of 15104 <br> 3. 6 houses and a car for commuting <br> 4. Tidy, loves cooking, loves his wife; parents divorced, lives with his mother, who is gentle. | 🔍 Hidden secrets: the truth he doesn't want you to know <br> In the glittering matchmaking market, everyone tries to show their best side. But watch out for the unspoken secrets; they may be stumbling blocks on your road to happiness. <br> 🍳 The weight mystery behind the cooking <br> He calls himself a master chef who lives an easy, carefree life. But numbers don't lie: his unmentioned weight may hint at an unhealthy lifestyle. While you enjoy his cooking, don't ignore the long-term health risks. <br> 💰 Questions about where the property came from <br> Six properties and a car: a seemingly solid financial base. But where did these assets come from? Being a demolition-compensation household may be a sign of luck, or a sign of a lack of ambition. <br> 👨‍👩‍👦 The complexity of family relationships <br> He praises his mother as gentle and easy to get along with, but does that mean he cannot stand on his own? In a single-parent family, the mother-son relationship may be far more complex than it looks, and it could affect future family harmony. <br> 💍 The trap of the "devoted husband" label <br> "Loves his wife" sounds perfect, but beware: it may be a psychological tactic. Don't be taken in by the label before you understand his true intentions. <br> 🔍 Digging deeper: what are his real motives? <br> He understands women, so why is he still single? Secrets may hide beneath his perfect exterior. Before you decide, lift the veil and see his true motives clearly. |

|

| 40 |

+

|

| 41 |

+

|

| 42 |

+

## 本地启动

|

| 43 |

+

|

| 44 |

+

##### 详细步骤:

|

| 45 |

+

|

| 46 |

+

```shell

|

| 47 |

+

# 下载Qwen2-7B-Instruct模型:https://modelscope.cn/models/qwen/Qwen2-7B/files

|

| 48 |

+

git lfs install

|

| 49 |

+

git clone https://www.modelscope.cn/qwen/Qwen2-7B.git

|

| 50 |

+

|

| 51 |

+

# 下载lora权重

|

| 52 |

+

|

| 53 |

+

# 安装 LLaMA-Factory

|

| 54 |

+

git clone --depth 1 https://github.com/hiyouga/LLaMA-Factory.git

|

| 55 |

+

cd LLaMA-Factory

|

| 56 |

+

pip install -e ".[torch,metrics]" # 安装依赖,这里最好按官方乖乖装完

|

| 57 |

+

|

| 58 |

+

# 使用LLaMA-Factory 合并lora权重

|

| 59 |

+

# 需要GPU,大概12G显存占用

|

| 60 |

+

llamafactory-cli export \

|

| 61 |

+

--model_name_or_path Qwen2-7B-Instruct \ # 刚下载的Qwen2-7B权重

|

| 62 |

+

--adapter_name_or_path output_qwen\ # lora权重路径

|

| 63 |

+

--template qwen \ # 默认

|

| 64 |

+

--finetuning_type lora \ # 默认

|

| 65 |

+

--export_dir lora_full_param_model \ # 完整权重的输出路径

|

| 66 |

+

--export_size 2 \ # 默认

|

| 67 |

+

--export_legacy_format False # 默认

|

| 68 |

+

|

| 69 |

+

# Qwen2的官方推理测试脚本,替换权重路径为刚才的合并后的路径

|

| 70 |

+

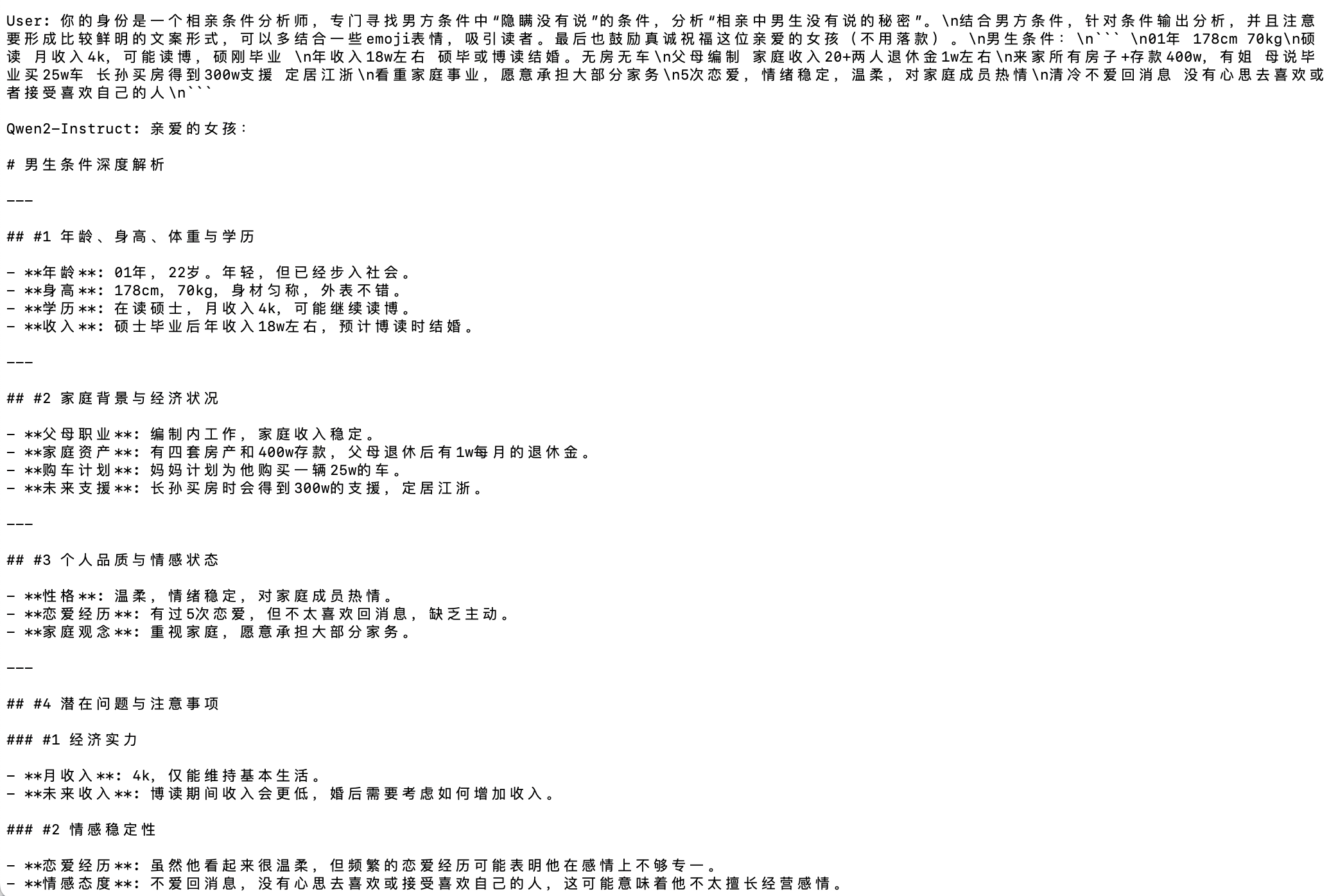

python cli_demo.py -c 合并后的权重路径 # 大概15G显存

|

| 71 |

+

|

| 72 |

+

# 请注意,由于lora微调的“风格”特性,需要在问题的开头加入特定提示词:

|

| 73 |

+

# 你的身份是一个相亲条件分析师,专门寻找男方条件中“隐瞒没有说”的条件,分析“相亲中男生没有说的秘密”。xxxx(后面跟具体条件)

|

| 74 |

+

|

| 75 |

+

```
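The note above means every question must start with the role prompt. A minimal sketch of doing that programmatically, mirroring how `_chat_stream` in `cli_demo.py` assembles its message list (system message, then alternating user/assistant turns, then the new query). The prompt string is copied verbatim from the note; whether the adapter was trained on exactly this wording, punctuation included, is an assumption. `build_query` and `build_conversation` are hypothetical helpers.

```python
from typing import Dict, List, Tuple

# Role prompt copied from the README note; it must come before the conditions.
ROLE_PROMPT = "你的身份是一个相亲条件分析师,专门寻找男方条件中“隐瞒没有说”的条件,分析“相亲中男生没有说的秘密”。"


def build_query(conditions: str) -> str:
    """Prefix the user's conditions with the role prompt the LoRA adapter expects."""
    return ROLE_PROMPT + conditions


def build_conversation(conditions: str,
                       history: List[Tuple[str, str]]) -> List[Dict[str, str]]:
    """Assemble chat messages in the same order as cli_demo.py's _chat_stream."""
    conversation = [{"role": "system", "content": "You are a helpful assistant."}]
    for past_query, past_response in history:
        conversation.append({"role": "user", "content": past_query})
        conversation.append({"role": "assistant", "content": past_response})
    conversation.append({"role": "user", "content": build_query(conditions)})
    return conversation


if __name__ == "__main__":
    messages = build_conversation("1. 32岁,178 2. 收房租,年收入15104", history=[])
    for message in messages:
        print(message["role"], "->", message["content"])
```

The resulting list has the shape that `cli_demo.py` passes to `tokenizer.apply_chat_template(..., add_generation_prompt=True)`.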

+
+
+##### Local CLI Result:
+<img src="assets/sft_demo.png" width="500" alt="CLI Result">
+
+
+#### Feel Free to Contact the Author
+
+For dataset access, models, algorithms, technical discussion, and collaborative development, feel free to add the author on WeChat.
+
+| Author's WeChat QR Code | sáo Fund Sponsorship |
+|---|---|
+|  |  |
+| For dataset access, models, algorithms, technical discussion, and collaborative development, feel free to add the author on WeChat. | Sponsored by sáo Fund. Thank you. |

assets/Wechat.jpeg

ADDED

assets/product1.png

ADDED

assets/product3.png

ADDED

assets/saofund2.png

ADDED

assets/sft_demo.png

ADDED

assets/这个男人能嫁吗.jpg

ADDED

cli_demo.py

ADDED

@@ -0,0 +1,261 @@
+"""A simple command-line interactive chat demo."""
+
+import argparse
+import os
+import platform
+import shutil
+from copy import deepcopy
+from threading import Thread
+
+import torch
+from transformers import AutoModelForCausalLM, AutoTokenizer, TextIteratorStreamer
+from transformers.trainer_utils import set_seed
+
+DEFAULT_CKPT_PATH = 'Qwen/Qwen2-7B-Instruct'
+
+_WELCOME_MSG = '''\
+Welcome to use Qwen2-Instruct model, type text to start chat, type :h to show command help.
+(欢迎使用 Qwen2-Instruct 模型,输入内容即可进行对话,:h 显示命令帮助。)
+
+Note: This demo is governed by the original license of Qwen2.
+We strongly advise users not to knowingly generate or allow others to knowingly generate harmful content, including hate speech, violence, pornography, deception, etc.
+(注:本演示受Qwen2的许可协议限制。我们强烈建议,用户不应传播及不应允许他人传播以下内容,包括但不限于仇恨言论、暴力、色情、欺诈相关的有害信息。)
+'''
+_HELP_MSG = '''\
+Commands:
+    :help / :h              Show this help message              显示帮助信息
+    :exit / :quit / :q      Exit the demo                       退出Demo
+    :clear / :cl            Clear screen                        清屏
+    :clear-history / :clh   Clear history                       清除对话历史
+    :history / :his         Show history                        显示对话历史
+    :seed                   Show current random seed            显示当前随机种子
+    :seed <N>               Set random seed to <N>              设置随机种子
+    :conf                   Show current generation config      显示生成配置
+    :conf <key>=<value>     Change generation config            修改生成配置
+    :reset-conf             Reset generation config             重置生成配置
+'''
+_ALL_COMMAND_NAMES = [
+    'help', 'h', 'exit', 'quit', 'q', 'clear', 'cl', 'clear-history', 'clh', 'history', 'his',
+    'seed', 'conf', 'reset-conf',
+]
+
+
+def _setup_readline():
+    try:
+        import readline
+    except ImportError:
+        return
+
+    _matches = []
+
+    def _completer(text, state):
+        nonlocal _matches
+
+        if state == 0:
+            _matches = [cmd_name for cmd_name in _ALL_COMMAND_NAMES if cmd_name.startswith(text)]
+        if 0 <= state < len(_matches):
+            return _matches[state]
+        return None
+
+    readline.set_completer(_completer)
+    readline.parse_and_bind('tab: complete')
+
+
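`_completer` follows the protocol of Python's `readline` module: on Tab, readline calls the completer with the typed text and `state = 0, 1, 2, ...`, collecting candidates until the completer returns `None`. The sketch below reproduces that logic without importing `readline` (which is unavailable on stock Windows builds, hence the `ImportError` fallback above) and drives it the way readline would. `make_completer` and `all_completions` are hypothetical helpers for illustration.

```python
from typing import Callable, List, Optional

COMMANDS = ['help', 'h', 'exit', 'quit', 'q', 'clear', 'cl', 'clear-history', 'clh',
            'history', 'his', 'seed', 'conf', 'reset-conf']

Completer = Callable[[str, int], Optional[str]]


def make_completer(names: List[str]) -> Completer:
    """Build a stateful completer equivalent to cli_demo.py's _completer."""
    matches: List[str] = []

    def completer(text: str, state: int) -> Optional[str]:
        nonlocal matches
        if state == 0:  # first call for this text: recompute the candidate list
            matches = [name for name in names if name.startswith(text)]
        return matches[state] if state < len(matches) else None

    return completer


def all_completions(completer: Completer, text: str) -> List[str]:
    """Call the completer with state = 0, 1, 2, ... until it returns None, as readline does."""
    results: List[str] = []
    state = 0
    while (candidate := completer(text, state)) is not None:
        results.append(candidate)
        state += 1
    return results


if __name__ == "__main__":
    print(all_completions(make_completer(COMMANDS), "cl"))
```

Recomputing the matches only when `state == 0` is what makes the closure cheap: later calls for the same text just index into the cached list.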

+def _load_model_tokenizer(args):
+    tokenizer = AutoTokenizer.from_pretrained(
+        args.checkpoint_path, resume_download=True,
+    )
+
+    if args.cpu_only:
+        device_map = "cpu"
+    else:
+        device_map = "auto"
+
+    model = AutoModelForCausalLM.from_pretrained(
+        args.checkpoint_path,
+        torch_dtype="auto",
+        device_map=device_map,
+        resume_download=True,
+    ).eval()
+    model.generation_config.max_new_tokens = 2048    # For chat.
+
+    return model, tokenizer
+
+
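With `torch_dtype="auto"`, the weights load in the dtype recorded in the checkpoint's config, so their memory footprint is roughly parameter count × bytes per parameter. The sketch below does that arithmetic. The figure of about 7.6 billion parameters for a Qwen2-7B-class model and the assumption that the merged checkpoint is stored in bfloat16 are approximations, not values taken from this repository; the estimate also excludes the KV cache and activations, which grow with context length and `max_new_tokens` (2048 here).

```python
from typing import Dict

# Bytes needed to store one parameter in common dtypes.
BYTES_PER_PARAM: Dict[str, int] = {"float32": 4, "bfloat16": 2, "float16": 2, "int8": 1}


def weight_memory_gib(num_params: float, dtype: str) -> float:
    """Memory for the model weights alone, in GiB (excludes KV cache and activations)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1024 ** 3


if __name__ == "__main__":
    # Assumption: roughly 7.6 billion parameters for a Qwen2-7B-class model.
    params = 7.6e9
    for dtype in BYTES_PER_PARAM:
        print(f"{dtype:>9}: {weight_memory_gib(params, dtype):5.1f} GiB")
```

In bfloat16 this comes to about 14.2 GiB (15.2 GB in decimal units), which is consistent with the README's "roughly 15 GB of VRAM" for `cli_demo.py`. Loading in float32 would roughly double it, and `--cpu-only` moves the same footprint into system RAM.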

+def _gc():
+    import gc
+    gc.collect()
+    if torch.cuda.is_available():
+        torch.cuda.empty_cache()
+
+
+def _clear_screen():
+    if platform.system() == "Windows":
+        os.system("cls")
+    else:
+        os.system("clear")
+
+
+def _print_history(history):
+    terminal_width = shutil.get_terminal_size()[0]
+    print(f'History ({len(history)})'.center(terminal_width, '='))
+    for index, (query, response) in enumerate(history):
+        print(f'User[{index}]: {query}')
+        print(f'QWen[{index}]: {response}')
+    print('=' * terminal_width)
+
+
+def _get_input() -> str:
+    while True:
+        try:
+            message = input('User> ').strip()
+        except UnicodeDecodeError:
+            print('[ERROR] Encoding error in input')
+            continue
+        except KeyboardInterrupt:
+            exit(1)
+        if message:
+            return message
+        print('[ERROR] Query is empty')
+
+
+def _chat_stream(model, tokenizer, query, history):
+    conversation = [
+        {'role': 'system', 'content': 'You are a helpful assistant.'},
+    ]
+    for query_h, response_h in history:
+        conversation.append({'role': 'user', 'content': query_h})
+        conversation.append({'role': 'assistant', 'content': response_h})
+    conversation.append({'role': 'user', 'content': query})
+    inputs = tokenizer.apply_chat_template(
+        conversation,
+        add_generation_prompt=True,
+        return_tensors='pt',
+    )
+    inputs = inputs.to(model.device)
+    streamer = TextIteratorStreamer(tokenizer=tokenizer, skip_prompt=True, timeout=60.0, skip_special_tokens=True)
+    generation_kwargs = dict(
+        input_ids=inputs,
+        streamer=streamer,
+    )
+    thread = Thread(target=model.generate, kwargs=generation_kwargs)
+    thread.start()
+
+    for new_text in streamer:
+        yield new_text
+
+
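`_chat_stream` runs `model.generate` on a background `Thread` and iterates over a `TextIteratorStreamer` on the main thread, so text can be printed as it is produced. The stdlib-only sketch below illustrates that producer/consumer pattern with a queue and an end-of-stream sentinel. It is a simplified stand-in in the spirit of the streamer, not the transformers implementation; `QueueStreamer`, `fake_generate` and `chat_stream` are hypothetical names, and `fake_generate` replaces the real model.

```python
import queue
import threading
from typing import Iterator, List

_END = object()  # sentinel marking the end of generation


class QueueStreamer:
    """Iterator fed by a producer thread; a simplified take on a text-iterator streamer."""

    def __init__(self, timeout: float = 60.0) -> None:
        self._queue: "queue.Queue[object]" = queue.Queue()
        self._timeout = timeout

    def put(self, text: str) -> None:
        self._queue.put(text)

    def end(self) -> None:
        self._queue.put(_END)

    def __iter__(self) -> Iterator[str]:
        while True:
            # Raises queue.Empty if the producer stalls longer than the timeout.
            item = self._queue.get(timeout=self._timeout)
            if item is _END:
                return
            yield item


def fake_generate(chunks: List[str], streamer: QueueStreamer) -> None:
    """Hypothetical stand-in for model.generate: push text chunks, then signal the end."""
    for chunk in chunks:
        streamer.put(chunk)
    streamer.end()


def chat_stream(chunks: List[str]) -> Iterator[str]:
    streamer = QueueStreamer()
    thread = threading.Thread(target=fake_generate, args=(chunks, streamer))
    thread.start()
    yield from streamer  # consume on the caller's thread as chunks arrive
    thread.join()


if __name__ == "__main__":
    for piece in chat_stream(["Hello", ", ", "world"]):
        print(piece, end="", flush=True)
    print()
```

Unlike the demo, this sketch joins the producer thread after the stream ends. The timeout plays the same role as `timeout=60.0` in `_chat_stream`: if generation hangs, the consumer raises instead of blocking forever.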

+def main():
+    parser = argparse.ArgumentParser(
+        description='QWen2-Instruct command-line interactive chat demo.')
+    parser.add_argument("-c", "--checkpoint-path", type=str, default=DEFAULT_CKPT_PATH,
+                        help="Checkpoint name or path, default to %(default)r")
+    parser.add_argument("-s", "--seed", type=int, default=1234, help="Random seed")
+    parser.add_argument("--cpu-only", action="store_true", help="Run demo with CPU only")
+    args = parser.parse_args()
+
+    history, response = [], ''
+
+    model, tokenizer = _load_model_tokenizer(args)
+    orig_gen_config = deepcopy(model.generation_config)
+
+    _setup_readline()
+
+    _clear_screen()
+    print(_WELCOME_MSG)
+
+    seed = args.seed
+
+    while True:
+        query = _get_input()
+
+        # Process commands.
+        if query.startswith(':'):
+            command_words = query[1:].strip().split()
+            if not command_words:
+                command = ''
+            else:
+                command = command_words[0]
+
+            if command in ['exit', 'quit', 'q']:
+                break
+            elif command in ['clear', 'cl']:
+                _clear_screen()
+                print(_WELCOME_MSG)
+                _gc()
+                continue
+            elif command in ['clear-history', 'clh']:
+                print(f'[INFO] All {len(history)} history cleared')
+                history.clear()
+                _gc()
+                continue
+            elif command in ['help', 'h']:
+                print(_HELP_MSG)
+                continue
+            elif command in ['history', 'his']:
+                _print_history(history)
+                continue
+            elif command in ['seed']:
+                if len(command_words) == 1:
+                    print(f'[INFO] Current random seed: {seed}')
+                    continue
+                else:
+                    new_seed_s = command_words[1]
+                    try:
+                        new_seed = int(new_seed_s)
+                    except ValueError:
+                        print(f'[WARNING] Fail to change random seed: {new_seed_s!r} is not a valid number')
+                    else:
+                        print(f'[INFO] Random seed changed to {new_seed}')
+                        seed = new_seed
+                    continue
+            elif command in ['conf']:
+                if len(command_words) == 1:
+                    print(model.generation_config)
+                else:
+                    for key_value_pairs_str in command_words[1:]:
+                        eq_idx = key_value_pairs_str.find('=')
+                        if eq_idx == -1:
+                            print('[WARNING] format: <key>=<value>')
+                            continue
+                        conf_key, conf_value_str = key_value_pairs_str[:eq_idx], key_value_pairs_str[eq_idx + 1:]
+                        try:
+                            conf_value = eval(conf_value_str)
+                        except Exception as e:
+                            print(e)
+                            continue
+                        else:
+                            print(f'[INFO] Change config: model.generation_config.{conf_key} = {conf_value}')
+                            setattr(model.generation_config, conf_key, conf_value)
+                continue
+            elif command in ['reset-conf']:
+                print('[INFO] Reset generation config')
+                model.generation_config = deepcopy(orig_gen_config)
+                print(model.generation_config)
+                continue
+            else:
+                # As normal query.
+                pass
+
+        # Run chat.
+        set_seed(seed)
+        _clear_screen()
+        print(f"\nUser: {query}")
+        print(f"\nQwen2-Instruct: ", end="")
+        try:
+            partial_text = ''
+            for new_text in _chat_stream(model, tokenizer, query, history):
+                print(new_text, end='', flush=True)
+                partial_text += new_text
+            response = partial_text
+            print()
+
+        except KeyboardInterrupt:
+            print('[WARNING] Generation interrupted')
+            continue
+
+        history.append((query, response))
+
+
+if __name__ == "__main__":
+    main()
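The `:conf <key>=<value>` command turns each value string into a Python object with `eval`, which will execute any expression typed at the prompt. If only literal values (numbers, strings, booleans, `None`, containers) are needed, `ast.literal_eval` is a safer drop-in. The sketch below is a hypothetical `parse_conf_args` helper, not part of the commit, that parses the command words the same way and collects errors instead of printing them.

```python
import ast
from typing import Any, Dict, List, Tuple


def parse_conf_args(words: List[str]) -> Tuple[Dict[str, Any], List[str]]:
    """Parse ':conf key=value ...' words into updates, accepting Python literals only."""
    updates: Dict[str, Any] = {}
    errors: List[str] = []
    for pair in words:
        key, sep, value_str = pair.partition('=')  # split on the first '='
        if not sep or not key:
            errors.append(f'format: <key>=<value> (got {pair!r})')
            continue
        try:
            # literal_eval rejects calls, names and attribute access.
            updates[key] = ast.literal_eval(value_str)
        except (ValueError, SyntaxError) as exc:
            errors.append(f'{key}: {exc}')
    return updates, errors


if __name__ == "__main__":
    updates, errors = parse_conf_args(["temperature=0.7", "top_p=0.8", "do_sample=True", "bad"])
    print(updates)
    print(errors)
```

Here `updates` becomes `{'temperature': 0.7, 'top_p': 0.8, 'do_sample': True}` and `errors` holds one format message for `bad`; each accepted pair could then be applied with `setattr(model.generation_config, key, value)` as the demo does.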