update demo.py

demo.py

@@ -98,256 +98,247 @@ example_videos = gr.components.Dataset(

|

|

| 98 |

)

|

| 99 |

|

| 100 |

|

| 101 |

-

|

| 102 |

-

|

| 103 |

-

|

| 104 |

-

|

| 105 |

-

model = Model(device=args.device)

|

| 106 |

-

|

| 107 |

-

with gr.Blocks(theme=args.theme, css="style.css") as demo:

|

| 108 |

-

gr.Markdown(DESCRIPTION)

|

| 109 |

-

|

| 110 |

-

with gr.Box():

|

| 111 |

-

gr.Markdown(

|

| 112 |

-

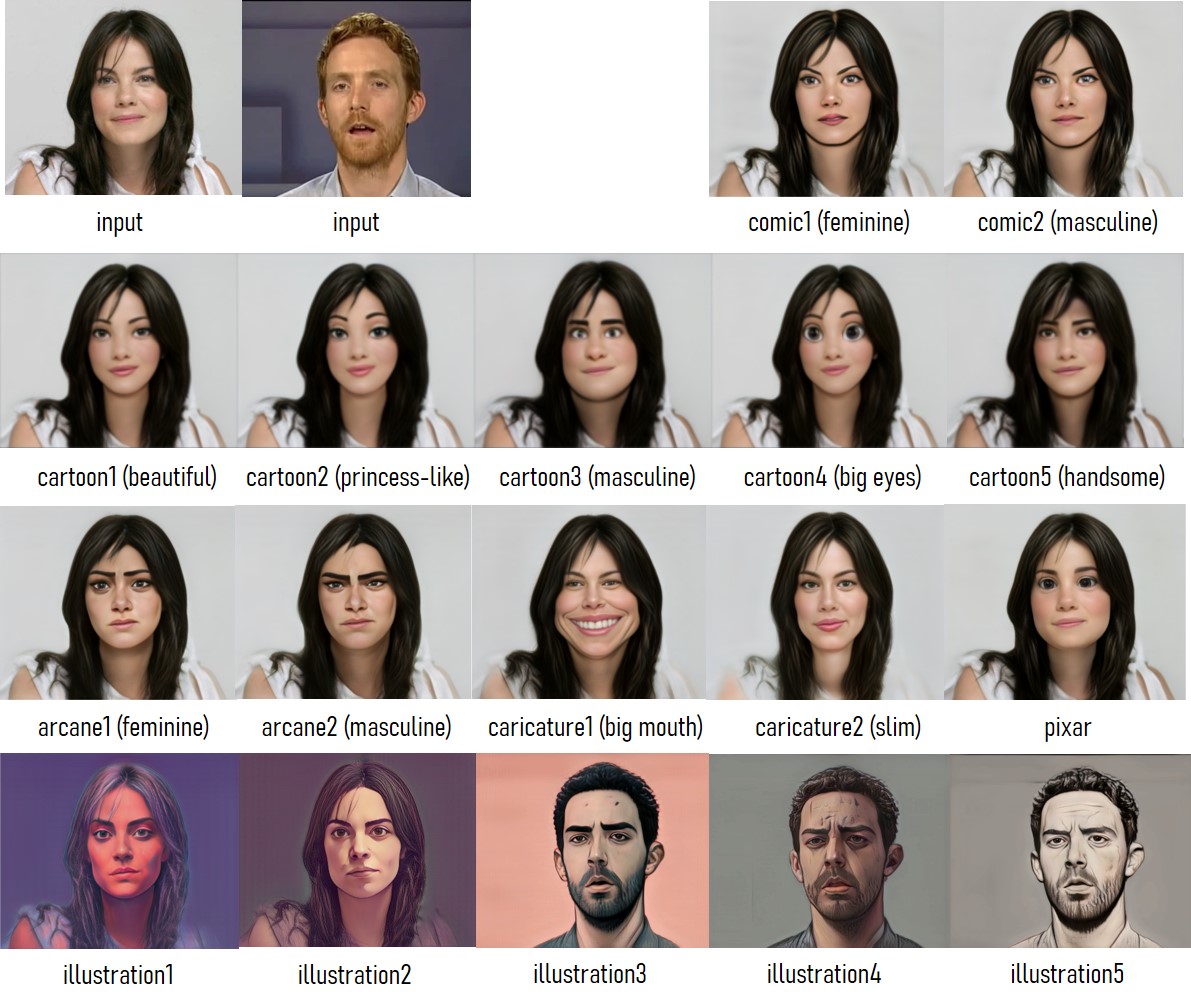

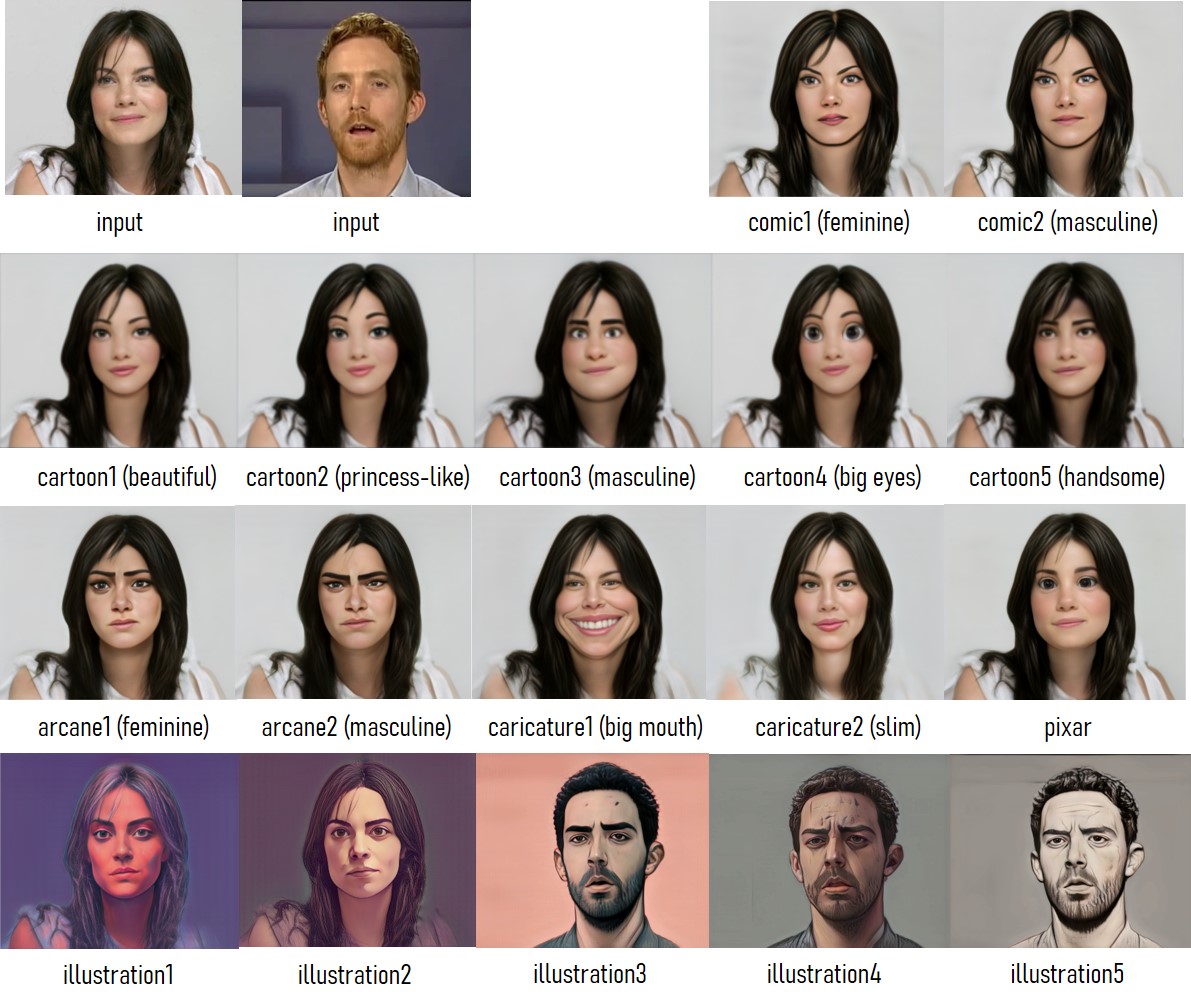

"""## Step 1(Select Style)

|

| 113 |

-

- Select **Style Type**.

|

| 114 |

-

- Type with `-d` means it supports style degree adjustment.

|

| 115 |

-

- Type without `-d` usually has better toonification quality.

|

| 116 |

-

|

| 117 |

-

"""

|

| 118 |

-

)

|

| 119 |

-

with gr.Row():

|

| 120 |

-

with gr.Column():

|

| 121 |

-

gr.Markdown("""Select Style Type""")

|

| 122 |

-

with gr.Row():

|

| 123 |

-

style_type = gr.Radio(

|

| 124 |

-

label="Style Type",

|

| 125 |

-

choices=[

|

| 126 |

-

"cartoon1",

|

| 127 |

-

"cartoon1-d",

|

| 128 |

-

"cartoon2-d",

|

| 129 |

-

"cartoon3-d",

|

| 130 |

-

"cartoon4",

|

| 131 |

-

"cartoon4-d",

|

| 132 |

-

"cartoon5-d",

|

| 133 |

-

"comic1-d",

|

| 134 |

-

"comic2-d",

|

| 135 |

-

"arcane1",

|

| 136 |

-

"arcane1-d",

|

| 137 |

-

"arcane2",

|

| 138 |

-

"arcane2-d",

|

| 139 |

-

"caricature1",

|

| 140 |

-

"caricature2",

|

| 141 |

-

"pixar",

|

| 142 |

-

"pixar-d",

|

| 143 |

-

"illustration1-d",

|

| 144 |

-

"illustration2-d",

|

| 145 |

-

"illustration3-d",

|

| 146 |

-

"illustration4-d",

|

| 147 |

-

"illustration5-d",

|

| 148 |

-

],

|

| 149 |

-

)

|

| 150 |

-

exstyle = gr.Variable()

|

| 151 |

-

with gr.Row():

|

| 152 |

-

loadmodel_button = gr.Button("Load Model")

|

| 153 |

-

with gr.Row():

|

| 154 |

-

load_info = gr.Textbox(

|

| 155 |

-

label="Process Information",

|

| 156 |

-

interactive=False,

|

| 157 |

-

value="No model loaded.",

|

| 158 |

-

)

|

| 159 |

-

with gr.Column():

|

| 160 |

-

gr.Markdown(

|

| 161 |

-

"""Reference Styles

|

| 162 |

-

"""

|

| 163 |

-

)

|

| 164 |

|

| 165 |

-

|

| 166 |

-

|

| 167 |

-

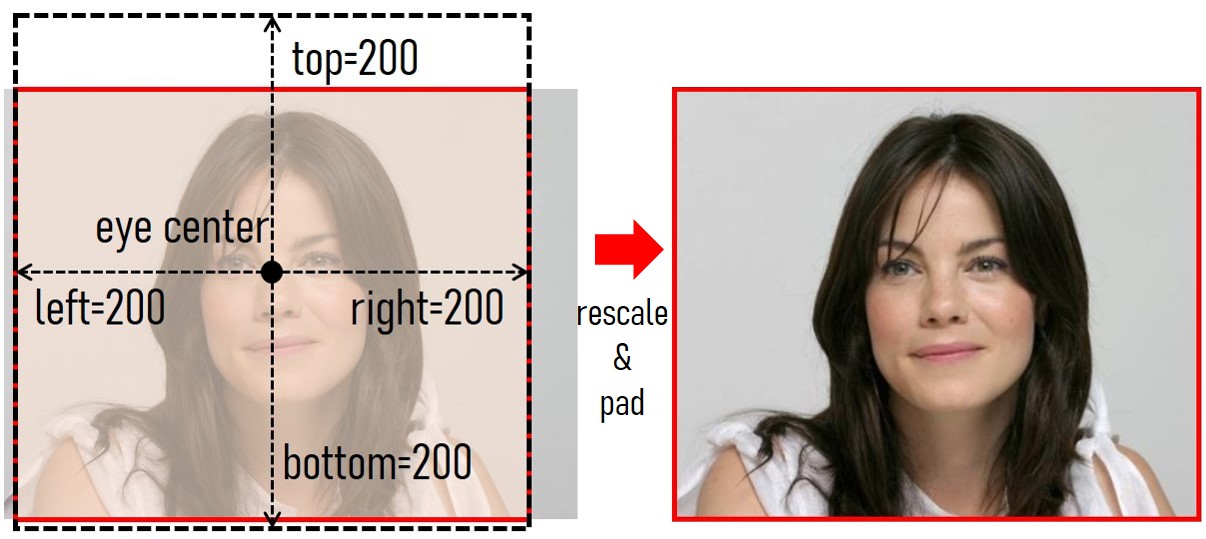

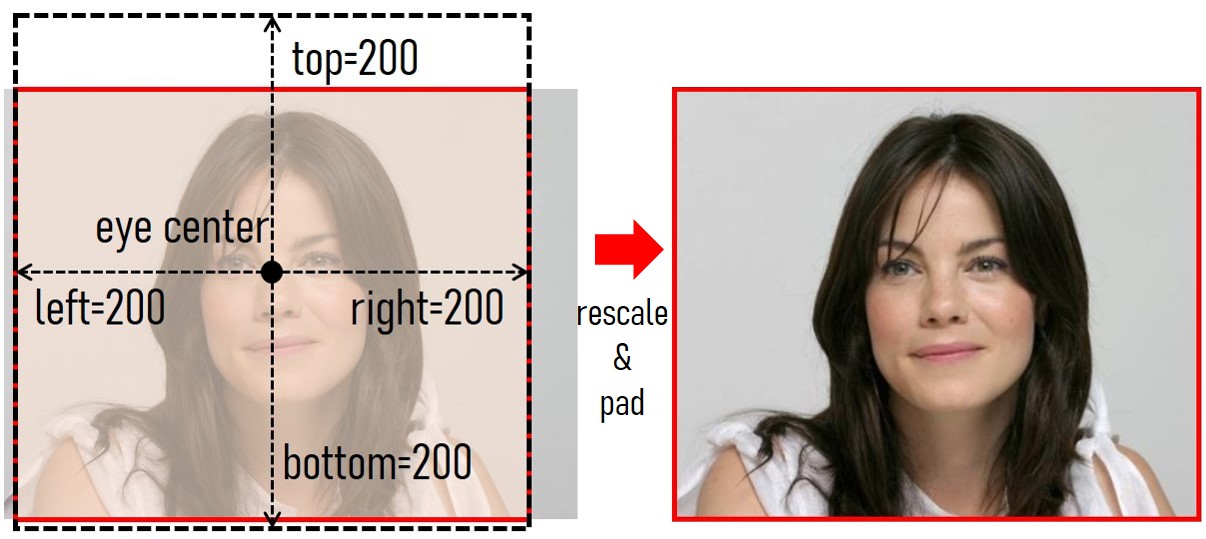

"""## Step 2 (Preprocess Input Image / Video)

|

| 168 |

-

- Drop an image/video containing a near-frontal face to the **Input Image**/**Input Video**.

|

| 169 |

-

- Hit the **Rescale Image**/**Rescale First Frame** button.

|

| 170 |

-

- Rescale the input to make it best fit the model.

|

| 171 |

-

- The final image result will be based on this **Rescaled Face**. Use padding parameters to adjust the background space.

|

| 172 |

-

- **<font color=red>Solution to [Error: no face detected!]</font>**: VToonify uses dlib.get_frontal_face_detector but sometimes it fails to detect a face. You can try several times or use other images until a face is detected, then switch back to the original image.

|

| 173 |

-

- For video input, further hit the **Rescale Video** button.

|

| 174 |

-

- The final video result will be based on this **Rescaled Video**. To avoid overload, video is cut to at most **100/300** frames for CPU/GPU, respectively.

|

| 175 |

-

|

| 176 |

-

"""

|

| 177 |

-

)

|

| 178 |

-

with gr.Row():

|

| 179 |

-

with gr.Box():

|

| 180 |

-

with gr.Column():

|

| 181 |

-

gr.Markdown(

|

| 182 |

-

"""Choose the padding parameters.

|

| 183 |

-

"""

|

| 184 |

-

)

|

| 185 |

-

with gr.Row():

|

| 186 |

-

top = gr.Slider(128, 256, value=200, step=8, label="top")

|

| 187 |

-

with gr.Row():

|

| 188 |

-

bottom = gr.Slider(

|

| 189 |

-

128, 256, value=200, step=8, label="bottom"

|

| 190 |

-

)

|

| 191 |

-

with gr.Row():

|

| 192 |

-

left = gr.Slider(128, 256, value=200, step=8, label="left")

|

| 193 |

-

with gr.Row():

|

| 194 |

-

right = gr.Slider(

|

| 195 |

-

128, 256, value=200, step=8, label="right"

|

| 196 |

-

)

|

| 197 |

-

with gr.Box():

|

| 198 |

-

with gr.Column():

|

| 199 |

-

gr.Markdown("""Input""")

|

| 200 |

-

with gr.Row():

|

| 201 |

-

input_image = gr.Image(label="Input Image", type="filepath")

|

| 202 |

-

with gr.Row():

|

| 203 |

-

preprocess_image_button = gr.Button("Rescale Image")

|

| 204 |

-

with gr.Row():

|

| 205 |

-

input_video = gr.Video(

|

| 206 |

-

label="Input Video",

|

| 207 |

-

mirror_webcam=False,

|

| 208 |

-

type="filepath",

|

| 209 |

-

)

|

| 210 |

-

with gr.Row():

|

| 211 |

-

preprocess_video0_button = gr.Button("Rescale First Frame")

|

| 212 |

-

preprocess_video1_button = gr.Button("Rescale Video")

|

| 213 |

-

|

| 214 |

-

with gr.Box():

|

| 215 |

-

with gr.Column():

|

| 216 |

-

gr.Markdown("""View""")

|

| 217 |

-

with gr.Row():

|

| 218 |

-

input_info = gr.Textbox(

|

| 219 |

-

label="Process Information",

|

| 220 |

-

interactive=False,

|

| 221 |

-

value="n.a.",

|

| 222 |

-

)

|

| 223 |

-

with gr.Row():

|

| 224 |

-

aligned_face = gr.Image(

|

| 225 |

-

label="Rescaled Face", type="numpy", interactive=False

|

| 226 |

-

)

|

| 227 |

-

instyle = gr.Variable()

|

| 228 |

-

with gr.Row():

|

| 229 |

-

aligned_video = gr.Video(

|

| 230 |

-

label="Rescaled Video", type="mp4", interactive=False

|

| 231 |

-

)

|

| 232 |

-

with gr.Row():

|

| 233 |

-

with gr.Column():

|

| 234 |

-

paths = [

|

| 235 |

-

"./vtoonify/data/pexels-andrea-piacquadio-733872.jpg",

|

| 236 |

-

"./vtoonify/data/i5R8hbZFDdc.jpg",

|

| 237 |

-

"./vtoonify/data/yRpe13BHdKw.jpg",

|

| 238 |

-

"./vtoonify/data/ILip77SbmOE.jpg",

|

| 239 |

-

"./vtoonify/data/077436.jpg",

|

| 240 |

-

"./vtoonify/data/081680.jpg",

|

| 241 |

-

]

|

| 242 |

-

example_images = gr.Dataset(

|

| 243 |

-

components=[input_image],

|

| 244 |

-

samples=[[path] for path in paths],

|

| 245 |

-

label="Image Examples",

|

| 246 |

-

)

|

| 247 |

-

with gr.Column():

|

| 248 |

-

# example_videos = gr.Dataset(components=[input_video], samples=[['./vtoonify/data/529.mp4']], type='values')

|

| 249 |

-

# to render video example on mouse hover/click

|

| 250 |

-

example_videos.render()

|

| 251 |

|

| 252 |

-

|

| 253 |

-

|

| 254 |

-

|

| 255 |

-

|

| 256 |

-

|

|

|

|

| 257 |

|

| 258 |

-

|

| 259 |

-

|

| 260 |

-

with gr.

|

| 261 |

-

gr.

|

| 262 |

-

|

| 263 |

-

with gr.

|

| 264 |

-

gr.

|

| 265 |

-

""

|

| 266 |

-

|

| 267 |

-

|

| 268 |

-

|

| 269 |

-

-

|

| 270 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 271 |

)

|

| 272 |

-

|

| 273 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 274 |

)

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 275 |

with gr.Column():

|

| 276 |

gr.Markdown(

|

| 277 |

-

"""

|

| 278 |

-

|

| 279 |

)

|

| 280 |

-

|

| 281 |

-

|

| 282 |

-

|

| 283 |

-

|

| 284 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

| 285 |

with gr.Column():

|

|

|

|

| 286 |

with gr.Row():

|

| 287 |

-

|

| 288 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 289 |

)

|

| 290 |

with gr.Row():

|

| 291 |

-

|

|

|

|

|

|

|

|

|

|

| 292 |

with gr.Column():

|

|

|

|

| 293 |

with gr.Row():

|

| 294 |

-

|

| 295 |

-

label="

|

|

|

|

|

|

|

| 296 |

)

|

| 297 |

with gr.Row():

|

| 298 |

-

|

| 299 |

-

|

| 300 |

-

|

| 301 |

-

|

| 302 |

-

|

| 303 |

-

|

| 304 |

-

|

| 305 |

-

|

| 306 |

-

|

| 307 |

-

|

| 308 |

-

|

| 309 |

-

|

| 310 |

-

|

| 311 |

-

|

| 312 |

-

|

| 313 |

-

|

| 314 |

-

|

| 315 |

-

|

| 316 |

-

|

| 317 |

-

|

| 318 |

-

|

| 319 |

-

|

| 320 |

-

|

| 321 |

-

|

| 322 |

-

|

| 323 |

-

|

| 324 |

-

|

| 325 |

-

|

| 326 |

-

|

| 327 |

-

|

| 328 |

-

|

| 329 |

-

|

| 330 |

-

|

| 331 |

-

|

| 332 |

-

|

| 333 |

-

|

| 334 |

-

|

| 335 |

-

|

| 336 |

-

|

| 337 |

-

|

| 338 |

-

|

| 339 |

-

|

| 340 |

-

|

| 341 |

-

|

| 342 |

-

|

| 343 |

-

|

| 344 |

-

|

| 345 |

-

|

| 346 |

-

|

| 347 |

-

|

| 348 |

-

|

| 349 |

-

|

| 350 |

-

|

| 351 |

-

|

| 352 |

-

|

| 353 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

```python
)  # end of the example_videos = gr.components.Dataset(...) call


args = parse_args()
args.device = "cuda" if torch.cuda.is_available() else "cpu"
print("*** Now using %s." % (args.device))
model = Model(device=args.device)
```
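`parse_args()` is called here but defined outside this hunk. The code reads `args.theme`, and the commented-out `demo.launch(...)` further down reads `args.enable_queue`, `args.port` and `args.share`, so a parser exposing those attributes might look like the sketch below. The attribute names come from this diff. The flag spellings and defaults are assumptions for illustration, not the repository's. The device rule is the one used above, factored into a function so it can be checked without a GPU.

```python
import argparse
from typing import List, Optional


def parse_args(argv: Optional[List[str]] = None) -> argparse.Namespace:
    # Hypothetical parser: attribute names mirror what demo.py reads
    # (args.device, args.theme, args.port, args.share, args.enable_queue);
    # the defaults are placeholders, not values from the repository.
    parser = argparse.ArgumentParser(description="VToonify Gradio demo (sketch)")
    parser.add_argument("--device", type=str, default="cpu")
    parser.add_argument("--theme", type=str, default=None)
    parser.add_argument("--port", type=int, default=None)
    parser.add_argument("--share", action="store_true")
    parser.add_argument("--enable-queue", dest="enable_queue", action="store_true")
    return parser.parse_args(argv)


def resolve_device(cuda_available: bool) -> str:
    # Same rule as the hunk: use CUDA when it is available, otherwise the CPU.
    return "cuda" if cuda_available else "cpu"
```

If the real parser also accepts a device flag, the reassignment right after `parse_args()` in the hunk overrides it, so the effective device always follows CUDA availability.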

```python
with gr.Blocks(theme=args.theme, css="style.css") as demo:
    gr.Markdown(DESCRIPTION)

    with gr.Box():
        gr.Markdown(
            """## Step 1(Select Style)
- Select **Style Type**.
- Type with `-d` means it supports style degree adjustment.
- Type without `-d` usually has better toonification quality.

"""
        )
        with gr.Row():
            with gr.Column():
                gr.Markdown("""Select Style Type""")
                with gr.Row():
                    style_type = gr.Radio(
                        label="Style Type",
                        choices=[
                            "cartoon1",
                            "cartoon1-d",
                            "cartoon2-d",
                            "cartoon3-d",
                            "cartoon4",
                            "cartoon4-d",
                            "cartoon5-d",
                            "comic1-d",
                            "comic2-d",
                            "arcane1",
                            "arcane1-d",
                            "arcane2",
                            "arcane2-d",
                            "caricature1",
                            "caricature2",
                            "pixar",
                            "pixar-d",
                            "illustration1-d",
                            "illustration2-d",
                            "illustration3-d",
                            "illustration4-d",
                            "illustration5-d",
                        ],
                    )
                    exstyle = gr.Variable()
                with gr.Row():
                    loadmodel_button = gr.Button("Load Model")
                with gr.Row():
                    load_info = gr.Textbox(
                        label="Process Information",
                        interactive=False,
                        value="No model loaded.",
                    )
            with gr.Column():
                gr.Markdown(
                    """Reference Styles
"""
                )
```
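The Step 1 notes tie degree support to a naming convention: choices ending in `-d` accept a style degree. The helper below makes that rule explicit over the same 22 choices. It is a hypothetical illustration, not the body of `update_slider`, which `style_type.change` calls but which lies outside this hunk.

```python
# Choices offered by the Style Type radio in the hunk above.
STYLE_TYPES = [
    "cartoon1", "cartoon1-d", "cartoon2-d", "cartoon3-d", "cartoon4",
    "cartoon4-d", "cartoon5-d", "comic1-d", "comic2-d", "arcane1",
    "arcane1-d", "arcane2", "arcane2-d", "caricature1", "caricature2",
    "pixar", "pixar-d", "illustration1-d", "illustration2-d",
    "illustration3-d", "illustration4-d", "illustration5-d",
]


def supports_style_degree(style_type: str) -> bool:
    """Per the Step 1 notes, a `-d` suffix marks styles with adjustable degree."""
    return style_type.endswith("-d")


# Split the choices by that rule.
adjustable = [s for s in STYLE_TYPES if supports_style_degree(s)]
fixed = [s for s in STYLE_TYPES if not supports_style_degree(s)]
```

Applied to the list above, 15 choices carry the `-d` suffix and 7 (`cartoon1`, `cartoon4`, `arcane1`, `arcane2`, `caricature1`, `caricature2`, `pixar`) do not.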

```python
    with gr.Box():
        gr.Markdown(
            """## Step 2 (Preprocess Input Image / Video)
- Drop an image/video containing a near-frontal face to the **Input Image**/**Input Video**.
- Hit the **Rescale Image**/**Rescale First Frame** button.
    - Rescale the input to make it best fit the model.
    - The final image result will be based on this **Rescaled Face**. Use padding parameters to adjust the background space.
    - **<font color=red>Solution to [Error: no face detected!]</font>**: VToonify uses dlib.get_frontal_face_detector but sometimes it fails to detect a face. You can try several times or use other images until a face is detected, then switch back to the original image.
- For video input, further hit the **Rescale Video** button.
    - The final video result will be based on this **Rescaled Video**. To avoid overload, video is cut to at most **100/300** frames for CPU/GPU, respectively.

"""
        )
        with gr.Row():
            with gr.Box():
                with gr.Column():
                    gr.Markdown(
                        """Choose the padding parameters.
"""
                    )
                    with gr.Row():
                        top = gr.Slider(128, 256, value=200, step=8, label="top")
                    with gr.Row():
                        bottom = gr.Slider(128, 256, value=200, step=8, label="bottom")
                    with gr.Row():
                        left = gr.Slider(128, 256, value=200, step=8, label="left")
                    with gr.Row():
                        right = gr.Slider(128, 256, value=200, step=8, label="right")
            with gr.Box():
                with gr.Column():
                    gr.Markdown("""Input""")
                    with gr.Row():
                        input_image = gr.Image(label="Input Image", type="filepath")
                    with gr.Row():
                        preprocess_image_button = gr.Button("Rescale Image")
                    with gr.Row():
                        input_video = gr.Video(
                            label="Input Video",
                            mirror_webcam=False,
                            type="filepath",
                        )
                    with gr.Row():
                        preprocess_video0_button = gr.Button("Rescale First Frame")
                        preprocess_video1_button = gr.Button("Rescale Video")

            with gr.Box():
                with gr.Column():
                    gr.Markdown("""View""")
                    with gr.Row():
                        input_info = gr.Textbox(
                            label="Process Information",
                            interactive=False,
                            value="n.a.",
                        )
                    with gr.Row():
                        aligned_face = gr.Image(
                            label="Rescaled Face", type="numpy", interactive=False
                        )
                        instyle = gr.Variable()
                    with gr.Row():
                        aligned_video = gr.Video(
                            label="Rescaled Video", type="mp4", interactive=False
                        )
        with gr.Row():
            with gr.Column():
                paths = [
                    "./vtoonify/data/pexels-andrea-piacquadio-733872.jpg",
                    "./vtoonify/data/i5R8hbZFDdc.jpg",
                    "./vtoonify/data/yRpe13BHdKw.jpg",
                    "./vtoonify/data/ILip77SbmOE.jpg",
                    "./vtoonify/data/077436.jpg",
                    "./vtoonify/data/081680.jpg",
                ]
                example_images = gr.Dataset(
                    components=[input_image],
                    samples=[[path] for path in paths],
                    label="Image Examples",
                )
            with gr.Column():
                # example_videos = gr.Dataset(components=[input_video], samples=[['./vtoonify/data/529.mp4']], type='values')
                # to render video example on mouse hover/click
                example_videos.render()

                # to load sample video into input_video upon clicking on it
                def load_examples(video):
                    # print("****** inside load_example() ******")
                    # print("in_video is : ", video[0])
                    return video[0]

                example_videos.click(load_examples, example_videos, input_video)
```
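Two details of Step 2 are easy to miss in the layout code: rescaled videos are cut to at most 100 frames on CPU and 300 on GPU, and `load_examples` indexes the clicked sample with `[0]`, which suggests the sample arrives as a list. The sketch restates both in plain Python. `cap_frames` and `MAX_FRAMES` are hypothetical names; the code that actually enforces the limit is not part of this diff.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")

# Frame limits quoted in the Step 2 notes (100 on CPU, 300 on GPU).
MAX_FRAMES = {"cpu": 100, "cuda": 300}


def cap_frames(frames: Sequence[T], device: str) -> List[T]:
    """Keep only the leading frames allowed on `device` (hypothetical helper)."""
    # Unknown device strings fall back to the stricter CPU limit.
    limit = MAX_FRAMES.get(device, MAX_FRAMES["cpu"])
    return list(frames[:limit])


def load_examples(video: Sequence[str]) -> str:
    # Same body as in the hunk: return the first (only) field of the clicked sample.
    return video[0]
```

Clips shorter than the limit pass through unchanged; longer ones keep only their first 100 or 300 frames.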

```python
    with gr.Box():
        gr.Markdown("""## Step 3 (Generate Style Transferred Image/Video)""")
        with gr.Row():
            with gr.Column():
                gr.Markdown(
                    """

- Adjust **Style Degree**.
- Hit **Toonify!** to toonify one frame. Hit **VToonify!** to toonify full video.
- Estimated time on 1600x1440 video of 300 frames: 1 hour (CPU); 2 mins (GPU)
"""
                )
                style_degree = gr.Slider(
                    0, 1, value=0.5, step=0.05, label="Style Degree"
                )
            with gr.Column():
                gr.Markdown(
                    """
"""
                )
        with gr.Row():
            output_info = gr.Textbox(
                label="Process Information", interactive=False, value="n.a."
            )
        with gr.Row():
            with gr.Column():
                with gr.Row():
                    result_face = gr.Image(
                        label="Result Image", type="numpy", interactive=False
                    )
                with gr.Row():
                    toonify_button = gr.Button("Toonify!")
            with gr.Column():
                with gr.Row():
                    result_video = gr.Video(
                        label="Result Video", type="mp4", interactive=False
                    )
                with gr.Row():
                    vtoonify_button = gr.Button("VToonify!")

    gr.Markdown(ARTICLE)
    gr.Markdown(FOOTER)
```
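The Step 3 estimate (300 frames of 1600x1440 video in about 1 hour on CPU or 2 minutes on GPU) works out to per-frame costs as follows. Treating run time as linear in frame count is an assumption, and the figures only apply at that resolution.

```python
# Figures quoted in the Step 3 notes for a 1600x1440 video of 300 frames.
FRAMES = 300
CPU_SECONDS = 60 * 60  # about 1 hour on CPU
GPU_SECONDS = 2 * 60  # about 2 minutes on GPU


def seconds_per_frame(total_seconds: float, frames: int) -> float:
    return total_seconds / frames


def estimate_seconds(frames: int, per_frame: float) -> float:
    # Assumption: run time grows linearly with the number of frames.
    return frames * per_frame


cpu_per_frame = seconds_per_frame(CPU_SECONDS, FRAMES)  # 12.0 s per frame
gpu_per_frame = seconds_per_frame(GPU_SECONDS, FRAMES)  # 0.4 s per frame

if __name__ == "__main__":
    print(f"CPU: {cpu_per_frame:.1f} s/frame, GPU: {gpu_per_frame:.1f} s/frame")
    print(f"GPU speed-up: about {cpu_per_frame / gpu_per_frame:.0f}x")
    # Step 2 caps CPU runs at 100 frames; at this resolution that is roughly:
    print(f"100 frames on CPU: about {estimate_seconds(100, cpu_per_frame) / 60:.0f} min")
```

Run as a script, it prints `CPU: 12.0 s/frame, GPU: 0.4 s/frame`, a speed-up of about 30x, and about 20 minutes for a 100-frame clip on CPU.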

```python
    loadmodel_button.click(
        fn=model.load_model, inputs=[style_type], outputs=[exstyle, load_info]
    )

    style_type.change(fn=update_slider, inputs=style_type, outputs=style_degree)

    preprocess_image_button.click(
        fn=model.detect_and_align_image,
        inputs=[input_image, top, bottom, left, right],
        outputs=[aligned_face, instyle, input_info],
    )
    preprocess_video0_button.click(
        fn=model.detect_and_align_video,
        inputs=[input_video, top, bottom, left, right],
        outputs=[aligned_face, instyle, input_info],
    )
    preprocess_video1_button.click(
        fn=model.detect_and_align_full_video,
        inputs=[input_video, top, bottom, left, right],
        outputs=[aligned_video, instyle, input_info],
    )

    toonify_button.click(
        fn=model.image_toonify,
        inputs=[aligned_face, instyle, exstyle, style_degree, style_type],
        outputs=[result_face, output_info],
    )
    vtoonify_button.click(
        fn=model.video_tooniy,
        inputs=[aligned_video, instyle, exstyle, style_degree, style_type],
        outputs=[result_video, output_info],
    )

    example_images.click(
        fn=set_example_image,
        inputs=example_images,
        outputs=example_images.components,
    )

# demo.launch(
#     enable_queue=args.enable_queue,
#     server_port=args.port,
#     share=args.share,
# )

demo.queue(concurrency_count=1, max_size=4)
demo.launch(server_port=8266)
```
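The `.click()` calls are the only place this hunk shows the `Model` interface: each handler receives the values of its listed input components in order and returns one value per output component. A stub honoring those counts can help when exercising the layout without model weights. The class below is hypothetical and its return values are placeholders; `WIRED_INPUTS` is transcribed from the wiring, and `video_tooniy` keeps the spelling the wiring uses, since renaming it would also require renaming it in `Model`.

```python
import inspect
from typing import Any, Tuple


class ModelStub:
    """Hypothetical stand-in for `Model`; NOT the VToonify implementation.

    Each method accepts as many arguments as its `inputs=[...]` list in the
    wiring and returns as many values as its `outputs=[...]` list.
    """

    def load_model(self, style_type: str) -> Tuple[Any, str]:
        # outputs=[exstyle, load_info]
        return None, f"(stub) would load {style_type}"

    def detect_and_align_image(
        self, image, top, bottom, left, right
    ) -> Tuple[Any, Any, str]:
        # outputs=[aligned_face, instyle, input_info]
        return None, None, "(stub) image not aligned"

    def detect_and_align_video(
        self, video, top, bottom, left, right
    ) -> Tuple[Any, Any, str]:
        # outputs=[aligned_face, instyle, input_info]
        return None, None, "(stub) first frame not aligned"

    def detect_and_align_full_video(
        self, video, top, bottom, left, right
    ) -> Tuple[Any, Any, str]:
        # outputs=[aligned_video, instyle, input_info]
        return None, None, "(stub) video not aligned"

    def image_toonify(
        self, aligned_face, instyle, exstyle, style_degree, style_type
    ) -> Tuple[Any, str]:
        # outputs=[result_face, output_info]
        return None, "(stub) image not toonified"

    # Spelled exactly as the wiring references it: fn=model.video_tooniy
    def video_tooniy(
        self, aligned_video, instyle, exstyle, style_degree, style_type
    ) -> Tuple[Any, str]:
        # outputs=[result_video, output_info]
        return None, "(stub) video not toonified"


# Input counts copied from the .click() calls.
WIRED_INPUTS = {
    "load_model": 1,
    "detect_and_align_image": 5,
    "detect_and_align_video": 5,
    "detect_and_align_full_video": 5,
    "image_toonify": 5,
    "video_tooniy": 5,
}


def arity_matches(obj: Any) -> bool:
    # Bound methods omit `self`, so the parameter count should equal the input count.
    return all(
        len(inspect.signature(getattr(obj, name)).parameters) == count
        for name, count in WIRED_INPUTS.items()
    )
```

Passing `ModelStub()` where the hunk passes `Model(device=args.device)` would keep every event listener's input and output counts consistent while skipping the actual inference.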