prasant.goswivt committed on

Commit dabad06 • 1 parent: fd334ba

added sentiment analysis

- __pycache__/sentiment.cpython-310.pyc +0 -0

- data/novel_list.pkl +3 -0

- data/sentiment_analysis/Genius Seventh Prince_results.pkl +3 -0

- data/sentiment_analysis/Lord of the mysteries_results.pkl +3 -0

- data/sentiment_analysis/Mother of Learning_results.pkl +3 -0

- data/sentiment_analysis/The Perfect Run_results.pkl +3 -0

- data/similarity.pkl +3 -0

- sentiment.py +155 -0

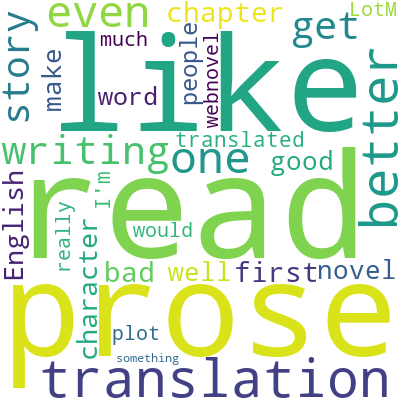

- static/images/wordcloud/Lord of the mysteries_cloud.png +0 -0

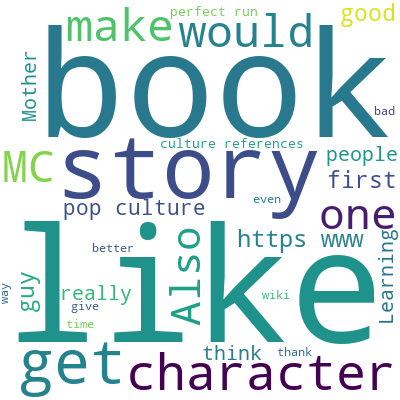

- static/images/wordcloud/Mother of Learning_cloud.png +0 -0

- static/images/wordcloud/The Perfect Run_cloud.png +0 -0

__pycache__/sentiment.cpython-310.pyc ADDED

Binary file (4.45 kB).

data/novel_list.pkl ADDED

```diff
@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:5af2f404aed4b4de777b9b2c0cb2fc1a744f9c95332f1a2b96ea4eb514d5a9aa
+size 4886535
```

data/sentiment_analysis/Genius Seventh Prince_results.pkl ADDED

```diff
@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:74aafc6b657ba1e59d202e97046cd1699c102750e3fb02a60360bc9640ec6869
+size 19
```
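The Genius Seventh Prince pointer records a real file of only 19 bytes, while the other three result files in this commit are about 21.7 kB. That size is consistent with a cached tuple `(0, 0, None)`, which is what `analyze_comments()` in sentiment.py returns when it scores no comments, and the commit indeed adds no `Genius Seventh Prince_cloud.png`. This is an inference from the size alone, not from the file's contents. The sketch below checks that size under protocol 4 (Python 3.10's default) and shows that such an entry still passes a bare truthiness check, so the word-cloud entry itself must be tested before building an image path; `cloud_url` is a hypothetical helper, not part of this repo.

```python
import pickle

# Assumed cache entry for a query with no scored comments:
# (average positive %, average negative %, word cloud) = (0, 0, None).
empty_result = (0, 0, None)

# Pin protocol 4 (Python 3.10's default) so the byte count is stable.
blob = pickle.dumps(empty_result, protocol=4)
print(len(blob))  # 19

# A non-empty tuple is truthy, so `if saved_data:` counts this as a cache hit.
print(bool(pickle.loads(blob)))  # True


def cloud_url(query, cached):
    """Hypothetical helper: return an image path only if a cloud was generated."""
    _positive, _negative, cloud = cached
    if cloud is None:
        return None
    return f"static/images/wordcloud/{query}_cloud.png"


print(cloud_url("Genius Seventh Prince", pickle.loads(blob)))  # None
```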

data/sentiment_analysis/Lord of the mysteries_results.pkl ADDED

```diff
@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:f18a626152311f0e9202975c342ddcfa9ecc343006ea7ea133b1fe3c708b235f
+size 21735
```

data/sentiment_analysis/Mother of Learning_results.pkl ADDED

```diff
@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:628f6afab594ad5d7a0f232c48f61c4d935dbea168fb6e166dc78ac680d31518
+size 21848
```

data/sentiment_analysis/The Perfect Run_results.pkl ADDED

```diff
@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:c5a3c88f7c51bb3bb492cc7b10bfd9c4d670b30d8ae8a714319d71cf34b021ae
+size 21740
```

data/similarity.pkl ADDED

```diff
@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:96a69cd6b71b1342f594eabb095aab1b619c27d1004b4b34e2725ffbe838f1a1
+size 1313383915
```
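Every `.pkl` entry above is a Git LFS pointer, so the diff shows three `key value` lines (the spec `version`, the SHA-256 `oid` of the real content, and its `size` in bytes) instead of the pickle itself. A minimal sketch for reading such a pointer, assuming only the three keys shown here; `LfsPointer` and `parse_lfs_pointer` are hypothetical names, not part of this repo or of git-lfs.

```python
from dataclasses import dataclass


@dataclass
class LfsPointer:
    version: str
    oid: str   # hex SHA-256 digest of the real file contents
    size: int  # size of the real file in bytes


def parse_lfs_pointer(text: str) -> LfsPointer:
    """Parse the 'key value' lines of a Git LFS pointer file."""
    fields = {}
    for line in text.strip().splitlines():
        key, _, value = line.partition(" ")
        fields[key] = value
    algo, _, digest = fields["oid"].partition(":")
    if algo != "sha256" or len(digest) != 64:
        raise ValueError(f"unexpected oid: {fields['oid']!r}")
    return LfsPointer(fields["version"], digest, int(fields["size"]))


similarity = parse_lfs_pointer("""\
version https://git-lfs.github.com/spec/v1
oid sha256:96a69cd6b71b1342f594eabb095aab1b619c27d1004b4b34e2725ffbe838f1a1
size 1313383915
""")
print(f"{similarity.size / 1e9:.2f} GB")  # 1.31 GB
```

For data/similarity.pkl the pointer reports 1,313,383,915 bytes (about 1.31 GB); a clone made without git-lfs installed contains only these small text stubs.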

sentiment.py ADDED

@@ -0,0 +1,155 @@

```python
import os
import pickle

import nltk
import praw
import torch
from nltk.corpus import stopwords
from nltk.stem.porter import PorterStemmer
from transformers import RobertaForSequenceClassification, RobertaTokenizer
from wordcloud import WordCloud

# Resolve paths relative to this file instead of a machine-specific D:/ path.
BASE_DIR = os.path.dirname(os.path.abspath(__file__))
RESULTS_DIR = os.path.join(BASE_DIR, 'data', 'sentiment_analysis')
CLOUD_DIR = os.path.join(BASE_DIR, 'static', 'images', 'wordcloud')


def save_data(data, filename):
    with open(filename, 'wb') as file:
        pickle.dump(data, file)


def load_data(filename):
    """Return the unpickled cache entry, or None if it does not exist yet."""
    if os.path.exists(filename):
        with open(filename, 'rb') as file:
            return pickle.load(file)
    return None


# PRAW configs: credentials are read from the environment, never committed.
REDDIT_CLIENT_ID = os.environ['REDDIT_CLIENT_ID']
REDDIT_CLIENT_SECRET = os.environ['REDDIT_CLIENT_SECRET']
REDDIT_USERNAME = 'Tiger_in_the_Snow'

reddit = praw.Reddit(
    client_id=REDDIT_CLIENT_ID,
    client_secret=REDDIT_CLIENT_SECRET,
    user_agent=f"script:sentiment-analysis:v0.0.1 (by {REDDIT_USERNAME})"
)

# NLP configs
nltk.download('punkt')
nltk.download('stopwords')
stemmer = PorterStemmer()
STOP_WORDS = set(stopwords.words('english'))  # built once, not once per token

# Model configs: RoBERTa fine-tuned on IMDB reviews, used with its own
# trained classification head (logits of shape (batch, 2)).
tokenizer = RobertaTokenizer.from_pretrained('aychang/roberta-base-imdb')
model = RobertaForSequenceClassification.from_pretrained(
    'aychang/roberta-base-imdb', num_labels=2)
model.eval()


def get_sentiment(query):
    """Return (positive %, negative %, word-cloud path or None) for a title,
    or an error message if the Reddit search finds nothing."""
    filename = os.path.join(RESULTS_DIR, f'{query}_results.pkl')
    saved_data = load_data(filename)

    if saved_data is not None:
        positive, negative, cloud = saved_data
        # Only point at an image if one was generated for this query.
        wordcloud = f'static/images/wordcloud/{query}_cloud.png' if cloud is not None else None
        return positive, negative, wordcloud

    results = get_reddit_results(query)
    if not results:
        return "No results found for query"

    positive, negative, wordcloud = analyze_comments(results, query=query)
    save_data((positive, negative, wordcloud), filename)
    return positive, negative, wordcloud


def get_reddit_results(query):
    # Search both subreddits; only the top-ranked submission is analyzed.
    sub = reddit.subreddit('noveltranslations+progressionfantasy')
    return list(sub.search(query, limit=1))


def transform_text(text):
    """Lowercase, tokenize, drop non-alphanumeric tokens and stopwords, then stem."""
    tokens = nltk.word_tokenize(text.lower())
    tokens = [t for t in tokens if t.isalnum() and t not in STOP_WORDS]
    return ' '.join(stemmer.stem(t) for t in tokens)


def analyze_comments(results, query):
    total_positive = 0.0
    total_negative = 0.0
    total_comments = 0
    comments_for_cloud = []

    for submission in results:
        # Expand every "load more comments" stub, then flatten the tree.
        submission.comments.replace_more(limit=None)
        for comment in submission.comments.list():
            comment_body = comment.body
            comments_for_cloud.append(comment_body)

            text = transform_text(comment_body)
            if not text:
                continue

            # Score the whole comment as one sequence (RoBERTa accepts at most 512 tokens).
            inputs = tokenizer(text, return_tensors='pt', truncation=True, max_length=512)
            with torch.no_grad():
                logits = model(**inputs).logits  # shape (1, 2)
            probabilities = torch.softmax(logits, dim=-1)[0]

            # Index 1 is treated as positive and index 0 as negative.
            total_positive += probabilities[1].item() * 100
            total_negative += probabilities[0].item() * 100
            total_comments += 1

    if total_comments == 0:
        return 0, 0, None

    avg_positive = total_positive / total_comments
    avg_negative = total_negative / total_comments

    wordcloud = WordCloud(width=400, height=400,
                          background_color='white',
                          max_words=30,
                          stopwords=STOP_WORDS,
                          min_font_size=10).generate(' '.join(comments_for_cloud))
    # Save the WordCloud image as a static file.
    wordcloud.to_file(os.path.join(CLOUD_DIR, f'{query}_cloud.png'))

    return round(avg_positive), round(avg_negative), f'static/images/wordcloud/{query}_cloud.png'
```
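`analyze_comments()` converts the model's logits into probabilities with `torch.softmax(..., dim=-1)`, reads index 1 as positive and index 0 as negative, and averages the resulting percentages over all scored comments. A dependency-free sketch of just that arithmetic, with made-up logits standing in for model output; `softmax` and `aggregate` are hypothetical helpers, and the label order is the code's own assumption rather than something verified against the checkpoint.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a flat list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def aggregate(comment_logits):
    """Average per-comment percentages the way analyze_comments() does.

    Each item is a [negative_logit, positive_logit] pair (index 0 negative,
    index 1 positive, matching the indexing in sentiment.py).
    """
    if not comment_logits:
        return 0, 0  # same fallback as total_comments == 0
    total_positive = total_negative = 0.0
    for logits in comment_logits:
        negative, positive = softmax(logits)
        total_positive += positive * 100
        total_negative += negative * 100
    n = len(comment_logits)
    return round(total_positive / n), round(total_negative / n)


print(aggregate([[0.0, math.log(3)]]))  # (75, 25): softmax gives [0.25, 0.75]
print(aggregate([]))                    # (0, 0)
```

Note that Python's `round()` rounds halves to even, so an exact average of 62.5 would be reported as 62.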

static/images/wordcloud/Lord of the mysteries_cloud.png ADDED

static/images/wordcloud/Mother of Learning_cloud.png ADDED

static/images/wordcloud/The Perfect Run_cloud.png ADDED