Spaces: Running on Zero


changed examples

- .gitattributes +1 -0
- app.py +10 -8
- example_images/campeones.jpg +0 -0
- example_images/document.jpg +0 -0
- example_images/docvqa_example.png +0 -3
- example_images/dogs.jpg +0 -0
- example_images/examples_wat_arun.jpg +0 -0
- example_images/math.jpg +0 -0
- example_images/newyork.jpg +0 -0

.gitattributes (CHANGED)

    @@ -36,3 +36,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
     example_images/docvqa_example.png filter=lfs diff=lfs merge=lfs -text
     example_images/gaulois.png filter=lfs diff=lfs merge=lfs -text
     example_images/rococo.jpg filter=lfs diff=lfs merge=lfs -text
    +*.DS_Store filter=lfs diff=lfs merge=lfs -text
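The new `*.DS_Store` rule sends macOS Finder metadata files through Git LFS, in addition to the explicitly listed images. To check which paths a set of `.gitattributes` rules would route to LFS, here is a rough sketch. It approximates Git's matching with Python's `fnmatch` (patterns without a `/` are matched against the basename at any depth; patterns with a `/` against the full path) and does not implement Git's full `**` semantics, so treat it as an approximation. The helper name `is_lfs_tracked` is hypothetical.

```python
from fnmatch import fnmatchcase


def is_lfs_tracked(path: str, gitattributes: str) -> bool:
    """Approximate check: does the last matching rule set filter=lfs for `path`?"""
    basename = path.rsplit("/", 1)[-1]
    filter_value = None
    for line in gitattributes.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        pattern, *attrs = line.split()
        # Slash-free patterns match the basename at any depth; patterns
        # containing "/" are compared against the full relative path.
        target = path if "/" in pattern else basename
        if not fnmatchcase(target, pattern.lstrip("/")):
            continue
        for attr in attrs:
            if attr.startswith("filter="):
                filter_value = attr.split("=", 1)[1]
            elif attr in ("-filter", "!filter"):
                filter_value = None  # a later rule can unset the filter
    return filter_value == "lfs"


rules = """
example_images/rococo.jpg filter=lfs diff=lfs merge=lfs -text
*.DS_Store filter=lfs diff=lfs merge=lfs -text
"""
print(is_lfs_tracked("example_images/.DS_Store", rules))  # True
print(is_lfs_tracked("example_images/dogs.jpg", rules))   # False
```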

app.py (CHANGED)

    @@ -56,7 +56,6 @@ def model_inference(
     "attention_mask": inputs.attention_mask,
     "num_return_sequences": 1,
     "no_repeat_ngram_size": 2,
    -"temperature": 0.7,
     "max_new_tokens": 500,
     "min_new_tokens": 10,
     }
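This hunk drops `"temperature": 0.7` from the generation arguments. In Hugging Face `transformers`, `temperature` only affects sampling-based decoding (`do_sample=True`); under greedy or beam search it is ignored, and recent versions warn when it is set anyway. The diff does not show whether `do_sample` is passed, so that motivation is an assumption. A minimal sketch of keeping sampling-only options out of a greedy configuration, using a hypothetical helper `build_generation_kwargs` that mirrors the keys visible in the hunk:

```python
from typing import Any, Dict, Optional


def build_generation_kwargs(
    attention_mask: Any,
    do_sample: bool = False,
    temperature: Optional[float] = None,
) -> Dict[str, Any]:
    """Hypothetical helper assembling generate() kwargs like the dict in the diff."""
    kwargs: Dict[str, Any] = {
        "attention_mask": attention_mask,
        "num_return_sequences": 1,
        "no_repeat_ngram_size": 2,
        "max_new_tokens": 500,
        "min_new_tokens": 10,
    }
    if do_sample:
        kwargs["do_sample"] = True
        # Temperature only matters when sampling; greedy decoding ignores it.
        if temperature is not None:
            kwargs["temperature"] = temperature
    return kwargs


greedy = build_generation_kwargs(attention_mask=[1, 1, 1], temperature=0.7)
print("temperature" in greedy)  # False
```

The resulting dict would then be unpacked into `model.generate(...)` alongside the model inputs, as the dict in `app.py` presumably is.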

@@ -82,14 +81,17 @@ def model_inference(

|

|

| 82 |

|

| 83 |

|

| 84 |

examples=[

|

| 85 |

-

[{"text": "

|

| 86 |

-

[{"text": "

|

| 87 |

-

[{"text":

|

| 88 |

-

[{"text": "What

|

| 89 |

-

[{"text":

|

|

|

|

|

|

|

|

|

|

| 90 |

]

|

| 91 |

-

demo = gr.ChatInterface(fn=model_inference, title="SmolVLM:

|

| 92 |

-

description="Play with [HuggingFaceTB/SmolVLM-Instruct](https://huggingface.co/HuggingFaceTB/SmolVLM-Instruct) in this demo. To get started, upload an image and text or try one of the examples. This

|

| 93 |

examples=examples,

|

| 94 |

textbox=gr.MultimodalTextbox(label="Query Input", file_types=["image"], file_count="multiple"), stop_btn="Stop Generation", multimodal=True,

|

| 95 |

cache_examples=False

|

|

|

|

| 56 |

"attention_mask": inputs.attention_mask,

|

| 57 |

"num_return_sequences": 1,

|

| 58 |

"no_repeat_ngram_size": 2,

|

|

|

|

| 59 |

"max_new_tokens": 500,

|

| 60 |

"min_new_tokens": 10,

|

| 61 |

}

|

|

|

|

| 81 |

|

| 82 |

|

| 83 |

examples=[

|

| 84 |

+

[{"text": "Describe this image.", "files": ["example_images/newyork.jpg"]}],

|

| 85 |

+

[{"text": "Describe this image.", "files": ["example_images/dogs.jpg"]}],

|

| 86 |

+

[{"text": "Where do the severe droughts happen according to this diagram?", "files": ["example_images/examples_weather_events.png"]}],

|

| 87 |

+

[{"text": "What art era do these artpieces belong to?", "files": ["example_images/rococo.jpg", "example_images/rococo_1.jpg"]}],

|

| 88 |

+

[{"text": "Describe this image.", "files": ["example_images/campeones.jpg"]}],

|

| 89 |

+

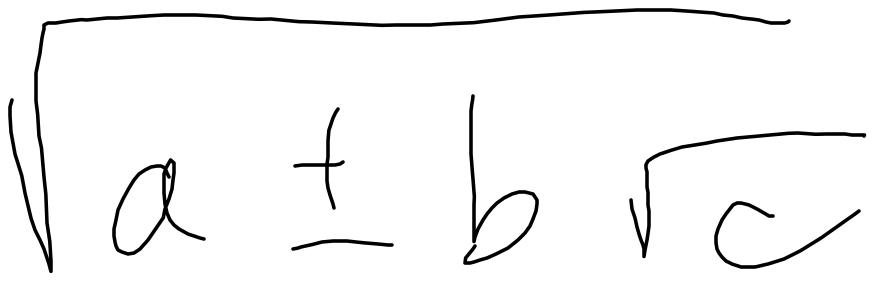

[{"text": "What does this say?", "files": ["example_images/math.jpg"]}],

|

| 90 |

+

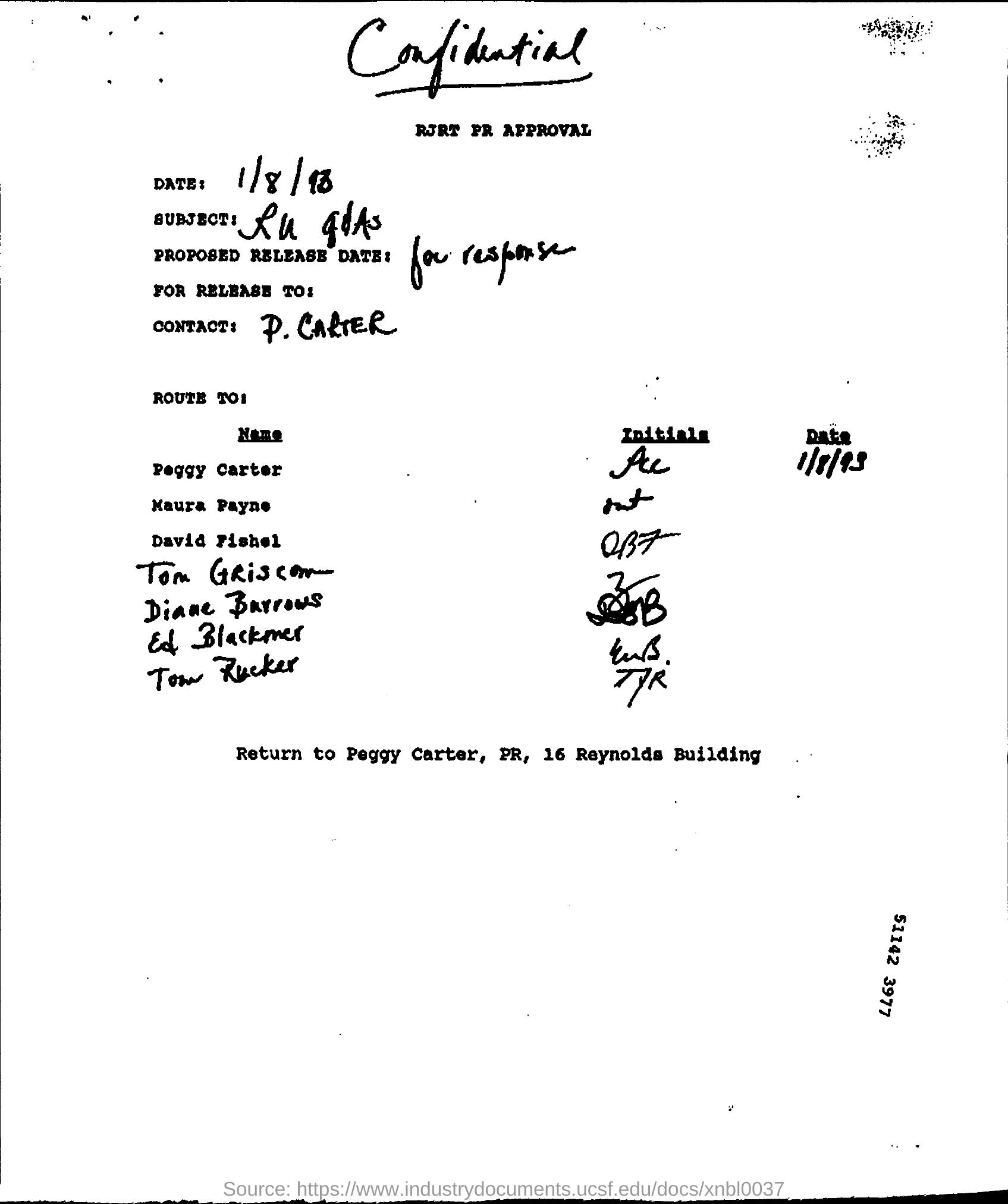

[{"text": "What is the date in this document?", "files": ["example_images/document.jpg"]}],

|

| 91 |

+

[{"text": "What is this UI about?", "files": ["example_images/s2w_example.png"]}],

|

| 92 |

]

|

| 93 |

+

demo = gr.ChatInterface(fn=model_inference, title="SmolVLM-250M: The Smollest VLM ever 💫",

|

| 94 |

+

description="Play with [HuggingFaceTB/SmolVLM-Instruct-250M](https://huggingface.co/HuggingFaceTB/SmolVLM-Instruct-250M) in this demo. To get started, upload an image and text or try one of the examples. This demo doesn't use history for the chat, so every chat you start is a new conversation.",

|

| 95 |

examples=examples,

|

| 96 |

textbox=gr.MultimodalTextbox(label="Query Input", file_types=["image"], file_count="multiple"), stop_btn="Stop Generation", multimodal=True,

|

| 97 |

cache_examples=False

|
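The new description states that the demo ignores chat history. With `multimodal=True`, `gr.ChatInterface` passes the handler the textbox value (a dict with `"text"` and `"files"` keys) plus the `history`. A minimal sketch of building a single-turn prompt from that value alone, assuming the prompt is then rendered with the processor's chat template, which for SmolVLM-style models accepts `{"type": "image"}` placeholders. The diff does not show how `model_inference` builds its prompt, and `build_messages` is a hypothetical name.

```python
from typing import Any, Dict, List


def build_messages(message: Dict[str, Any], history: List[Any]) -> List[Dict[str, Any]]:
    """Hypothetical helper: turn one MultimodalTextbox value into a single-turn chat."""
    del history  # accepted because ChatInterface passes it, but deliberately unused
    # One image placeholder per uploaded file, followed by the user's text.
    content: List[Dict[str, Any]] = [{"type": "image"} for _ in message.get("files", [])]
    content.append({"type": "text", "text": message.get("text", "")})
    return [{"role": "user", "content": content}]


msgs = build_messages({"text": "Describe this image.", "files": ["example_images/dogs.jpg"]}, history=[])
print(msgs[0]["content"])  # [{'type': 'image'}, {'type': 'text', 'text': 'Describe this image.'}]
```

Because the returned list never includes earlier turns, each submission is answered on its own, which matches what the new description tells users to expect.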

- example_images/campeones.jpg (ADDED)
- example_images/document.jpg (ADDED)
- example_images/docvqa_example.png (DELETED)
- example_images/dogs.jpg (ADDED)
- example_images/examples_wat_arun.jpg (DELETED; binary file, 786 kB)
- example_images/math.jpg (ADDED)
- example_images/newyork.jpg (ADDED)
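This commit deletes `docvqa_example.png` and `examples_wat_arun.jpg` and adds the images used by the new examples, while some new examples also reference files this commit does not touch (`examples_weather_events.png`, `rococo_1.jpg`, `s2w_example.png`), presumably already in the repository. A quick pre-launch check that every path in `examples` exists can catch a stale reference after files are renamed or deleted. A minimal sketch, where `missing_example_files` is a hypothetical helper and paths are assumed to be relative to the Space's root directory:

```python
import os
from typing import Any, Dict, List


def missing_example_files(examples: List[List[Dict[str, Any]]], root: str = ".") -> List[str]:
    """Return each referenced file path that does not exist under `root`, once, in order."""
    missing: List[str] = []
    for example in examples:          # each example is a one-element list ...
        for message in example:       # ... holding a {"text", "files"} dict
            for rel_path in message.get("files", []):
                if not os.path.isfile(os.path.join(root, rel_path)) and rel_path not in missing:
                    missing.append(rel_path)
    return missing


examples = [
    [{"text": "Describe this image.", "files": ["example_images/newyork.jpg"]}],
    [{"text": "What does this say?", "files": ["example_images/math.jpg"]}],
]
print(missing_example_files(examples))  # lists whichever of these files are absent
```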