duplicate from OCR

This view is limited to 50 files because it contains too many changes.

- Home.py +17 -0

- README.md +2 -1

- __pycache__/multipage.cpython-37.pyc +0 -0

- app_pages/.DS_Store +0 -0

- app_pages/__pycache__/about.cpython-37.pyc +0 -0

- app_pages/__pycache__/home.cpython-37.pyc +0 -0

- app_pages/__pycache__/ocr_comparator.cpython-37.pyc +0 -0

- app_pages/about.py +37 -0

- app_pages/home.py +19 -0

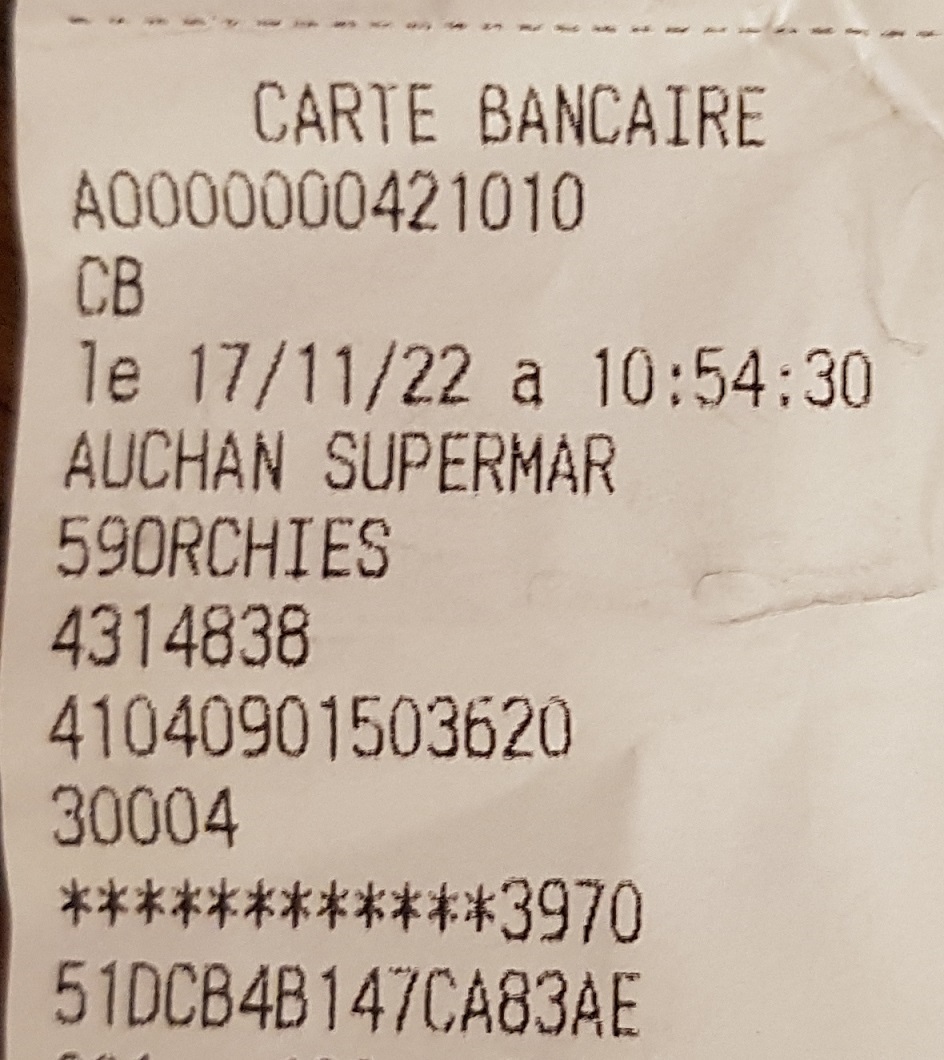

- app_pages/img_demo_1.jpg +0 -0

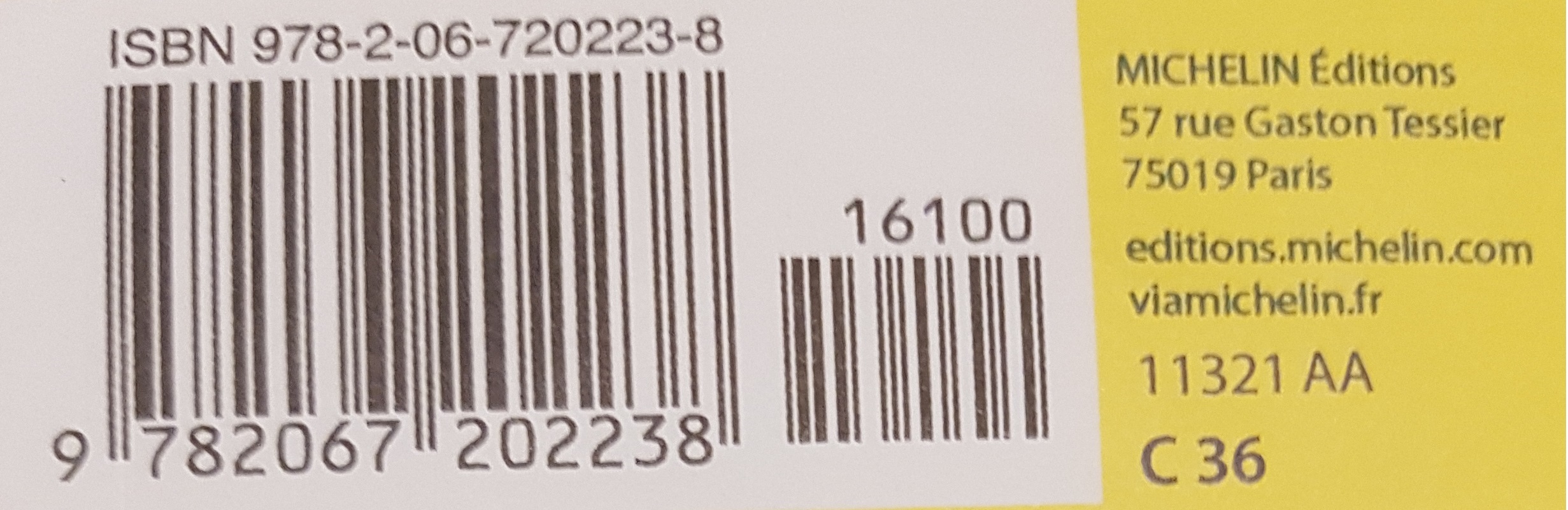

- app_pages/img_demo_2.jpg +0 -0

- app_pages/ocr.png +0 -0

- app_pages/ocr_comparator.py +1421 -0

- configs/_base_/default_runtime.py +17 -0

- configs/_base_/det_datasets/ctw1500.py +18 -0

- configs/_base_/det_datasets/icdar2015.py +18 -0

- configs/_base_/det_datasets/icdar2017.py +18 -0

- configs/_base_/det_datasets/synthtext.py +18 -0

- configs/_base_/det_datasets/toy_data.py +41 -0

- configs/_base_/det_models/dbnet_r18_fpnc.py +21 -0

- configs/_base_/det_models/dbnet_r50dcnv2_fpnc.py +23 -0

- configs/_base_/det_models/dbnetpp_r50dcnv2_fpnc.py +28 -0

- configs/_base_/det_models/drrg_r50_fpn_unet.py +21 -0

- configs/_base_/det_models/fcenet_r50_fpn.py +33 -0

- configs/_base_/det_models/fcenet_r50dcnv2_fpn.py +35 -0

- configs/_base_/det_models/ocr_mask_rcnn_r50_fpn_ohem.py +126 -0

- configs/_base_/det_models/ocr_mask_rcnn_r50_fpn_ohem_poly.py +126 -0

- configs/_base_/det_models/panet_r18_fpem_ffm.py +43 -0

- configs/_base_/det_models/panet_r50_fpem_ffm.py +21 -0

- configs/_base_/det_models/psenet_r50_fpnf.py +51 -0

- configs/_base_/det_models/textsnake_r50_fpn_unet.py +22 -0

- configs/_base_/det_pipelines/dbnet_pipeline.py +88 -0

- configs/_base_/det_pipelines/drrg_pipeline.py +60 -0

- configs/_base_/det_pipelines/fcenet_pipeline.py +118 -0

- configs/_base_/det_pipelines/maskrcnn_pipeline.py +57 -0

- configs/_base_/det_pipelines/panet_pipeline.py +156 -0

- configs/_base_/det_pipelines/psenet_pipeline.py +70 -0

- configs/_base_/det_pipelines/textsnake_pipeline.py +65 -0

- configs/_base_/recog_datasets/MJ_train.py +21 -0

- configs/_base_/recog_datasets/ST_MJ_alphanumeric_train.py +31 -0

- configs/_base_/recog_datasets/ST_MJ_train.py +29 -0

- configs/_base_/recog_datasets/ST_SA_MJ_real_train.py +81 -0

- configs/_base_/recog_datasets/ST_SA_MJ_train.py +48 -0

- configs/_base_/recog_datasets/ST_charbox_train.py +23 -0

- configs/_base_/recog_datasets/academic_test.py +57 -0

- configs/_base_/recog_datasets/seg_toy_data.py +34 -0

- configs/_base_/recog_datasets/toy_data.py +54 -0

- configs/_base_/recog_models/abinet.py +70 -0

- configs/_base_/recog_models/crnn.py +12 -0

- configs/_base_/recog_models/crnn_tps.py +18 -0

Home.py

ADDED

@@ -0,0 +1,17 @@

```python
import streamlit as st
from multipage import MultiPage
from app_pages import home, about, ocr_comparator

app = MultiPage()
st.set_page_config(
    page_title='OCR Comparator', layout="wide",
    initial_sidebar_state="expanded",
)

# Add all the application pages here
app.add_page("Home", "house", home.app)
app.add_page("About", "info-circle", about.app)
app.add_page("App", "cast", ocr_comparator.app)

# The main app
app.run()
```
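The `multipage` module imported by `Home.py` is not among the files shown in this view (only its compiled `.pyc` is). As a rough, hypothetical sketch of the pattern its calls suggest, a registry of `(title, icon, function)` entries plus a `run()` dispatcher, the class below assumes the selected page title is passed in directly; the real module most likely reads the selection from a Streamlit sidebar menu instead, and `MultiPageSketch` is an illustrative name.

```python
from typing import Callable, List, NamedTuple, Optional


class Page(NamedTuple):
    """One registered page: its menu title, an icon name and the function that renders it."""
    title: str
    icon: str
    func: Callable[[], object]


class MultiPageSketch:
    """Hypothetical stand-in for multipage.MultiPage: stores pages and runs the selected one."""

    def __init__(self) -> None:
        self.pages: List[Page] = []

    def add_page(self, title: str, icon: str, func: Callable[[], object]) -> None:
        # Pages are kept in registration order, which is also the menu order
        self.pages.append(Page(title, icon, func))

    def run(self, selected_title: Optional[str] = None):
        # Assumption: the selection comes from the caller instead of a Streamlit widget;
        # with no selection, fall back to the first registered page
        if not self.pages:
            raise RuntimeError("no page registered")
        if selected_title is None:
            return self.pages[0].func()
        for page in self.pages:
            if page.title == selected_title:
                return page.func()
        raise KeyError(f"unknown page: {selected_title!r}")


if __name__ == "__main__":
    app = MultiPageSketch()
    app.add_page("Home", "house", lambda: "home page")
    app.add_page("About", "info-circle", lambda: "about page")
    app.add_page("App", "cast", lambda: "OCR comparator")
    print(app.run())          # home page
    print(app.run("About"))   # about page
```

Keeping the pages in a plain list preserves registration order, so the menu lists Home, About and App in the order `Home.py` adds them.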

README.md

CHANGED

```diff
@@ -5,7 +5,8 @@ colorFrom: purple
 colorTo: green
 sdk: streamlit
 sdk_version: 1.27.2
-app_file:
+app_file: Home.py
+tags: [streamlit, ocr]
 pinned: false
 ---
 
```

__pycache__/multipage.cpython-37.pyc

ADDED

Binary file (2.65 kB).

app_pages/.DS_Store

ADDED

Binary file (6.15 kB).

app_pages/__pycache__/about.cpython-37.pyc

ADDED

Binary file (2.02 kB).

app_pages/__pycache__/home.cpython-37.pyc

ADDED

Binary file (889 Bytes).

app_pages/__pycache__/ocr_comparator.cpython-37.pyc

ADDED

Binary file (48.1 kB).

app_pages/about.py

ADDED

@@ -0,0 +1,37 @@

```python
import streamlit as st

def app():
    st.title("OCR solutions comparator")

    st.write("")
    st.write("")
    st.write("")

    st.markdown("##### This app allows you to compare, from a given picture, the results of different solutions:")
    st.markdown("##### *EasyOCR, PaddleOCR, MMOCR, Tesseract*")
    st.write("")
    st.write("")

    st.markdown(''' The 1st step is to choose the language for the text recognition (not all solutions \
support the same languages), and then choose the picture to consider. It is possible to upload a file, \
to take a picture, or to use a demo file. \
It is then possible to change the default values for the text area detection process, \
before launching the detection task for each solution.''')
    st.write("")

    st.markdown(''' The different results are then presented. The 2nd step is to choose one of these \
detection results, on which the text recognition process is then carried out. It is also possible to change \
the default settings for each solution.''')
    st.write("")

    st.markdown("###### The recognition results appear in 2 formats:")
    st.markdown(''' - a visual format reproduces the initial image, replacing the detected areas with \
the recognized text. The background is more or less strongly colored in green according to the \
confidence level of the recognition.
    A slider allows you to change the font size, another \
allows you to modify the confidence threshold at which the text color changes: if it is at \
70% for example, then all the texts with a confidence level greater than or equal to 70% will appear \
in white, in black otherwise.''')

    st.markdown(" - a detailed format presents the results in a table, for each text box detected. \
It is possible to download these results as a local CSV file.")
```
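The About page describes two color rules for the visual format: a green background whose intensity follows the recognition confidence, and a text color that switches from black to white at a user-chosen threshold. A minimal sketch of those two rules, assuming confidences on a 0-100 scale; the app itself takes its greens from the "Greens" colormap via `mycolorpy`, so the straight-line blend between the two end colors of the ColorBrewer "Greens" palette used here is only an approximation, and both function names are illustrative.

```python
def background_color(confidence: float) -> str:
    """Return a hex green whose darkness grows with confidence (0-100).

    Approximation of the "Greens" colormap: linear blend between its
    lightest and darkest colors, after clamping confidence to [0, 100].
    """
    c = min(max(confidence, 0.0), 100.0) / 100.0  # normalize to [0, 1]
    light = (247, 252, 245)  # #f7fcf5, low confidence
    dark = (0, 68, 27)       # #00441b, high confidence
    rgb = [round(lo + (hi - lo) * c) for lo, hi in zip(light, dark)]
    return "#{:02x}{:02x}{:02x}".format(*rgb)


def text_color(confidence: float, threshold: float) -> str:
    """White text at or above the threshold, black text below it (as the About page states)."""
    return "white" if confidence >= threshold else "black"


if __name__ == "__main__":
    for conf in (20, 70, 95):
        print(conf, background_color(conf), text_color(conf, threshold=70))
```

White text only becomes legible once the background is dark enough, which is why the threshold is exposed as a slider rather than fixed.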

app_pages/home.py

ADDED

@@ -0,0 +1,19 @@

```python
import streamlit as st

def app():
    st.image('ocr.png')

    st.write("")

    st.markdown('''#### OCR, or Optical Character Recognition, is a computer vision task, \
which includes the detection of text areas and the recognition of characters.''')
    st.write("")
    st.write("")

    st.markdown("##### This app allows you to compare, from a given image, the results of different solutions:")
    st.markdown("##### *EasyOCR, PaddleOCR, MMOCR, Tesseract*")
    st.write("")
    st.write("")
    st.markdown("👈 Select the **About** page from the sidebar for information on how the app works")

    st.markdown("👈 or directly select the **App** page")
```

app_pages/img_demo_1.jpg

ADDED

app_pages/img_demo_2.jpg

ADDED

app_pages/ocr.png

ADDED

app_pages/ocr_comparator.py

ADDED

@@ -0,0 +1,1421 @@

```python
"""This Streamlit app allows you to compare, from a given image, the results of different solutions:
    EasyOCR, PaddleOCR, MMOCR, Tesseract
"""
import streamlit as st
import plotly.express as px
import numpy as np
import math
import pandas as pd
from time import sleep

import cv2
from PIL import Image, ImageColor
import PIL
import easyocr
from paddleocr import PaddleOCR
from mmocr.utils.ocr import MMOCR
import pytesseract
from pytesseract import Output
import os
from mycolorpy import colorlist as mcp


###################################################################################################
## MAIN
###################################################################################################
def app():

    ###################################################################################################
    ## FUNCTIONS
    ###################################################################################################

    @st.cache
    def convert_df(in_df):
        """Convert a data frame to CSV, used by the download button

        Args:
            in_df (data frame): data frame to convert

        Returns:
            bytes: UTF-8 encoded CSV content of the data frame
        """
        # IMPORTANT: Cache the conversion to prevent computation on every rerun
        return in_df.to_csv().encode('utf-8')
```
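`convert_df` hands `st.download_button` bytes, not a data frame: `to_csv()` called without a path returns the CSV as a string, which is then UTF-8 encoded. A stdlib-only sketch of the same conversion for rows held as dicts, assuming pandas' default layout of an unnamed index column written first; `rows_to_csv_bytes` is a hypothetical helper, and pandas' exact quoting and line endings may differ from the `csv` module's.

```python
import csv
import io
from typing import Dict, List


def rows_to_csv_bytes(rows: List[Dict[str, object]]) -> bytes:
    """Serialize rows to UTF-8 CSV bytes, with a leading unnamed index column.

    Mimics the default DataFrame.to_csv() layout (index first, empty header
    cell above it); hypothetical helper, not part of the app.
    """
    if not rows:
        return b""
    columns = list(rows[0].keys())
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow([""] + columns)  # header row, empty cell for the index
    for index, row in enumerate(rows):
        writer.writerow([index] + [row.get(col, "") for col in columns])
    return buffer.getvalue().encode("utf-8")


if __name__ == "__main__":
    print(rows_to_csv_bytes([{"text": "Hello", "confidence": 98}]))
```

Returning bytes (rather than text) is what lets the download button serve the file without guessing an encoding.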

```python
    ###
    def easyocr_coord_convert(in_list_coord):
        """Convert EasyOCR coordinates to the standard format used by the other functions

        Args:
            in_list_coord (list of numbers): format [x_min, x_max, y_min, y_max]

        Returns:
            list of lists: format [ [x_min, y_min], [x_max, y_min], [x_max, y_max], [x_min, y_max] ]
        """

        coord = in_list_coord
        return [[coord[0], coord[2]], [coord[1], coord[2]], [coord[1], coord[3]], [coord[0], coord[3]]]
```
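Because `easyocr_coord_convert` is nested inside `app()`, it cannot be imported directly; the sketch below copies it out to show the conversion on concrete numbers, and adds a hypothetical inverse, `quad_to_easyocr_coord`, that is not part of the app. The inverse takes the min/max over the four corners, so for a rotated quadrilateral it returns the enclosing axis-aligned rectangle rather than the original box.

```python
from typing import List, Sequence


def easyocr_coord_convert(in_list_coord: Sequence[float]) -> List[List[float]]:
    """[x_min, x_max, y_min, y_max] -> four corners: top-left, top-right, bottom-right, bottom-left.

    Same logic as the app; with the image y axis pointing down, this order is clockwise on screen.
    """
    coord = in_list_coord
    return [[coord[0], coord[2]], [coord[1], coord[2]], [coord[1], coord[3]], [coord[0], coord[3]]]


def quad_to_easyocr_coord(quad: Sequence[Sequence[float]]) -> List[float]:
    """Hypothetical inverse: four [x, y] corners -> [x_min, x_max, y_min, y_max]."""
    xs = [point[0] for point in quad]
    ys = [point[1] for point in quad]
    return [min(xs), max(xs), min(ys), max(ys)]


if __name__ == "__main__":
    corners = easyocr_coord_convert([10, 50, 20, 40])
    print(corners)                         # [[10, 20], [50, 20], [50, 40], [10, 40]]
    print(quad_to_easyocr_coord(corners))  # [10, 50, 20, 40]
```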

| 59 |

+

###

|

| 60 |

+

@st.cache(show_spinner=False)

|

| 61 |

+

def initializations():

|

| 62 |

+

"""Initializations for the app

|

| 63 |

+

|

| 64 |

+

Returns:

|

| 65 |

+

list of strings : list of OCR solutions names

|

| 66 |

+

(['EasyOCR', 'PPOCR', 'MMOCR', 'Tesseract'])

|

| 67 |

+

dict : names and indices of the OCR solutions

|

| 68 |

+

({'EasyOCR': 0, 'PPOCR': 1, 'MMOCR': 2, 'Tesseract': 3})

|

| 69 |

+

list of dicts : list of languages supported by each OCR solution

|

| 70 |

+

list of int : columns for recognition details results

|

| 71 |

+

dict : confidence color scale

|

| 72 |

+

plotly figure : confidence color scale figure

|

| 73 |

+

"""

|

| 74 |

+

# the readers considered

|

| 75 |

+

out_reader_type_list = ['EasyOCR', 'PPOCR', 'MMOCR', 'Tesseract']

|

| 76 |

+

out_reader_type_dict = {'EasyOCR': 0, 'PPOCR': 1, 'MMOCR': 2, 'Tesseract': 3}

|

| 77 |

+

|

| 78 |

+

# Columns for recognition details results

|

| 79 |

+

out_cols_size = [2] + [2,1]*(len(out_reader_type_list)-1) # Except Tesseract

|

| 80 |

+

|

| 81 |

+

# Dicts of laguages supported by each reader

|

| 82 |

+

out_dict_lang_easyocr = {'Abaza': 'abq', 'Adyghe': 'ady', 'Afrikaans': 'af', 'Angika': 'ang', \

|

| 83 |

+

'Arabic': 'ar', 'Assamese': 'as', 'Avar': 'ava', 'Azerbaijani': 'az', 'Belarusian': 'be', \

|

| 84 |

+

'Bulgarian': 'bg', 'Bihari': 'bh', 'Bhojpuri': 'bho', 'Bengali': 'bn', 'Bosnian': 'bs', \

|

| 85 |

+

'Simplified Chinese': 'ch_sim', 'Traditional Chinese': 'ch_tra', 'Chechen': 'che', \

|

| 86 |

+

'Czech': 'cs', 'Welsh': 'cy', 'Danish': 'da', 'Dargwa': 'dar', 'German': 'de', \

|

| 87 |

+

'English': 'en', 'Spanish': 'es', 'Estonian': 'et', 'Persian (Farsi)': 'fa', 'French': 'fr', \

|

| 88 |

+

'Irish': 'ga', 'Goan Konkani': 'gom', 'Hindi': 'hi', 'Croatian': 'hr', 'Hungarian': 'hu', \

|

| 89 |

+

'Indonesian': 'id', 'Ingush': 'inh', 'Icelandic': 'is', 'Italian': 'it', 'Japanese': 'ja', \

|

| 90 |

+

'Kabardian': 'kbd', 'Kannada': 'kn', 'Korean': 'ko', 'Kurdish': 'ku', 'Latin': 'la', \

|

| 91 |

+

'Lak': 'lbe', 'Lezghian': 'lez', 'Lithuanian': 'lt', 'Latvian': 'lv', 'Magahi': 'mah', \

|

| 92 |

+

'Maithili': 'mai', 'Maori': 'mi', 'Mongolian': 'mn', 'Marathi': 'mr', 'Malay': 'ms', \

|

| 93 |

+

'Maltese': 'mt', 'Nepali': 'ne', 'Newari': 'new', 'Dutch': 'nl', 'Norwegian': 'no', \

|

| 94 |

+

'Occitan': 'oc', 'Pali': 'pi', 'Polish': 'pl', 'Portuguese': 'pt', 'Romanian': 'ro', \

|

| 95 |

+

'Russian': 'ru', 'Serbian (cyrillic)': 'rs_cyrillic', 'Serbian (latin)': 'rs_latin', \

|

| 96 |

+

'Nagpuri': 'sck', 'Slovak': 'sk', 'Slovenian': 'sl', 'Albanian': 'sq', 'Swedish': 'sv', \

|

| 97 |

+

'Swahili': 'sw', 'Tamil': 'ta', 'Tabassaran': 'tab', 'Telugu': 'te', 'Thai': 'th', \

|

| 98 |

+

'Tajik': 'tjk', 'Tagalog': 'tl', 'Turkish': 'tr', 'Uyghur': 'ug', 'Ukranian': 'uk', \

|

| 99 |

+

'Urdu': 'ur', 'Uzbek': 'uz', 'Vietnamese': 'vi'}

|

| 100 |

+

|

| 101 |

+

out_dict_lang_ppocr = {'Abaza': 'abq', 'Adyghe': 'ady', 'Afrikaans': 'af', 'Albanian': 'sq', \

|

| 102 |

+

'Angika': 'ang', 'Arabic': 'ar', 'Avar': 'ava', 'Azerbaijani': 'az', 'Belarusian': 'be', \

|

| 103 |

+

'Bhojpuri': 'bho','Bihari': 'bh','Bosnian': 'bs','Bulgarian': 'bg','Chinese & English': 'ch', \

|

| 104 |

+

'Chinese Traditional': 'chinese_cht', 'Croatian': 'hr', 'Czech': 'cs', 'Danish': 'da', \

|

| 105 |

+

'Dargwa': 'dar', 'Dutch': 'nl', 'English': 'en', 'Estonian': 'et', 'French': 'fr', \

|

| 106 |

+

'German': 'german','Goan Konkani': 'gom','Hindi': 'hi','Hungarian': 'hu','Icelandic': 'is', \

|

| 107 |

+

'Indonesian': 'id', 'Ingush': 'inh', 'Irish': 'ga', 'Italian': 'it', 'Japan': 'japan', \

|

| 108 |

+

'Kabardian': 'kbd', 'Korean': 'korean', 'Kurdish': 'ku', 'Lak': 'lbe', 'Latvian': 'lv', \

|

| 109 |

+

'Lezghian': 'lez', 'Lithuanian': 'lt', 'Magahi': 'mah', 'Maithili': 'mai', 'Malay': 'ms', \

|

| 110 |

+

'Maltese': 'mt', 'Maori': 'mi', 'Marathi': 'mr', 'Mongolian': 'mn', 'Nagpur': 'sck', \

|

| 111 |

+

'Nepali': 'ne', 'Newari': 'new', 'Norwegian': 'no', 'Occitan': 'oc', 'Persian': 'fa', \

|

| 112 |

+

'Polish': 'pl', 'Portuguese': 'pt', 'Romanian': 'ro', 'Russia': 'ru', 'Saudi Arabia': 'sa', \

|

| 113 |

+

'Serbian(cyrillic)': 'rs_cyrillic', 'Serbian(latin)': 'rs_latin', 'Slovak': 'sk', \

|

| 114 |

+

'Slovenian': 'sl', 'Spanish': 'es', 'Swahili': 'sw', 'Swedish': 'sv', 'Tabassaran': 'tab', \

|

| 115 |

+

'Tagalog': 'tl', 'Tamil': 'ta', 'Telugu': 'te', 'Turkish': 'tr', 'Ukranian': 'uk', \

|

| 116 |

+

'Urdu': 'ur', 'Uyghur': 'ug', 'Uzbek': 'uz', 'Vietnamese': 'vi', 'Welsh': 'cy'}

|

| 117 |

+

|

| 118 |

+

out_dict_lang_mmocr = {'English & Chinese': 'en'}

|

| 119 |

+

|

| 120 |

+

out_dict_lang_tesseract = {'Afrikaans': 'afr','Albanian': 'sqi','Amharic': 'amh', \

|

| 121 |

+

'Arabic': 'ara', 'Armenian': 'hye','Assamese': 'asm','Azerbaijani - Cyrilic': 'aze_cyrl', \

|

| 122 |

+

'Azerbaijani': 'aze', 'Basque': 'eus','Belarusian': 'bel','Bengali': 'ben','Bosnian': 'bos', \

|

| 123 |

+

'Breton': 'bre', 'Bulgarian': 'bul','Burmese': 'mya','Catalan; Valencian': 'cat', \

|

| 124 |

+

'Cebuano': 'ceb', 'Central Khmer': 'khm','Cherokee': 'chr','Chinese - Simplified': 'chi_sim', \

|

| 125 |

+

'Chinese - Traditional': 'chi_tra','Corsican': 'cos','Croatian': 'hrv','Czech': 'ces', \

|

| 126 |

+

'Danish':'dan','Dutch; Flemish':'nld','Dzongkha':'dzo','English, Middle (1100-1500)':'enm', \

|

| 127 |

+

'English': 'eng','Esperanto': 'epo','Estonian': 'est','Faroese': 'fao', \

|

| 128 |

+

'Filipino (old - Tagalog)': 'fil','Finnish': 'fin','French, Middle (ca.1400-1600)': 'frm', \

|

| 129 |

+

'French': 'fra','Galician': 'glg','Georgian - Old': 'kat_old','Georgian': 'kat', \

|

| 130 |

+

'German - Fraktur': 'frk','German': 'deu','Greek, Modern (1453-)': 'ell','Gujarati': 'guj', \

|

| 131 |

+

'Haitian; Haitian Creole': 'hat','Hebrew': 'heb','Hindi': 'hin','Hungarian': 'hun', \

|

| 132 |

+

'Icelandic': 'isl','Indonesian': 'ind','Inuktitut': 'iku','Irish': 'gle', \

|

| 133 |

+

'Italian - Old': 'ita_old','Italian': 'ita','Japanese': 'jpn','Javanese': 'jav', \

|

| 134 |

+

'Kannada': 'kan','Kazakh': 'kaz','Kirghiz; Kyrgyz': 'kir','Korean (vertical)': 'kor_vert', \

|

| 135 |

+

'Korean': 'kor','Kurdish (Arabic Script)': 'kur_ara','Lao': 'lao','Latin': 'lat', \

|

| 136 |

+

'Latvian':'lav','Lithuanian':'lit','Luxembourgish':'ltz','Macedonian':'mkd','Malay':'msa', \

|

| 137 |

+

'Malayalam': 'mal','Maltese': 'mlt','Maori': 'mri','Marathi': 'mar','Mongolian': 'mon', \

|

| 138 |

+

'Nepali': 'nep','Norwegian': 'nor','Occitan (post 1500)': 'oci', \

|

| 139 |

+

'Orientation and script detection module':'osd','Oriya':'ori','Panjabi; Punjabi':'pan', \

|

| 140 |

+

'Persian':'fas','Polish':'pol','Portuguese':'por','Pushto; Pashto':'pus','Quechua':'que', \

|

| 141 |

+

'Romanian; Moldavian; Moldovan': 'ron','Russian': 'rus','Sanskrit': 'san', \

|

| 142 |

+

'Scottish Gaelic': 'gla','Serbian - Latin': 'srp_latn','Serbian': 'srp','Sindhi': 'snd', \

|

| 143 |

+

'Sinhala; Sinhalese': 'sin','Slovak': 'slk','Slovenian': 'slv', \

|

| 144 |

+

'Spanish; Castilian - Old': 'spa_old','Spanish; Castilian': 'spa','Sundanese': 'sun', \

|

| 145 |

+

'Swahili': 'swa','Swedish': 'swe','Syriac': 'syr','Tajik': 'tgk','Tamil': 'tam', \

|

| 146 |

+

'Tatar':'tat','Telugu':'tel','Thai':'tha','Tibetan':'bod','Tigrinya':'tir','Tonga':'ton', \

|

| 147 |

+

'Turkish': 'tur','Uighur; Uyghur': 'uig','Ukrainian': 'ukr','Urdu': 'urd', \

|

| 148 |

+

'Uzbek - Cyrilic': 'uzb_cyrl','Uzbek': 'uzb','Vietnamese': 'vie','Welsh': 'cym', \

|

| 149 |

+

'Western Frisian': 'fry','Yiddish': 'yid','Yoruba': 'yor'}

|

| 150 |

+

|

| 151 |

+

out_list_dict_lang = [out_dict_lang_easyocr, out_dict_lang_ppocr, out_dict_lang_mmocr, \

|

| 152 |

+

out_dict_lang_tesseract]

|

| 153 |

+

|

    # Initialization of detection form
    if 'columns_size' not in st.session_state:
        st.session_state.columns_size = [2] + [1 for x in out_reader_type_list[1:]]
    if 'column_width' not in st.session_state:
        st.session_state.column_width = [400] + [300 for x in out_reader_type_list[1:]]
    if 'columns_color' not in st.session_state:
        st.session_state.columns_color = ["rgb(228,26,28)"] + \
                                         ["rgb(0,0,0)" for x in out_reader_type_list[1:]]
    if 'list_coordinates' not in st.session_state:
        st.session_state.list_coordinates = []

    # Confidence color scale
    out_list_confid = list(np.arange(0,101,1))
    out_list_grad = mcp.gen_color_normalized(cmap="Greens",data_arr=np.array(out_list_confid))
    out_dict_back_colors = {out_list_confid[i]: out_list_grad[i] \
                            for i in range(len(out_list_confid))}

    list_y = [1 for i in out_list_confid]
    df_confid = pd.DataFrame({'% confidence scale': out_list_confid, 'y': list_y})

    out_fig = px.scatter(df_confid, x='% confidence scale', y='y', \
                         hover_data={'% confidence scale': True, 'y': False},
                         color=out_dict_back_colors.values(), range_y=[0.9,1.1], range_x=[0,100],
                         color_discrete_map="identity",height=50,symbol='y',symbol_sequence=['square'])
    out_fig.update_xaxes(showticklabels=False)
    out_fig.update_yaxes(showticklabels=False, range=[0.1, 1.1], visible=False)
    out_fig.update_traces(marker_size=50)
    out_fig.update_layout(paper_bgcolor="white", margin=dict(b=0,r=0,t=0,l=0), xaxis_side="top", \
                          showlegend=False)

    return out_reader_type_list, out_reader_type_dict, out_list_dict_lang, \
           out_cols_size, out_dict_back_colors, out_fig
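The confidence color scale above assigns each integer confidence from 0 to 100 a shade of the "Greens" colormap through `mcp.gen_color_normalized`. The sketch below shows the same mapping without dependencies. It assumes a plain linear blend between a very light green and a dark green, with endpoints picked to resemble the two ends of "Greens", so it does not reproduce that palette exactly. `build_confidence_colors` is a hypothetical helper, not part of the app.

```python
def build_confidence_colors(low=(247, 252, 245), high=(0, 68, 27), steps=101):
    """Map each integer confidence 0..steps-1 to an 'rgb(r,g,b)' string.

    Hypothetical helper: linearly interpolates between two endpoint colors,
    an approximation of a sequential colormap such as "Greens".
    """
    colors = {}
    for conf in range(steps):
        t = conf / (steps - 1)  # 0.0 at 0 %, 1.0 at 100 %
        r, g, b = (round(lo + (hi - lo) * t) for lo, hi in zip(low, high))
        colors[conf] = f"rgb({r},{g},{b})"
    return colors


colors = build_confidence_colors()
print(colors[0], colors[100])  # rgb(247,252,245) rgb(0,68,27)
```

As in the app, the dict is keyed by the integer confidence, so a box's background color can be looked up with the rounded confidence value.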

###
@st.experimental_memo(show_spinner=False)
def init_easyocr(in_params):
    """Initialize the EasyOCR reader

    Args:
        in_params (list): list with the language code

    Returns:
        easyocr reader: the EasyOCR reader instance
    """
    out_ocr = easyocr.Reader(in_params)
    return out_ocr

###
@st.cache(show_spinner=False)
def init_ppocr(in_params):
    """Initialize the PPOCR (PaddleOCR) reader

    Args:
        in_params (list): [language, dict of PaddleOCR parameters]

    Returns:
        ppocr reader: the PaddleOCR reader instance
    """
    out_ocr = PaddleOCR(lang=in_params[0], **in_params[1])
    return out_ocr

###
@st.experimental_memo(show_spinner=False)
def init_mmocr(in_params):
    """Initialize the MMOCR reader (detection only, recog=None)

    Args:
        in_params (list): list whose element at index 1 is the dict of MMOCR parameters

    Returns:
        mmocr reader: the MMOCR reader instance
    """
    out_ocr = MMOCR(recog=None, **in_params[1])
    return out_ocr

###
def init_readers(in_list_params):
    """Initialize the readers and return them as a list

    Args:
        in_list_params (list): list of parameters for each reader

    Returns:
        list: list of the reader instances
    """
    # Instantiate the readers:
    # - EasyOCR
    with st.spinner("EasyOCR reader initialization in progress ..."):
        reader_easyocr = init_easyocr([in_list_params[0][0]])

    # - PPOCR (PaddleOCR)
    with st.spinner("PPOCR reader initialization in progress ..."):
        reader_ppocr = init_ppocr(in_list_params[1])

    # - MMOCR
    with st.spinner("MMOCR reader initialization in progress ..."):
        reader_mmocr = init_mmocr(in_list_params[2])

    out_list_readers = [reader_easyocr, reader_ppocr, reader_mmocr]

    return out_list_readers

###
def load_image(in_image_file):
    """Load the input file and open it

    Args:
        in_image_file (string or Streamlit UploadedFile): image to consider

    Returns:
        string : path of the locally saved copy ("tmp_" + original file name)
        PIL.Image : input file opened with Pillow
        matrix : input file opened with OpenCV, converted to RGB
    """

    #if isinstance(in_image_file, str):
    #    out_image_path = "img."+in_image_file.split('.')[-1]
    #else:
    #    out_image_path = "img."+in_image_file.name.split('.')[-1]

    if isinstance(in_image_file, str):
        out_image_path = "tmp_"+in_image_file
    else:
        out_image_path = "tmp_"+in_image_file.name

    # Save a local copy (Image.save returns None, so there is nothing to keep)
    img = Image.open(in_image_file)
    img.save(out_image_path)

    # Read image
    out_image_orig = Image.open(out_image_path)
    out_image_cv2 = cv2.cvtColor(cv2.imread(out_image_path), cv2.COLOR_BGR2RGB)

    return out_image_path, out_image_orig, out_image_cv2
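`load_image` names the local copy by putting `"tmp_"` in front of the input name. If a caller passes a string path that includes a directory, such as a demo image under `app_pages/`, the prefix attaches to the directory name instead. `img.save` would then write into a folder that may not exist. The sketch below assumes that only the file's base name should get the prefix. `make_tmp_path` and its `tmp_dir` parameter are hypothetical and do not exist in the app.

```python
import os


def make_tmp_path(name, prefix="tmp_", tmp_dir="."):
    """Return tmp_dir/prefix+basename(name) for a local copy of an image.

    Hypothetical helper: keeps only the file's base name so that a path such
    as 'app_pages/img_demo_1.jpg' does not become 'tmp_app_pages/...'.
    """
    return os.path.join(tmp_dir, prefix + os.path.basename(name))


print(make_tmp_path("app_pages/img_demo_1.jpg"))  # ./tmp_img_demo_1.jpg (on POSIX)
```

For a Streamlit `UploadedFile`, the same helper would be called with `in_image_file.name`.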

###
@st.experimental_memo(show_spinner=False)
def easyocr_detect(_in_reader, in_image_path, in_params):
    """Detection with EasyOCR

    Args:
        _in_reader (EasyOCR reader) : the previously initialized instance
        in_image_path (string) : locally saved image path
        in_params (list) : list whose element at index 1 is the dict of detection parameters

    Returns:
        list : list of the boxes coordinates
        exception on error, string 'OK' otherwise
    """
    try:
        dict_param = in_params[1]
        detection_result = _in_reader.detect(in_image_path,
                                             #width_ths=0.7,
                                             #mag_ratio=1.5
                                             **dict_param
                                             )
        # Horizontal boxes of the (single) input image
        easyocr_coordinates = detection_result[0][0]

        # The format of the coordinate is as follows: [x_min, x_max, y_min, y_max]
        # Format boxes coordinates for draw
        out_easyocr_boxes_coordinates = list(map(easyocr_coord_convert, easyocr_coordinates))
        out_status = 'OK'
    except Exception as e:
        out_easyocr_boxes_coordinates = []
        out_status = e

    return out_easyocr_boxes_coordinates, out_status
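`easyocr_coord_convert` is defined elsewhere in the file and does not appear in this section. The sketch below assumes it only rearranges EasyOCR's horizontal box `[x_min, x_max, y_min, y_max]` into four `[x, y]` corners. The corner order is assumed to match the `box4` layout built in `mmocr_detect`: top-left, top-right, bottom-right, bottom-left. The name `xyxy_to_quad` is hypothetical.

```python
def xyxy_to_quad(coord):
    """Convert [x_min, x_max, y_min, y_max] into four [x, y] corners.

    Hypothetical stand-in for easyocr_coord_convert; corner order is
    top-left, top-right, bottom-right, bottom-left.
    """
    x_min, x_max, y_min, y_max = coord
    return [[x_min, y_min], [x_max, y_min], [x_max, y_max], [x_min, y_max]]


boxes = list(map(xyxy_to_quad, [[10, 50, 5, 20]]))
print(boxes)  # [[[10, 5], [50, 5], [50, 20], [10, 20]]]
```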

###
@st.experimental_memo(show_spinner=False)
def ppocr_detect(_in_reader, in_image_path):
    """Detection with PPOCR

    Args:
        _in_reader (PPOCR reader) : the previously initialized instance
        in_image_path (string) : locally saved image path

    Returns:
        list : list of the boxes coordinates
        exception on error, string 'OK' otherwise
    """
    # PPOCR detection method (rec=False: detection only, no recognition)
    try:
        out_ppocr_boxes_coordinates = _in_reader.ocr(in_image_path, rec=False)
        out_status = 'OK'
    except Exception as e:
        out_ppocr_boxes_coordinates = []
        out_status = e

    return out_ppocr_boxes_coordinates, out_status
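In the detection functions, the reader parameter is named `_in_reader`. Streamlit's memo caches leave any parameter whose name starts with an underscore out of the cache key. That lets an unhashable reader object be passed in while results stay cached per image path (and parameters). The pure-Python decorator below only imitates that rule to show the idea. `memo_skip_underscore` is hypothetical and is not how Streamlit implements its cache.

```python
import functools
import inspect


def memo_skip_underscore(func):
    """Cache results keyed on arguments, ignoring parameters named '_...'.

    Hypothetical illustration of the underscore rule; the remaining
    arguments must be hashable (e.g. str, tuple).
    """
    sig = inspect.signature(func)
    cache = {}

    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        bound = sig.bind(*args, **kwargs)
        bound.apply_defaults()
        # Build the key from every argument except the underscore-prefixed ones
        key = tuple((name, value) for name, value in bound.arguments.items()
                    if not name.startswith("_"))
        if key not in cache:
            cache[key] = func(*args, **kwargs)
        return cache[key]

    return wrapper


calls = []


@memo_skip_underscore
def fake_detect(_in_reader, in_image_path):
    calls.append(in_image_path)
    return f"boxes for {in_image_path}"


fake_detect(object(), "tmp_a.jpg")
fake_detect(object(), "tmp_a.jpg")  # different reader object, same key: cached
print(len(calls))  # 1
```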

###
@st.experimental_memo(show_spinner=False)
def mmocr_detect(_in_reader, in_image_path):
    """Detection with MMOCR

    Args:
        _in_reader (MMOCR reader) : the previously initialized instance
        in_image_path (string) : locally saved image path

    Returns:
        list : list of the boxes coordinates
        exception on error, string 'OK' otherwise
    """
    # MMOCR detection method
    out_mmocr_boxes_coordinates = []
    try:
        det_result = _in_reader.readtext(in_image_path, details=True)
        bboxes_list = [res['boundary_result'] for res in det_result]
        for bboxes in bboxes_list:
            for bbox in bboxes:
                # bbox = [x1, y1, ..., xn, yn, score]: the last element is skipped
                if len(bbox) > 9:
                    min_x = min(bbox[0:-1:2])
                    min_y = min(bbox[1:-1:2])
                    max_x = max(bbox[0:-1:2])
                    max_y = max(bbox[1:-1:2])
                    #box = [min_x, min_y, max_x, min_y, max_x, max_y, min_x, max_y]
                else:
                    min_x = min(bbox[0:-1:2])
                    min_y = min(bbox[1::2])
                    max_x = max(bbox[0:-1:2])
                    max_y = max(bbox[1::2])
                box4 = [ [min_x, min_y], [max_x, min_y], [max_x, max_y], [min_x, max_y] ]
                out_mmocr_boxes_coordinates.append(box4)
        out_status = 'OK'
    except Exception as e:
        out_status = e

    return out_mmocr_boxes_coordinates, out_status
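The slices in `mmocr_detect` stop before the last element because each `boundary_result` entry is taken to be a flat list of polygon vertices followed by a score. When that trailing score is present (odd length), the two branches give the same axis-aligned box. The sketch below folds that reduction into one function under the same assumption. `flat_polygon_to_box4` is a hypothetical name.

```python
def flat_polygon_to_box4(bbox):
    """Reduce [x1, y1, ..., xn, yn, score] to an axis-aligned 4-corner box.

    Hypothetical helper; assumes the last element is a score and drops it.
    """
    coords = bbox[:-1]                      # strip the trailing score
    xs, ys = coords[0::2], coords[1::2]     # even indices are x, odd are y
    min_x, max_x = min(xs), max(xs)
    min_y, max_y = min(ys), max(ys)
    return [[min_x, min_y], [max_x, min_y], [max_x, max_y], [min_x, max_y]]


print(flat_polygon_to_box4([3, 4, 9, 2, 10, 8, 1, 7, 0.95]))
# [[1, 2], [10, 2], [10, 8], [1, 8]]
```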

###
def cropped_1box(in_box, in_img):
    """Construct a cropped image corresponding to an area of the initial image

    Args:
        in_box (list) : box with coordinates
        in_img (matrix) : image

    Returns:
        matrix : cropped image
    """
    box_ar = np.array(in_box).astype(np.int64)
    x_min = box_ar[:, 0].min()