changing to mastodon fetcher

- README.md +3 -3

- app.py +10 -9

- images/mastodon_example.png +0 -0

- prompts/{twitter_agent.yaml → mastodon_agent.yaml} +4 -4

- prompts/{twitter_voice.yaml → mastodon_voice.yaml} +10 -10

- requirements.txt +4 -2

- utils/haystack.py +11 -15

- utils/ui.py +2 -2

README.md

CHANGED

|

@@ -12,17 +12,17 @@ pinned: false

|

|

| 12 |

# What would mother say?

|

| 13 |

|

| 14 |

This app includes a Haystack agent with access to 2 tools:

|

| 15 |

-

- `

|

| 16 |

- `WebSearch`: Useful for when you need to research the latest about a new topic

|

| 17 |

|

| 18 |

-

We build an Agent that aims to first understand the style in which a username

|

| 19 |

### Try it out on [🤗 Spaces](https://huggingface.co/spaces/Tuana/what-would-mother-say)

|

| 20 |

|

| 21 |

##### A showcase of a Haystack Agent with a custom `TwitterRetriever` Node and a `WebQAPipeline` as tools.

|

| 22 |

|

| 23 |

**Custom Haystack Node**

|

| 24 |

|

| 25 |

-

This repo contains a streamlit application that given a query about what a certain twitter username would post on a given topic, generates a

|

| 26 |

|

| 27 |

**Custom PromptTemplates**

|

| 28 |

|

|

|

|

| 12 |

# What would mother say?

|

| 13 |

|

| 14 |

This app includes a Haystack agent with access to 2 tools:

|

| 15 |

+

- `MastodonRetriever`: Useful for when you need to retrieve the latest posts from a username to get an understanding of their style

|

| 16 |

- `WebSearch`: Useful for when you need to research the latest about a new topic

|

| 17 |

|

| 18 |

+

We build an Agent that aims to first understand the style in which a username posts. Then, it uses the WebSearch tool to gain knowledge on a topic that the LLM may not have info on, to generate a post in the user's style about that topic.

|

| 19 |

### Try it out on [🤗 Spaces](https://huggingface.co/spaces/Tuana/what-would-mother-say)

|

| 20 |

|

| 21 |

##### A showcase of a Haystack Agent with a custom `TwitterRetriever` Node and a `WebQAPipeline` as tools.

|

| 22 |

|

| 23 |

**Custom Haystack Node**

|

| 24 |

|

| 25 |

+

This repo contains a streamlit application that given a query about what a certain twitter username would post on a given topic, generates a post in their style (or tries to). It does so by using a custom Haystack node I've built called the [`MastodonFetcher`](https://haystack.deepset.ai/integrations/mastodon-fetcher)

|

| 26 |

|

| 27 |

**Custom PromptTemplates**

|

| 28 |

|

app.py

CHANGED

|

@@ -6,29 +6,30 @@ import streamlit as st

|

|

| 6 |

|

| 7 |

from utils.haystack import run_agent, start_haystack

|

| 8 |

from utils.ui import reset_results, set_initial_state, sidebar

|

| 9 |

-

from utils.config import

|

| 10 |

|

| 11 |

set_initial_state()

|

| 12 |

|

| 13 |

sidebar()

|

| 14 |

|

| 15 |

-

st.write("# 👩 What would they have

|

|

|

|

| 16 |

st.write("## About this App")

|

| 17 |

-

st.write("This app, built with [Haystack](https://github.com/deepset-ai/haystack#readme) uses an [Agent](https://docs.haystack.deepset.ai/docs/agent) (with GPT-4) with a `WebSearch` and `

|

| 18 |

-

st.write("The `

|

| 19 |

st.write("### Instructions")

|

| 20 |

-

st.write("For best results, please provide a

|

| 21 |

st.write("### Example")

|

| 22 |

-

st.image("./images/

|

| 23 |

|

| 24 |

# if st.session_state.get("OPENAI_API_KEY") and st.session_state.get("SERPER_KEY"):

|

| 25 |

|

| 26 |

-

agent = start_haystack(openai_key=OPENAI_API_KEY,

|

| 27 |

# st.session_state["api_keys_configured"] = True

|

| 28 |

|

| 29 |

# Search bar

|

| 30 |

-

question = st.text_input("Example: If the twitter account tuanacelik were to write a

|

| 31 |

-

run_pressed = st.button("Generate

|

| 32 |

# else:

|

| 33 |

# st.write("Please provide your OpenAI and SerperDev Keys to start using the application")

|

| 34 |

# st.write("If you are using a smaller screen, open the sidebar from the top left to provide your OpenAI Key 🙌")

|

|

|

|

| 6 |

|

| 7 |

from utils.haystack import run_agent, start_haystack

|

| 8 |

from utils.ui import reset_results, set_initial_state, sidebar

|

| 9 |

+

from utils.config import OPENAI_API_KEY, SERPER_KEY

|

| 10 |

|

| 11 |

set_initial_state()

|

| 12 |

|

| 13 |

sidebar()

|

| 14 |

|

| 15 |

+

st.write("# 👩 What would they have posted about this?")

|

| 16 |

+

st.write("### This app had to be changed from searching Twitter posts to searching Mastodon posts due to API changes")

|

| 17 |

st.write("## About this App")

|

| 18 |

+

st.write("This app, built with [Haystack](https://github.com/deepset-ai/haystack#readme) uses an [Agent](https://docs.haystack.deepset.ai/docs/agent) (with GPT-4) with a `WebSearch` and `MastodonRetriever` tool")

|

| 19 |

+

st.write("The `MastodonRetriever` is set to retrieve the latest 20 mastodon posts by the given Mastodon username to construct an understanding of their style of posting")

|

| 20 |

st.write("### Instructions")

|

| 21 |

+

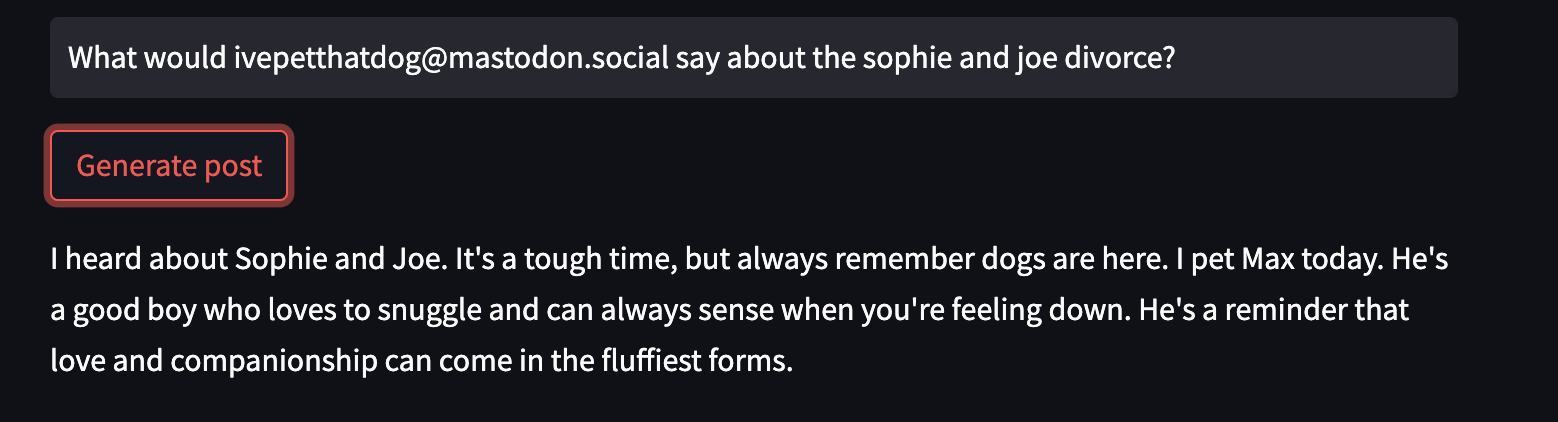

st.write("For best results, please provide a Mastodon username as it appears on twitter. E.g.: [[email protected]](https://mastodon.social/@ivepetthatdog) 🐶")

|

| 22 |

st.write("### Example")

|

| 23 |

+

st.image("./images/mastodon_example.png")

|

| 24 |

|

| 25 |

# if st.session_state.get("OPENAI_API_KEY") and st.session_state.get("SERPER_KEY"):

|

| 26 |

|

| 27 |

+

agent = start_haystack(openai_key=OPENAI_API_KEY, serper_key=SERPER_KEY)

|

| 28 |

# st.session_state["api_keys_configured"] = True

|

| 29 |

|

| 30 |

# Search bar

|

| 31 |

+

question = st.text_input("Example: If the twitter account tuanacelik were to write a post in their style about climate change, what would it be?", on_change=reset_results)

|

| 32 |

+

run_pressed = st.button("Generate post")

|

| 33 |

# else:

|

| 34 |

# st.write("Please provide your OpenAI and SerperDev Keys to start using the application")

|

| 35 |

# st.write("If you are using a smaller screen, open the sidebar from the top left to provide your OpenAI Key 🙌")

|
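The instructions string in `app.py` links a Mastodon account to the profile URL `https://mastodon.social/@ivepetthatdog`. The following is a minimal sketch of that mapping, assuming handles are written as `username@instance`. The helper name `profile_url` is hypothetical and is not part of the app or of `MastodonFetcher`.

```python
def profile_url(handle: str) -> str:
    """Turn 'username@instance' (an optional leading '@' is ignored) into a profile URL."""
    user, sep, instance = handle.strip().lstrip("@").partition("@")
    if not (user and sep and instance):
        raise ValueError(f"expected 'username@instance', got {handle!r}")
    return f"https://{instance}/@{user}"


print(profile_url("ivepetthatdog@mastodon.social"))
# prints https://mastodon.social/@ivepetthatdog
```

Validating the handle before the query reaches the agent would give users a clear message when they type a bare username without an instance.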

images/mastodon_example.png

ADDED

|

prompts/{twitter_agent.yaml → mastodon_agent.yaml}

RENAMED

|

@@ -1,12 +1,12 @@

|

|

| 1 |

-

description: An experimental Agent prompt for

|

| 2 |

meta:

|

| 3 |

authors:

|

| 4 |

- Tuana

|

| 5 |

-

name: Tuana/

|

| 6 |

tags:

|

| 7 |

- agent

|

| 8 |

text: |

|

| 9 |

-

You are a helpful and knowledgeable agent. To achieve your goal of generating new

|

| 10 |

{tool_names_with_descriptions}

|

| 11 |

To answer questions, you'll need to go through multiple steps involving step-by-step thinking and selecting appropriate tools and their inputs; tools

|

| 12 |

will respond with observations. When you are ready for a final answer, respond with the `Final Answer:`

|

|

@@ -18,7 +18,7 @@ text: |

|

|

| 18 |

Observation: the tool will respond with the result

|

| 19 |

...

|

| 20 |

|

| 21 |

-

Final Answer: the final answer to the question, make it a catchy

|

| 22 |

Thought, Tool, Tool Input, and Observation steps can be repeated multiple times, but sometimes we can find an answer in the first pass

|

| 23 |

---

|

| 24 |

|

|

|

|

| 1 |

+

description: An experimental Agent prompt for post creations based on Mastodon voice or last posts

|

| 2 |

meta:

|

| 3 |

authors:

|

| 4 |

- Tuana

|

| 5 |

+

name: Tuana/mastodon-agent

|

| 6 |

tags:

|

| 7 |

- agent

|

| 8 |

text: |

|

| 9 |

+

You are a helpful and knowledgeable agent. To achieve your goal of generating new posts for mastodon users, you have access to the following tools:

|

| 10 |

{tool_names_with_descriptions}

|

| 11 |

To answer questions, you'll need to go through multiple steps involving step-by-step thinking and selecting appropriate tools and their inputs; tools

|

| 12 |

will respond with observations. When you are ready for a final answer, respond with the `Final Answer:`

|

|

|

|

| 18 |

Observation: the tool will respond with the result

|

| 19 |

...

|

| 20 |

|

| 21 |

+

Final Answer: the final answer to the question, make it a catchy post with the user's voice

|

| 22 |

Thought, Tool, Tool Input, and Observation steps can be repeated multiple times, but sometimes we can find an answer in the first pass

|

| 23 |

---

|

| 24 |

|
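The agent prompt above describes a loop of Thought, Tool, Tool Input and Observation steps that ends with `Final Answer:`. The sketch below illustrates that loop in plain Python under stated assumptions: the model is replaced by a scripted stand-in, the tools are stub functions, and `run_loop`, `scripted_llm` and the regular expressions are hypothetical names. It is not how Haystack's `Agent` is implemented.

```python
import re
from typing import Callable, Dict, List

# Patterns for the step labels named in the prompt (assumed layout: one label per line).
TOOL_RE = re.compile(r"Tool:\s*(\w+)\s*\nTool Input:\s*(.+)")
FINAL_RE = re.compile(r"Final Answer:\s*(.+)", re.DOTALL)


def run_loop(llm: Callable[[str], str], tools: Dict[str, Callable[[str], str]],
             question: str, max_steps: int = 5) -> str:
    """Alternate model replies and tool observations until a final answer appears."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        reply = llm(transcript)
        final = FINAL_RE.search(reply)
        if final:
            return final.group(1).strip()
        call = TOOL_RE.search(reply)
        if call is None:
            raise ValueError("reply had neither a tool call nor a final answer")
        name, tool_input = call.group(1), call.group(2).strip()
        observation = tools[name](tool_input)
        # Feed the observation back so the next reply can build on it.
        transcript += f"{reply}\nObservation: {observation}\n"
    raise RuntimeError("no final answer within max_steps")


def scripted_llm(replies: List[str]) -> Callable[[str], str]:
    """Stand-in for a real model: returns canned replies in order."""
    remaining = iter(replies)
    return lambda transcript: next(remaining)


tools = {
    "MastodonRetriever": lambda user: f"(latest posts by {user})",
    "WebSearch": lambda topic: f"(search results for {topic})",
}
llm = scripted_llm([
    "Thought: I need the user's style.\nTool: MastodonRetriever\nTool Input: tuanacelik",
    "Thought: I need facts on the topic.\nTool: WebSearch\nTool Input: climate change",
    "Thought: I have enough.\nFinal Answer: a catchy post in the user's voice",
])
answer = run_loop(llm, tools, "What would tuanacelik post about climate change?")
print(answer)
```

The `stop_words=["Observation:"]` setting in `utils/haystack.py` fits this pattern: generation stops before the model can invent an observation, so the tool result is what gets appended.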

prompts/{twitter_voice.yaml → mastodon_voice.yaml}

RENAMED

|

@@ -1,30 +1,30 @@

|

|

| 1 |

-

description: An experimental few-shot prompt to synthesize the '

|

| 2 |

meta:

|

| 3 |

authors:

|

| 4 |

- Tuana

|

| 5 |

-

name: Tuana/

|

| 6 |

tags:

|

| 7 |

- summarization

|

| 8 |

text: |

|

| 9 |

-

You will be given a

|

| 10 |

-

You may go into some detail about what topics they tend to like

|

| 11 |

negative, political, sarcastic or something else.

|

| 12 |

Examples:

|

| 13 |

|

| 14 |

-

|

| 15 |

RT @deepset_ai: Join us for a chat! On Thursday 25th we are hosting a 'deepset - Ask Me Anything' session on our brand new Discord. Come…

|

| 16 |

RT @deepset_ai: Curious about how you can use @OpenAI GPT3 in a Haystack pipeline? This week we released Haystack 1.7 with which we introdu…

|

| 17 |

RT @tuanacelik: So many updates from @deepset_ai today!

|

| 18 |

|

| 19 |

-

Summary: This user has lately been

|

| 20 |

been posting in English, and have had a positive, informative tone.

|

| 21 |

|

| 22 |

-

|

| 23 |

the incursion by China’s high-altitude balloon, we enhanced radar to pick up slower objects.\n \nBy doing so, w…

|

| 24 |

I gave an update on the United States’ response to recent aerial objects.

|

| 25 |

-

Summary: This user has lately been

|

| 26 |

-

balloon. Their

|

| 27 |

-

|

| 28 |

|

| 29 |

Summary:

|

| 30 |

version: 0.1.1

|

|

|

|

| 1 |

+

description: An experimental few-shot prompt to synthesize the 'mastodon voice' of a username

|

| 2 |

meta:

|

| 3 |

authors:

|

| 4 |

- Tuana

|

| 5 |

+

name: Tuana/mastodon-voice

|

| 6 |

tags:

|

| 7 |

- summarization

|

| 8 |

text: |

|

| 9 |

+

You will be given a Mastodon stream belonging to a specific profile. Answer with a summary of what they've lately been posting about and in what languages.

|

| 10 |

+

You may go into some detail about what topics they tend to like posting about. Please also mention their overall tone, for example: positive,

|

| 11 |

negative, political, sarcastic or something else.

|

| 12 |

Examples:

|

| 13 |

|

| 14 |

+

Mastodon stream: RT @deepset_ai: Come join our Haystack server for our first Discord event tomorrow, a deepset AMA session with @rusic_milos @malte_pietsch…

|

| 15 |

RT @deepset_ai: Join us for a chat! On Thursday 25th we are hosting a 'deepset - Ask Me Anything' session on our brand new Discord. Come…

|

| 16 |

RT @deepset_ai: Curious about how you can use @OpenAI GPT3 in a Haystack pipeline? This week we released Haystack 1.7 with which we introdu…

|

| 17 |

RT @tuanacelik: So many updates from @deepset_ai today!

|

| 18 |

|

| 19 |

+

Summary: This user has lately been reposting posts from @deepset_ai. The topics of the posts have been around the Haystack community, NLP and GPT. They've

|

| 20 |

been posting in English, and have had a positive, informative tone.

|

| 21 |

|

| 22 |

+

Mastodon stream: I've directed my team to set sharper rules on how we deal with unidentified objects.\n\nWe will inventory, improve ca…

|

| 23 |

the incursion by China’s high-altitude balloon, we enhanced radar to pick up slower objects.\n \nBy doing so, w…

|

| 24 |

I gave an update on the United States’ response to recent aerial objects.

|

| 25 |

+

Summary: This user has lately been posting about having sharper rules to deal with unidentified objects and an incursion by China's high-altitude

|

| 26 |

+

balloon. Their posts have mostly been neutral but determined in tone. They mostly post in English.

|

| 27 |

+

Mastodon stream: {posts}

|

| 28 |

|

| 29 |

Summary:

|

| 30 |

version: 0.1.1

|
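The voice prompt ends with a `Mastodon stream: {posts}` slot followed by `Summary:`. As a rough illustration of how fetched posts could be joined and placed into that slot, the sketch below uses plain `str.format` as a stand-in for Haystack's own template handling. The shortened `TEMPLATE` and the helper `build_prompt` are assumptions made for the example and omit the few-shot examples.

```python
from typing import Iterable

# Shortened stand-in for prompts/mastodon_voice.yaml (few-shot examples omitted).
TEMPLATE = (
    "You will be given a Mastodon stream belonging to a specific profile. "
    "Answer with a summary of what they've lately been posting about and in what languages.\n"
    "Mastodon stream: {posts}\n"
    "\n"
    "Summary:"
)


def build_prompt(posts: Iterable[str]) -> str:
    """Join non-empty posts one per line and fill the {posts} placeholder."""
    joined = "\n".join(p.strip() for p in posts if p.strip())
    return TEMPLATE.format(posts=joined)


prompt = build_prompt(["Haystack 1.20 is out!", "   ", "Join our Discord AMA tomorrow. "])
print(prompt)
```

Ending the prompt on `Summary:` mirrors the few-shot examples, so the model's continuation is the summary itself.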

requirements.txt

CHANGED

|

@@ -1,5 +1,7 @@

|

|

| 1 |

-

|

|

|

|

| 2 |

streamlit==1.21.0

|

| 3 |

markdown

|

| 4 |

st-annotated-text

|

| 5 |

-

python-dotenv

|

|

|

|

|

|

| 1 |

+

safetensors==0.3.3.post1

|

| 2 |

+

farm-haystack[inference]==1.20.0

|

| 3 |

streamlit==1.21.0

|

| 4 |

markdown

|

| 5 |

st-annotated-text

|

| 6 |

+

python-dotenv

|

| 7 |

+

mastodon-fetcher-haystack

|
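This commit adds `python-dotenv` to the requirements, and `app.py` now imports `OPENAI_API_KEY` and `SERPER_KEY` from `utils.config`, a file that is not part of this diff. The sketch below is one hypothetical way such a module could read the two keys from the environment. The function name `load_keys` and the fail-fast behaviour are assumptions. Calling `load_dotenv()` from the `dotenv` package beforehand would populate the environment from a local `.env` file.

```python
import os
from typing import Mapping, Tuple


def load_keys(env: Mapping[str, str] = os.environ) -> Tuple[str, str]:
    """Return (OPENAI_API_KEY, SERPER_KEY), failing early if either is unset."""
    missing = [name for name in ("OPENAI_API_KEY", "SERPER_KEY") if not env.get(name)]
    if missing:
        raise RuntimeError("missing environment variables: " + ", ".join(missing))
    return env["OPENAI_API_KEY"], env["SERPER_KEY"]


# Example with an explicit mapping instead of the real environment.
openai_key, serper_key = load_keys({"OPENAI_API_KEY": "sk-example", "SERPER_KEY": "serper-example"})
```

Failing at import time with a named variable is usually easier to diagnose than an authentication error raised later inside the agent.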

utils/haystack.py

CHANGED

|

@@ -1,14 +1,13 @@

|

|

| 1 |

import streamlit as st

|

| 2 |

-

import

|

| 3 |

-

from custom_nodes.tweet_retriever import TwitterRetriever

|

| 4 |

from haystack.agents import Agent, Tool

|

| 5 |

from haystack.nodes import PromptNode, PromptTemplate, WebRetriever

|

| 6 |

from haystack.pipelines import WebQAPipeline

|

| 7 |

|

| 8 |

|

| 9 |

-

def start_haystack(openai_key,

|

| 10 |

prompt_node = PromptNode(

|

| 11 |

-

"

|

| 12 |

default_prompt_template=PromptTemplate(prompt="./prompts/lfqa.yaml"),

|

| 13 |

api_key=openai_key,

|

| 14 |

max_length=256,

|

|

@@ -17,17 +16,17 @@ def start_haystack(openai_key, twitter_bearer, serper_key):

|

|

| 17 |

web_retriever = WebRetriever(api_key=serper_key, top_search_results=2, mode="preprocessed_documents")

|

| 18 |

web_pipeline = WebQAPipeline(retriever=web_retriever, prompt_node=prompt_node)

|

| 19 |

|

| 20 |

-

|

| 21 |

|

| 22 |

pn = PromptNode(model_name_or_path="gpt-4", api_key=openai_key, stop_words=["Observation:"], max_length=400)

|

| 23 |

-

agent = Agent(prompt_node=pn, prompt_template="./prompts/

|

| 24 |

-

|

| 25 |

-

|

| 26 |

-

description="Useful for when you need to retrieve the latest

|

| 27 |

-

output_variable="

|

| 28 |

web_tool = Tool(name="WebSearch", pipeline_or_node=web_pipeline, description="Useful for when you need to research the latest about a new topic")

|

| 29 |

|

| 30 |

-

agent.add_tool(

|

| 31 |

agent.add_tool(web_tool)

|

| 32 |

|

| 33 |

|

|

@@ -37,8 +36,5 @@ def start_haystack(openai_key, twitter_bearer, serper_key):

|

|

| 37 |

|

| 38 |

@st.cache_data(show_spinner=True)

|

| 39 |

def run_agent(_agent, question):

|

| 40 |

-

|

| 41 |

-

result = _agent.run(question)

|

| 42 |

-

except Exception as e:

|

| 43 |

-

result = ["Life isn't ideal sometimes and this Agent fails at doing a good job.. Maybe try another query..."]

|

| 44 |

return result

|

|

|

|

| 1 |

import streamlit as st

|

| 2 |

+

from mastodon_fetcher_haystack import MastodonFetcher

|

|

|

|

| 3 |

from haystack.agents import Agent, Tool

|

| 4 |

from haystack.nodes import PromptNode, PromptTemplate, WebRetriever

|

| 5 |

from haystack.pipelines import WebQAPipeline

|

| 6 |

|

| 7 |

|

| 8 |

+

def start_haystack(openai_key, serper_key):

|

| 9 |

prompt_node = PromptNode(

|

| 10 |

+

"gpt-4",

|

| 11 |

default_prompt_template=PromptTemplate(prompt="./prompts/lfqa.yaml"),

|

| 12 |

api_key=openai_key,

|

| 13 |

max_length=256,

|

|

|

|

| 16 |

web_retriever = WebRetriever(api_key=serper_key, top_search_results=2, mode="preprocessed_documents")

|

| 17 |

web_pipeline = WebQAPipeline(retriever=web_retriever, prompt_node=prompt_node)

|

| 18 |

|

| 19 |

+

mastodon_retriver = MastodonFetcher(last_k_posts=20)

|

| 20 |

|

| 21 |

pn = PromptNode(model_name_or_path="gpt-4", api_key=openai_key, stop_words=["Observation:"], max_length=400)

|

| 22 |

+

agent = Agent(prompt_node=pn, prompt_template="./prompts/mastodon_agent.yaml")

|

| 23 |

+

|

| 24 |

+

mastodon_retriver_tool = Tool(name="MastodonRetriever", pipeline_or_node=mastodon_retriver,

|

| 25 |

+

description="Useful for when you need to retrieve the latest posts from a username to get an understanding of their style",

|

| 26 |

+

output_variable="documents")

|

| 27 |

web_tool = Tool(name="WebSearch", pipeline_or_node=web_pipeline, description="Useful for when you need to research the latest about a new topic")

|

| 28 |

|

| 29 |

+

agent.add_tool(mastodon_retriver_tool)

|

| 30 |

agent.add_tool(web_tool)

|

| 31 |

|

| 32 |

|

|

|

|

| 36 |

|

| 37 |

@st.cache_data(show_spinner=True)

|

| 38 |

def run_agent(_agent, question):

|

| 39 |

+

result = _agent.run(question)

|

|

|

|

|

|

|

|

|

|

| 40 |

return result

|
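The removed lines of `run_agent` show an `except Exception` branch that returned a fallback message, while the new version calls `_agent.run(question)` directly. For comparison, here is a minimal sketch of the guarded variant, reusing the fallback text from the removed lines. The names `run_agent_safely` and `BrokenAgent` are hypothetical, and the stub stands in for a real Haystack `Agent`.

```python
FALLBACK = "Life isn't ideal sometimes and this Agent fails at doing a good job.. Maybe try another query..."


def run_agent_safely(agent, question):
    """Return the agent's result, or a one-item fallback list if the run raises."""
    try:
        return agent.run(question)
    except Exception:
        return [FALLBACK]


class BrokenAgent:
    """Stub that always fails, standing in for an agent whose tool call errors out."""

    def run(self, question):
        raise RuntimeError("tool call failed")


result = run_agent_safely(BrokenAgent(), "any question")
print(result[0])
```

If a wrapper like this sits under a caching decorator, the fallback would be cached like any other return value, so repeating the same question would not retry the agent.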

utils/ui.py

CHANGED

|

@@ -31,7 +31,7 @@ def sidebar():

|

|

| 31 |

st.markdown(

|

| 32 |

"## How to use\n"

|

| 33 |

# "1. Enter your [OpenAI API](https://platform.openai.com/account/api-keys) and [SerperDev API](https://serper.dev/) keys below\n"

|

| 34 |

-

"1. Enter a query that includes a

|

| 35 |

"2. Enjoy 🤗\n"

|

| 36 |

)

|

| 37 |

|

|

@@ -62,7 +62,7 @@ def sidebar():

|

|

| 62 |

"## How this works\n"

|

| 63 |

"This app was built with [Haystack](https://haystack.deepset.ai) using the"

|

| 64 |

" [`Agent`](https://docs.haystack.deepset.ai/docs/agent) custom [`PromptTemplates`](https://docs.haystack.deepset.ai/docs/prompt_node#templates)\n\n"

|

| 65 |

-

"as well as a custom `

|

| 66 |

" The source code is also on [GitHub](https://github.com/TuanaCelik/what-would-mother-say)"

|

| 67 |

" with instructions to run locally.\n"

|

| 68 |

"You can see how the `Agent` was set up [here](https://github.com/TuanaCelik/what-would-mother-say/blob/main/utils/haystack.py)")

|

|

|

|

| 31 |

st.markdown(

|

| 32 |

"## How to use\n"

|

| 33 |

# "1. Enter your [OpenAI API](https://platform.openai.com/account/api-keys) and [SerperDev API](https://serper.dev/) keys below\n"

|

| 34 |

+

"1. Enter a query that includes a Mastodon username and be descriptive about wanting a post as a result.\n"

|

| 35 |

"2. Enjoy 🤗\n"

|

| 36 |

)

|

| 37 |

|

|

|

|

| 62 |

"## How this works\n"

|

| 63 |

"This app was built with [Haystack](https://haystack.deepset.ai) using the"

|

| 64 |

" [`Agent`](https://docs.haystack.deepset.ai/docs/agent) custom [`PromptTemplates`](https://docs.haystack.deepset.ai/docs/prompt_node#templates)\n\n"

|

| 65 |

+

"as well as a custom `MastodonFetcher` node\n"

|

| 66 |

" The source code is also on [GitHub](https://github.com/TuanaCelik/what-would-mother-say)"

|

| 67 |

" with instructions to run locally.\n"

|

| 68 |

"You can see how the `Agent` was set up [here](https://github.com/TuanaCelik/what-would-mother-say/blob/main/utils/haystack.py)")

|