Spaces:

Runtime error

Runtime error

Commit

·

181722d

1

Parent(s):

5f3ae13

Upload 89 files

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- .gitattributes +1 -0

- CODEOWNERS +2 -0

- LICENSE.txt +14 -0

- MANIFEST.in +7 -0

- MiniGPT_4.pdf +3 -0

- README.md +139 -12

- app.py +146 -0

- create_align_dataset.py +134 -0

- dataset/convert_cc_sbu.py +20 -0

- dataset/convert_laion.py +20 -0

- dataset/download_cc_sbu.sh +6 -0

- dataset/download_laion.sh +6 -0

- dataset/readme.md +92 -0

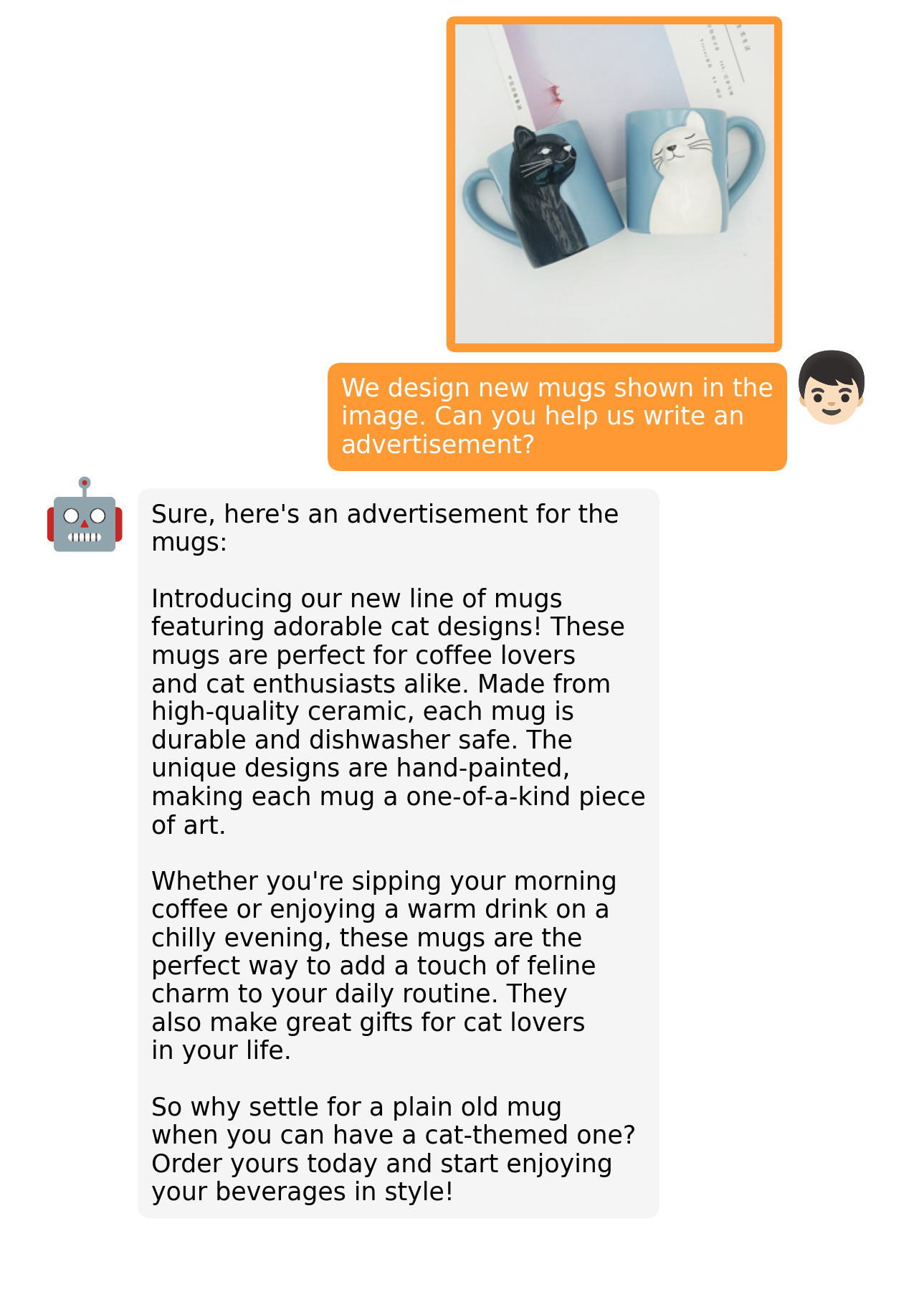

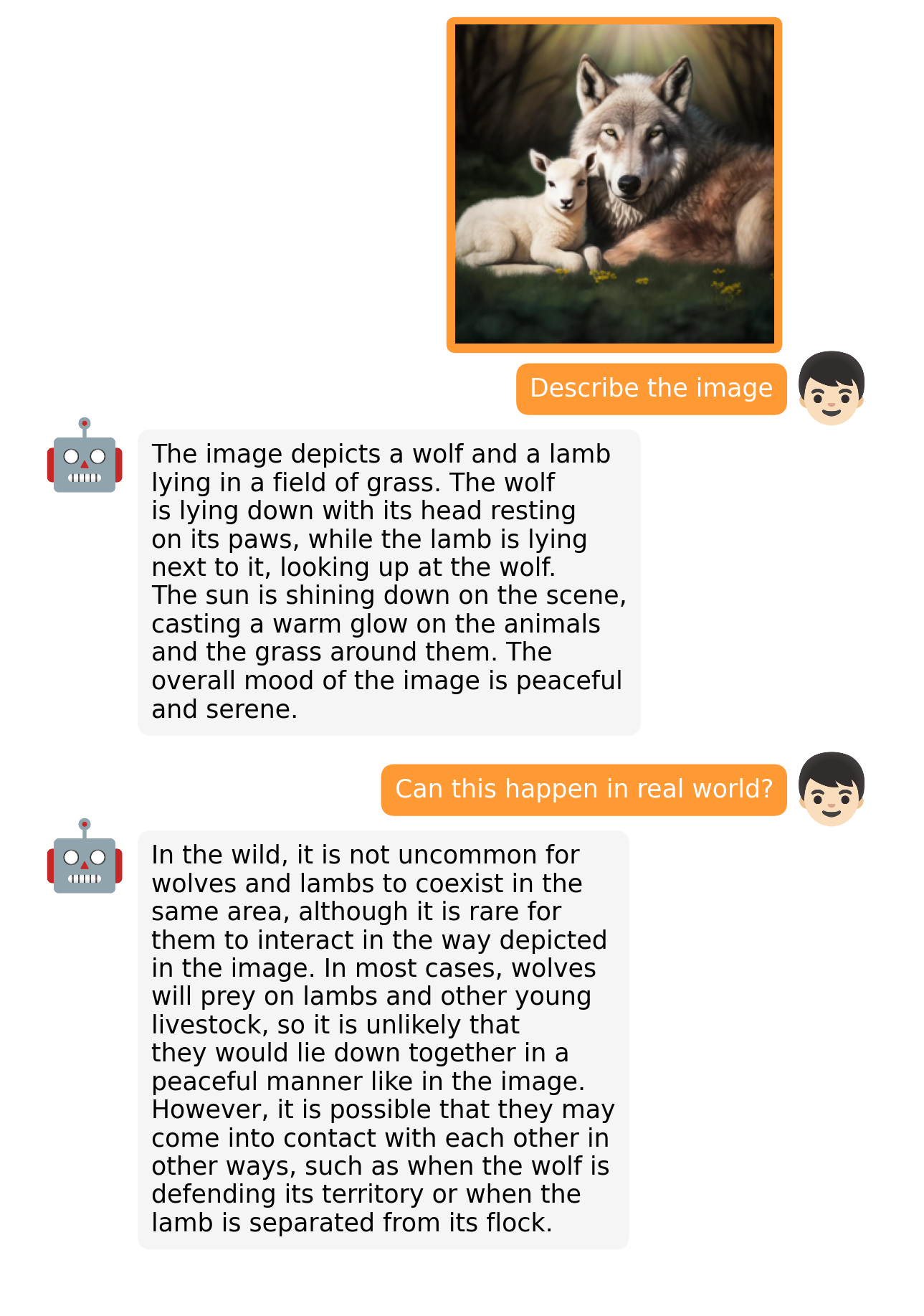

- demo_dev.ipynb +0 -0

- develop.ipynb +929 -0

- environment.yml +56 -0

- eval_configs/minigpt4.yaml +30 -0

- examples/ad_1.png +0 -0

- examples/ad_2.png +0 -0

- examples/cook_1.png +0 -0

- examples/cook_2.png +0 -0

- examples/describe_1.png +0 -0

- examples/describe_2.png +0 -0

- examples/fact_1.png +0 -0

- examples/fact_2.png +0 -0

- examples/fix_1.png +0 -0

- examples/fix_2.png +0 -0

- examples/fun_1.png +0 -0

- examples/fun_2.png +0 -0

- examples/logo_1.png +0 -0

- examples/op_1.png +0 -0

- examples/op_2.png +0 -0

- examples/people_1.png +0 -0

- examples/people_2.png +0 -0

- examples/rhyme_1.png +0 -0

- examples/rhyme_2.png +0 -0

- examples/story_1.png +0 -0

- examples/story_2.png +0 -0

- examples/web_1.png +0 -0

- examples/wop_1.png +0 -0

- examples/wop_2.png +0 -0

- minigpt4/__init__.py +31 -0

- minigpt4/common/__init__.py +0 -0

- minigpt4/common/config.py +468 -0

- minigpt4/common/dist_utils.py +137 -0

- minigpt4/common/gradcam.py +24 -0

- minigpt4/common/logger.py +195 -0

- minigpt4/common/optims.py +119 -0

- minigpt4/common/registry.py +329 -0

- minigpt4/common/utils.py +424 -0

.gitattributes

CHANGED

|

@@ -32,3 +32,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 32 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 33 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

| 32 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 33 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 35 |

+

MiniGPT_4.pdf filter=lfs diff=lfs merge=lfs -text

|

CODEOWNERS

ADDED

|

@@ -0,0 +1,2 @@

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Comment line immediately above ownership line is reserved for related gus information. Please be careful while editing.

|

| 2 |

+

#ECCN:Open Source

|

LICENSE.txt

ADDED

|

@@ -0,0 +1,14 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

BSD 3-Clause License

|

| 2 |

+

|

| 3 |

+

Copyright (c) 2022 Salesforce, Inc.

|

| 4 |

+

All rights reserved.

|

| 5 |

+

|

| 6 |

+

Redistribution and use in source and binary forms, with or without modification, are permitted provided that the following conditions are met:

|

| 7 |

+

|

| 8 |

+

1. Redistributions of source code must retain the above copyright notice, this list of conditions and the following disclaimer.

|

| 9 |

+

|

| 10 |

+

2. Redistributions in binary form must reproduce the above copyright notice, this list of conditions and the following disclaimer in the documentation and/or other materials provided with the distribution.

|

| 11 |

+

|

| 12 |

+

3. Neither the name of Salesforce.com nor the names of its contributors may be used to endorse or promote products derived from this software without specific prior written permission.

|

| 13 |

+

|

| 14 |

+

THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS" AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

|

MANIFEST.in

ADDED

|

@@ -0,0 +1,7 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

recursive-include minigpt4/configs *.yaml *.json

|

| 2 |

+

recursive-include minigpt4/projects *.yaml *.json

|

| 3 |

+

|

| 4 |

+

recursive-exclude minigpt4/datasets/download_scripts *

|

| 5 |

+

recursive-exclude minigpt4/output *

|

| 6 |

+

|

| 7 |

+

include requirements.txt

|

MiniGPT_4.pdf

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:5ef8de6eeefee0dcf33dea53e8de2a884939dc20617362052232e7a223941260

|

| 3 |

+

size 6614913

|

README.md

CHANGED

|

@@ -1,12 +1,139 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

|

| 4 |

-

|

| 5 |

-

|

| 6 |

-

|

| 7 |

-

|

| 8 |

-

|

| 9 |

-

|

| 10 |

-

|

| 11 |

-

|

| 12 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# MiniGPT-4: Enhancing Vision-language Understanding with Advanced Large Language Models

|

| 2 |

+

[Deyao Zhu](https://tsutikgiau.github.io/)* (On Job Market!), [Jun Chen](https://junchen14.github.io/)* (On Job Market!), Xiaoqian Shen, Xiang Li, and Mohamed Elhoseiny. *Equal Contribution

|

| 3 |

+

|

| 4 |

+

**King Abdullah University of Science and Technology**

|

| 5 |

+

|

| 6 |

+

[[Project Website]](https://minigpt-4.github.io/) [[Paper]](MiniGPT_4.pdf) [Online Demo]

|

| 7 |

+

|

| 8 |

+

|

| 9 |

+

## Online Demo

|

| 10 |

+

|

| 11 |

+

Chat with MiniGPT-4 around your images

|

| 12 |

+

|

| 13 |

+

|

| 14 |

+

## Examples

|

| 15 |

+

| | |

|

| 16 |

+

:-------------------------:|:-------------------------:

|

| 17 |

+

|

|

| 18 |

+

|

|

| 19 |

+

|

| 20 |

+

|

| 21 |

+

|

| 22 |

+

|

| 23 |

+

|

| 24 |

+

## Abstract

|

| 25 |

+

The recent GPT-4 has demonstrated extraordinary multi-modal abilities, such as directly generating websites from handwritten text and identifying humorous elements within images. These features are rarely observed in previous vision-language models. We believe the primary reason for GPT-4's advanced multi-modal generation capabilities lies in the utilization of a more advanced large language model (LLM). To examine this phenomenon, we present MiniGPT-4, which aligns a frozen visual encoder with a frozen LLM, Vicuna, using just one projection layer.

|

| 26 |

+

Our findings reveal that MiniGPT-4 processes many capabilities similar to those exhibited by GPT-4 like detailed image description generation and website creation from hand-written drafts. Furthermore, we also observe other emerging capabilities in MiniGPT-4, including writing stories and poems inspired by given images, providing solutions to problems shown in images, teaching users how to cook based on food photos, etc.

|

| 27 |

+

These advanced capabilities can be attributed to the use of a more advanced large language model.

|

| 28 |

+

Furthermore, our method is computationally efficient, as we only train a projection layer using roughly 5 million aligned image-text pairs and an additional 3,500 carefully curated high-quality pairs.

|

| 29 |

+

|

| 30 |

+

|

| 31 |

+

|

| 32 |

+

|

| 33 |

+

|

| 34 |

+

|

| 35 |

+

|

| 36 |

+

|

| 37 |

+

## Getting Started

|

| 38 |

+

### Installation

|

| 39 |

+

|

| 40 |

+

1. Prepare the code and the environment

|

| 41 |

+

|

| 42 |

+

Git clone our repository, creating a python environment and ativate it via the following command

|

| 43 |

+

|

| 44 |

+

```bash

|

| 45 |

+

git clone https://github.com/Vision-CAIR/MiniGPT-4.git

|

| 46 |

+

cd MiniGPT-4

|

| 47 |

+

conda env create -f environment.yml

|

| 48 |

+

conda activate minigpt4

|

| 49 |

+

```

|

| 50 |

+

|

| 51 |

+

|

| 52 |

+

2. Prepare the pretrained Vicuna weights

|

| 53 |

+

|

| 54 |

+

The current version of MiniGPT-4 is built on the v0 versoin of Vicuna-13B.

|

| 55 |

+

Please refer to their instructions [here](https://huggingface.co/lmsys/vicuna-13b-delta-v0) to obtaining the weights.

|

| 56 |

+

The final weights would be in a single folder with the following structure:

|

| 57 |

+

|

| 58 |

+

```

|

| 59 |

+

vicuna_weights

|

| 60 |

+

├── config.json

|

| 61 |

+

├── generation_config.json

|

| 62 |

+

├── pytorch_model.bin.index.json

|

| 63 |

+

├── pytorch_model-00001-of-00003.bin

|

| 64 |

+

...

|

| 65 |

+

```

|

| 66 |

+

|

| 67 |

+

Then, set the path to the vicuna weight in the model config file

|

| 68 |

+

[here](minigpt4/configs/models/minigpt4.yaml#L21) at Line 21.

|

| 69 |

+

|

| 70 |

+

3. Prepare the pretrained MiniGPT-4 checkpoint

|

| 71 |

+

|

| 72 |

+

To play with our pretrained model, download the pretrained checkpoint

|

| 73 |

+

[here](https://drive.google.com/file/d/1a4zLvaiDBr-36pasffmgpvH5P7CKmpze/view?usp=share_link).

|

| 74 |

+

Then, set the path to the pretrained checkpoint in the evaluation config file

|

| 75 |

+

in [eval_configs/minigpt4.yaml](eval_configs/minigpt4.yaml#L15) at Line 15.

|

| 76 |

+

|

| 77 |

+

|

| 78 |

+

|

| 79 |

+

|

| 80 |

+

|

| 81 |

+

### Launching Demo Locally

|

| 82 |

+

|

| 83 |

+

Try out our demo [demo.py](app.py) with your images for on your local machine by running

|

| 84 |

+

|

| 85 |

+

```

|

| 86 |

+

python demo.py --cfg-path eval_configs/minigpt4.yaml

|

| 87 |

+

```

|

| 88 |

+

|

| 89 |

+

|

| 90 |

+

|

| 91 |

+

|

| 92 |

+

|

| 93 |

+

### Training

|

| 94 |

+

The training of MiniGPT-4 contains two-stage alignments.

|

| 95 |

+

In the first stage, the model is trained using image-text pairs from Laion and CC datasets

|

| 96 |

+

to align the vision and language model. To download and prepare the datasets, please check

|

| 97 |

+

[here](dataset/readme.md).

|

| 98 |

+

After the first stage, the visual features are mapped and can be understood by the language

|

| 99 |

+

model.

|

| 100 |

+

To launch the first stage training, run

|

| 101 |

+

|

| 102 |

+

```bash

|

| 103 |

+

torchrun --nproc-per-node NUM_GPU train.py --cfg-path train_config/minigpt4_stage1_laion.yaml

|

| 104 |

+

```

|

| 105 |

+

|

| 106 |

+

In the second stage, we use a small high quality image-text pair dataset created by ourselves

|

| 107 |

+

and convert it to a conversation format to further align MiniGPT-4.

|

| 108 |

+

Our second stage dataset can be download from

|

| 109 |

+

[here](https://drive.google.com/file/d/1RnS0mQJj8YU0E--sfH08scu5-ALxzLNj/view?usp=share_link).

|

| 110 |

+

After the second stage alignment, MiniGPT-4 is able to talk about the image in

|

| 111 |

+

a smooth way.

|

| 112 |

+

To launch the second stage alignment, run

|

| 113 |

+

|

| 114 |

+

```bash

|

| 115 |

+

torchrun --nproc-per-node NUM_GPU train.py --cfg-path train_config/minigpt4_stage2_align.yaml

|

| 116 |

+

```

|

| 117 |

+

|

| 118 |

+

|

| 119 |

+

|

| 120 |

+

|

| 121 |

+

|

| 122 |

+

## Acknowledgement

|

| 123 |

+

|

| 124 |

+

+ [BLIP2](https://huggingface.co/docs/transformers/main/model_doc/blip-2)

|

| 125 |

+

+ [Vicuna](https://github.com/lm-sys/FastChat)

|

| 126 |

+

|

| 127 |

+

|

| 128 |

+

If you're using MiniGPT-4 in your research or applications, please cite using this BibTeX:

|

| 129 |

+

```bibtex

|

| 130 |

+

@misc{zhu2022minigpt4,

|

| 131 |

+

title={MiniGPT-4: Enhancing the Vision-language Understanding with Advanced Large Language Models},

|

| 132 |

+

author={Deyao Zhu and Jun Chen and Xiaoqian Shen and xiang Li and Mohamed Elhoseiny},

|

| 133 |

+

year={2023},

|

| 134 |

+

}

|

| 135 |

+

```

|

| 136 |

+

|

| 137 |

+

## License

|

| 138 |

+

This repository is built on [Lavis](https://github.com/salesforce/LAVIS) with BSD 3-Clause License

|

| 139 |

+

[BSD 3-Clause License](LICENSE.txt)

|

app.py

ADDED

|

@@ -0,0 +1,146 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import argparse

|

| 2 |

+

import os

|

| 3 |

+

import random

|

| 4 |

+

|

| 5 |

+

import numpy as np

|

| 6 |

+

import torch

|

| 7 |

+

import torch.backends.cudnn as cudnn

|

| 8 |

+

import gradio as gr

|

| 9 |

+

|

| 10 |

+

from minigpt4.common.config import Config

|

| 11 |

+

from minigpt4.common.dist_utils import get_rank

|

| 12 |

+

from minigpt4.common.registry import registry

|

| 13 |

+

from minigpt4.conversation.conversation import Chat, CONV_VISION

|

| 14 |

+

|

| 15 |

+

# imports modules for registration

|

| 16 |

+

from minigpt4.datasets.builders import *

|

| 17 |

+

from minigpt4.models import *

|

| 18 |

+

from minigpt4.processors import *

|

| 19 |

+

from minigpt4.runners import *

|

| 20 |

+

from minigpt4.tasks import *

|

| 21 |

+

|

| 22 |

+

|

| 23 |

+

def parse_args():

|

| 24 |

+

parser = argparse.ArgumentParser(description="Demo")

|

| 25 |

+

parser.add_argument("--cfg-path", type=str, default='eval_configs/minigpt4.yaml', help="path to configuration file.")

|

| 26 |

+

parser.add_argument(

|

| 27 |

+

"--options",

|

| 28 |

+

nargs="+",

|

| 29 |

+

help="override some settings in the used config, the key-value pair "

|

| 30 |

+

"in xxx=yyy format will be merged into config file (deprecate), "

|

| 31 |

+

"change to --cfg-options instead.",

|

| 32 |

+

)

|

| 33 |

+

args = parser.parse_args()

|

| 34 |

+

return args

|

| 35 |

+

|

| 36 |

+

|

| 37 |

+

def setup_seeds(config):

|

| 38 |

+

seed = config.run_cfg.seed + get_rank()

|

| 39 |

+

|

| 40 |

+

random.seed(seed)

|

| 41 |

+

np.random.seed(seed)

|

| 42 |

+

torch.manual_seed(seed)

|

| 43 |

+

|

| 44 |

+

cudnn.benchmark = False

|

| 45 |

+

cudnn.deterministic = True

|

| 46 |

+

|

| 47 |

+

|

| 48 |

+

# ========================================

|

| 49 |

+

# Model Initialization

|

| 50 |

+

# ========================================

|

| 51 |

+

|

| 52 |

+

print('Initializing Chat')

|

| 53 |

+

cfg = Config(parse_args())

|

| 54 |

+

|

| 55 |

+

model_config = cfg.model_cfg

|

| 56 |

+

model_cls = registry.get_model_class(model_config.arch)

|

| 57 |

+

model = model_cls.from_config(model_config).to('cuda:0')

|

| 58 |

+

|

| 59 |

+

vis_processor_cfg = cfg.datasets_cfg.cc_align.vis_processor.train

|

| 60 |

+

vis_processor = registry.get_processor_class(vis_processor_cfg.name).from_config(vis_processor_cfg)

|

| 61 |

+

chat = Chat(model, vis_processor)

|

| 62 |

+

print('Initialization Finished')

|

| 63 |

+

|

| 64 |

+

# ========================================

|

| 65 |

+

# Gradio Setting

|

| 66 |

+

# ========================================

|

| 67 |

+

|

| 68 |

+

def gradio_reset(chat_state, img_list):

|

| 69 |

+

chat_state.messages = []

|

| 70 |

+

img_list = []

|

| 71 |

+

return None, gr.update(value=None, interactive=True), gr.update(placeholder='Please upload your image first', interactive=False), gr.update(value="Upload & Start Chat", interactive=True), chat_state, img_list

|

| 72 |

+

|

| 73 |

+

def upload_img(gr_img, text_input, chat_state):

|

| 74 |

+

if gr_img is None:

|

| 75 |

+

return None, None, gr.update(interactive=True)

|

| 76 |

+

chat_state = CONV_VISION.copy()

|

| 77 |

+

img_list = []

|

| 78 |

+

llm_message = chat.upload_img(gr_img, chat_state, img_list)

|

| 79 |

+

return gr.update(interactive=False), gr.update(interactive=True, placeholder='Type and press Enter'), gr.update(value="Start Chatting", interactive=False), chat_state, img_list

|

| 80 |

+

|

| 81 |

+

def gradio_ask(user_message, chatbot, chat_state):

|

| 82 |

+

if len(user_message) == 0:

|

| 83 |

+

return gr.update(interactive=True, placeholder='Input should not be empty!'), chatbot, chat_state

|

| 84 |

+

chat.ask(user_message, chat_state)

|

| 85 |

+

chatbot = chatbot + [[user_message, None]]

|

| 86 |

+

return '', chatbot, chat_state

|

| 87 |

+

|

| 88 |

+

|

| 89 |

+

def gradio_answer(chatbot, chat_state, img_list, num_beams, temperature):

|

| 90 |

+

llm_message = chat.answer(conv=chat_state, img_list=img_list, max_new_tokens=1000, num_beams=num_beams, temperature=temperature)[0]

|

| 91 |

+

chatbot[-1][1] = llm_message

|

| 92 |

+

return chatbot, chat_state, img_list

|

| 93 |

+

|

| 94 |

+

title = """<h1 align="center">Demo of MiniGPT-4</h1>"""

|

| 95 |

+

description = """<h3>This is the demo of MiniGPT-4. Upload your images and start chatting!</h3>"""

|

| 96 |

+

article = """<strong>Paper</strong>: <a href='https://github.com/Vision-CAIR/MiniGPT-4/blob/main/MiniGPT_4.pdf' target='_blank'>Here</a>

|

| 97 |

+

<strong>Code</strong>: <a href='https://github.com/Vision-CAIR/MiniGPT-4' target='_blank'>Here</a>

|

| 98 |

+

<strong>Project Page</strong>: <a href='https://minigpt-4.github.io/' target='_blank'>Here</a>

|

| 99 |

+

"""

|

| 100 |

+

|

| 101 |

+

#TODO show examples below

|

| 102 |

+

|

| 103 |

+

with gr.Blocks() as demo:

|

| 104 |

+

gr.Markdown(title)

|

| 105 |

+

gr.Markdown(description)

|

| 106 |

+

gr.Markdown(article)

|

| 107 |

+

|

| 108 |

+

with gr.Row():

|

| 109 |

+

with gr.Column(scale=0.5):

|

| 110 |

+

image = gr.Image(type="pil")

|

| 111 |

+

upload_button = gr.Button(value="Upload & Start Chat", interactive=True, variant="primary")

|

| 112 |

+

clear = gr.Button("Restart")

|

| 113 |

+

|

| 114 |

+

num_beams = gr.Slider(

|

| 115 |

+

minimum=1,

|

| 116 |

+

maximum=16,

|

| 117 |

+

value=5,

|

| 118 |

+

step=1,

|

| 119 |

+

interactive=True,

|

| 120 |

+

label="beam search numbers)",

|

| 121 |

+

)

|

| 122 |

+

|

| 123 |

+

temperature = gr.Slider(

|

| 124 |

+

minimum=0.1,

|

| 125 |

+

maximum=2.0,

|

| 126 |

+

value=1.0,

|

| 127 |

+

step=0.1,

|

| 128 |

+

interactive=True,

|

| 129 |

+

label="Temperature",

|

| 130 |

+

)

|

| 131 |

+

|

| 132 |

+

|

| 133 |

+

with gr.Column():

|

| 134 |

+

chat_state = gr.State()

|

| 135 |

+

img_list = gr.State()

|

| 136 |

+

chatbot = gr.Chatbot(label='MiniGPT-4')

|

| 137 |

+

text_input = gr.Textbox(label='User', placeholder='Please upload your image first', interactive=False)

|

| 138 |

+

|

| 139 |

+

upload_button.click(upload_img, [image, text_input, chat_state], [image, text_input, upload_button, chat_state, img_list])

|

| 140 |

+

|

| 141 |

+

text_input.submit(gradio_ask, [text_input, chatbot, chat_state], [text_input, chatbot, chat_state]).then(

|

| 142 |

+

gradio_answer, [chatbot, chat_state, img_list, num_beams, temperature], [chatbot, chat_state, img_list]

|

| 143 |

+

)

|

| 144 |

+

clear.click(gradio_reset, [chat_state, img_list], [chatbot, image, text_input, upload_button, chat_state, img_list], queue=False)

|

| 145 |

+

|

| 146 |

+

demo.launch(share=True, enable_queue=True)

|

create_align_dataset.py

ADDED

|

@@ -0,0 +1,134 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import argparse

|

| 2 |

+

import os

|

| 3 |

+

import json

|

| 4 |

+

from tqdm import tqdm

|

| 5 |

+

import random

|

| 6 |

+

import numpy as np

|

| 7 |

+

from PIL import Image

|

| 8 |

+

import webdataset as wds

|

| 9 |

+

import torch

|

| 10 |

+

from torchvision.datasets import ImageFolder

|

| 11 |

+

import torchvision.transforms as transforms

|

| 12 |

+

|

| 13 |

+

import openai

|

| 14 |

+

from tenacity import (

|

| 15 |

+

retry,

|

| 16 |

+

stop_after_attempt,

|

| 17 |

+

wait_random_exponential,

|

| 18 |

+

) # for exponential backoff

|

| 19 |

+

|

| 20 |

+

from minigpt4.common.config import Config

|

| 21 |

+

from minigpt4.common.registry import registry

|

| 22 |

+

from minigpt4.conversation.conversation import Chat

|

| 23 |

+

|

| 24 |

+

openai.api_key = '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'

|

| 25 |

+

|

| 26 |

+

|

| 27 |

+

def prepare_chatgpt_message(task_prompt, paragraph):

|

| 28 |

+

messages = [{"role": "system", "content": task_prompt},

|

| 29 |

+

{"role": "user", "content": paragraph}]

|

| 30 |

+

return messages

|

| 31 |

+

|

| 32 |

+

|

| 33 |

+

@retry(wait=wait_random_exponential(min=1, max=60), stop=stop_after_attempt(6))

|

| 34 |

+

def call_chatgpt(chatgpt_messages, max_tokens=200, model="gpt-3.5-turbo"):

|

| 35 |

+

response = openai.ChatCompletion.create(model=model, messages=chatgpt_messages, temperature=0.7, max_tokens=max_tokens)

|

| 36 |

+

reply = response['choices'][0]['message']['content']

|

| 37 |

+

total_tokens = response['usage']['total_tokens']

|

| 38 |

+

return reply, total_tokens

|

| 39 |

+

|

| 40 |

+

|

| 41 |

+

def main(args):

|

| 42 |

+

|

| 43 |

+

print('Initializing Chat')

|

| 44 |

+

cfg = Config(args)

|

| 45 |

+

|

| 46 |

+

model_config = cfg.model_cfg

|

| 47 |

+

model_cls = registry.get_model_class(model_config.arch)

|

| 48 |

+

model = model_cls.from_config(model_config).to('cuda:{}'.format(args.device))

|

| 49 |

+

|

| 50 |

+

ckpt_path = '/ibex/project/c2133/vicuna_ckpt_test/Vicuna_pretrain_stage2_cc/20230405233_3GPU40kSTEP_MAIN/checkpoint_3.pth'

|

| 51 |

+

ckpt = torch.load(ckpt_path)

|

| 52 |

+

msg = model.load_state_dict(ckpt['model'], strict=False)

|

| 53 |

+

|

| 54 |

+

|

| 55 |

+

vis_processor_cfg = cfg.datasets_cfg.cc_combine.vis_processor.train

|

| 56 |

+

vis_processor = registry.get_processor_class(vis_processor_cfg.name).from_config(vis_processor_cfg)

|

| 57 |

+

|

| 58 |

+

text_processor_cfg = cfg.datasets_cfg.laion.text_processor.train

|

| 59 |

+

text_processor = registry.get_processor_class(text_processor_cfg.name).from_config(text_processor_cfg)

|

| 60 |

+

|

| 61 |

+

chat = Chat(model, vis_processor, args.device)

|

| 62 |

+

print('Initialization Finished')

|

| 63 |

+

|

| 64 |

+

|

| 65 |

+

|

| 66 |

+

texts = {}

|

| 67 |

+

negative_list = []

|

| 68 |

+

|

| 69 |

+

for i in tqdm(range(args.begin_id, args.end_id)):

|

| 70 |

+

image = Image.open(os.path.join(args.save_dir, 'image/{}.jpg'.format(i))).convert('RGB')

|

| 71 |

+

|

| 72 |

+

fix_prompt = \

|

| 73 |

+

"Fix the error in the given paragraph. " \

|

| 74 |

+

"Remove any repeating sentences, meanless characters, not English sentences, and so on." \

|

| 75 |

+

"Remove unnecessary repetition." \

|

| 76 |

+

"Rewrite any incomplete sentences." \

|

| 77 |

+

"Return directly the results WITHOUT explanation." \

|

| 78 |

+

"Return directly the input paragraph if it is already correct WITHOUT explanation."

|

| 79 |

+

|

| 80 |

+

answers = []

|

| 81 |

+

answer_tokens = 0

|

| 82 |

+

chat.reset()

|

| 83 |

+

chat.upload_img(image)

|

| 84 |

+

chat.ask("Describe this image in detail. Give as many details as possible. Say everything you see.")

|

| 85 |

+

answer, tokens = chat.answer()

|

| 86 |

+

answers.append(answer)

|

| 87 |

+

answer_tokens += tokens

|

| 88 |

+

if len(answer_tokens) < 80:

|

| 89 |

+

chat.ask("Continue")

|

| 90 |

+

answer, answer_token = chat.answer()

|

| 91 |

+

answers.append(answer)

|

| 92 |

+

answer_tokens += tokens

|

| 93 |

+

answer = ' '.join(answers)

|

| 94 |

+

|

| 95 |

+

chatgpt_message = prepare_chatgpt_message(fix_prompt, answer)

|

| 96 |

+

improved_answer, num_token = call_chatgpt(chatgpt_message)

|

| 97 |

+

|

| 98 |

+

if 'already correct' in improved_answer:

|

| 99 |

+

if 'repetition' in improved_answer:

|

| 100 |

+

continue

|

| 101 |

+

improved_answer = answer

|

| 102 |

+

if 'incomplete' in improved_answer or len(improved_answer) < 50:

|

| 103 |

+

negative_list.append(improved_answer)

|

| 104 |

+

else:

|

| 105 |

+

texts[i] = improved_answer

|

| 106 |

+

|

| 107 |

+

with open(os.path.join(args.save_dir, "cap_{}_{}.json".format(args.begin_id, args.end_id)), "w") as outfile:

|

| 108 |

+

# write the dictionary to the file in JSON format

|

| 109 |

+

json.dump(texts, outfile)

|

| 110 |

+

|

| 111 |

+

|

| 112 |

+

if __name__ == "__main__":

|

| 113 |

+

parser = argparse.ArgumentParser(description="Create Alignment")

|

| 114 |

+

|

| 115 |

+

parser.add_argument("--cfg-path", default='train_config/minigpt4_stage2_align.yaml')

|

| 116 |

+

parser.add_argument("--save-dir", default="/ibex/project/c2133/blip_dataset/image_alignment")

|

| 117 |

+

parser.add_argument("--begin-id", type=int)

|

| 118 |

+

parser.add_argument("--end-id", type=int)

|

| 119 |

+

parser.add_argument("--device", type=int)

|

| 120 |

+

parser.add_argument(

|

| 121 |

+

"--options",

|

| 122 |

+

nargs="+",

|

| 123 |

+

help="override some settings in the used config, the key-value pair "

|

| 124 |

+

"in xxx=yyy format will be merged into config file (deprecate), "

|

| 125 |

+

"change to --cfg-options instead.",

|

| 126 |

+

)

|

| 127 |

+

|

| 128 |

+

args = parser.parse_args()

|

| 129 |

+

|

| 130 |

+

print("begin_id: ", args.begin_id)

|

| 131 |

+

print("end_id: ", args.end_id)

|

| 132 |

+

print("device:", args.device)

|

| 133 |

+

|

| 134 |

+

main(args)

|

dataset/convert_cc_sbu.py

ADDED

|

@@ -0,0 +1,20 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import json

|

| 2 |

+

import csv

|

| 3 |

+

|

| 4 |

+

# specify input and output file paths

|

| 5 |

+

input_file = 'ccs_synthetic_filtered_large.json'

|

| 6 |

+

output_file = 'ccs_synthetic_filtered_large.tsv'

|

| 7 |

+

|

| 8 |

+

# load JSON data from input file

|

| 9 |

+

with open(input_file, 'r') as f:

|

| 10 |

+

data = json.load(f)

|

| 11 |

+

|

| 12 |

+

# extract header and data from JSON

|

| 13 |

+

header = data[0].keys()

|

| 14 |

+

rows = [x.values() for x in data]

|

| 15 |

+

|

| 16 |

+

# write data to TSV file

|

| 17 |

+

with open(output_file, 'w') as f:

|

| 18 |

+

writer = csv.writer(f, delimiter='\t')

|

| 19 |

+

writer.writerow(header)

|

| 20 |

+

writer.writerows(rows)

|

dataset/convert_laion.py

ADDED

|

@@ -0,0 +1,20 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import json

|

| 2 |

+

import csv

|

| 3 |

+

|

| 4 |

+

# specify input and output file paths

|

| 5 |

+

input_file = 'laion_synthetic_filtered_large.json'

|

| 6 |

+

output_file = 'laion_synthetic_filtered_large.tsv'

|

| 7 |

+

|

| 8 |

+

# load JSON data from input file

|

| 9 |

+

with open(input_file, 'r') as f:

|

| 10 |

+

data = json.load(f)

|

| 11 |

+

|

| 12 |

+

# extract header and data from JSON

|

| 13 |

+

header = data[0].keys()

|

| 14 |

+

rows = [x.values() for x in data]

|

| 15 |

+

|

| 16 |

+

# write data to TSV file

|

| 17 |

+

with open(output_file, 'w') as f:

|

| 18 |

+

writer = csv.writer(f, delimiter='\t')

|

| 19 |

+

writer.writerow(header)

|

| 20 |

+

writer.writerows(rows)

|

dataset/download_cc_sbu.sh

ADDED

|

@@ -0,0 +1,6 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

#!/bin/bash

|

| 2 |

+

|

| 3 |

+

img2dataset --url_list ccs_synthetic_filtered_large.tsv --input_format "tsv"\

|

| 4 |

+

--url_col "url" --caption_col "caption" --output_format webdataset\

|

| 5 |

+

--output_folder cc_sbu_dataset --processes_count 16 --thread_count 128 --image_size 256 \

|

| 6 |

+

--enable_wandb True

|

dataset/download_laion.sh

ADDED

|

@@ -0,0 +1,6 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

#!/bin/bash

|

| 2 |

+

|

| 3 |

+

img2dataset --url_list laion_synthetic_filtered_large.tsv --input_format "tsv"\

|

| 4 |

+

--url_col "url" --caption_col "caption" --output_format webdataset\

|

| 5 |

+

--output_folder laion_dataset --processes_count 16 --thread_count 128 --image_size 256 \

|

| 6 |

+

--enable_wandb True

|

dataset/readme.md

ADDED

|

@@ -0,0 +1,92 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

## Download the filtered Conceptual Captions, SBU, LAION datasets

|

| 2 |

+

|

| 3 |

+

### Pre-training datasets download:

|

| 4 |

+

We use the filtered synthetic captions prepared by BLIP. For more details about the dataset, please refer to [BLIP](https://github.com/salesforce/BLIP).

|

| 5 |

+

|

| 6 |

+

It requires ~2.3T to store LAION and CC3M+CC12M+SBU datasets

|

| 7 |

+

|

| 8 |

+

Image source | Filtered synthetic caption by ViT-L

|

| 9 |

+

--- | :---:

|

| 10 |

+

CC3M+CC12M+SBU | <a href="https://storage.googleapis.com/sfr-vision-language-research/BLIP/datasets/ccs_synthetic_filtered_large.json">Download</a>

|

| 11 |

+

LAION115M | <a href="https://storage.googleapis.com/sfr-vision-language-research/BLIP/datasets/laion_synthetic_filtered_large.json">Download</a>

|

| 12 |

+

|

| 13 |

+

This will download two json files

|

| 14 |

+

```

|

| 15 |

+

ccs_synthetic_filtered_large.json

|

| 16 |

+

laion_synthetic_filtered_large.json

|

| 17 |

+

```

|

| 18 |

+

|

| 19 |

+

## prepare the data step-by-step

|

| 20 |

+

|

| 21 |

+

|

| 22 |

+

### setup the dataset folder and move the annotation file to the data storage folder

|

| 23 |

+

```

|

| 24 |

+

export MINIGPT4_DATASET=/YOUR/PATH/FOR/LARGE/DATASET/

|

| 25 |

+

mkdir ${MINIGPT4_DATASET}/cc_sbu

|

| 26 |

+

mkdir ${MINIGPT4_DATASET}/laion

|

| 27 |

+

mv ccs_synthetic_filtered_large.json ${MINIGPT4_DATASET}/cc_sbu

|

| 28 |

+

mv laion_synthetic_filtered_large.json ${MINIGPT4_DATASET}/laion

|

| 29 |

+

```

|

| 30 |

+

|

| 31 |

+

### Convert the scripts to data storate folder

|

| 32 |

+

```

|

| 33 |

+

cp convert_cc_sbu.py ${MINIGPT4_DATASET}/cc_sbu

|

| 34 |

+

cp download_cc_sbu.sh ${MINIGPT4_DATASET}/cc_sbu

|

| 35 |

+

cp convert_laion.py ${MINIGPT4_DATASET}/laion

|

| 36 |

+

cp download_laion.sh ${MINIGPT4_DATASET}/laion

|

| 37 |

+

```

|

| 38 |

+

|

| 39 |

+

|

| 40 |

+

### Convert the laion and cc_sbu annotation file format to be img2dataset format

|

| 41 |

+

```

|

| 42 |

+

cd ${MINIGPT4_DATASET}/cc_sbu

|

| 43 |

+

python convert_cc_sbu.py

|

| 44 |

+

|

| 45 |

+

cd ${MINIGPT4_DATASET}/laion

|

| 46 |

+

python convert_laion.py

|

| 47 |

+

```

|

| 48 |

+

|

| 49 |

+

### Download the datasets with img2dataset

|

| 50 |

+

```

|

| 51 |

+

cd ${MINIGPT4_DATASET}/cc_sbu

|

| 52 |

+

sh download_cc_sbu.sh

|

| 53 |

+

cd ${MINIGPT4_DATASET}/laion

|

| 54 |

+

sh download_laion.sh

|

| 55 |

+

```

|

| 56 |

+

|

| 57 |

+

|

| 58 |

+

The final dataset structure

|

| 59 |

+

|

| 60 |

+

```

|

| 61 |

+

.

|

| 62 |

+

├── ${MINIGPT4_DATASET}

|

| 63 |

+

│ ├── cc_sbu

|

| 64 |

+

│ ├── convert_cc_sbu.py

|

| 65 |

+

│ ├── download_cc_sbu.sh

|

| 66 |

+

│ ├── ccs_synthetic_filtered_large.json

|

| 67 |

+

│ ├── ccs_synthetic_filtered_large.tsv

|

| 68 |

+

│ └── cc_sbu_dataset

|

| 69 |

+

│ ├── 00000.tar

|

| 70 |

+

│ ├── 00000.parquet

|

| 71 |

+

│ ...

|

| 72 |

+

│ ├── laion

|

| 73 |

+

│ ├── convert_laion.py

|

| 74 |

+

│ ├── download_laion.sh

|

| 75 |

+

│ ├── laion_synthetic_filtered_large.json

|

| 76 |

+

│ ├── laion_synthetic_filtered_large.tsv

|

| 77 |

+

│ └── laion_dataset

|

| 78 |

+

│ ├── 00000.tar

|

| 79 |

+

│ ├── 00000.parquet

|

| 80 |

+

│ ...

|

| 81 |

+

...

|

| 82 |

+

```

|

| 83 |

+

|

| 84 |

+

|

| 85 |

+

## Set up the dataset configuration files

|

| 86 |

+

|

| 87 |

+

Then, set up the LAION dataset loading path in [here](../minigpt4/configs/datasets/laion/defaults.yaml#L13) at Line 13 as ${MINIGPT4_DATASET}/laion/laion_dataset/{00000..10488}.tar

|

| 88 |

+

|

| 89 |

+

Then, set up the Conceptual Captoin and SBU datasets loading path in [here](../minigpt4/configs/datasets/cc_sbu/defaults.yaml#L13) at Line 13 as ${MINIGPT4_DATASET}/cc_sbu/cc_sbu_dataset/{00000..01255}.tar

|

| 90 |

+

|

| 91 |

+

|

| 92 |

+

|

demo_dev.ipynb

ADDED

|

The diff for this file is too large to render.

See raw diff

|

|

|

develop.ipynb

ADDED

|

@@ -0,0 +1,929 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|