Build error

Upload 213 files

This view is limited to 50 files because it contains too many changes.

- .gitattributes +7 -0

- .gitignore +150 -0

- .pre-commit-config.yaml +65 -0

- CONTRIBUTING.md +113 -0

- MANIFEST.in +5 -0

- README.md +82 -12

- YOLOv8_DeepSORT_TRACKING_SCRIPT.ipynb +3 -0

- YOLOv8_Detection_Tracking_CustomData_Complete.ipynb +3 -0

- figure/figure1.png +3 -0

- figure/figure2.png +3 -0

- figure/figure3.png +3 -0

- mkdocs.yml +95 -0

- requirements.txt +46 -0

- setup.cfg +54 -0

- setup.py +53 -0

- ultralytics/__init__.py +9 -0

- ultralytics/hub/__init__.py +133 -0

- ultralytics/hub/auth.py +70 -0

- ultralytics/hub/session.py +122 -0

- ultralytics/hub/utils.py +150 -0

- ultralytics/models/README.md +36 -0

- ultralytics/models/v3/yolov3-spp.yaml +47 -0

- ultralytics/models/v3/yolov3-tiny.yaml +38 -0

- ultralytics/models/v3/yolov3.yaml +47 -0

- ultralytics/models/v5/yolov5l.yaml +44 -0

- ultralytics/models/v5/yolov5m.yaml +44 -0

- ultralytics/models/v5/yolov5n.yaml +44 -0

- ultralytics/models/v5/yolov5s.yaml +45 -0

- ultralytics/models/v5/yolov5x.yaml +44 -0

- ultralytics/models/v8/cls/yolov8l-cls.yaml +23 -0

- ultralytics/models/v8/cls/yolov8m-cls.yaml +23 -0

- ultralytics/models/v8/cls/yolov8n-cls.yaml +23 -0

- ultralytics/models/v8/cls/yolov8s-cls.yaml +23 -0

- ultralytics/models/v8/cls/yolov8x-cls.yaml +23 -0

- ultralytics/models/v8/seg/yolov8l-seg.yaml +40 -0

- ultralytics/models/v8/seg/yolov8m-seg.yaml +40 -0

- ultralytics/models/v8/seg/yolov8n-seg.yaml +40 -0

- ultralytics/models/v8/seg/yolov8s-seg.yaml +40 -0

- ultralytics/models/v8/seg/yolov8x-seg.yaml +40 -0

- ultralytics/models/v8/yolov8l.yaml +40 -0

- ultralytics/models/v8/yolov8m.yaml +40 -0

- ultralytics/models/v8/yolov8n.yaml +40 -0

- ultralytics/models/v8/yolov8s.yaml +40 -0

- ultralytics/models/v8/yolov8x.yaml +40 -0

- ultralytics/models/v8/yolov8x6.yaml +50 -0

- ultralytics/nn/__init__.py +0 -0

- ultralytics/nn/autobackend.py +381 -0

- ultralytics/nn/modules.py +688 -0

- ultralytics/nn/tasks.py +416 -0

- ultralytics/yolo/cli.py +52 -0

.gitattributes CHANGED

@@ -32,3 +32,10 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+figure/figure1.png filter=lfs diff=lfs merge=lfs -text
+figure/figure2.png filter=lfs diff=lfs merge=lfs -text
+figure/figure3.png filter=lfs diff=lfs merge=lfs -text
+ultralytics/yolo/v8/detect/deep_sort_pytorch/deep_sort/deep/checkpoint/ckpt.t7 filter=lfs diff=lfs merge=lfs -text
+ultralytics/yolo/v8/detect/night_motorbikes.mp4 filter=lfs diff=lfs merge=lfs -text
+YOLOv8_DeepSORT_TRACKING_SCRIPT.ipynb filter=lfs diff=lfs merge=lfs -text
+YOLOv8_Detection_Tracking_CustomData_Complete.ipynb filter=lfs diff=lfs merge=lfs -text
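Each line in the `.gitattributes` hunk above pairs a path pattern with a list of attributes. As a minimal sketch of that syntax (the helpers `parse_gitattributes_line` and `parse_gitattributes` are hypothetical and handle neither quoted patterns nor macro attributes), the code below turns a line into a pattern plus a dictionary in which `attr` means set, `-attr` means unset, `!attr` means unspecified, and `attr=value` assigns a value. Read this way, `filter=lfs` routes the file through the Git LFS filter, while `-text` turns off end-of-line conversion for it.

```python
from typing import Dict, List, Tuple, Union

# Attribute states: True = set, False = unset ("-attr"),
# None = unspecified ("!attr"), str = explicit value ("attr=value").
AttrValue = Union[bool, None, str]


def parse_gitattributes_line(line: str) -> Tuple[str, Dict[str, AttrValue]]:
    """Split one .gitattributes line into (pattern, attributes). Simplified sketch."""
    pattern, *tokens = line.split()
    attrs: Dict[str, AttrValue] = {}
    for token in tokens:
        if token.startswith("-"):
            attrs[token[1:]] = False  # explicitly unset
        elif token.startswith("!"):
            attrs[token[1:]] = None  # reset to unspecified
        elif "=" in token:
            name, value = token.split("=", 1)
            attrs[name] = value  # attribute with a value
        else:
            attrs[token] = True  # attribute set
    return pattern, attrs


def parse_gitattributes(text: str) -> List[Tuple[str, Dict[str, AttrValue]]]:
    """Parse every non-blank, non-comment line of a .gitattributes file."""
    return [
        parse_gitattributes_line(line)
        for line in text.splitlines()
        if line.strip() and not line.lstrip().startswith("#")
    ]


if __name__ == "__main__":
    pattern, attrs = parse_gitattributes_line("figure/figure1.png filter=lfs diff=lfs merge=lfs -text")
    print(pattern, attrs)
    # A path is handled by Git LFS when its filter attribute is "lfs".
    print("LFS-tracked:", attrs.get("filter") == "lfs")
```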

.gitignore ADDED

@@ -0,0 +1,150 @@
+# Byte-compiled / optimized / DLL files
+__pycache__/
+*.py[cod]
+*$py.class
+
+# C extensions
+*.so
+
+# Distribution / packaging
+.Python
+build/
+develop-eggs/
+dist/
+downloads/
+eggs/
+.eggs/
+lib/
+lib64/
+parts/
+sdist/
+var/
+wheels/
+pip-wheel-metadata/
+share/python-wheels/
+*.egg-info/
+.installed.cfg
+*.egg
+MANIFEST
+
+# PyInstaller
+# Usually these files are written by a python script from a template
+# before PyInstaller builds the exe, so as to inject date/other infos into it.
+*.manifest
+*.spec
+
+# Installer logs
+pip-log.txt
+pip-delete-this-directory.txt
+
+# Unit test / coverage reports
+htmlcov/
+.tox/
+.nox/
+.coverage
+.coverage.*
+.cache
+nosetests.xml
+coverage.xml
+*.cover
+*.py,cover
+.hypothesis/
+.pytest_cache/
+
+# Translations
+*.mo
+*.pot
+
+# Django stuff:
+*.log
+local_settings.py
+db.sqlite3
+db.sqlite3-journal
+
+# Flask stuff:
+instance/
+.webassets-cache
+
+# Scrapy stuff:
+.scrapy
+
+# Sphinx documentation
+docs/_build/
+
+# PyBuilder
+target/
+
+# Jupyter Notebook
+.ipynb_checkpoints
+
+# IPython
+profile_default/
+ipython_config.py
+
+# pyenv
+.python-version
+
+# pipenv
+# According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.
+# However, in case of collaboration, if having platform-specific dependencies or dependencies
+# having no cross-platform support, pipenv may install dependencies that don't work, or not
+# install all needed dependencies.
+#Pipfile.lock
+
+# PEP 582; used by e.g. github.com/David-OConnor/pyflow
+__pypackages__/
+
+# Celery stuff
+celerybeat-schedule
+celerybeat.pid
+
+# SageMath parsed files
+*.sage.py
+
+# Environments
+.env
+.venv
+env/
+venv/
+ENV/
+env.bak/
+venv.bak/
+
+# Spyder project settings
+.spyderproject
+.spyproject
+
+# Rope project settings
+.ropeproject
+
+# mkdocs documentation
+/site
+
+# mypy
+.mypy_cache/
+.dmypy.json
+dmypy.json
+
+# Pyre type checker
+.pyre/
+
+# datasets and projects
+datasets/
+runs/
+wandb/
+
+.DS_Store
+
+# Neural Network weights -----------------------------------------------------------------------------------------------
+*.weights
+*.pt
+*.pb
+*.onnx
+*.engine
+*.mlmodel
+*.torchscript
+*.tflite
+*.h5
+*_saved_model/
+*_web_model/
+*_openvino_model/
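Most patterns in this `.gitignore` contain no slash, so Git matches them against a name at any depth; a trailing slash (as in `runs/`) restricts a pattern to directories, and a leading or middle slash (as in `/site` or `docs/_build/`) anchors it to the repository root. The sketch below approximates those three rules with `fnmatch` to show, for example, that the `*.pt` rule keeps weight files such as `yolov8l.pt` out of the repository. It is an illustration only: the helper `is_ignored` is hypothetical, and it skips negation (`!`), `**`, escaping, and Git's exact wildcard semantics.

```python
from fnmatch import fnmatchcase
from typing import Iterable


def is_ignored(path: str, patterns: Iterable[str]) -> bool:
    """Approximate whether a repo-relative file path matches any .gitignore pattern.

    Simplified: no negation (!), no '**', no escaping, and fnmatch's '*' also
    matches '/', which Git's wildcard does not.
    """
    parts = path.strip("/").split("/")
    for raw in patterns:
        raw = raw.strip()
        if not raw or raw.startswith("#"):
            continue  # blank lines and comments never match
        dir_only = raw.endswith("/")  # trailing slash: match directories only
        pattern = raw.rstrip("/")
        anchored = "/" in pattern  # a leading or middle slash anchors to the root
        pattern = pattern.lstrip("/")
        # Ignoring a directory ignores everything inside it, so test the file and
        # each parent; directory-only patterns are tested against parents alone.
        depth = len(parts) - 1 if dir_only else len(parts)
        for i in range(depth):
            candidate = "/".join(parts[: i + 1]) if anchored else parts[i]
            if fnmatchcase(candidate, pattern):
                return True
    return False


if __name__ == "__main__":
    rules = ["*.pt", "runs/", "/site", "docs/_build/"]
    for p in ["yolov8l.pt", "runs/detect/exp/results.csv", "ultralytics/nn/tasks.py"]:
        print(p, is_ignored(p, rules))
```

For an authoritative answer inside a real checkout, `git check-ignore -v <path>` reports which rule, if any, matches a path.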

.pre-commit-config.yaml ADDED

@@ -0,0 +1,65 @@
+# Define hooks for code formatting
+# Will be applied on any updated commit files if a user has installed and linked commit hook
+
+default_language_version:
+  python: python3.8
+
+exclude: 'docs/'
+# Define bot property if installed via https://github.com/marketplace/pre-commit-ci
+ci:
+  autofix_prs: true
+  autoupdate_commit_msg: '[pre-commit.ci] pre-commit suggestions'
+  autoupdate_schedule: monthly
+  # submodules: true
+
+repos:
+  - repo: https://github.com/pre-commit/pre-commit-hooks
+    rev: v4.3.0
+    hooks:
+      # - id: end-of-file-fixer
+      - id: trailing-whitespace
+      - id: check-case-conflict
+      - id: check-yaml
+      - id: check-toml
+      - id: pretty-format-json
+      - id: check-docstring-first
+
+  - repo: https://github.com/asottile/pyupgrade
+    rev: v2.37.3
+    hooks:
+      - id: pyupgrade
+        name: Upgrade code
+        args: [ --py37-plus ]
+
+  - repo: https://github.com/PyCQA/isort
+    rev: 5.10.1
+    hooks:
+      - id: isort
+        name: Sort imports
+
+  - repo: https://github.com/pre-commit/mirrors-yapf
+    rev: v0.32.0
+    hooks:
+      - id: yapf
+        name: YAPF formatting
+
+  - repo: https://github.com/executablebooks/mdformat
+    rev: 0.7.16
+    hooks:
+      - id: mdformat
+        name: MD formatting
+        additional_dependencies:
+          - mdformat-gfm
+          - mdformat-black
+        # exclude: "README.md|README.zh-CN.md|CONTRIBUTING.md"
+
+  - repo: https://github.com/PyCQA/flake8
+    rev: 5.0.4
+    hooks:
+      - id: flake8
+        name: PEP8
+
+  #- repo: https://github.com/asottile/yesqa
+  #  rev: v1.4.0
+  #  hooks:
+  #    - id: yesqa
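The `check-case-conflict` hook enabled above guards against paths that differ only in letter case, which collide on case-insensitive filesystems such as the default configurations on macOS and Windows. The following is a rough approximation of that idea, not the hook's actual implementation: a hypothetical `find_case_conflicts` function groups each path, and each of its parent directories, by lowercased name and reports any group with more than one spelling.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set


def find_case_conflicts(paths: Iterable[str]) -> Dict[str, List[str]]:
    """Return {lowercased path: [spellings]} for paths that collide case-insensitively."""
    groups: Dict[str, Set[str]] = defaultdict(set)
    for path in paths:
        parts = path.split("/")
        # Check every prefix too, so "Docs/a.md" and "docs/b.md" conflict on the directory.
        for i in range(1, len(parts) + 1):
            prefix = "/".join(parts[:i])
            groups[prefix.lower()].add(prefix)
    return {key: sorted(spellings) for key, spellings in groups.items() if len(spellings) > 1}


if __name__ == "__main__":
    print(find_case_conflicts(["README.md", "readme.md", "setup.py"]))
```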

CONTRIBUTING.md ADDED

@@ -0,0 +1,113 @@
+## Contributing to YOLOv8 🚀
+
+We love your input! We want to make contributing to YOLOv8 as easy and transparent as possible, whether it's:
+
+- Reporting a bug
+- Discussing the current state of the code
+- Submitting a fix
+- Proposing a new feature
+- Becoming a maintainer
+
+YOLOv8 works so well due to our combined community effort, and for every small improvement you contribute you will be
+helping push the frontiers of what's possible in AI 😃!
+
+## Submitting a Pull Request (PR) 🛠️
+
+Submitting a PR is easy! This example shows how to submit a PR for updating `requirements.txt` in 4 steps:
+
+### 1. Select File to Update
+
+Select `requirements.txt` to update by clicking on it in GitHub.
+
+<p align="center"><img width="800" alt="PR_step1" src="https://user-images.githubusercontent.com/26833433/122260847-08be2600-ced4-11eb-828b-8287ace4136c.png"></p>
+
+### 2. Click 'Edit this file'
+
+The button is in the top-right corner.
+
+<p align="center"><img width="800" alt="PR_step2" src="https://user-images.githubusercontent.com/26833433/122260844-06f46280-ced4-11eb-9eec-b8a24be519ca.png"></p>
+
+### 3. Make Changes
+
+Change `matplotlib` version from `3.2.2` to `3.3`.
+
+<p align="center"><img width="800" alt="PR_step3" src="https://user-images.githubusercontent.com/26833433/122260853-0a87e980-ced4-11eb-9fd2-3650fb6e0842.png"></p>
+
+### 4. Preview Changes and Submit PR
+
+Click on the **Preview changes** tab to verify your updates. At the bottom of the screen select 'Create a **new branch**
+for this commit', assign your branch a descriptive name such as `fix/matplotlib_version` and click the green **Propose
+changes** button. All done, your PR is now submitted to YOLOv8 for review and approval 😃!
+
+<p align="center"><img width="800" alt="PR_step4" src="https://user-images.githubusercontent.com/26833433/122260856-0b208000-ced4-11eb-8e8e-77b6151cbcc3.png"></p>
+
+### PR recommendations
+
+To allow your work to be integrated as seamlessly as possible, we advise you to:
+
+- ✅ Verify your PR is **up-to-date** with the `ultralytics/ultralytics` `master` branch. If your PR is behind, you can update
+  your code by clicking the 'Update branch' button or by running `git pull` and `git merge master` locally.
+
+<p align="center"><img width="751" alt="Screenshot 2022-08-29 at 22 47 15" src="https://user-images.githubusercontent.com/26833433/187295893-50ed9f44-b2c9-4138-a614-de69bd1753d7.png"></p>
+
+- ✅ Verify all YOLOv8 Continuous Integration (CI) **checks are passing**.
+
+<p align="center"><img width="751" alt="Screenshot 2022-08-29 at 22 47 03" src="https://user-images.githubusercontent.com/26833433/187296922-545c5498-f64a-4d8c-8300-5fa764360da6.png"></p>
+
+- ✅ Reduce changes to the absolute **minimum** required for your bug fix or feature addition. _"It is not daily increase
+  but daily decrease, hack away the unessential. The closer to the source, the less wastage there is."_ — Bruce Lee
+
+### Docstrings
+
+Not all functions or classes require docstrings, but when they do, we follow the [Google-style docstring format](https://google.github.io/styleguide/pyguide.html#38-comments-and-docstrings). Here is an example:
+
+```python
+"""
+What the function does - performs nms on given detection predictions
+
+Args:
+    arg1: The description of the 1st argument
+    arg2: The description of the 2nd argument
+
+Returns:
+    What the function returns. Empty if nothing is returned
+
+Raises:
+    Exception Class: When and why this exception can be raised by the function.
+"""
+```
+
+## Submitting a Bug Report 🐛
+
+If you spot a problem with YOLOv8, please submit a Bug Report!
+
+For us to start investigating a possible problem we need to be able to reproduce it ourselves first. We've created a few
+short guidelines below to help users provide what we need in order to get started.
+
+When asking a question, people will be better able to provide help if you provide **code** that they can easily
+understand and use to **reproduce** the problem. This is referred to by community members as creating
+a [minimum reproducible example](https://stackoverflow.com/help/minimal-reproducible-example). Your code that reproduces
+the problem should be:
+
+- ✅ **Minimal** – Use as little code as possible that still produces the same problem
+- ✅ **Complete** – Provide **all** parts someone else needs to reproduce your problem in the question itself
+- ✅ **Reproducible** – Test the code you're about to provide to make sure it reproduces the problem
+
+In addition to the above requirements, for [Ultralytics](https://ultralytics.com/) to provide assistance your code
+should be:
+
+- ✅ **Current** – Verify that your code is up-to-date with current
+  GitHub [master](https://github.com/ultralytics/ultralytics/tree/main), and if necessary `git pull` or `git clone` a new
+  copy to ensure your problem has not already been resolved by previous commits.
+- ✅ **Unmodified** – Your problem must be reproducible without any modifications to the codebase in this
+  repository. [Ultralytics](https://ultralytics.com/) does not provide support for custom code ⚠️.
+
+If you believe your problem meets all of the above criteria, please close this issue and raise a new one using the 🐛
+**Bug Report** [template](https://github.com/ultralytics/ultralytics/issues/new/choose) and providing
+a [minimum reproducible example](https://stackoverflow.com/help/minimal-reproducible-example) to help us better
+understand and diagnose your problem.
+
+## License
+
+By contributing, you agree that your contributions will be licensed under
+the [GPL-3.0 license](https://choosealicense.com/licenses/gpl-3.0/)
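To make the docstring template in `CONTRIBUTING.md` concrete, here is a small, self-contained pair of functions documented in the same Google style. The functions themselves, a plain-Python greedy non-maximum suppression over `(x1, y1, x2, y2)` boxes, are an illustrative sketch and not the NMS used in this codebase; the names `box_iou` and `nms` and the `0.5` default threshold are assumptions chosen for the example.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) with x2 > x1 and y2 > y1


def box_iou(a: Box, b: Box) -> float:
    """Computes intersection-over-union of two axis-aligned boxes.

    Args:
        a: First box as (x1, y1, x2, y2).
        b: Second box as (x1, y1, x2, y2).

    Returns:
        IoU in [0, 1]; 0.0 when the boxes do not overlap.
    """
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))  # overlap width
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))  # overlap height
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: Sequence[Box], scores: Sequence[float], iou_thres: float = 0.5) -> List[int]:
    """Performs greedy non-maximum suppression on detection predictions.

    Args:
        boxes: Predicted boxes as (x1, y1, x2, y2).
        scores: Confidence score for each box, same length as ``boxes``.
        iou_thres: Boxes overlapping an already kept box by more than this IoU are discarded.

    Returns:
        Indices of the kept boxes, ordered by descending score.

    Raises:
        ValueError: If ``boxes`` and ``scores`` have different lengths.
    """
    if len(boxes) != len(scores):
        raise ValueError("boxes and scores must have the same length")
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Keep a box only if it does not overlap too much with any higher-scoring kept box.
        if all(box_iou(boxes[i], boxes[j]) <= iou_thres for j in keep):
            keep.append(i)
    return keep
```

For instance, `nms([(0, 0, 10, 10), (1, 1, 10, 10), (20, 20, 30, 30)], [0.9, 0.8, 0.7])` drops the second box, whose IoU with the first is 0.81.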

MANIFEST.in ADDED

@@ -0,0 +1,5 @@
+include *.md
+include requirements.txt
+include LICENSE
+include setup.py
+recursive-include ultralytics *.yaml
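The `recursive-include ultralytics *.yaml` rule asks setuptools to add every file under `ultralytics/` whose name matches `*.yaml` to the source distribution, which would bring in the model configuration files listed earlier, such as `ultralytics/models/v8/yolov8n.yaml`. The snippet below is a rough stand-in written for illustration (the function `recursive_include` is hypothetical): it lists the files such a rule would select using `pathlib`, without reproducing every detail of setuptools' own matching.

```python
from pathlib import Path
from typing import List


def recursive_include(root: str, pattern: str) -> List[str]:
    """List files under `root` whose names match `pattern`, relative to `root`.

    Approximates MANIFEST.in's `recursive-include root pattern` for illustration.
    """
    base = Path(root)
    # rglob searches the directory itself and all subdirectories.
    return sorted(p.relative_to(base).as_posix() for p in base.rglob(pattern) if p.is_file())


if __name__ == "__main__":
    # Run from the repository root to preview which YAML files the rule would pick up.
    for name in recursive_include("ultralytics", "*.yaml"):
        print(name)
```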

README.md

CHANGED

|

@@ -1,12 +1,82 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

|

| 4 |

-

|

| 5 |

-

|

| 6 |

-

|

| 7 |

-

|

| 8 |

-

|

| 9 |

-

|

| 10 |

-

|

| 11 |

-

|

| 12 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

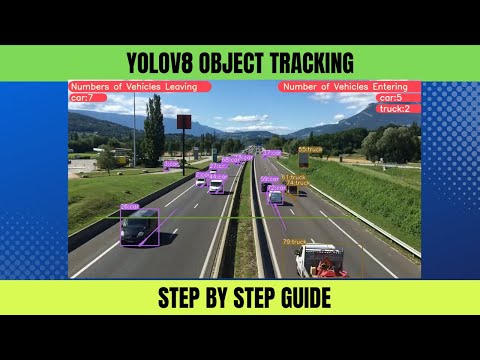

| 1 |

+

<H1 align="center">

|

| 2 |

+

YOLOv8 Object Detection with DeepSORT Tracking(ID + Trails) </H1>

|

| 3 |

+

|

| 4 |

+

## Google Colab File Link (A Single Click Solution)

|

| 5 |

+

The google colab file link for yolov8 object detection and tracking is provided below, you can check the implementation in Google Colab, and its a single click implementation, you just need to select the Run Time as GPU, and click on Run All.

|

| 6 |

+

|

| 7 |

+

[`Google Colab File`](https://colab.research.google.com/drive/1U6cnTQ0JwCg4kdHxYSl2NAhU4wK18oAu?usp=sharing)

|

| 8 |

+

|

| 9 |

+

## Object Detection and Tracking (ID + Trails) using YOLOv8 on Custom Data

|

| 10 |

+

## Google Colab File Link (A Single Click Solution)

|

| 11 |

+

[`Google Colab File`](https://colab.research.google.com/drive/1dEpI2k3m1i0vbvB4bNqPRQUO0gSBTz25?usp=sharing)

|

| 12 |

+

|

| 13 |

+

## YOLOv8 Segmentation with DeepSORT Object Tracking

|

| 14 |

+

|

| 15 |

+

[`Github Repo Link`](https://github.com/MuhammadMoinFaisal/YOLOv8_Segmentation_DeepSORT_Object_Tracking.git)

|

| 16 |

+

|

| 17 |

+

## Steps to run Code

|

| 18 |

+

|

| 19 |

+

- Clone the repository

|

| 20 |

+

```

|

| 21 |

+

git clone https://github.com/MuhammadMoinFaisal/YOLOv8-DeepSORT-Object-Tracking.git

|

| 22 |

+

```

|

| 23 |

+

- Goto the cloned folder.

|

| 24 |

+

```

|

| 25 |

+

cd YOLOv8-DeepSORT-Object-Tracking

|

| 26 |

+

```

|

| 27 |

+

- Install the dependecies

|

| 28 |

+

```

|

| 29 |

+

pip install -e '.[dev]'

|

| 30 |

+

|

| 31 |

+

```

|

| 32 |

+

|

| 33 |

+

- Setting the Directory.

|

| 34 |

+

```

|

| 35 |

+

cd ultralytics/yolo/v8/detect

|

| 36 |

+

|

| 37 |

+

```

|

| 38 |

+

- Downloading the DeepSORT Files From The Google Drive

|

| 39 |

+

```

|

| 40 |

+

|

| 41 |

+

https://drive.google.com/drive/folders/1kna8eWGrSfzaR6DtNJ8_GchGgPMv3VC8?usp=sharing

|

| 42 |

+

```

|

| 43 |

+

- After downloading the DeepSORT Zip file from the drive, unzip it go into the subfolders and place the deep_sort_pytorch folder into the yolo/v8/detect folder

|

| 44 |

+

|

| 45 |

+

- Downloading a Sample Video from the Google Drive

|

| 46 |

+

```

|

| 47 |

+

gdown "https://drive.google.com/uc?id=1rjBn8Fl1E_9d0EMVtL24S9aNQOJAveR5&confirm=t"

|

| 48 |

+

```

|

| 49 |

+

|

| 50 |

+

- Run the code with mentioned command below.

|

| 51 |

+

|

| 52 |

+

- For yolov8 object detection + Tracking

|

| 53 |

+

```

|

| 54 |

+

python predict.py model=yolov8l.pt source="test3.mp4" show=True

|

| 55 |

+

```

|

| 56 |

+

- For yolov8 object detection + Tracking + Vehicle Counting

|

| 57 |

+

- Download the updated predict.py file from the Google Drive and place it into ultralytics/yolo/v8/detect folder

|

| 58 |

+

- Google Drive Link

|

| 59 |

+

```

|

| 60 |

+

https://drive.google.com/drive/folders/1awlzTGHBBAn_2pKCkLFADMd1EN_rJETW?usp=sharing

|

| 61 |

+

```

|

| 62 |

+

- For yolov8 object detection + Tracking + Vehicle Counting

|

| 63 |

+

```

|

| 64 |

+

python predict.py model=yolov8l.pt source="test3.mp4" show=True

|

| 65 |

+

```

|

| 66 |

+

|

| 67 |

+

### RESULTS

|

| 68 |

+

|

| 69 |

+

#### Vehicles Detection, Tracking and Counting

|

| 70 |

+

|

| 71 |

+

|

| 72 |

+

#### Vehicles Detection, Tracking and Counting

|

| 73 |

+

|

| 74 |

+

|

| 75 |

+

|

| 76 |

+

### Watch the Complete Step by Step Explanation

|

| 77 |

+

|

| 78 |

+

- Video Tutorial Link [`YouTube Link`](https://www.youtube.com/watch?v=9jRRZ-WL698)

|

| 79 |

+

|

| 80 |

+

|

| 81 |

+

[]([https://www.youtube.com/watch?v=StTqXEQ2l-Y](https://www.youtube.com/watch?v=9jRRZ-WL698))

|

| 82 |

+

|
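The README's run commands pass settings as `key=value` pairs (`model=yolov8l.pt source="test3.mp4" show=True`). Since `hydra-core` appears in `requirements.txt`, these are presumably Hydra-style command-line overrides read by `predict.py`; the sketch below is not that parser. It is a hypothetical `parse_overrides` helper that only illustrates how such arguments map to a configuration dictionary, converting `True`/`False` and numbers into Python values. Note that the shell strips the quotes, so the script receives `source=test3.mp4`.

```python
import shlex
from typing import Any, Dict, List


def _coerce(value: str) -> Any:
    """Convert common literal spellings to Python values; keep everything else as a string."""
    if value in ("True", "true"):
        return True
    if value in ("False", "false"):
        return False
    for cast in (int, float):
        try:
            return cast(value)
        except ValueError:
            pass
    return value


def parse_overrides(args: List[str]) -> Dict[str, Any]:
    """Turn ["model=yolov8l.pt", "show=True"] into {"model": "yolov8l.pt", "show": True}."""
    config: Dict[str, Any] = {}
    for arg in args:
        key, sep, value = arg.partition("=")
        if not sep:
            raise ValueError(f"expected key=value, got {arg!r}")
        config[key] = _coerce(value)
    return config


if __name__ == "__main__":
    # shlex.split mimics the shell: the quotes around test3.mp4 are removed before Python sees them.
    argv = shlex.split('model=yolov8l.pt source="test3.mp4" show=True')
    print(parse_overrides(argv))
```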

YOLOv8_DeepSORT_TRACKING_SCRIPT.ipynb ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:c0918af0bfa0ef2e0e9d26d9a8b06e2d706f5a5685d4e19eb58877a8036092ac
+size 16618677

YOLOv8_Detection_Tracking_CustomData_Complete.ipynb ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:3d514434f9b1d8f5f3a7fb72e782f82d3c136523a7fc7bb41c2a2a390f4aa783
+size 22625415
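Both notebooks are committed as Git LFS pointer files rather than as notebook JSON: each pointer is a short text file of `key value` lines giving the spec `version`, the content hash (`oid`), and the size in bytes of the real file, which LFS stores and fetches separately. A minimal sketch for reading such a pointer is shown below; the `parse_lfs_pointer` helper is hypothetical, checks only the three keys visible above, and does not validate the full pointer specification.

```python
from typing import Dict


def parse_lfs_pointer(text: str) -> Dict[str, object]:
    """Parse a Git LFS pointer file into {'version', 'oid_type', 'oid', 'size'}."""
    fields = {}
    for line in text.strip().splitlines():
        key, _, value = line.partition(" ")  # each line is "key value"
        fields[key] = value
    if not fields.get("version", "").startswith("https://git-lfs.github.com/spec/"):
        raise ValueError("not a Git LFS pointer")
    oid_type, _, oid = fields["oid"].partition(":")  # e.g. "sha256:<hex digest>"
    return {"version": fields["version"], "oid_type": oid_type, "oid": oid, "size": int(fields["size"])}


pointer = """version https://git-lfs.github.com/spec/v1
oid sha256:c0918af0bfa0ef2e0e9d26d9a8b06e2d706f5a5685d4e19eb58877a8036092ac
size 16618677
"""
info = parse_lfs_pointer(pointer)
print(f"{info['oid_type']} object, {info['size'] / 1e6:.1f} MB")
```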

figure/figure1.png ADDED (Git LFS)

figure/figure2.png ADDED (Git LFS)

figure/figure3.png ADDED (Git LFS)

mkdocs.yml ADDED

@@ -0,0 +1,95 @@
+site_name: Ultralytics Docs
+repo_url: https://github.com/ultralytics/ultralytics
+repo_name: Ultralytics
+
+theme:
+  name: "material"
+  logo: https://github.com/ultralytics/assets/raw/main/logo/Ultralytics-logomark-white.png
+  icon:
+    repo: fontawesome/brands/github
+    admonition:
+      note: octicons/tag-16
+      abstract: octicons/checklist-16
+      info: octicons/info-16
+      tip: octicons/squirrel-16
+      success: octicons/check-16
+      question: octicons/question-16
+      warning: octicons/alert-16
+      failure: octicons/x-circle-16
+      danger: octicons/zap-16
+      bug: octicons/bug-16
+      example: octicons/beaker-16
+      quote: octicons/quote-16
+
+  palette:
+    # Palette toggle for light mode
+    - scheme: default
+      toggle:
+        icon: material/brightness-7
+        name: Switch to dark mode
+
+    # Palette toggle for dark mode
+    - scheme: slate
+      toggle:
+        icon: material/brightness-4
+        name: Switch to light mode
+  features:
+    - content.code.annotate
+    - content.tooltips
+    - search.highlight
+    - search.share
+    - search.suggest
+    - toc.follow
+
+extra_css:
+  - stylesheets/style.css
+
+markdown_extensions:
+  # Div text decorators
+  - admonition
+  - pymdownx.details
+  - pymdownx.superfences
+  - tables
+  - attr_list
+  - def_list
+  # Syntax highlight
+  - pymdownx.highlight:
+      anchor_linenums: true
+  - pymdownx.inlinehilite
+  - pymdownx.snippets
+
+  # Button
+  - attr_list
+
+  # Content tabs
+  - pymdownx.superfences
+  - pymdownx.tabbed:
+      alternate_style: true
+
+  # Highlight
+  - pymdownx.critic
+  - pymdownx.caret
+  - pymdownx.keys
+  - pymdownx.mark
+  - pymdownx.tilde
+plugins:
+  - mkdocstrings
+
+# Primary navigation
+nav:
+  - Quickstart: quickstart.md
+  - CLI: cli.md
+  - Python Interface: sdk.md
+  - Configuration: config.md
+  - Customization Guide: engine.md
+  - Ultralytics HUB: hub.md
+  - iOS and Android App: app.md
+  - Reference:
+      - Python Model interface: reference/model.md
+      - Engine:
+          - Trainer: reference/base_trainer.md
+          - Validator: reference/base_val.md
+          - Predictor: reference/base_pred.md
+          - Exporter: reference/exporter.md
+      - nn Module: reference/nn.md
+      - operations: reference/ops.md
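In `mkdocs.yml`, `nav` is a nested list in which each entry maps a page title either to a Markdown file or to a further list of entries, which MkDocs renders as a section. The sketch below mirrors the `nav` above as Python lists and dictionaries (assuming the nesting shown; YAML loading is skipped to keep it dependency-free) and walks it to list every page with its section path. The function name `flatten_nav` is hypothetical, and it assumes every entry is a `title: target` mapping, as all entries here are.

```python
from typing import Any, List, Tuple

# The `nav` section above, as it would look after YAML loading.
NAV = [
    {"Quickstart": "quickstart.md"},
    {"CLI": "cli.md"},
    {"Python Interface": "sdk.md"},
    {"Configuration": "config.md"},
    {"Customization Guide": "engine.md"},
    {"Ultralytics HUB": "hub.md"},
    {"iOS and Android App": "app.md"},
    {"Reference": [
        {"Python Model interface": "reference/model.md"},
        {"Engine": [
            {"Trainer": "reference/base_trainer.md"},
            {"Validator": "reference/base_val.md"},
            {"Predictor": "reference/base_pred.md"},
            {"Exporter": "reference/exporter.md"},
        ]},
        {"nn Module": "reference/nn.md"},
        {"operations": "reference/ops.md"},
    ]},
]


def flatten_nav(entries: List[Any], parents: Tuple[str, ...] = ()) -> List[Tuple[str, str]]:
    """Return (breadcrumb, markdown path) pairs for every page in a nav tree."""
    pages: List[Tuple[str, str]] = []
    for entry in entries:
        for title, target in entry.items():
            trail = parents + (title,)
            if isinstance(target, list):  # a section: recurse into its children
                pages.extend(flatten_nav(target, trail))
            else:  # a page: the value is a Markdown file path
                pages.append((" > ".join(trail), target))
    return pages


for crumb, path in flatten_nav(NAV):
    print(f"{crumb:40} {path}")
```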

requirements.txt

ADDED

@@ -0,0 +1,46 @@

```text
# Ultralytics requirements
# Usage: pip install -r requirements.txt

# Base ----------------------------------------
hydra-core>=1.2.0
matplotlib>=3.2.2
numpy>=1.18.5
opencv-python>=4.1.1
Pillow>=7.1.2
PyYAML>=5.3.1
requests>=2.23.0
scipy>=1.4.1
torch>=1.7.0
torchvision>=0.8.1
tqdm>=4.64.0

# Logging -------------------------------------
tensorboard>=2.4.1
# clearml
# comet

# Plotting ------------------------------------
pandas>=1.1.4
seaborn>=0.11.0

# Export --------------------------------------
# coremltools>=6.0 # CoreML export
# onnx>=1.12.0 # ONNX export
# onnx-simplifier>=0.4.1 # ONNX simplifier
# nvidia-pyindex # TensorRT export
# nvidia-tensorrt # TensorRT export
# scikit-learn==0.19.2 # CoreML quantization
# tensorflow>=2.4.1 # TF exports (-cpu, -aarch64, -macos)
# tensorflowjs>=3.9.0 # TF.js export
# openvino-dev # OpenVINO export

# Extras --------------------------------------
ipython # interactive notebook
psutil # system utilization
thop>=0.1.1 # FLOPs computation
# albumentations>=1.0.3
# pycocotools>=2.0.6 # COCO mAP
# roboflow

# HUB -----------------------------------------
GitPython>=3.1.24
```
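The setup.py in this upload converts this file into `install_requires` using `pkg_resources.parse_requirements`. Because of that, commented-out lines such as the optional export backends are not installed. The sketch below approximates the filtering with the standard library only. `requirement_specs` is a hypothetical helper, and unlike `pkg_resources` it does not validate or normalise version specifiers.

```python
from typing import List


def requirement_specs(text: str) -> List[str]:
    """Return the active requirement strings from requirements.txt-style text.

    Simplified stand-in for pkg_resources.parse_requirements (an assumption, not
    the real implementation): it only drops blank lines, full-line comments and
    ' #' inline comments, and does not parse version specifiers.
    """
    specs = []
    for raw in text.splitlines():
        line = raw.split(" #", 1)[0].strip()  # drop any inline comment, then whitespace
        if line and not line.startswith("#"):  # skip blanks and commented-out deps
            specs.append(line)
    return specs


SAMPLE = """\
# Base ----------------------------------------
torch>=1.7.0
# Export --------------------------------------
# onnx>=1.12.0 # ONNX export
# Extras --------------------------------------
ipython # interactive notebook
thop>=0.1.1 # FLOPs computation
"""

print(requirement_specs(SAMPLE))  # ['torch>=1.7.0', 'ipython', 'thop>=0.1.1']
```

To enable an optional export backend, you would uncomment its line before running `pip install -e .`.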

setup.cfg

ADDED

@@ -0,0 +1,54 @@

```ini
# Project-wide configuration file, can be used for package metadata and other tool configurations
# Example usage: global configuration for PEP8 (via flake8) settings or default pytest arguments
# Local usage: pip install pre-commit, pre-commit run --all-files

[metadata]
license_file = LICENSE
description_file = README.md

[tool:pytest]
norecursedirs =
    .git
    dist
    build
addopts =
    --doctest-modules
    --durations=25
    --color=yes

[flake8]
max-line-length = 120
exclude = .tox,*.egg,build,temp
select = E,W,F
doctests = True
verbose = 2
# https://pep8.readthedocs.io/en/latest/intro.html#error-codes
format = pylint
# see: https://www.flake8rules.com/
ignore = E731,F405,E402,F401,W504,E127,E231,E501,F403
# E731: Do not assign a lambda expression, use a def
# F405: name may be undefined, or defined from star imports: module
# E402: module level import not at top of file
# F401: module imported but unused
# W504: line break after binary operator
# E127: continuation line over-indented for visual indent
# E231: missing whitespace after ‘,’, ‘;’, or ‘:’
# E501: line too long
# F403: ‘from module import *’ used; unable to detect undefined names

[isort]
# https://pycqa.github.io/isort/docs/configuration/options.html
line_length = 120
# see: https://pycqa.github.io/isort/docs/configuration/multi_line_output_modes.html
multi_line_output = 0

[yapf]
based_on_style = pep8
spaces_before_comment = 2
COLUMN_LIMIT = 120
COALESCE_BRACKETS = True
SPACES_AROUND_POWER_OPERATOR = True
SPACE_BETWEEN_ENDING_COMMA_AND_CLOSING_BRACKET = False
SPLIT_BEFORE_CLOSING_BRACKET = False
SPLIT_BEFORE_FIRST_ARGUMENT = False
# EACH_DICT_ENTRY_ON_SEPARATE_LINE = False
```
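Each section of setup.cfg is read by a different tool (pytest, flake8, isort, yapf), and each tool has its own parser. As a rough approximation, Python's standard `configparser` shows why the `norecursedirs` and `addopts` entries must be indented: an indented line continues the value of the previous key. This sketch assumes configparser-like semantics. It is not how pytest or flake8 actually load the file.

```python
import configparser

# Excerpt of the setup.cfg above; indented lines continue the previous key's value.
CFG = """\
[tool:pytest]
norecursedirs =
    .git
    dist
    build
addopts =
    --doctest-modules
    --durations=25
    --color=yes

[flake8]
max-line-length = 120
select = E,W,F
ignore = E731,F405,E402,F401,W504,E127,E231,E501,F403
"""

parser = configparser.ConfigParser()
parser.read_string(CFG)

addopts = parser["tool:pytest"]["addopts"].split()  # multi-line value -> list of arguments
ignored = parser["flake8"]["ignore"].split(",")  # comma-separated error codes
max_len = parser.getint("flake8", "max-line-length")
print(addopts, ignored[:3], max_len)
```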

setup.py

ADDED

@@ -0,0 +1,53 @@

```python
# Ultralytics YOLO 🚀, GPL-3.0 license

import re
from pathlib import Path

import pkg_resources as pkg
from setuptools import find_packages, setup

# Settings
FILE = Path(__file__).resolve()
ROOT = FILE.parent  # root directory
README = (ROOT / "README.md").read_text(encoding="utf-8")
REQUIREMENTS = [f'{x.name}{x.specifier}' for x in pkg.parse_requirements((ROOT / 'requirements.txt').read_text())]


def get_version():
    file = ROOT / 'ultralytics/__init__.py'
    return re.search(r'^__version__ = [\'"]([^\'"]*)[\'"]', file.read_text(), re.M)[1]


setup(
    name="ultralytics",  # name of pypi package
    version=get_version(),  # version of pypi package
    python_requires=">=3.7.0",
    license='GPL-3.0',
    description='Ultralytics YOLOv8 and HUB',
    long_description=README,
    long_description_content_type="text/markdown",
    url="https://github.com/ultralytics/ultralytics",
    project_urls={
        'Bug Reports': 'https://github.com/ultralytics/ultralytics/issues',
        'Funding': 'https://ultralytics.com',
        'Source': 'https://github.com/ultralytics/ultralytics',},
    author="Ultralytics",
    author_email='[email protected]',
    packages=find_packages(),  # required
    include_package_data=True,
    install_requires=REQUIREMENTS,
    extras_require={
        'dev':
        ['check-manifest', 'pytest', 'pytest-cov', 'coverage', 'mkdocs', 'mkdocstrings[python]', 'mkdocs-material'],},
    classifiers=[
        "Intended Audience :: Developers", "Intended Audience :: Science/Research",
        "License :: OSI Approved :: GNU General Public License v3 (GPLv3)", "Programming Language :: Python :: 3",
        "Programming Language :: Python :: 3.7", "Programming Language :: Python :: 3.8",
        "Programming Language :: Python :: 3.9", "Programming Language :: Python :: 3.10",
        "Topic :: Software Development", "Topic :: Scientific/Engineering",
        "Topic :: Scientific/Engineering :: Artificial Intelligence",
        "Topic :: Scientific/Engineering :: Image Recognition", "Operating System :: POSIX :: Linux",
        "Operating System :: MacOS", "Operating System :: Microsoft :: Windows"],
    keywords="machine-learning, deep-learning, vision, ML, DL, AI, YOLO, YOLOv3, YOLOv5, YOLOv8, HUB, Ultralytics",
    entry_points={
        'console_scripts': ['yolo = ultralytics.yolo.cli:cli', 'ultralytics = ultralytics.yolo.cli:cli'],})
```
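`get_version()` reads `__version__` from `ultralytics/__init__.py` with a regular expression instead of importing the package. Importing it at install time would pull in `ultralytics.hub`, which needs `psutil`, `requests` and `IPython` before they have been installed. The sketch below reuses the same pattern but raises a clear error when no match is found; the original `re.search(...)[1]` would fail with a `TypeError` on `None`. `version_from_text` is a hypothetical name.

```python
import re

# Same pattern and flag as get_version() in setup.py; re.M lets ^ match at every line start.
VERSION_RE = re.compile(r'^__version__ = [\'"]([^\'"]*)[\'"]', re.M)


def version_from_text(text: str) -> str:
    """Extract __version__ from module source text without importing the module."""
    match = VERSION_RE.search(text)
    if match is None:  # get_version() would raise TypeError here instead
        raise ValueError("no __version__ assignment found")
    return match.group(1)


init_text = '# Ultralytics YOLO 🚀, GPL-3.0 license\n\n__version__ = "8.0.3"\n\nfrom ultralytics.hub import checks\n'
print(version_from_text(init_text))  # 8.0.3
```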

ultralytics/__init__.py

ADDED

@@ -0,0 +1,9 @@

```python
# Ultralytics YOLO 🚀, GPL-3.0 license

__version__ = "8.0.3"

from ultralytics.hub import checks
from ultralytics.yolo.engine.model import YOLO
from ultralytics.yolo.utils import ops

__all__ = ["__version__", "YOLO", "hub", "checks"]  # allow simpler import
```

ultralytics/hub/__init__.py

ADDED

@@ -0,0 +1,133 @@

```python
# Ultralytics YOLO 🚀, GPL-3.0 license

import os
import shutil

import psutil
import requests
from IPython import display  # to display images and clear console output

from ultralytics.hub.auth import Auth
from ultralytics.hub.session import HubTrainingSession
from ultralytics.hub.utils import PREFIX, split_key
from ultralytics.yolo.utils import LOGGER, emojis, is_colab
from ultralytics.yolo.utils.torch_utils import select_device
from ultralytics.yolo.v8.detect import DetectionTrainer


def checks(verbose=True):
    if is_colab():
        shutil.rmtree('sample_data', ignore_errors=True)  # remove colab /sample_data directory

    if verbose:
        # System info
        gib = 1 << 30  # bytes per GiB
        ram = psutil.virtual_memory().total
        total, used, free = shutil.disk_usage("/")
        display.clear_output()
        s = f'({os.cpu_count()} CPUs, {ram / gib:.1f} GB RAM, {(total - free) / gib:.1f}/{total / gib:.1f} GB disk)'
    else:
        s = ''

    select_device(newline=False)
    LOGGER.info(f'Setup complete ✅ {s}')
```
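`checks()` logs a one-line system summary. The formatting step can be isolated and tested as shown below. `format_system_info` is a hypothetical helper, and RAM is passed in because the standard library has no portable replacement for `psutil.virtual_memory()`. The values are divided by `1 << 30`, so they are in GiB even though the message says GB.

```python
import os
import shutil

GIB = 1 << 30  # bytes per GiB, as in checks()


def format_system_info(cpus, ram_bytes, disk_total, disk_free):
    """Build the same summary string that checks() logs (hypothetical helper)."""
    used = disk_total - disk_free  # checks() also derives "used" as total - free
    return f'({cpus} CPUs, {ram_bytes / GIB:.1f} GB RAM, {used / GIB:.1f}/{disk_total / GIB:.1f} GB disk)'


total, _used, free = shutil.disk_usage("/")
# RAM is a made-up 16 GiB here; checks() reads it from psutil instead.
print(format_system_info(os.cpu_count(), 16 * GIB, total, free))
```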

```python
def start(key=''):
    # Start training models with Ultralytics HUB. Usage: from ultralytics.hub import start; start('API_KEY')
    def request_api_key(attempts=0):
        """Prompt the user to input their API key"""
        import getpass

        max_attempts = 3
        tries = f"Attempt {str(attempts + 1)} of {max_attempts}" if attempts > 0 else ""
        LOGGER.info(f"{PREFIX}Login. {tries}")
        input_key = getpass.getpass("Enter your Ultralytics HUB API key:\n")
        auth.api_key, model_id = split_key(input_key)
        if not auth.authenticate():
            attempts += 1
            LOGGER.warning(f"{PREFIX}Invalid API key ⚠️\n")
            if attempts < max_attempts:
                return request_api_key(attempts)
            raise ConnectionError(emojis(f"{PREFIX}Failed to authenticate ❌"))
        else:
            return model_id

    try:
        api_key, model_id = split_key(key)
        auth = Auth(api_key)  # attempts cookie login if no api key is present
        attempts = 1 if len(key) else 0
        if not auth.get_state():
            if len(key):
                LOGGER.warning(f"{PREFIX}Invalid API key ⚠️\n")
            model_id = request_api_key(attempts)
        LOGGER.info(f"{PREFIX}Authenticated ✅")
        if not model_id:
            raise ConnectionError(emojis('Connecting with global API key is not currently supported. ❌'))
        session = HubTrainingSession(model_id=model_id, auth=auth)
        session.check_disk_space()

        # TODO: refactor, hardcoded for v8
        args = session.model.copy()
        args.pop("id")
        args.pop("status")
        args.pop("weights")
        args["data"] = "coco128.yaml"
        args["model"] = "yolov8n.yaml"
        args["batch_size"] = 16
        args["imgsz"] = 64

        trainer = DetectionTrainer(overrides=args)
        session.register_callbacks(trainer)
        setattr(trainer, 'hub_session', session)
        trainer.train()
    except Exception as e:
        LOGGER.warning(f"{PREFIX}{e}")
```
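Inside `start()`, `request_api_key()` retries by recursion and gives up after `max_attempts` failures. When a key was passed to `start()`, the count begins at `attempts=1`, which leaves two more prompts. Below is a loop-based sketch of the same policy. The `prompt` and `authenticate` callables are hypothetical stand-ins for `getpass.getpass` and `Auth.authenticate`, which makes the loop testable offline.

```python
from typing import Callable


def request_key_with_retries(prompt: Callable[[int], str],
                             authenticate: Callable[[str], bool],
                             attempts: int = 0,
                             max_attempts: int = 3) -> str:
    """Iterative sketch of request_api_key()'s retry policy.

    `prompt` receives the current attempt count and returns a key; `authenticate`
    returns True for a valid key. Both are injected, hypothetical callables.
    """
    while True:
        key = prompt(attempts)
        if authenticate(key):
            return key
        attempts += 1  # count the failed attempt, as request_api_key() does
        if attempts >= max_attempts:
            raise ConnectionError("Failed to authenticate")


answers = iter(["bad-key", "good-key"])
key = request_key_with_retries(lambda n: next(answers), lambda k: k == "good-key")
print(key)  # good-key
```

A loop avoids growing the call stack, and injecting the I/O keeps the retry policy separate from `getpass` and the HUB client.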

```python
def reset_model(key=''):
    # Reset a trained model to an untrained state
    api_key, model_id = split_key(key)
    r = requests.post('https://api.ultralytics.com/model-reset', json={"apiKey": api_key, "modelId": model_id})

    if r.status_code == 200:
        LOGGER.info(f"{PREFIX}model reset successfully")
        return
    LOGGER.warning(f"{PREFIX}model reset failure {r.status_code} {r.reason}")


def export_model(key='', format='torchscript'):
    # Export a model to the requested format
    api_key, model_id = split_key(key)
    formats = ('torchscript', 'onnx', 'openvino', 'engine', 'coreml', 'saved_model', 'pb', 'tflite', 'edgetpu', 'tfjs',
               'ultralytics_tflite', 'ultralytics_coreml')
    assert format in formats, f"ERROR: Unsupported export format '{format}' passed, valid formats are {formats}"

    r = requests.post('https://api.ultralytics.com/export',
                      json={
                          "apiKey": api_key,
                          "modelId": model_id,
                          "format": format})
    assert r.status_code == 200, f"{PREFIX}{format} export failure {r.status_code} {r.reason}"
    LOGGER.info(f"{PREFIX}{format} export started ✅")
```
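`export_model()` and `get_export()` both check `format` with `assert` against the same hard-coded tuple before posting JSON to the HUB API. The sketch below shows that validation step with no network call. `build_export_payload` and `EXPORT_FORMATS` are hypothetical names, and the parameter is called `fmt` to avoid shadowing the built-in `format`. The sketch raises `ValueError` because `assert` statements are stripped when Python runs with `-O`.

```python
# Copied from export_model(); a single shared constant would keep both functions in sync.
EXPORT_FORMATS = ('torchscript', 'onnx', 'openvino', 'engine', 'coreml', 'saved_model', 'pb', 'tflite', 'edgetpu',
                  'tfjs', 'ultralytics_tflite', 'ultralytics_coreml')


def build_export_payload(api_key: str, model_id: str, fmt: str = 'torchscript') -> dict:
    """Validate the export format and build the JSON body that export_model() posts (hypothetical helper)."""
    if fmt not in EXPORT_FORMATS:
        raise ValueError(f"Unsupported export format '{fmt}', valid formats are {EXPORT_FORMATS}")
    return {"apiKey": api_key, "modelId": model_id, "format": fmt}


payload = build_export_payload("API_KEY", "MODEL_ID", "onnx")  # placeholder credentials
print(payload)
```

Passing a `timeout=` argument to `requests.post` would also keep an unresponsive API from hanging these calls indefinitely.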

```python
def get_export(key='', format='torchscript'):
    # Get an exported model dictionary with download URL
    api_key, model_id = split_key(key)
    formats = ('torchscript', 'onnx', 'openvino', 'engine', 'coreml', 'saved_model', 'pb', 'tflite', 'edgetpu', 'tfjs',
               'ultralytics_tflite', 'ultralytics_coreml')
    assert format in formats, f"ERROR: Unsupported export format '{format}' passed, valid formats are {formats}"

    r = requests.post('https://api.ultralytics.com/get-export',
                      json={
                          "apiKey": api_key,
                          "modelId": model_id,
                          "format": format})
```