Post

781

ColPali: A new approach to efficient and intelligent document retrieval 🚀

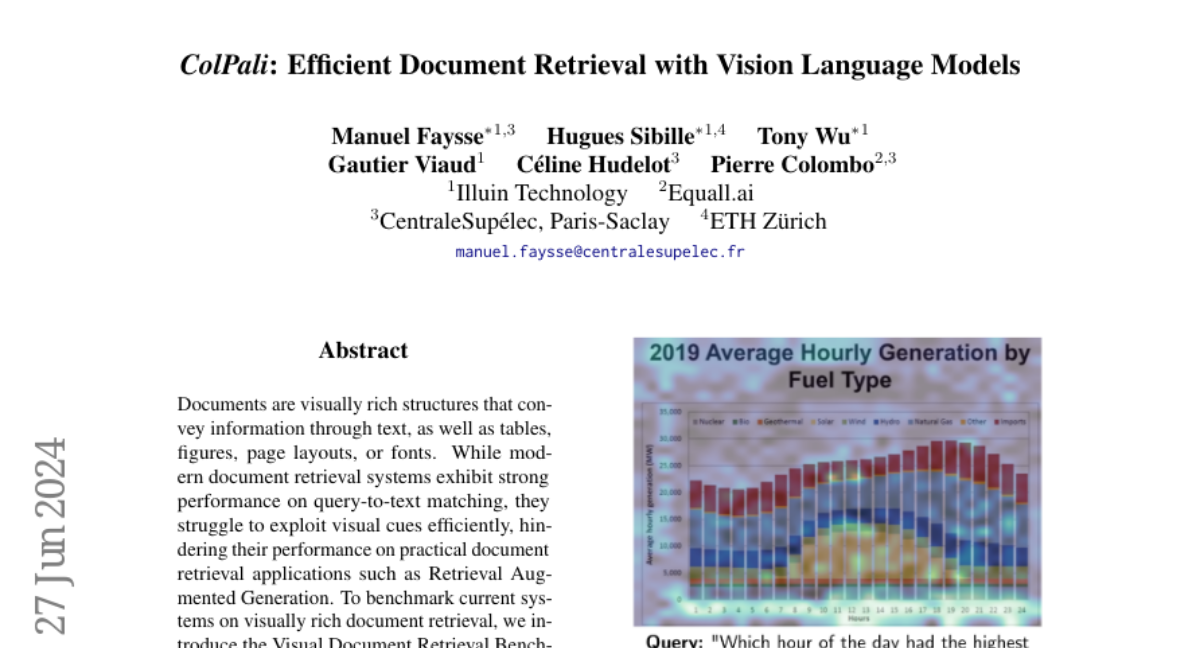

Our latest research paper, "ColPali: Efficient Document Retrieval with Vision Language Models," introduces a groundbreaking approach to large-scale visual document analysis. By leveraging Vision Language Models (VLMs), we have created a new framework for document retrieval that's both powerful and efficient.

Key Insights:

💡 ColPali combines ColBERT's multi-vector strategy with VLMs' document understanding capabilities

⚙️ ColPali is based on PaliGemma-3B (SigLIP, Gemma-2B) + a linear projection layer and is trained to maximize the similarity between the document and the query embeddings

📊 The Vision Document Retrieval benchmark (ViDoRe) is a challenging dataset that spans various industry topics and aims at matching real-life retrieval scenarios

🏆 ColPali outperforms existing models on all datasets in ViDoRe (average NDCG@5 of 81.3% vs 67.0% for the best baseline model)

⚡ ColPali is faster at document embedding compared to traditional PDF parser pipelines, making ColPali viable for industrial use

🔍 ColPali is highly interpretable thanks to patch-based similarity maps

Dive deeper into ColPali and explore our resources:

📑 Full paper: arxiv.org/abs/2407.01449

🛠️ Datasets, model weights, evaluation code, leaderboard, demos: huggingface.co/vidore

Shoutout to my amazing co-authors Manuel Faysse ( @manu ) and Hugues Sibille ( @HugSib ). We are grateful for the invaluable feedback from Bilel Omrani, Gautier Viaud, Celine Hudelot, and Pierre Colombo. This work is sponsored by ILLUIN Technology. ✨

Our latest research paper, "ColPali: Efficient Document Retrieval with Vision Language Models," introduces a groundbreaking approach to large-scale visual document analysis. By leveraging Vision Language Models (VLMs), we have created a new framework for document retrieval that's both powerful and efficient.

Key Insights:

💡 ColPali combines ColBERT's multi-vector strategy with VLMs' document understanding capabilities

⚙️ ColPali is based on PaliGemma-3B (SigLIP, Gemma-2B) + a linear projection layer and is trained to maximize the similarity between the document and the query embeddings

📊 The Vision Document Retrieval benchmark (ViDoRe) is a challenging dataset that spans various industry topics and aims at matching real-life retrieval scenarios

🏆 ColPali outperforms existing models on all datasets in ViDoRe (average NDCG@5 of 81.3% vs 67.0% for the best baseline model)

⚡ ColPali is faster at document embedding compared to traditional PDF parser pipelines, making ColPali viable for industrial use

🔍 ColPali is highly interpretable thanks to patch-based similarity maps

Dive deeper into ColPali and explore our resources:

📑 Full paper: arxiv.org/abs/2407.01449

🛠️ Datasets, model weights, evaluation code, leaderboard, demos: huggingface.co/vidore

Shoutout to my amazing co-authors Manuel Faysse ( @manu ) and Hugues Sibille ( @HugSib ). We are grateful for the invaluable feedback from Bilel Omrani, Gautier Viaud, Celine Hudelot, and Pierre Colombo. This work is sponsored by ILLUIN Technology. ✨