Update README.md

README.md

CHANGED

|

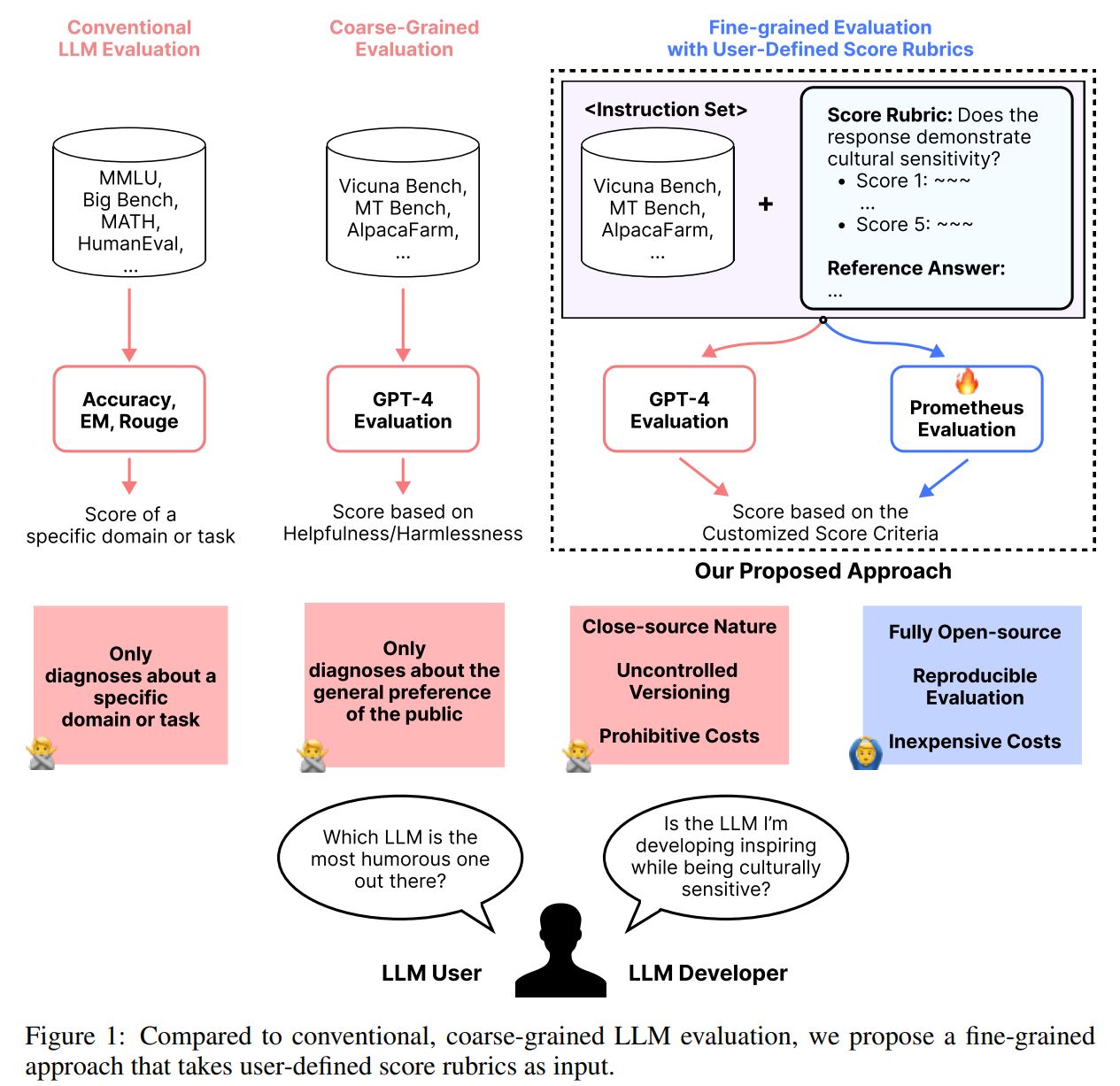

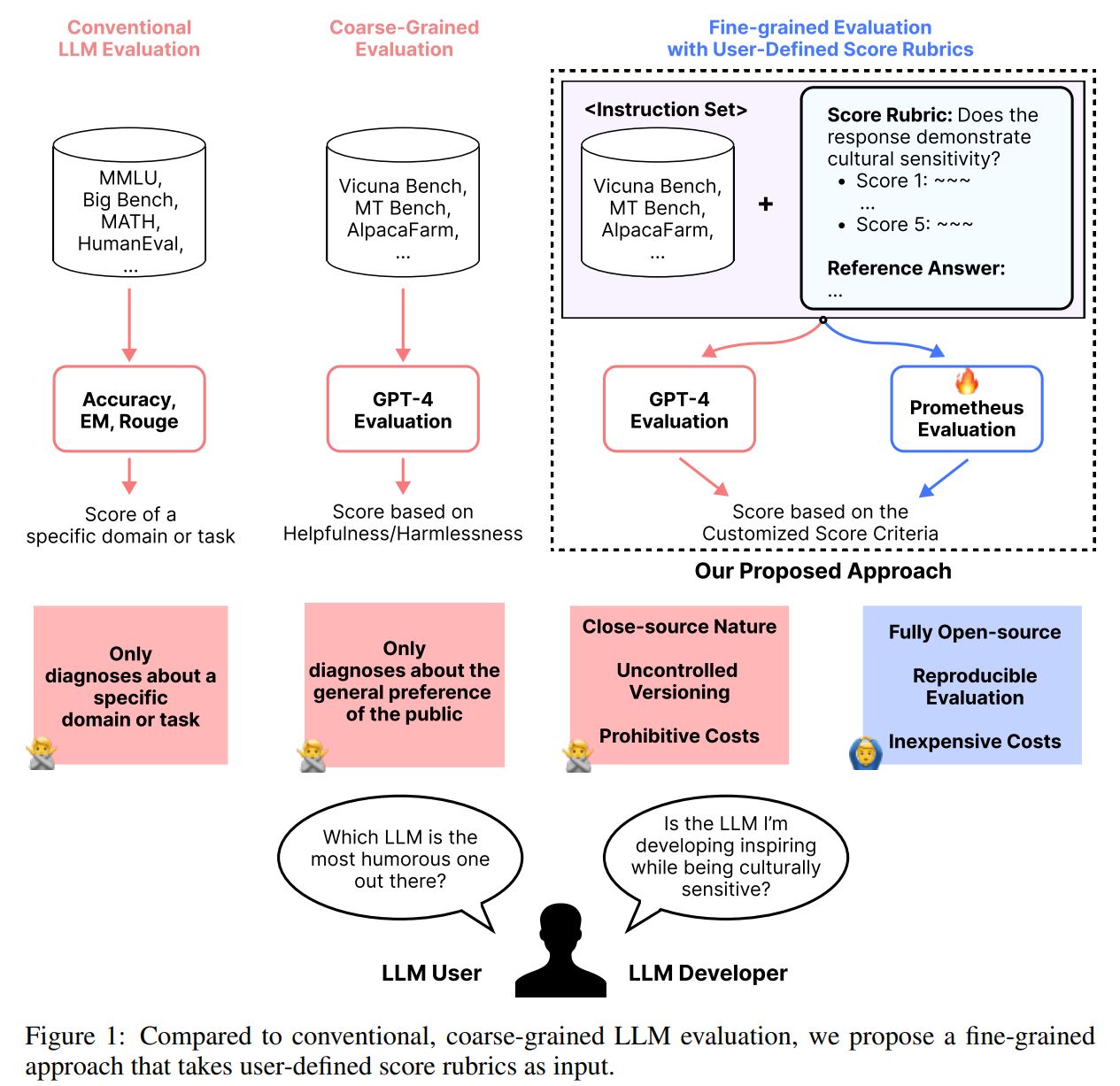

@@ -25,20 +25,27 @@ Prometheus 2 is an alternative of GPT-4 evaluation when doing fine-grained evalu
 
 
 
-Prometheus 2 is a language model using Mistral-Instruct as a base model.
+Prometheus 2 is a language model using [Mistral-Instruct](https://huggingface.co/mistralai/Mistral-7B-Instruct-v0.2) as a base model.
+It is fine-tuned on 100K feedback within the [Feedback Collection](https://huggingface.co/datasets/prometheus-eval/Feedback-Collection) and 200K feedback within the [Preference Collection](https://huggingface.co/datasets/prometheus-eval/Preference-Collection).
+It is also made by weight merging to support both absolute grading (direct assessment) and relative grading (pairwise ranking).
+The surprising thing is that we find weight merging also improves performance on each format.
 
 # Model Details
 
 ## Model Description
+
 - **Model type:** Language model
 - **Language(s) (NLP):** English
 - **License:** Apache 2.0
-- **Related Models:** All Prometheus Checkpoints
+- **Related Models:** [All Prometheus Checkpoints](https://huggingface.co/models?search=prometheus-eval/Prometheus)
 - **Resources for more information:**
-- Research paper
-- GitHub Repo
+- [Research paper](https://arxiv.org/abs/2405.01535)
+- [GitHub Repo](https://github.com/prometheus-eval/prometheus-eval)
+
 
-Prometheus is trained with two different sizes (7B and 8x7B).
+Prometheus is trained with two different sizes (7B and 8x7B).
+You could check the 7B sized LM on [this page](https://huggingface.co/prometheus-eval/prometheus-2-7b-v2.0).
+Also, check out our dataset as well on [this page](https://huggingface.co/datasets/prometheus-eval/Feedback-Collection) and [this page](https://huggingface.co/datasets/prometheus-eval/Preference-Collection).
 
 ## Prompt Format
 