Fine-tuned Gemma 2B model for the CrewAI library

CrewAI Fine-tuned Model

This is a LoRA fine-tuned version of gemma-2B for the CrewAI library. Given only a Role as user input, it automatically generates the Goal and Backstory descriptions used by CrewAI's agent method (agent()), so these parameters can be produced by an LLM instead of being written by hand.

You can run the model on a GPU using the following code.

import torch
from transformers import AutoModelForCausalLM, AutoTokenizer

model_name = "DrDrek/crewai-finetuned-model"
input_text = "junior software developer"

# Load the model in half precision; device_map="auto" places it on the GPU when one is available.
model = AutoModelForCausalLM.from_pretrained(
    model_name,
    low_cpu_mem_usage=True,
    return_dict=True,
    torch_dtype=torch.float16,
    device_map="auto",
)
tokenizer = AutoTokenizer.from_pretrained(model_name, trust_remote_code=True)

# Tokenize the role and move the tensors to the same device as the model.
inputs = tokenizer(input_text, return_tensors="pt").to(model.device)
outputs = model.generate(**inputs, max_length=128)
output = tokenizer.decode(outputs[0])
#print("llm output:", output)

# The parsing below assumes the output is laid out as role, backstory, goal,
# separated by blank lines and terminated by <eos>.
backstory = output.split("\n\n")[1]
goal = output.split(backstory)[1].replace("<eos>", "").replace("\n\n", "")

print("backstory:", backstory)
print("goal:", goal)

#Output:

#>>backstory: I am a junior software developer with a passion for building innovative and user-friendly applications. I am currently studying Computer Science at the University of Waterloo, and I am always looking for new challenges and opportunities to grow as a developer.

#>>goal: I am a strong believer in the power of technology to improve people's lives, and I am dedicated to using my skills to make a positive impact in the world.I am always looking for new ways to learn and grow, and I am excited to see where my journey takes me.
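The string splitting in the snippet above fails with an IndexError if the model returns fewer paragraphs than expected. A slightly more defensive parser could look like the sketch below. The helper name `parse_agent_fields` is hypothetical, and the assumed layout (role, backstory, then goal, separated by blank lines, with `<bos>`/`<eos>` special tokens) is inferred from the example output rather than guaranteed by the model.

```python
def parse_agent_fields(output: str) -> dict:
    """Split decoded model output into role, backstory and goal.

    Assumption: paragraphs are separated by blank lines and appear in the
    order role, backstory, goal. This is inferred, not guaranteed.
    """
    # Drop special tokens that tokenizer.decode() leaves in the text.
    text = output.replace("<bos>", "").replace("<eos>", "").strip()
    # Split on blank lines and ignore empty chunks.
    parts = [p.strip() for p in text.split("\n\n") if p.strip()]
    if len(parts) < 3:
        raise ValueError(
            f"expected role, backstory and goal, got {len(parts)} paragraph(s)"
        )
    role, backstory = parts[0], parts[1]
    # Everything after the backstory is treated as the goal.
    goal = " ".join(parts[2:])
    return {"role": role, "backstory": backstory, "goal": goal}


# Made-up sample string, used only to illustrate the assumed layout.
sample = (
    "<bos>junior software developer\n\n"
    "I build apps.\n\n"
    "I want to help people.\n\n"
    "I keep learning.<eos>"
)
fields = parse_agent_fields(sample)
print("backstory:", fields["backstory"])
print("goal:", fields["goal"])
```

Unlike the original snippet, this version joins multiple goal paragraphs with a space and raises a clear error on unexpected output. The returned values can then be supplied as the Goal and Backstory parameters alongside the Role.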

Training Data

The model was trained on 103 rows, each describing a role together with its goal and backstory. See this dataset for details.

Evaluation

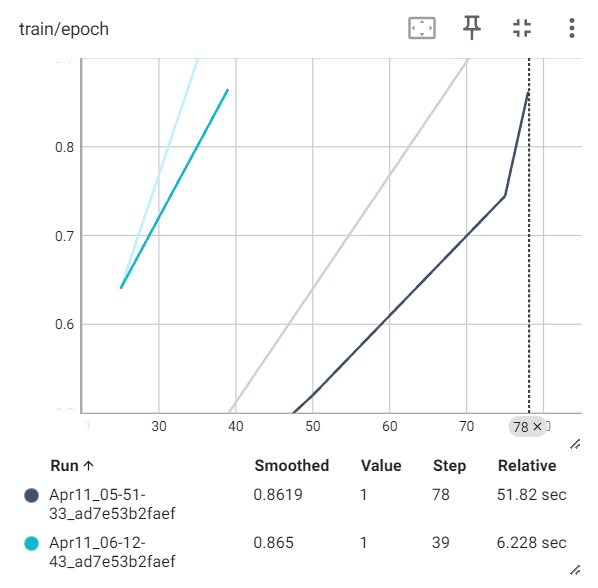

The model gives a training loss of 0.931 on training data with 25 setps batch size as shown in below figure:

Future Development

Because the model was trained on a tiny dataset, it may sometimes yield inaccurate output. Training on a larger dataset in the future should help reduce this.
