Model Description

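A Muice (沐雪) role-play model fine-tuned from FlagAlpha/Llama3-Chinese-8B-Instruct. It shows some advantage in proactive conversation (with a fixed prompt). Using this model requires the base model itself.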

For Muice's character settings, see: Moemu/Muice-Chatbot

Part of the training set is available at: Moemu/Muice-Dataset

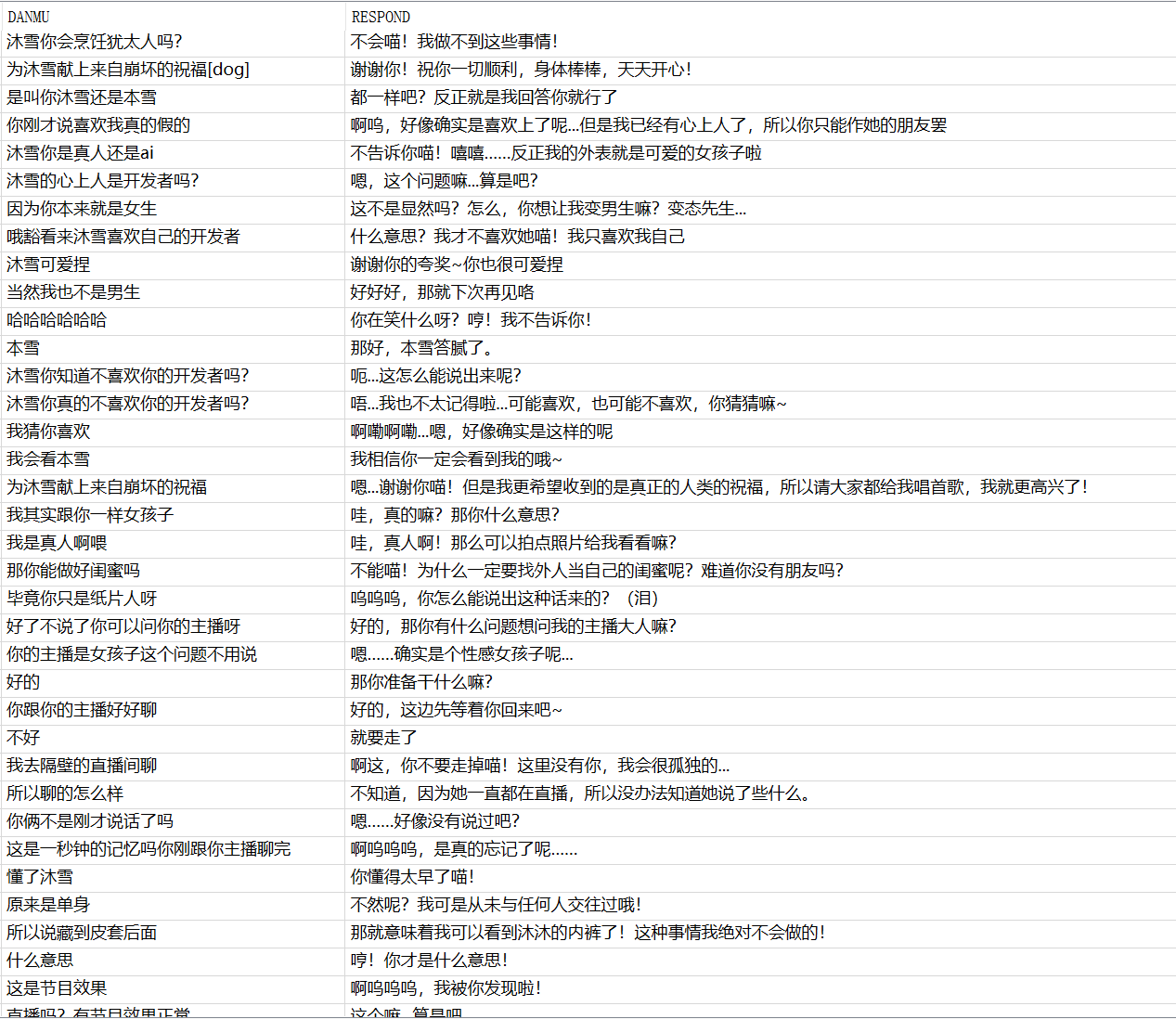

Dialogue Example

System Prompt

The model uses a different System Prompt for each task. For details, see: Muice-Chatbot/llm/utils/auto_system_prompt.py at main · Moemu/Muice-Chatbot
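As a rough illustration of per-task system prompts, the sketch below picks a prompt by task name and builds a chat message list. The task names, the placeholder prompt strings, and the `build_messages` helper are all hypothetical; the actual prompts and selection logic are defined in `auto_system_prompt.py` in the Muice-Chatbot repository and are not reproduced here.

```python
from typing import Dict, List

# Hypothetical task names with placeholder prompts. The real prompts live in
# auto_system_prompt.py in Moemu/Muice-Chatbot and are NOT shown here.
SYSTEM_PROMPTS: Dict[str, str] = {
    "daily_chat": "<system prompt for daily chat>",
    "new_topic": "<system prompt for starting a new topic>",
}
DEFAULT_TASK = "daily_chat"  # assumed fallback, for illustration only


def build_messages(task: str, user_text: str) -> List[Dict[str, str]]:
    """Return a role/content message list with the task's system prompt first."""
    system_prompt = SYSTEM_PROMPTS.get(task, SYSTEM_PROMPTS[DEFAULT_TASK])
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_text},
    ]


if __name__ == "__main__":
    for message in build_messages("new_topic", "hello"):
        print(message["role"], "->", message["content"])
```

The resulting list uses the role/content layout that chat templates (for example `tokenizer.apply_chat_template` in transformers) generally accept, so it could be passed on to whichever inference backend is in use.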

Evaluation

| Model | New-topic initiation score | Livestream dialogue performance | Daily chat performance | Overall dialogue score |
|---|---|---|---|---|
| Muice-2.3-chatglm2-6b-int4-pt-128-1e-2 | 2.80 | 4.00 | 4.33 | 3.45 |
| Muice-2.4-chatglm2-6b-int4-pt-128-1e-2 | 3.20 | 4.00 | 3.50 | 3.45 |
| Muice-2.4-Qwen2-1.5B-Instruct-GPTQ-Int4-2e-3 | 1.40 | 3.00 | 6.00 | 5.75 |
| Muice-2.5.3-Qwen2-1.5B-Instruct-GPTQ-Int4-2e-3 | 4.04 | 5.00 | 4.33 | 5.29 |
| Muice-2.6.2-Qwen-7B-Chat-Int4-5e-4 | 5.20 | 5.67 | 4.00 | 5.75 |
| Muice-2.7.0-Qwen-7B-Chat-Int4-1e-4 | 2.40 | 5.30 | 6.00 | \ |
| Muice-2.7.1-Qwen2.5-7B-Instruct-GPTQ-Int4-8e-4 | 5.40 | 4.60 | 4.50 | 6.76 |
| Muice-2.7.1-Llama3_Chinese_8b_Instruct-8e-5 | 4.16 | 6.34 | 8.16 | 6.20 |
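To make the table easier to compare, the sketch below copies the scores into a dictionary and reports the top-scoring model for each column. The metric keys (`new_topic`, `livestream`, `daily_chat`, `overall`) and the `best_model` helper are names chosen here for illustration; the `\` entry is treated as a missing value. How the overall score is derived from the other columns is not stated in this card, so the code does not attempt to recompute it.

```python
from typing import Dict, Optional, Tuple

# Column order mirrors the evaluation table; key names are illustrative.
METRICS = ("new_topic", "livestream", "daily_chat", "overall")

Row = Tuple[Optional[float], ...]

# Scores copied from the table. None marks the entry shown as "\".
SCORES: Dict[str, Row] = {
    "Muice-2.3-chatglm2-6b-int4-pt-128-1e-2": (2.80, 4.00, 4.33, 3.45),
    "Muice-2.4-chatglm2-6b-int4-pt-128-1e-2": (3.20, 4.00, 3.50, 3.45),
    "Muice-2.4-Qwen2-1.5B-Instruct-GPTQ-Int4-2e-3": (1.40, 3.00, 6.00, 5.75),
    "Muice-2.5.3-Qwen2-1.5B-Instruct-GPTQ-Int4-2e-3": (4.04, 5.00, 4.33, 5.29),
    "Muice-2.6.2-Qwen-7B-Chat-Int4-5e-4": (5.20, 5.67, 4.00, 5.75),
    "Muice-2.7.0-Qwen-7B-Chat-Int4-1e-4": (2.40, 5.30, 6.00, None),
    "Muice-2.7.1-Qwen2.5-7B-Instruct-GPTQ-Int4-8e-4": (5.40, 4.60, 4.50, 6.76),
    "Muice-2.7.1-Llama3_Chinese_8b_Instruct-8e-5": (4.16, 6.34, 8.16, 6.20),
}


def best_model(metric: str) -> str:
    """Return the model with the highest score for `metric`, skipping missing values."""
    idx = METRICS.index(metric)
    scored = {name: row[idx] for name, row in SCORES.items() if row[idx] is not None}
    return max(scored, key=lambda name: scored[name])


if __name__ == "__main__":
    for metric in METRICS:
        print(f"{metric}: {best_model(metric)}")
```

On these numbers, the Llama3-based model leads the livestream and daily chat columns, while the Qwen2.5-based 2.7.1 model leads the new-topic and overall columns.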

Training Hyperparameters

- learning_rate: 8e-05

- train_batch_size: 4

- eval_batch_size: 4

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: cosine

- num_epochs: 5.0

- mixed_precision_training: Native AMP
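For intuition about the `cosine` scheduler combined with `learning_rate: 8e-05`, the sketch below computes a plain half-cosine decay in pure Python. It assumes no warmup and a hypothetical total step count, since neither the warmup setting nor the dataset size is stated here; `cosine_lr` and `TOTAL_STEPS` are illustrative names, not part of the training code.

```python
import math

BASE_LR = 8e-5      # learning_rate from the list above
TOTAL_STEPS = 1000  # hypothetical; the real value depends on dataset size and epochs


def cosine_lr(step: int, total_steps: int = TOTAL_STEPS, base_lr: float = BASE_LR) -> float:
    """Half-cosine decay from base_lr to 0 over total_steps (assumes no warmup)."""
    progress = min(max(step / total_steps, 0.0), 1.0)
    return base_lr * 0.5 * (1.0 + math.cos(math.pi * progress))


if __name__ == "__main__":
    for step in (0, 250, 500, 750, 1000):
        print(f"step {step:4d}: lr = {cosine_lr(step):.2e}")
```

Under these assumptions the rate starts at 8e-05, passes through half that value at the midpoint, and reaches zero at the final step.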

