CRISPR-viva

CRISPR-viva is a universal, host cell context-aware guide RNA design framework for CRISPR-based RNA virus detection and inhibition, facilitated by a foundation model.

Model Details

Model Description

The foundation model module of CRISPR-viva is an LLM that uses the causal attention mechanism to mimic the DNA-RNA and RNA-RNA interactions that occur during CRISPR editing events, in which the guide RNA binds the host or viral DNA/RNA in a specific order determined by the type of CRISPR system. The foundation model is therefore designed and trained to learn representations of the sequence interactions between guide RNAs and cellular DNA/RNA as well as viral RNA, an approach expected to significantly improve performance on the downstream tasks of virus detection and inhibition.
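As a rough illustration of what "causal" means here, the sketch below computes attention weights in which each position can attend only to itself and to earlier positions. It is a generic, pure-Python example, not the CRISPR-viva architecture: the function name `causal_attention_weights` and the toy score matrix are assumptions made for illustration, and the actual model details are not given in this card.

```python
import math


def causal_attention_weights(scores):
    """Row-wise softmax over scores[i][j], restricted to j <= i.

    `scores` is a square list of lists of raw attention scores.
    Hypothetical helper for illustration only.
    """
    weights = []
    for i, row in enumerate(scores):
        visible = row[: i + 1]            # position i sees positions 0..i only
        peak = max(visible)               # subtract the max for numerical stability
        exps = [math.exp(s - peak) for s in visible]
        total = sum(exps)
        # Future positions (j > i) receive exactly zero weight.
        weights.append([e / total for e in exps] + [0.0] * (len(row) - i - 1))
    return weights


if __name__ == "__main__":
    toy_scores = [
        [0.0, 5.0, 5.0],
        [0.0, 0.0, 5.0],
        [1.0, 1.0, 1.0],
    ]
    for row in causal_attention_weights(toy_scores):
        print([round(w, 3) for w in row])
```

Large scores that sit above the diagonal have no effect on the result, which is the property that lets the model read a sequence in a fixed order.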

The foundation model is pretrained on CRISPRviva-3B, which contains candidate guide RNA sequences from the genomes and transcriptomes of 23 cell lines and from 26,464 segmented genomes of RNA viruses.

Uses

Downstream Use

- We apply the pretrained foundation model to multiple downstream tasks in virus detection and inhibition scenarios across 9 CRISPR systems: Cas12a, Cas12b, Cas12c, LwCas13a, LbuCas13a, Cas13b, Cas13d and Cas14 for the detection task, and LwCas13a, Cas13d and Cas7-11 for the inhibition task.

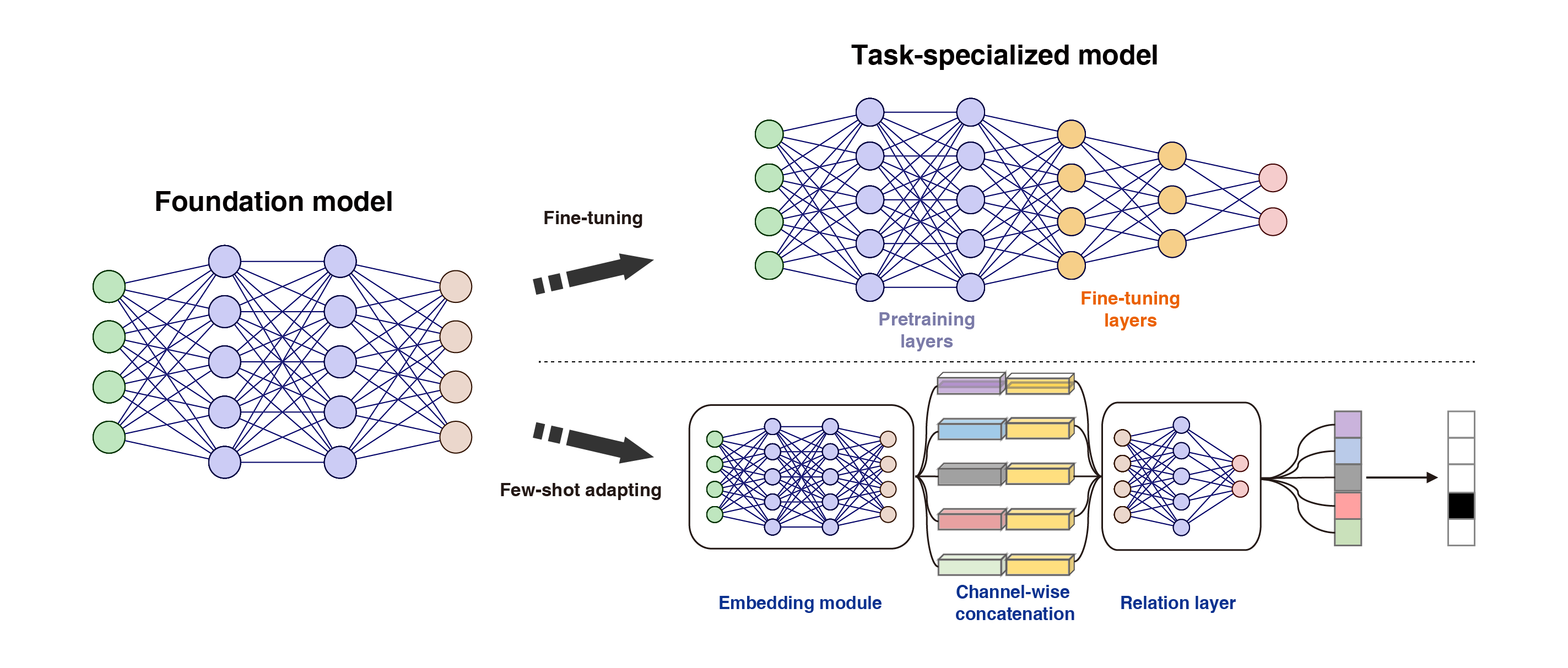

- Fine-tuning is applied in medium data volume scenarios, namely LwCas13a, Cas12a and Cas13d. Few-shot adaptation is applied to newly developed CRISPR systems, including Cas12b, Cas12c, LbuCas13a, Cas13b, Cas13c, Cas14 and Cas7-11, to achieve robust generalizability.
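The system-to-task and system-to-strategy assignments in the two bullets above can be written down as a small lookup table. This is only a sketch of one way to organize that information; the set names and the `describe` helper are hypothetical and are not part of the CRISPR-viva code.

```python
# Membership copied from the lists in the text above.
DETECTION = {"Cas12a", "Cas12b", "Cas12c", "LwCas13a",
             "LbuCas13a", "Cas13b", "Cas13d", "Cas14"}
INHIBITION = {"LwCas13a", "Cas13d", "Cas7-11"}

FINE_TUNED = {"LwCas13a", "Cas12a", "Cas13d"}
# Cas13c appears only in the few-shot list, so it maps to no task here.
FEW_SHOT = {"Cas12b", "Cas12c", "LbuCas13a", "Cas13b",
            "Cas13c", "Cas14", "Cas7-11"}


def describe(system):
    """Return the tasks and adaptation strategy listed for a CRISPR system."""
    tasks = [name for name, members in (("detection", DETECTION),
                                        ("inhibition", INHIBITION))
             if system in members]
    if system in FINE_TUNED:
        strategy = "fine-tuning"
    elif system in FEW_SHOT:
        strategy = "few-shot adaptation"
    else:
        raise KeyError(f"unknown CRISPR system: {system}")
    return {"system": system, "tasks": tasks, "strategy": strategy}


if __name__ == "__main__":
    for name in ("LwCas13a", "Cas14", "Cas7-11"):
        print(describe(name))
```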

Preprocessing

Sequence encoding

We modified the widely used attention block to construct our sequence attention module, which is the building block of the CRISPR-viva system. The input to the module is a sequence of nucleotide characters drawn from “A”, “C”, “G”, “T” and “N”, where “N” serves as both the mask token for the pretraining task and the padding token for the downstream tasks.
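A minimal sketch of this five-character encoding is shown below. The integer ids, the right-padding convention, and the helper names (`pad_sequence`, `mask_positions`, `encode`) are assumptions made for illustration; the text specifies only the alphabet and the dual role of "N".

```python
# Assumed id assignment; the text names the characters but not their indices.
VOCAB = {"A": 0, "C": 1, "G": 2, "T": 3, "N": 4}


def pad_sequence(seq, length):
    """Right-pad (or truncate) a sequence with 'N' to a fixed length."""
    return seq.upper()[:length].ljust(length, "N")


def mask_positions(seq, positions):
    """Replace the given positions with 'N', as a masked pretraining input might."""
    chars = list(seq.upper())
    for p in positions:
        chars[p] = "N"
    return "".join(chars)


def encode(seq):
    """Map nucleotide characters to integer ids; reject characters outside the alphabet."""
    try:
        return [VOCAB[ch] for ch in seq.upper()]
    except KeyError as err:
        raise ValueError(f"unsupported character {err.args[0]!r}") from None


if __name__ == "__main__":
    padded = pad_sequence("ACGT", 6)
    print(padded, encode(padded))
    print(mask_positions("ACGT", [1, 3]))
```

Under these assumptions, `encode(pad_sequence("ACGT", 6))` yields `[0, 1, 2, 3, 4, 4]`. Because masking and padding share one token, a model trained this way cannot tell a masked base from a padded position by the token alone.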

Compute Infrastructure

Hardware

- Four NVIDIA GeForce RTX 4090 GPUs to train the foundation model

- One NVIDIA GeForce RTX 4090 GPU to train each task-specialized model

Software

- pytorch >= 2.3

- dask == 2024.5.0

- transformers == 4.29.2

- datasets == 2.19.1