| proof of concept of a failed project | |

| a notebook for producing your own "stable inversions" is included in this repo but I wouldn't recommend doing so (they suck). It works on Colab free tier though. | |

| [link to notebook for you to download](https://huggingface.co/crumb/genshin-stable-inversion/blob/main/stable_inversion%20(1).ipynb) | |

| how you can load this into a diffusers-based notebook like [Doohickey](https://github.com/aicrumb/doohickey) might look something like this | |

| ``` | |

| from huggingface_hub import hf_hub_download | |

| stable_inversion = "user/my-stable-inversion" #@param {type:"string"} | |

| if len(stable_inversion)>1: | |

| g = hf_hub_download(repo_id=stable_inversion, filename="token_embeddings.pt") | |

| text_encoder.text_model.embeddings.token_embedding.weight = torch.load(g) | |

| ``` | |

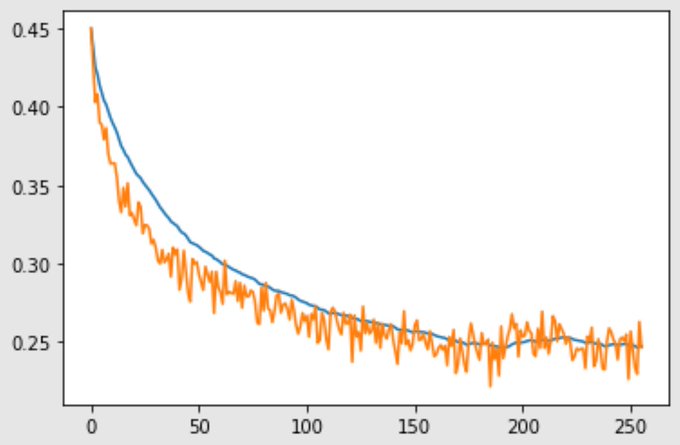

| it was trained on 1024 images matching the 'genshin_impact' tag on safebooru, epochs 1 and 2 had the model being fed the full captions, epoch 3 had 50% of the tags in the caption, and epoch 4 had 25% of the tags in the caption. Learning rate was 1e-3 and the loss curve looked like this  | |

| Samples from this finetuned inversion for the prompt "beidou_(genshin_impact)" | |

|  | |

|  | |

|  |