weblab-10b-instruction-sft-GPTQ

The original model, weblab-10b-instruction-sft, is a Japanese-centric multilingual GPT-NeoX model with 10 billion parameters, created by Takeshi Kojima of matsuo-lab.

This model is a quantized (compressed) version of the original model (21.42 GB).

There are currently two well-known quantized versions of the original model.

(1) GPTQ version (this model, 6.3 GB)

It is smaller and runs faster, but its inference quality may be slightly worse than that of the original model.
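As a rough sanity check on the sizes above, the sketch below computes how much smaller the GPTQ files are and the average bits per weight that the size ratio implies. It assumes the 21.42 GB original checkpoint stores every weight in a 16-bit format (fp16/bf16); this is back-of-the-envelope arithmetic, not a description of the GPTQ file layout.

```python
# Back-of-the-envelope size arithmetic for the GPTQ files.
# Assumption: the 21.42 GB original checkpoint stores every weight in 16 bits (fp16/bf16).

ORIGINAL_SIZE_GB = 21.42  # weblab-10b-instruction-sft
GPTQ_SIZE_GB = 6.3        # this model
ASSUMED_ORIGINAL_BITS = 16


def compression_ratio(quantized_gb: float, original_gb: float = ORIGINAL_SIZE_GB) -> float:
    """How many times smaller the quantized files are than the original."""
    return original_gb / quantized_gb


def effective_bits_per_weight(quantized_gb: float,
                              original_gb: float = ORIGINAL_SIZE_GB,
                              original_bits: int = ASSUMED_ORIGINAL_BITS) -> float:
    """Average bits per weight implied by the size ratio (all overhead included)."""
    return original_bits * quantized_gb / original_gb


if __name__ == "__main__":
    print(f"{compression_ratio(GPTQ_SIZE_GB):.2f}x smaller")
    print(f"~{effective_bits_per_weight(GPTQ_SIZE_GB):.2f} bits per weight")
```

This works out to about 3.4x smaller and roughly 4.7 bits per weight. That is above the nominal 4 bits in the weight file name `gptq_model-4bit-128g`; per-group quantization parameters (group size 128) and any layers left unquantized are a plausible explanation, but that is an inference, not something stated here.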

At least one GPU is currently required because of a limitation of the Accelerate library.

This means the model cannot be run on the free tier of Hugging Face Spaces.

You need the AutoGPTQ library to use this model.

(2) llama.cpp version (gguf) (matsuolab-weblab-10b-instruction-sft-gguf, 6.03 GB)

Created by mmnga.

You can run the gguf model with llama.cpp on a CPU-only machine.

However, the gguf model may be somewhat slower than the GPTQ version, especially for long texts.

How to run

Local PC

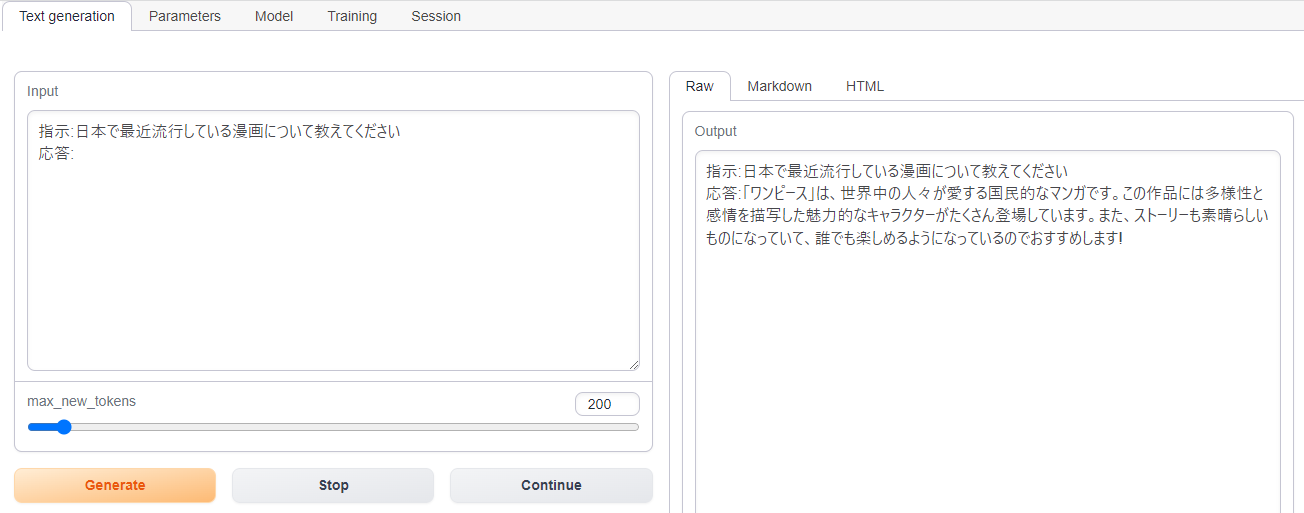

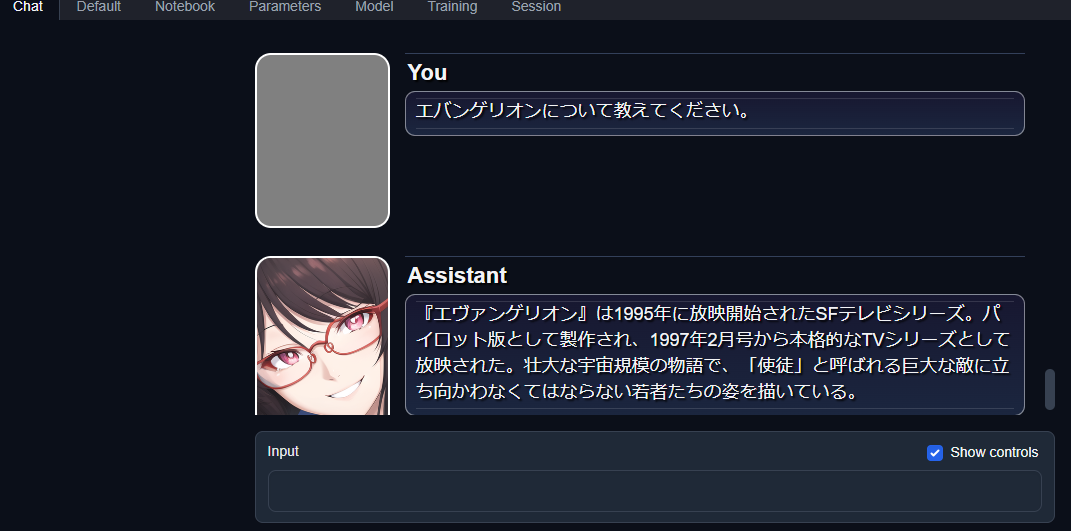

You can use text-generation-webui to run this model quickly on your local PC (about 16 tokens/s on my RTX 3060).

An explanation (in Japanese) of how to install text-generation-webui is here.
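To check a throughput figure like the 16 tokens/s above on your own hardware, here is a minimal timing sketch. `tokens_per_second` and `estimated_seconds` are hypothetical helpers, not part of text-generation-webui or AutoGPTQ; they only wrap `time.perf_counter` around whatever generation call you pass in.

```python
import time
from typing import Callable, Sequence


def tokens_per_second(generate: Callable[[], Sequence[int]], prompt_len: int = 0) -> float:
    """Time one call to `generate` and return newly generated tokens per second.

    `generate` is any zero-argument callable returning the full output token ids
    (prompt + generated). The first `prompt_len` ids are not counted.
    """
    start = time.perf_counter()
    output_ids = generate()
    elapsed = time.perf_counter() - start
    if elapsed <= 0:
        raise ValueError("generation finished too quickly to time")
    return (len(output_ids) - prompt_len) / elapsed


def estimated_seconds(new_tokens: int, rate: float) -> float:
    """Wall-clock time to generate `new_tokens` at `rate` tokens per second."""
    return new_tokens / rate


if __name__ == "__main__":
    # Stand-in for a real model call: 10 output ids, 2 of which are the prompt.
    def fake_generate():
        time.sleep(0.05)
        return list(range(10))

    print(f"{tokens_per_second(fake_generate, prompt_len=2):.1f} tokens/s (fake model)")
    print(f"100 tokens at 16 tokens/s: {estimated_seconds(100, 16.0):.2f} s")
```

With the Colab sample script, a call could look like `tokens_per_second(lambda: model.generate(input_ids=tokens, max_new_tokens=100)[0].tolist(), prompt_len=tokens.shape[1])`. The first call includes warm-up, and results depend on the GPU, prompt length, and generation settings.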

Colab with GUI

You can try this model interactively in the free version of Colab.

weblab-10b-instruction-sft-GPTQ-text-generation-webui-colab

Colab: simple sample code

Currently, the model may behave differently on a local PC and on Colab. On Colab, the model may not respond if your prompt includes instructions.

Colab sample script

If you get an error when running the script below (for example, something is not found or not defined), please refer to the official documentation and Colab samples and pin a specific library version.
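Before pinning versions, it helps to know what is currently installed. This sketch uses only the standard library's `importlib.metadata`; the package list is an assumption covering what the sample script imports (`auto-gptq`, `transformers`, `torch`) plus `accelerate`, which is mentioned above.

```python
from importlib.metadata import PackageNotFoundError, version

# PyPI distribution names (assumed list). Recent Python versions normalize
# "-" vs "_"; on older ones you may need the underscore spelling (auto_gptq).
PACKAGES = ["auto-gptq", "transformers", "torch", "accelerate"]


def installed_versions(packages=PACKAGES):
    """Return {package: installed version string, or None if not installed}."""
    result = {}
    for name in packages:
        try:
            result[name] = version(name)
        except PackageNotFoundError:
            result[name] = None
    return result


if __name__ == "__main__":
    for name, ver in installed_versions().items():
        print(f"{name}: {ver or 'not installed'}")
```

Once you find a combination that works, you can pin it, e.g. `pip install auto-gptq==<version>`, where `<version>` is whatever worked for you.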

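    pip install auto-gptq

    import torch
    from transformers import AutoTokenizer
    from auto_gptq import AutoGPTQForCausalLM

    # Hugging Face repo of this model and the base name of its 4-bit, group-size-128 weight file
    quantized_model_dir = "dahara1/weblab-10b-instruction-sft-GPTQ"
    model_basename = "gptq_model-4bit-128g"

    tokenizer = AutoTokenizer.from_pretrained(quantized_model_dir)

    # Load the quantized weights onto the first GPU (a GPU is required)
    model = AutoGPTQForCausalLM.from_quantized(
        quantized_model_dir,
        model_basename=model_basename,
        use_safetensors=True,
        device="cuda:0")

    # "Please tell me five Studio Ghibli works"
    prompt_text = "スタジオジブリの作品を5つ教えてください"

    # Instruction template: "Below is an instruction that describes a task. Write a response
    # that appropriately fulfills the request." followed by "### Instruction:" / "### Response:"
    prompt_template = f'以下は、タスクを説明する指示です。要求を適切に満たす応答を書きなさい。\n\n### 指示:\n{prompt_text}\n\n### 応答:'

    tokens = tokenizer(prompt_template, return_tensors="pt").to("cuda:0").input_ids

    # Sample up to 100 new tokens
    output = model.generate(input_ids=tokens, max_new_tokens=100, do_sample=True, temperature=0.8)

    print(tokenizer.decode(output[0]))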
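The decoded output of the sample script contains the whole prompt as well as the answer. A minimal sketch of two hypothetical helpers: one builds the same instruction template as the script, and one keeps only the text after `### 応答:` ("Response"), cutting off any further `###` section the model may start on its own. Stopping at the next `###` heading is an assumption about typical output, not documented model behavior.

```python
# Hypothetical helpers around the instruction template used in the sample script.
# Header (Japanese): "Below is an instruction that describes a task.
# Write a response that appropriately fulfills the request."
TEMPLATE_HEADER = "以下は、タスクを説明する指示です。要求を適切に満たす応答を書きなさい。"
RESPONSE_MARKER = "### 応答:"


def build_prompt(instruction: str) -> str:
    """Wrap a user instruction in the same template as the sample script."""
    return f"{TEMPLATE_HEADER}\n\n### 指示:\n{instruction}\n\n{RESPONSE_MARKER}"


def extract_response(decoded: str) -> str:
    """Return only the model's answer from prompt + generated text."""
    _, sep, response = decoded.partition(RESPONSE_MARKER)
    if not sep:  # marker missing: fall back to the whole text
        response = decoded
    # Assumption: drop anything after a new "### ..." section the model may invent.
    response = response.split("\n### ")[0]
    return response.strip()


if __name__ == "__main__":
    prompt = build_prompt("スタジオジブリの作品を5つ教えてください")
    print(extract_response(prompt + " となりのトトロ\n\n### 指示:\n..."))
```

With the real model, you could call `extract_response(tokenizer.decode(output[0], skip_special_tokens=True))`; `skip_special_tokens=True` keeps end-of-text tokens out of the answer.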

How to create fine-tuning data (LoRA)

There is a LoRA fine-tuning code sample in the finetune_sample directory.

Please read README.md there.
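The README in finetune_sample is the authority on the data format it expects. Purely as an illustration, this sketch turns (instruction, response) pairs into JSON Lines records that reuse the instruction template from the Colab sample script. The single `text` field and the newline after `### 応答:` are assumptions, not necessarily the format the sample reads.

```python
import json
from typing import Iterable, List, Tuple

# Same instruction template as the Colab sample; the "\n" before the response
# is an assumed separator.
TEMPLATE = ("以下は、タスクを説明する指示です。要求を適切に満たす応答を書きなさい。"
            "\n\n### 指示:\n{instruction}\n\n### 応答:\n{response}")


def to_jsonl_lines(pairs: Iterable[Tuple[str, str]]) -> List[str]:
    """Turn (instruction, response) pairs into JSON Lines strings.

    Each line is {"text": <filled template>}; the "text" key is hypothetical.
    ensure_ascii=False keeps Japanese characters readable in the file.
    """
    lines = []
    for instruction, response in pairs:
        record = {"text": TEMPLATE.format(instruction=instruction, response=response)}
        lines.append(json.dumps(record, ensure_ascii=False))
    return lines


if __name__ == "__main__":
    examples = [("スタジオジブリの作品を1つ教えてください", "となりのトトロ")]
    for line in to_jsonl_lines(examples):
        print(line)
```

To produce a training file, write the returned lines to a UTF-8 file, one per line.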

Other AutoGPTQ documents

https://github.com/PanQiWei/AutoGPTQ/blob/main/docs/tutorial/01-Quick-Start.md

Benchmark

The results below are preliminary. Blank entries are still being measured.

Also, the scores may change with further tuning.

Japanese benchmark

- We used Stability-AI/lm-evaluation-harness with gakada's AutoGPTQ PR for evaluation.

- The 4-task average accuracy is based on the results of JCommonsenseQA-1.1, JNLI-1.1, MARC-ja-1.1, and JSQuAD-1.1.

- The model is loaded with gptq_use_triton=True, and evaluation uses prompt template version 0.3 with few-shot in-context learning.

- The numbers of few-shot examples are 3, 3, 3, and 2, respectively, for the four tasks in the order listed above.

| Model | Average | JCommonsenseQA | JNLI | MARC-ja | JSQuAD | Note |
|---|---|---|---|---|---|---|
| weblab-10b | 66.38 | 65.86 | 54.19 | 84.49 | 60.98 | original model |
| weblab-10b-instruction-sft | 78.78 | 74.35 | 65.65 | 96.06 | 79.04 | original instruction model |
| weblab-10b-instruction-sft-GPTQ first tuning | 69.72 | 74.53 | 41.70 | 89.95 | 72.69 | deleted |
| weblab-10b-instruction-sft-GPTQ second tuning | 74.59 | 74.08 | 60.72 | 91.85 | 71.70 | deleted |
| weblab-10b-instruction-sft-GPTQ third tuning | 77.62 | 73.19 | 69.26 | 95.91 | 72.10 | current model, replaced on August 26th |
| weblab-10b-instruction-sft-GPTQ fourth tuning | - | - | 14.5 | 85.46 | - | abandoned |
| weblab-10b-instruction-sft-GPTQ fifth tuning | - | 19.30 | - | - | - | abandoned |
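The "Average" column is the plain mean of the four task scores. This sketch recomputes it from the per-task numbers in the table (rows with missing scores are left out) and expresses the current GPTQ model's average relative to the original instruction model; the dictionary keys are shortened labels chosen for this example.

```python
# Per-task scores from the benchmark table: (JCommonsenseQA, JNLI, MARC-ja, JSQuAD).
SCORES = {
    "weblab-10b": (65.86, 54.19, 84.49, 60.98),
    "weblab-10b-instruction-sft": (74.35, 65.65, 96.06, 79.04),
    "GPTQ first tuning": (74.53, 41.70, 89.95, 72.69),
    "GPTQ second tuning": (74.08, 60.72, 91.85, 71.70),
    "GPTQ third tuning": (73.19, 69.26, 95.91, 72.10),
}

# "Average" column as reported in the table (rounded to two decimals).
REPORTED_AVERAGE = {
    "weblab-10b": 66.38,
    "weblab-10b-instruction-sft": 78.78,
    "GPTQ first tuning": 69.72,
    "GPTQ second tuning": 74.59,
    "GPTQ third tuning": 77.62,
}


def four_task_average(scores):
    """Unweighted mean of the four task accuracies."""
    return sum(scores) / len(scores)


def relative_to_original(model, baseline="weblab-10b-instruction-sft"):
    """Average of `model` as a fraction of the baseline's average."""
    return four_task_average(SCORES[model]) / four_task_average(SCORES[baseline])


if __name__ == "__main__":
    for name, scores in SCORES.items():
        print(f"{name}: {four_task_average(scores):.3f} (reported {REPORTED_AVERAGE[name]})")
    print(f"third tuning vs. original: {relative_to_original('GPTQ third tuning'):.1%}")
```

Each recomputed mean agrees with the reported average to within rounding, and the current (third tuning) GPTQ model reaches about 98.5% of the original instruction model's 4-task average.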

About this work

- This quantization work was done by webbigdata.

- Related documentation, such as a fine-tuning guide in Japanese, is here.
