| url (string) | repository_url (string) | labels_url (string) | comments_url (string) | events_url (string) | html_url (string) | id (int64) | node_id (string) | number (int64) | title (string) | user (dict) | labels (list) | state (string) | locked (bool) | assignee (dict) | assignees (list) | milestone (dict) | comments (sequence) | created_at (timestamp[s]) | updated_at (timestamp[s]) | closed_at (timestamp[s]) | author_association (string) | active_lock_reason (null) | body (string) | reactions (dict) | timeline_url (string) | performed_via_github_app (null) | state_reason (string) | draft (bool) | pull_request (dict) | is_pull_request (bool) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

https://api.github.com/repos/huggingface/datasets/issues/5609 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/5609/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/5609/comments | https://api.github.com/repos/huggingface/datasets/issues/5609/events | https://github.com/huggingface/datasets/issues/5609 | 1,610,062,862 | I_kwDODunzps5f95wO | 5,609 | `load_from_disk` vs `load_dataset` performance. | {
"login": "davidgilbertson", "id": 4443482, "node_id": "MDQ6VXNlcjQ0NDM0ODI=", "avatar_url": "https://avatars.githubusercontent.com/u/4443482?v=4", "gravatar_id": "", "url": "https://api.github.com/users/davidgilbertson", "html_url": "https://github.com/davidgilbertson", "followers_url": "https://api.github.com/users/davidgilbertson/followers", "following_url": "https://api.github.com/users/davidgilbertson/following{/other_user}", "gists_url": "https://api.github.com/users/davidgilbertson/gists{/gist_id}", "starred_url": "https://api.github.com/users/davidgilbertson/starred{/owner}{/repo}", "subscriptions_url": "https://api.github.com/users/davidgilbertson/subscriptions", "organizations_url": "https://api.github.com/users/davidgilbertson/orgs", "repos_url": "https://api.github.com/users/davidgilbertson/repos", "events_url": "https://api.github.com/users/davidgilbertson/events{/privacy}", "received_events_url": "https://api.github.com/users/davidgilbertson/received_events", "type": "User", "site_admin": false
} | [] | open | false | null | [] | null | [] | 2023-03-05T05:27:15 | 2023-03-05T05:27:15 | null | NONE | null | ### Describe the bug

I have downloaded `openwebtext` (~12GB) and filtered out a small amount of junk (it's still huge). Now, I would like to use this filtered version for future work. It seems I have two choices:

1. Use `load_dataset` each time, relying on the cache mechanism, and re-run my filtering.

2. `save_to_disk` and then use `load_from_disk` to load the filtered version.

The performance of these two approaches is wildly different:

* Using `load_dataset` takes about 20 seconds to load the dataset, and a few seconds to re-filter (thanks to the brilliant filter/map caching)

* Using `load_from_disk` takes 14 minutes! And the second time I tried, the session just crashed (on a machine with 32GB of RAM)
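The fast re-filter in the first bullet relies on `datasets` caching `filter`/`map` results under a fingerprint derived from the parent dataset's fingerprint and the transform. The stdlib-only sketch below is a rough illustration of that idea, not the library's code. It keys an in-memory cache on a hash of the parent fingerprint plus the predicate's bytecode. `CACHE`, `fingerprint` and `cached_filter` are hypothetical names. The real library writes cache files to disk and hashes transforms differently.

```python
import hashlib

# Hypothetical stand-in for the on-disk cache directory: fingerprint -> result.
CACHE = {}


def fingerprint(parent_fp, func):
    """Derive a child fingerprint from the parent fingerprint and the function's bytecode."""
    code = func.__code__
    h = hashlib.sha256()
    h.update(parent_fp.encode())
    h.update(code.co_code)  # the compiled instructions
    h.update(repr(code.co_consts).encode())  # literals such as strings or thresholds
    h.update(repr(code.co_names).encode())  # global names such as len
    return h.hexdigest()[:16]


def cached_filter(rows, parent_fp, predicate):
    """Filter rows, reusing a cached result when the same transform was applied before."""
    fp = fingerprint(parent_fp, predicate)
    if fp not in CACHE:
        # Cache miss: do the work once and store it under the new fingerprint.
        CACHE[fp] = [row for row in rows if predicate(row)]
    return CACHE[fp], fp
```

If you call `cached_filter` twice with the same parent fingerprint and an identical predicate, the second call returns the stored list instead of recomputing it. Changing either input produces a new fingerprint. This sketch ignores closure variables and default arguments.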

I don't know whether you'd call this a bug. Still, it seems that either there shouldn't be two methods for loading from disk, or they shouldn't take such wildly different amounts of time, or at least one of them shouldn't crash. Alternatively, the docs could offer guidance on when to pick which method, why two methods exist, or simply how most people do it.

Something I couldn't work out from the docs: can I modify a dataset from the Hub, save it locally, and then load it with `load_dataset`? This [post seemed to suggest that the answer is no](https://discuss.huggingface.co/t/save-and-load-datasets/9260).

### Steps to reproduce the bug

See above

### Expected behavior

Load times should be about the same.

### Environment info

- `datasets` version: 2.9.0

- Platform: Linux-5.10.102.1-microsoft-standard-WSL2-x86_64-with-glibc2.31

- Python version: 3.10.8

- PyArrow version: 11.0.0

- Pandas version: 1.5.3 | {
"url": "https://api.github.com/repos/huggingface/datasets/issues/5609/reactions", "total_count": 0, "+1": 0, "-1": 0, "laugh": 0, "hooray": 0, "confused": 0, "heart": 0, "rocket": 0, "eyes": 0
} | https://api.github.com/repos/huggingface/datasets/issues/5609/timeline | null | null | null | null | false |

https://api.github.com/repos/huggingface/datasets/issues/5608 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/5608/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/5608/comments | https://api.github.com/repos/huggingface/datasets/issues/5608/events | https://github.com/huggingface/datasets/issues/5608 | 1,609,996,563 | I_kwDODunzps5f9pkT | 5,608 | audiofolder only creates dataset of 13 rows (files) when the data folder it's reading from has 20,000 mp3 files. | {
"login": "jcho19", "id": 107211437, "node_id": "U_kgDOBmPqrQ", "avatar_url": "https://avatars.githubusercontent.com/u/107211437?v=4", "gravatar_id": "", "url": "https://api.github.com/users/jcho19", "html_url": "https://github.com/jcho19", "followers_url": "https://api.github.com/users/jcho19/followers", "following_url": "https://api.github.com/users/jcho19/following{/other_user}", "gists_url": "https://api.github.com/users/jcho19/gists{/gist_id}", "starred_url": "https://api.github.com/users/jcho19/starred{/owner}{/repo}", "subscriptions_url": "https://api.github.com/users/jcho19/subscriptions", "organizations_url": "https://api.github.com/users/jcho19/orgs", "repos_url": "https://api.github.com/users/jcho19/repos", "events_url": "https://api.github.com/users/jcho19/events{/privacy}", "received_events_url": "https://api.github.com/users/jcho19/received_events", "type": "User", "site_admin": false
} | [] | open | false | null | [] | null | [] | 2023-03-05T00:14:45 | 2023-03-05T00:14:45 | null | NONE | null | ### Describe the bug

`x = load_dataset("audiofolder", data_dir="x")`

When I run this, `x` is a dataset of 13 rows (files). It should have 20,000 rows, because the `data_dir` "x" contains 20,000 MP3 files. Does anyone know what could cause this (the naming convention of the MP3 files, etc.)?
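One quick way to test the naming hypothesis is to count what is actually in `data_dir` before calling `load_dataset`. The stdlib sketch below tallies files by their exact extension, recursively. It also flags extensions that differ only in case, such as `.MP3` vs `.mp3`. `summarize_audio_dir` is a hypothetical helper. Whether `audiofolder` treats such files differently is an assumption to verify; this report does not establish it.

```python
from collections import Counter
from pathlib import Path


def summarize_audio_dir(data_dir):
    """Count files under data_dir by exact suffix, recursing into subfolders."""
    counts = Counter(
        path.suffix for path in Path(data_dir).rglob("*") if path.is_file()
    )
    # Group suffixes that differ only by case, e.g. ".mp3" and ".MP3".
    by_lower = {}
    for suffix, n in counts.items():
        by_lower.setdefault(suffix.lower(), {})[suffix] = n
    mixed_case = {key: group for key, group in by_lower.items() if len(group) > 1}
    return counts, mixed_case
```

For example, `counts, mixed = summarize_audio_dir("x")` shows how many `.mp3` files exist and whether any use a different capitalization. You can compare that total with the 13 rows returned.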

### Steps to reproduce the bug

After `from datasets import load_dataset`, run `x = load_dataset("audiofolder", data_dir="x")` on a `data_dir` containing 20,000 MP3 files.

### Expected behavior

`x = load_dataset("audiofolder", data_dir="x")` should create a dataset of 20,000 rows, one per file.

### Environment info

- `datasets` version: 2.9.0

- Platform: Linux-3.10.0-1160.80.1.el7.x86_64-x86_64-with-glibc2.17

- Python version: 3.9.16

- PyArrow version: 11.0.0

- Pandas version: 1.5.3 | {
"url": "https://api.github.com/repos/huggingface/datasets/issues/5608/reactions", "total_count": 0, "+1": 0, "-1": 0, "laugh": 0, "hooray": 0, "confused": 0, "heart": 0, "rocket": 0, "eyes": 0
} | https://api.github.com/repos/huggingface/datasets/issues/5608/timeline | null | null | null | null | false |

https://api.github.com/repos/huggingface/datasets/issues/5607 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/5607/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/5607/comments | https://api.github.com/repos/huggingface/datasets/issues/5607/events | https://github.com/huggingface/datasets/pull/5607 | 1,609,166,035 | PR_kwDODunzps5LQPbG | 5,607 | Don't save dataset info to cache dir when skipping verifications | {
"login": "polinaeterna", "id": 16348744, "node_id": "MDQ6VXNlcjE2MzQ4NzQ0", "avatar_url": "https://avatars.githubusercontent.com/u/16348744?v=4", "gravatar_id": "", "url": "https://api.github.com/users/polinaeterna", "html_url": "https://github.com/polinaeterna", "followers_url": "https://api.github.com/users/polinaeterna/followers", "following_url": "https://api.github.com/users/polinaeterna/following{/other_user}", "gists_url": "https://api.github.com/users/polinaeterna/gists{/gist_id}", "starred_url": "https://api.github.com/users/polinaeterna/starred{/owner}{/repo}", "subscriptions_url": "https://api.github.com/users/polinaeterna/subscriptions", "organizations_url": "https://api.github.com/users/polinaeterna/orgs", "repos_url": "https://api.github.com/users/polinaeterna/repos", "events_url": "https://api.github.com/users/polinaeterna/events{/privacy}", "received_events_url": "https://api.github.com/users/polinaeterna/received_events", "type": "User", "site_admin": false
} | [] | open | false | null | [] | null | [
"The docs for this PR live [here](https://moon-ci-docs.huggingface.co/docs/datasets/pr_5607). All of your documentation changes will be reflected on that endpoint."
] | 2023-03-03T19:50:29 | 2023-03-03T20:13:36 | null | CONTRIBUTOR | null | I think it makes sense not to save the `dataset_info.json` file to the dataset cache directory when loading a dataset with `verification_mode="no_checks"`. Otherwise, the next time the dataset is loaded **without** `verification_mode="no_checks"`, it will load successfully even though some values in the info might not match the ones in the repo. That mismatch was the reason for using `verification_mode="no_checks"` in the first place. | {
"url": "https://api.github.com/repos/huggingface/datasets/issues/5607/reactions", "total_count": 0, "+1": 0, "-1": 0, "laugh": 0, "hooray": 0, "confused": 0, "heart": 0, "rocket": 0, "eyes": 0
} | https://api.github.com/repos/huggingface/datasets/issues/5607/timeline | null | null | false | {
"url": "https://api.github.com/repos/huggingface/datasets/pulls/5607", "html_url": "https://github.com/huggingface/datasets/pull/5607", "diff_url": "https://github.com/huggingface/datasets/pull/5607.diff", "patch_url": "https://github.com/huggingface/datasets/pull/5607.patch", "merged_at": null
} | true |

https://api.github.com/repos/huggingface/datasets/issues/5606 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/5606/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/5606/comments | https://api.github.com/repos/huggingface/datasets/issues/5606/events | https://github.com/huggingface/datasets/issues/5606 | 1,608,911,632 | I_kwDODunzps5f5gsQ | 5,606 | Add `Dataset.to_list` to the API | {
"login": "mariosasko", "id": 47462742, "node_id": "MDQ6VXNlcjQ3NDYyNzQy", "avatar_url": "https://avatars.githubusercontent.com/u/47462742?v=4", "gravatar_id": "", "url": "https://api.github.com/users/mariosasko", "html_url": "https://github.com/mariosasko", "followers_url": "https://api.github.com/users/mariosasko/followers", "following_url": "https://api.github.com/users/mariosasko/following{/other_user}", "gists_url": "https://api.github.com/users/mariosasko/gists{/gist_id}", "starred_url": "https://api.github.com/users/mariosasko/starred{/owner}{/repo}", "subscriptions_url": "https://api.github.com/users/mariosasko/subscriptions", "organizations_url": "https://api.github.com/users/mariosasko/orgs", "repos_url": "https://api.github.com/users/mariosasko/repos", "events_url": "https://api.github.com/users/mariosasko/events{/privacy}", "received_events_url": "https://api.github.com/users/mariosasko/received_events", "type": "User", "site_admin": false
} | [
{"id": 1935892871, "node_id": "MDU6TGFiZWwxOTM1ODkyODcx", "url": "https://api.github.com/repos/huggingface/datasets/labels/enhancement", "name": "enhancement", "color": "a2eeef", "default": true, "description": "New feature or request"}, {"id": 1935892877, "node_id": "MDU6TGFiZWwxOTM1ODkyODc3", "url": "https://api.github.com/repos/huggingface/datasets/labels/good%20first%20issue", "name": "good first issue", "color": "7057ff", "default": true, "description": "Good for newcomers"}
] | open | false | null | [] | null | [
"Hello, I have an interest in this issue.\r\nIs the `Dataset.to_dict` you are describing correct in the code here?\r\n\r\nhttps://github.com/huggingface/datasets/blob/35b789e8f6826b6b5a6b48fcc2416c890a1f326a/src/datasets/arrow_dataset.py#L4633-L4667"
] | 2023-03-03T16:17:10 | 2023-03-04T06:27:08 | null | CONTRIBUTOR | null | Since there is `Dataset.from_list` in the API, we should also add `Dataset.to_list` to be consistent.
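`Dataset.to_dict` returns column-oriented data, with one list per column. The proposed `Dataset.to_list` would return row-oriented data, with one dict per row, mirroring PyArrow's `Table.to_pydict` and `Table.to_pylist`. The stdlib sketch below only illustrates that shape change. `columns_to_rows` is a hypothetical helper, not the proposed implementation; per the issue, that implementation would call `to_pylist` directly.

```python
def columns_to_rows(columns):
    """Turn {"col": [v0, v1, ...]} into [{"col": v0}, {"col": v1}, ...]."""
    lengths = {len(values) for values in columns.values()}
    if len(lengths) > 1:
        raise ValueError("all columns must have the same length")
    n_rows = lengths.pop() if lengths else 0
    # Row i takes the i-th value from every column, keeping column order.
    return [{name: values[i] for name, values in columns.items()} for i in range(n_rows)]
```

For example, `columns_to_rows({"text": ["a", "b"], "label": [0, 1]})` gives `[{"text": "a", "label": 0}, {"text": "b", "label": 1}]`.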

Regarding the implementation, we can re-use `Dataset.to_dict`'s code and replace the `to_pydict` calls with `to_pylist`. | {
"url": "https://api.github.com/repos/huggingface/datasets/issues/5606/reactions", "total_count": 0, "+1": 0, "-1": 0, "laugh": 0, "hooray": 0, "confused": 0, "heart": 0, "rocket": 0, "eyes": 0
} | https://api.github.com/repos/huggingface/datasets/issues/5606/timeline | null | null | null | null | false |

https://api.github.com/repos/huggingface/datasets/issues/5605 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/5605/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/5605/comments | https://api.github.com/repos/huggingface/datasets/issues/5605/events | https://github.com/huggingface/datasets/pull/5605 | 1,608,865,460 | PR_kwDODunzps5LPPf5 | 5,605 | Update README logo | {
"login": "gary149", "id": 3841370, "node_id": "MDQ6VXNlcjM4NDEzNzA=", "avatar_url": "https://avatars.githubusercontent.com/u/3841370?v=4", "gravatar_id": "", "url": "https://api.github.com/users/gary149", "html_url": "https://github.com/gary149", "followers_url": "https://api.github.com/users/gary149/followers", "following_url": "https://api.github.com/users/gary149/following{/other_user}", "gists_url": "https://api.github.com/users/gary149/gists{/gist_id}", "starred_url": "https://api.github.com/users/gary149/starred{/owner}{/repo}", "subscriptions_url": "https://api.github.com/users/gary149/subscriptions", "organizations_url": "https://api.github.com/users/gary149/orgs", "repos_url": "https://api.github.com/users/gary149/repos", "events_url": "https://api.github.com/users/gary149/events{/privacy}", "received_events_url": "https://api.github.com/users/gary149/received_events", "type": "User", "site_admin": false
} | [] | closed | false | null | [] | null | [

"_The documentation is not available anymore as the PR was closed or merged._",

"Are you sure it's safe to remove? https://github.com/huggingface/datasets/pull/3866",

"<details>\n<summary>Show benchmarks</summary>\n\nPyArrow==6.0.0\n\n<details>\n<summary>Show updated benchmarks!</summary>\n\n### Benchmark: benchmark_array_xd.json\n\n| metric | read_batch_formatted_as_numpy after write_array2d | read_batch_formatted_as_numpy after write_flattened_sequence | read_batch_formatted_as_numpy after write_nested_sequence | read_batch_unformated after write_array2d | read_batch_unformated after write_flattened_sequence | read_batch_unformated after write_nested_sequence | read_col_formatted_as_numpy after write_array2d | read_col_formatted_as_numpy after write_flattened_sequence | read_col_formatted_as_numpy after write_nested_sequence | read_col_unformated after write_array2d | read_col_unformated after write_flattened_sequence | read_col_unformated after write_nested_sequence | read_formatted_as_numpy after write_array2d | read_formatted_as_numpy after write_flattened_sequence | read_formatted_as_numpy after write_nested_sequence | read_unformated after write_array2d | read_unformated after write_flattened_sequence | read_unformated after write_nested_sequence | write_array2d | write_flattened_sequence | write_nested_sequence |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.009520 / 0.011353 (-0.001833) | 0.005319 / 0.011008 (-0.005690) | 0.099372 / 0.038508 (0.060863) | 0.036173 / 0.023109 (0.013064) | 0.295752 / 0.275898 (0.019853) | 0.362882 / 0.323480 (0.039402) | 0.008442 / 0.007986 (0.000456) | 0.004225 / 0.004328 (-0.000103) | 0.076645 / 0.004250 (0.072394) | 0.044198 / 0.037052 (0.007146) | 0.311948 / 0.258489 (0.053459) | 0.342963 / 0.293841 (0.049122) | 0.038613 / 0.128546 (-0.089933) | 0.012127 / 0.075646 (-0.063519) | 0.334427 / 0.419271 (-0.084844) | 0.048309 / 0.043533 (0.004776) | 0.297046 / 0.255139 (0.041907) | 0.314562 / 0.283200 (0.031363) | 0.105797 / 0.141683 (-0.035886) | 1.460967 / 1.452155 (0.008812) | 1.500907 / 1.492716 (0.008190) 
|\n\n### Benchmark: benchmark_getitem\\_100B.json\n\n| metric | get_batch_of\\_1024\\_random_rows | get_batch_of\\_1024\\_rows | get_first_row | get_last_row |\n|--------|---|---|---|---|\n| new / old (diff) | 0.216185 / 0.018006 (0.198179) | 0.438924 / 0.000490 (0.438435) | 0.001210 / 0.000200 (0.001011) | 0.000081 / 0.000054 (0.000027) |\n\n### Benchmark: benchmark_indices_mapping.json\n\n| metric | select | shard | shuffle | sort | train_test_split |\n|--------|---|---|---|---|---|\n| new / old (diff) | 0.026193 / 0.037411 (-0.011219) | 0.105888 / 0.014526 (0.091363) | 0.115812 / 0.176557 (-0.060744) | 0.158748 / 0.737135 (-0.578387) | 0.121514 / 0.296338 (-0.174824) |\n\n### Benchmark: benchmark_iterating.json\n\n| metric | read 5000 | read 50000 | read_batch 50000 10 | read_batch 50000 100 | read_batch 50000 1000 | read_formatted numpy 5000 | read_formatted pandas 5000 | read_formatted tensorflow 5000 | read_formatted torch 5000 | read_formatted_batch numpy 5000 10 | read_formatted_batch numpy 5000 1000 | shuffled read 5000 | shuffled read 50000 | shuffled read_batch 50000 10 | shuffled read_batch 50000 100 | shuffled read_batch 50000 1000 | shuffled read_formatted numpy 5000 | shuffled read_formatted_batch numpy 5000 10 | shuffled read_formatted_batch numpy 5000 1000 |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.399837 / 0.215209 (0.184628) | 3.996992 / 2.077655 (1.919338) | 1.784964 / 1.504120 (0.280844) | 1.591078 / 1.541195 (0.049883) | 1.666424 / 1.468490 (0.197934) | 0.711450 / 4.584777 (-3.873327) | 3.787814 / 3.745712 (0.042102) | 2.056776 / 5.269862 (-3.213085) | 1.332163 / 4.565676 (-3.233514) | 0.085755 / 0.424275 (-0.338520) | 0.012033 / 0.007607 (0.004426) | 0.511500 / 0.226044 (0.285455) | 5.098999 / 2.268929 (2.830071) | 2.288261 / 55.444624 (-53.156364) | 1.947483 / 6.876477 (-4.928994) | 1.987838 / 2.142072 (-0.154234) | 0.852241 / 4.805227 (-3.952986) | 0.164781 / 6.500664 
(-6.335883) | 0.061825 / 0.075469 (-0.013644) |\n\n### Benchmark: benchmark_map_filter.json\n\n| metric | filter | map fast-tokenizer batched | map identity | map identity batched | map no-op batched | map no-op batched numpy | map no-op batched pandas | map no-op batched pytorch | map no-op batched tensorflow |\n|--------|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 1.202253 / 1.841788 (-0.639534) | 14.632608 / 8.074308 (6.558300) | 13.331320 / 10.191392 (3.139928) | 0.157944 / 0.680424 (-0.522480) | 0.029284 / 0.534201 (-0.504917) | 0.446636 / 0.579283 (-0.132647) | 0.437009 / 0.434364 (0.002645) | 0.521883 / 0.540337 (-0.018455) | 0.606687 / 1.386936 (-0.780249) |\n\n</details>\nPyArrow==latest\n\n<details>\n<summary>Show updated benchmarks!</summary>\n\n### Benchmark: benchmark_array_xd.json\n\n| metric | read_batch_formatted_as_numpy after write_array2d | read_batch_formatted_as_numpy after write_flattened_sequence | read_batch_formatted_as_numpy after write_nested_sequence | read_batch_unformated after write_array2d | read_batch_unformated after write_flattened_sequence | read_batch_unformated after write_nested_sequence | read_col_formatted_as_numpy after write_array2d | read_col_formatted_as_numpy after write_flattened_sequence | read_col_formatted_as_numpy after write_nested_sequence | read_col_unformated after write_array2d | read_col_unformated after write_flattened_sequence | read_col_unformated after write_nested_sequence | read_formatted_as_numpy after write_array2d | read_formatted_as_numpy after write_flattened_sequence | read_formatted_as_numpy after write_nested_sequence | read_unformated after write_array2d | read_unformated after write_flattened_sequence | read_unformated after write_nested_sequence | write_array2d | write_flattened_sequence | write_nested_sequence |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.007528 / 0.011353 (-0.003825) | 0.005274 / 
0.011008 (-0.005734) | 0.073524 / 0.038508 (0.035016) | 0.033893 / 0.023109 (0.010784) | 0.335432 / 0.275898 (0.059534) | 0.379981 / 0.323480 (0.056501) | 0.005954 / 0.007986 (-0.002031) | 0.004126 / 0.004328 (-0.000203) | 0.072891 / 0.004250 (0.068641) | 0.046517 / 0.037052 (0.009465) | 0.337241 / 0.258489 (0.078752) | 0.385562 / 0.293841 (0.091721) | 0.036410 / 0.128546 (-0.092136) | 0.012246 / 0.075646 (-0.063401) | 0.085974 / 0.419271 (-0.333298) | 0.049665 / 0.043533 (0.006133) | 0.330919 / 0.255139 (0.075780) | 0.352041 / 0.283200 (0.068841) | 0.103751 / 0.141683 (-0.037931) | 1.468851 / 1.452155 (0.016696) | 1.565380 / 1.492716 (0.072663) |\n\n### Benchmark: benchmark_getitem\\_100B.json\n\n| metric | get_batch_of\\_1024\\_random_rows | get_batch_of\\_1024\\_rows | get_first_row | get_last_row |\n|--------|---|---|---|---|\n| new / old (diff) | 0.260431 / 0.018006 (0.242425) | 0.444554 / 0.000490 (0.444064) | 0.016055 / 0.000200 (0.015855) | 0.000283 / 0.000054 (0.000228) |\n\n### Benchmark: benchmark_indices_mapping.json\n\n| metric | select | shard | shuffle | sort | train_test_split |\n|--------|---|---|---|---|---|\n| new / old (diff) | 0.029130 / 0.037411 (-0.008281) | 0.112002 / 0.014526 (0.097476) | 0.120769 / 0.176557 (-0.055788) | 0.169345 / 0.737135 (-0.567790) | 0.129609 / 0.296338 (-0.166730) |\n\n### Benchmark: benchmark_iterating.json\n\n| metric | read 5000 | read 50000 | read_batch 50000 10 | read_batch 50000 100 | read_batch 50000 1000 | read_formatted numpy 5000 | read_formatted pandas 5000 | read_formatted tensorflow 5000 | read_formatted torch 5000 | read_formatted_batch numpy 5000 10 | read_formatted_batch numpy 5000 1000 | shuffled read 5000 | shuffled read 50000 | shuffled read_batch 50000 10 | shuffled read_batch 50000 100 | shuffled read_batch 50000 1000 | shuffled read_formatted numpy 5000 | shuffled read_formatted_batch numpy 5000 10 | shuffled read_formatted_batch numpy 5000 1000 
|\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.432211 / 0.215209 (0.217002) | 4.293008 / 2.077655 (2.215353) | 2.071291 / 1.504120 (0.567171) | 1.859322 / 1.541195 (0.318127) | 1.971434 / 1.468490 (0.502943) | 0.704042 / 4.584777 (-3.880735) | 3.791696 / 3.745712 (0.045983) | 3.142632 / 5.269862 (-2.127230) | 1.735151 / 4.565676 (-2.830525) | 0.086203 / 0.424275 (-0.338072) | 0.012542 / 0.007607 (0.004935) | 0.534870 / 0.226044 (0.308826) | 5.326042 / 2.268929 (3.057113) | 2.547960 / 55.444624 (-52.896664) | 2.212730 / 6.876477 (-4.663747) | 2.296177 / 2.142072 (0.154105) | 0.840311 / 4.805227 (-3.964917) | 0.168353 / 6.500664 (-6.332311) | 0.065949 / 0.075469 (-0.009520) |\n\n### Benchmark: benchmark_map_filter.json\n\n| metric | filter | map fast-tokenizer batched | map identity | map identity batched | map no-op batched | map no-op batched numpy | map no-op batched pandas | map no-op batched pytorch | map no-op batched tensorflow |\n|--------|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 1.255589 / 1.841788 (-0.586199) | 14.947344 / 8.074308 (6.873036) | 13.253721 / 10.191392 (3.062329) | 0.162349 / 0.680424 (-0.518075) | 0.017579 / 0.534201 (-0.516622) | 0.420758 / 0.579283 (-0.158525) | 0.430030 / 0.434364 (-0.004334) | 0.524669 / 0.540337 (-0.015669) | 0.623920 / 1.386936 (-0.763016) |\n\n</details>\n</details>\n\n\n"

] | 2023-03-03T15:46:31 | 2023-03-03T21:57:18 | 2023-03-03T21:50:17 | CONTRIBUTOR | null | null | {
"url": "https://api.github.com/repos/huggingface/datasets/issues/5605/reactions", "total_count": 0, "+1": 0, "-1": 0, "laugh": 0, "hooray": 0, "confused": 0, "heart": 0, "rocket": 0, "eyes": 0
} | https://api.github.com/repos/huggingface/datasets/issues/5605/timeline | null | null | false | {
"url": "https://api.github.com/repos/huggingface/datasets/pulls/5605", "html_url": "https://github.com/huggingface/datasets/pull/5605", "diff_url": "https://github.com/huggingface/datasets/pull/5605.diff", "patch_url": "https://github.com/huggingface/datasets/pull/5605.patch", "merged_at": "2023-03-03T21:50:17"
} | true |

https://api.github.com/repos/huggingface/datasets/issues/5604 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/5604/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/5604/comments | https://api.github.com/repos/huggingface/datasets/issues/5604/events | https://github.com/huggingface/datasets/issues/5604 | 1,608,304,775 | I_kwDODunzps5f3MiH | 5,604 | Problems with downloading The Pile | {
"login": "sentialx", "id": 11065386, "node_id": "MDQ6VXNlcjExMDY1Mzg2", "avatar_url": "https://avatars.githubusercontent.com/u/11065386?v=4", "gravatar_id": "", "url": "https://api.github.com/users/sentialx", "html_url": "https://github.com/sentialx", "followers_url": "https://api.github.com/users/sentialx/followers", "following_url": "https://api.github.com/users/sentialx/following{/other_user}", "gists_url": "https://api.github.com/users/sentialx/gists{/gist_id}", "starred_url": "https://api.github.com/users/sentialx/starred{/owner}{/repo}", "subscriptions_url": "https://api.github.com/users/sentialx/subscriptions", "organizations_url": "https://api.github.com/users/sentialx/orgs", "repos_url": "https://api.github.com/users/sentialx/repos", "events_url": "https://api.github.com/users/sentialx/events{/privacy}", "received_events_url": "https://api.github.com/users/sentialx/received_events", "type": "User", "site_admin": false
} | [] | open | false | null | [] | null | [] | 2023-03-03T09:52:08 | 2023-03-03T09:52:08 | null | NONE | null | ### Describe the bug

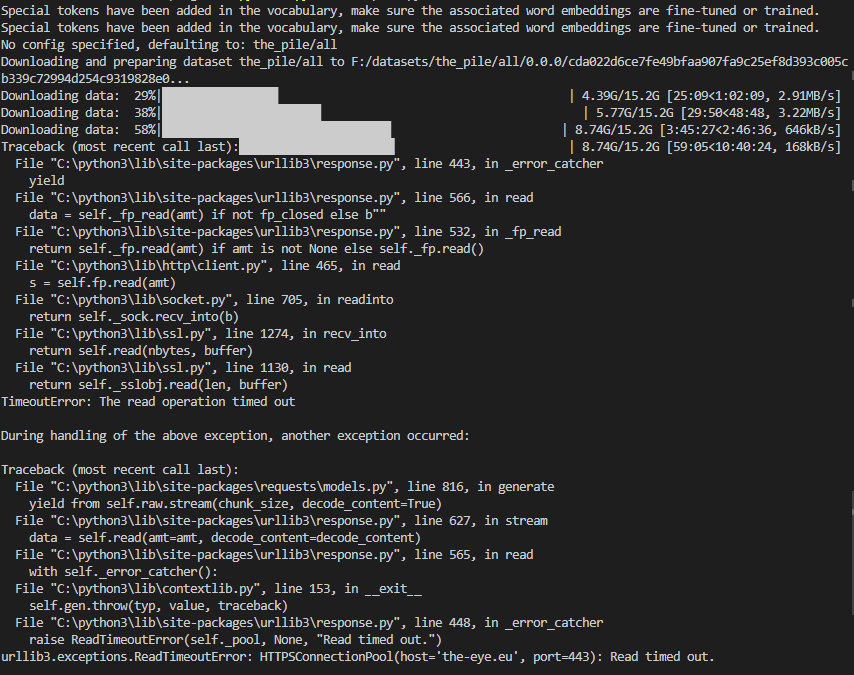

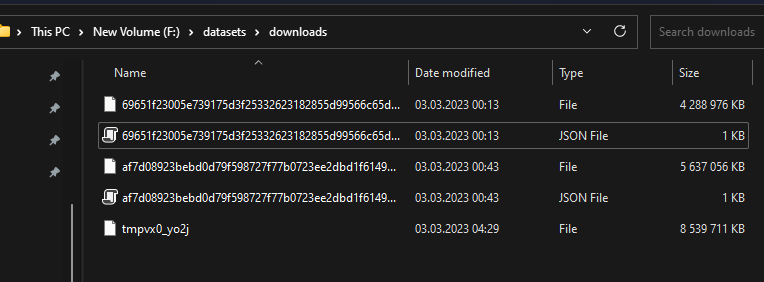

The downloads in the screenshots seem to be interrupted after some time, and the last download throws a "Read timed out" error.

Here are the downloaded files:

They should all be 14GB, like the files here (https://the-eye.eu/public/AI/pile/train/).

Alternatively, can I download the files myself and then use the dataset's preparation script?
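If the mirror supports HTTP range requests, you can resume a partially downloaded file after a "Read timed out" error instead of restarting it. The stdlib sketch below shows that technique. `download_with_resume` and `resume_headers` are hypothetical helpers, and `url` stands for whichever file you are fetching. The sketch does not cover how to point the `the_pile` preparation script at the local copies.

```python
import os
import shutil
import urllib.request


def resume_headers(path):
    """Return a Range header that asks for the bytes after those already on disk."""
    size = os.path.getsize(path) if os.path.exists(path) else 0
    return {"Range": f"bytes={size}-"} if size else {}


def download_with_resume(url, path, timeout=60, chunk_size=1 << 20):
    """Download url to path, appending to a partial file when the server allows it."""
    headers = resume_headers(path)
    request = urllib.request.Request(url, headers=headers)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        # 206 Partial Content means the Range request was honoured, so append;
        # any other success status means the full body was sent, so start over.
        status = getattr(response, "status", None)
        mode = "ab" if headers and status == 206 else "wb"
        with open(path, mode) as out:
            shutil.copyfileobj(response, out, chunk_size)
```

If the local file is already complete, a server will typically answer 416 (Range Not Satisfiable), and `urlopen` raises `urllib.error.HTTPError`. Compare file sizes against the listing before retrying.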

### Steps to reproduce the bug

`dataset = load_dataset('the_pile', split='train', cache_dir=r'F:\datasets')`

### Expected behavior

The files should be downloaded correctly.

### Environment info

- `datasets` version: 2.10.1

- Platform: Windows-10-10.0.22623-SP0

- Python version: 3.10.5

- PyArrow version: 9.0.0

- Pandas version: 1.4.2 | {
"url": "https://api.github.com/repos/huggingface/datasets/issues/5604/reactions", "total_count": 0, "+1": 0, "-1": 0, "laugh": 0, "hooray": 0, "confused": 0, "heart": 0, "rocket": 0, "eyes": 0
} | https://api.github.com/repos/huggingface/datasets/issues/5604/timeline | null | null | null | null | false |

https://api.github.com/repos/huggingface/datasets/issues/5603 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/5603/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/5603/comments | https://api.github.com/repos/huggingface/datasets/issues/5603/events | https://github.com/huggingface/datasets/pull/5603 | 1,607,143,509 | PR_kwDODunzps5LJZzG | 5,603 | Don't compute checksums if not necessary in `datasets-cli test` | {
"login": "lhoestq", "id": 42851186, "node_id": "MDQ6VXNlcjQyODUxMTg2", "avatar_url": "https://avatars.githubusercontent.com/u/42851186?v=4", "gravatar_id": "", "url": "https://api.github.com/users/lhoestq", "html_url": "https://github.com/lhoestq", "followers_url": "https://api.github.com/users/lhoestq/followers", "following_url": "https://api.github.com/users/lhoestq/following{/other_user}", "gists_url": "https://api.github.com/users/lhoestq/gists{/gist_id}", "starred_url": "https://api.github.com/users/lhoestq/starred{/owner}{/repo}", "subscriptions_url": "https://api.github.com/users/lhoestq/subscriptions", "organizations_url": "https://api.github.com/users/lhoestq/orgs", "repos_url": "https://api.github.com/users/lhoestq/repos", "events_url": "https://api.github.com/users/lhoestq/events{/privacy}", "received_events_url": "https://api.github.com/users/lhoestq/received_events", "type": "User", "site_admin": false
} | [] | closed | false | null | [] | null | [

"_The documentation is not available anymore as the PR was closed or merged._",

"<details>\n<summary>Show benchmarks</summary>\n\nPyArrow==6.0.0\n\n<details>\n<summary>Show updated benchmarks!</summary>\n\n### Benchmark: benchmark_array_xd.json\n\n| metric | read_batch_formatted_as_numpy after write_array2d | read_batch_formatted_as_numpy after write_flattened_sequence | read_batch_formatted_as_numpy after write_nested_sequence | read_batch_unformated after write_array2d | read_batch_unformated after write_flattened_sequence | read_batch_unformated after write_nested_sequence | read_col_formatted_as_numpy after write_array2d | read_col_formatted_as_numpy after write_flattened_sequence | read_col_formatted_as_numpy after write_nested_sequence | read_col_unformated after write_array2d | read_col_unformated after write_flattened_sequence | read_col_unformated after write_nested_sequence | read_formatted_as_numpy after write_array2d | read_formatted_as_numpy after write_flattened_sequence | read_formatted_as_numpy after write_nested_sequence | read_unformated after write_array2d | read_unformated after write_flattened_sequence | read_unformated after write_nested_sequence | write_array2d | write_flattened_sequence | write_nested_sequence |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.008550 / 0.011353 (-0.002803) | 0.004476 / 0.011008 (-0.006532) | 0.100902 / 0.038508 (0.062394) | 0.029684 / 0.023109 (0.006575) | 0.308081 / 0.275898 (0.032183) | 0.363435 / 0.323480 (0.039955) | 0.006987 / 0.007986 (-0.000999) | 0.003401 / 0.004328 (-0.000927) | 0.078218 / 0.004250 (0.073967) | 0.036657 / 0.037052 (-0.000395) | 0.319670 / 0.258489 (0.061181) | 0.349952 / 0.293841 (0.056111) | 0.033416 / 0.128546 (-0.095130) | 0.011511 / 0.075646 (-0.064135) | 0.323888 / 0.419271 (-0.095384) | 0.042429 / 0.043533 (-0.001104) | 0.307310 / 0.255139 (0.052171) | 0.329459 / 0.283200 (0.046259) | 0.085209 / 0.141683 (-0.056474) | 1.475893 / 1.452155 (0.023739) | 1.502782 / 1.492716 (0.010065) 
|\n\n### Benchmark: benchmark_getitem\\_100B.json\n\n| metric | get_batch_of\\_1024\\_random_rows | get_batch_of\\_1024\\_rows | get_first_row | get_last_row |\n|--------|---|---|---|---|\n| new / old (diff) | 0.200137 / 0.018006 (0.182131) | 0.411269 / 0.000490 (0.410780) | 0.000415 / 0.000200 (0.000215) | 0.000061 / 0.000054 (0.000006) |\n\n### Benchmark: benchmark_indices_mapping.json\n\n| metric | select | shard | shuffle | sort | train_test_split |\n|--------|---|---|---|---|---|\n| new / old (diff) | 0.022626 / 0.037411 (-0.014785) | 0.097045 / 0.014526 (0.082519) | 0.102955 / 0.176557 (-0.073602) | 0.148411 / 0.737135 (-0.588725) | 0.107238 / 0.296338 (-0.189100) |\n\n### Benchmark: benchmark_iterating.json\n\n| metric | read 5000 | read 50000 | read_batch 50000 10 | read_batch 50000 100 | read_batch 50000 1000 | read_formatted numpy 5000 | read_formatted pandas 5000 | read_formatted tensorflow 5000 | read_formatted torch 5000 | read_formatted_batch numpy 5000 10 | read_formatted_batch numpy 5000 1000 | shuffled read 5000 | shuffled read 50000 | shuffled read_batch 50000 10 | shuffled read_batch 50000 100 | shuffled read_batch 50000 1000 | shuffled read_formatted numpy 5000 | shuffled read_formatted_batch numpy 5000 10 | shuffled read_formatted_batch numpy 5000 1000 |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.421683 / 0.215209 (0.206474) | 4.203031 / 2.077655 (2.125376) | 1.908232 / 1.504120 (0.404112) | 1.698867 / 1.541195 (0.157672) | 1.743561 / 1.468490 (0.275071) | 0.693199 / 4.584777 (-3.891578) | 3.361022 / 3.745712 (-0.384690) | 2.989610 / 5.269862 (-2.280251) | 1.533036 / 4.565676 (-3.032641) | 0.082675 / 0.424275 (-0.341601) | 0.012419 / 0.007607 (0.004812) | 0.531543 / 0.226044 (0.305499) | 5.330595 / 2.268929 (3.061666) | 2.347519 / 55.444624 (-53.097105) | 1.975672 / 6.876477 (-4.900804) | 2.039541 / 2.142072 (-0.102532) | 0.810281 / 4.805227 (-3.994946) | 0.148917 / 6.500664 
(-6.351747) | 0.065441 / 0.075469 (-0.010028) |\n\n### Benchmark: benchmark_map_filter.json\n\n| metric | filter | map fast-tokenizer batched | map identity | map identity batched | map no-op batched | map no-op batched numpy | map no-op batched pandas | map no-op batched pytorch | map no-op batched tensorflow |\n|--------|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 1.266213 / 1.841788 (-0.575574) | 13.628106 / 8.074308 (5.553798) | 13.852191 / 10.191392 (3.660799) | 0.149004 / 0.680424 (-0.531420) | 0.028549 / 0.534201 (-0.505652) | 0.399824 / 0.579283 (-0.179459) | 0.401231 / 0.434364 (-0.033133) | 0.473251 / 0.540337 (-0.067086) | 0.561094 / 1.386936 (-0.825842) |\n\n</details>\nPyArrow==latest\n\n<details>\n<summary>Show updated benchmarks!</summary>\n\n### Benchmark: benchmark_array_xd.json\n\n| metric | read_batch_formatted_as_numpy after write_array2d | read_batch_formatted_as_numpy after write_flattened_sequence | read_batch_formatted_as_numpy after write_nested_sequence | read_batch_unformated after write_array2d | read_batch_unformated after write_flattened_sequence | read_batch_unformated after write_nested_sequence | read_col_formatted_as_numpy after write_array2d | read_col_formatted_as_numpy after write_flattened_sequence | read_col_formatted_as_numpy after write_nested_sequence | read_col_unformated after write_array2d | read_col_unformated after write_flattened_sequence | read_col_unformated after write_nested_sequence | read_formatted_as_numpy after write_array2d | read_formatted_as_numpy after write_flattened_sequence | read_formatted_as_numpy after write_nested_sequence | read_unformated after write_array2d | read_unformated after write_flattened_sequence | read_unformated after write_nested_sequence | write_array2d | write_flattened_sequence | write_nested_sequence |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.006669 / 0.011353 (-0.004684) | 0.004477 / 
0.011008 (-0.006532) | 0.077514 / 0.038508 (0.039006) | 0.027489 / 0.023109 (0.004380) | 0.341935 / 0.275898 (0.066037) | 0.377392 / 0.323480 (0.053912) | 0.004947 / 0.007986 (-0.003039) | 0.004600 / 0.004328 (0.000271) | 0.075938 / 0.004250 (0.071687) | 0.039586 / 0.037052 (0.002534) | 0.344966 / 0.258489 (0.086477) | 0.392181 / 0.293841 (0.098340) | 0.031838 / 0.128546 (-0.096708) | 0.011572 / 0.075646 (-0.064075) | 0.085811 / 0.419271 (-0.333461) | 0.042250 / 0.043533 (-0.001283) | 0.345605 / 0.255139 (0.090466) | 0.367814 / 0.283200 (0.084615) | 0.090683 / 0.141683 (-0.051000) | 1.483168 / 1.452155 (0.031014) | 1.559724 / 1.492716 (0.067008) |\n\n### Benchmark: benchmark_getitem\\_100B.json\n\n| metric | get_batch_of\\_1024\\_random_rows | get_batch_of\\_1024\\_rows | get_first_row | get_last_row |\n|--------|---|---|---|---|\n| new / old (diff) | 0.235655 / 0.018006 (0.217649) | 0.399016 / 0.000490 (0.398527) | 0.003096 / 0.000200 (0.002896) | 0.000077 / 0.000054 (0.000022) |\n\n### Benchmark: benchmark_indices_mapping.json\n\n| metric | select | shard | shuffle | sort | train_test_split |\n|--------|---|---|---|---|---|\n| new / old (diff) | 0.024454 / 0.037411 (-0.012957) | 0.100710 / 0.014526 (0.086185) | 0.107950 / 0.176557 (-0.068606) | 0.161560 / 0.737135 (-0.575576) | 0.111840 / 0.296338 (-0.184498) |\n\n### Benchmark: benchmark_iterating.json\n\n| metric | read 5000 | read 50000 | read_batch 50000 10 | read_batch 50000 100 | read_batch 50000 1000 | read_formatted numpy 5000 | read_formatted pandas 5000 | read_formatted tensorflow 5000 | read_formatted torch 5000 | read_formatted_batch numpy 5000 10 | read_formatted_batch numpy 5000 1000 | shuffled read 5000 | shuffled read 50000 | shuffled read_batch 50000 10 | shuffled read_batch 50000 100 | shuffled read_batch 50000 1000 | shuffled read_formatted numpy 5000 | shuffled read_formatted_batch numpy 5000 10 | shuffled read_formatted_batch numpy 5000 1000 
|\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.441362 / 0.215209 (0.226153) | 4.428105 / 2.077655 (2.350450) | 2.074501 / 1.504120 (0.570381) | 1.866672 / 1.541195 (0.325477) | 1.928266 / 1.468490 (0.459776) | 0.703561 / 4.584777 (-3.881216) | 3.396537 / 3.745712 (-0.349175) | 3.047369 / 5.269862 (-2.222492) | 1.595133 / 4.565676 (-2.970543) | 0.084028 / 0.424275 (-0.340247) | 0.012349 / 0.007607 (0.004741) | 0.539354 / 0.226044 (0.313310) | 5.401535 / 2.268929 (3.132606) | 2.499874 / 55.444624 (-52.944750) | 2.161406 / 6.876477 (-4.715071) | 2.197385 / 2.142072 (0.055313) | 0.810864 / 4.805227 (-3.994363) | 0.152277 / 6.500664 (-6.348387) | 0.067266 / 0.075469 (-0.008203) |\n\n### Benchmark: benchmark_map_filter.json\n\n| metric | filter | map fast-tokenizer batched | map identity | map identity batched | map no-op batched | map no-op batched numpy | map no-op batched pandas | map no-op batched pytorch | map no-op batched tensorflow |\n|--------|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 1.280900 / 1.841788 (-0.560887) | 13.815731 / 8.074308 (5.741423) | 13.007438 / 10.191392 (2.816046) | 0.129711 / 0.680424 (-0.550713) | 0.016852 / 0.534201 (-0.517349) | 0.380775 / 0.579283 (-0.198508) | 0.384143 / 0.434364 (-0.050221) | 0.459954 / 0.540337 (-0.080383) | 0.549335 / 1.386936 (-0.837601) |\n\n</details>\n</details>\n\n\n",

"<details>\n<summary>Show benchmarks</summary>\n\nPyArrow==6.0.0\n\n<details>\n<summary>Show updated benchmarks!</summary>\n\n### Benchmark: benchmark_array_xd.json\n\n| metric | read_batch_formatted_as_numpy after write_array2d | read_batch_formatted_as_numpy after write_flattened_sequence | read_batch_formatted_as_numpy after write_nested_sequence | read_batch_unformated after write_array2d | read_batch_unformated after write_flattened_sequence | read_batch_unformated after write_nested_sequence | read_col_formatted_as_numpy after write_array2d | read_col_formatted_as_numpy after write_flattened_sequence | read_col_formatted_as_numpy after write_nested_sequence | read_col_unformated after write_array2d | read_col_unformated after write_flattened_sequence | read_col_unformated after write_nested_sequence | read_formatted_as_numpy after write_array2d | read_formatted_as_numpy after write_flattened_sequence | read_formatted_as_numpy after write_nested_sequence | read_unformated after write_array2d | read_unformated after write_flattened_sequence | read_unformated after write_nested_sequence | write_array2d | write_flattened_sequence | write_nested_sequence |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.009570 / 0.011353 (-0.001783) | 0.005219 / 0.011008 (-0.005789) | 0.098472 / 0.038508 (0.059964) | 0.035429 / 0.023109 (0.012320) | 0.303086 / 0.275898 (0.027188) | 0.365926 / 0.323480 (0.042446) | 0.008797 / 0.007986 (0.000811) | 0.004220 / 0.004328 (-0.000108) | 0.076670 / 0.004250 (0.072419) | 0.045596 / 0.037052 (0.008543) | 0.309476 / 0.258489 (0.050987) | 0.343958 / 0.293841 (0.050117) | 0.038741 / 0.128546 (-0.089805) | 0.011990 / 0.075646 (-0.063657) | 0.332326 / 0.419271 (-0.086945) | 0.048897 / 0.043533 (0.005364) | 0.296002 / 0.255139 (0.040863) | 0.322048 / 0.283200 (0.038849) | 0.104403 / 0.141683 (-0.037280) | 1.461777 / 1.452155 (0.009622) | 1.516362 / 1.492716 (0.023645) 
|\n\n### Benchmark: benchmark_getitem\\_100B.json\n\n| metric | get_batch_of\\_1024\\_random_rows | get_batch_of\\_1024\\_rows | get_first_row | get_last_row |\n|--------|---|---|---|---|\n| new / old (diff) | 0.201565 / 0.018006 (0.183559) | 0.435781 / 0.000490 (0.435291) | 0.004215 / 0.000200 (0.004015) | 0.000282 / 0.000054 (0.000227) |\n\n### Benchmark: benchmark_indices_mapping.json\n\n| metric | select | shard | shuffle | sort | train_test_split |\n|--------|---|---|---|---|---|\n| new / old (diff) | 0.027272 / 0.037411 (-0.010139) | 0.106157 / 0.014526 (0.091631) | 0.116948 / 0.176557 (-0.059609) | 0.160404 / 0.737135 (-0.576731) | 0.122518 / 0.296338 (-0.173820) |\n\n### Benchmark: benchmark_iterating.json\n\n| metric | read 5000 | read 50000 | read_batch 50000 10 | read_batch 50000 100 | read_batch 50000 1000 | read_formatted numpy 5000 | read_formatted pandas 5000 | read_formatted tensorflow 5000 | read_formatted torch 5000 | read_formatted_batch numpy 5000 10 | read_formatted_batch numpy 5000 1000 | shuffled read 5000 | shuffled read 50000 | shuffled read_batch 50000 10 | shuffled read_batch 50000 100 | shuffled read_batch 50000 1000 | shuffled read_formatted numpy 5000 | shuffled read_formatted_batch numpy 5000 10 | shuffled read_formatted_batch numpy 5000 1000 |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.397721 / 0.215209 (0.182512) | 3.966433 / 2.077655 (1.888778) | 1.755410 / 1.504120 (0.251290) | 1.566480 / 1.541195 (0.025285) | 1.623684 / 1.468490 (0.155194) | 0.696820 / 4.584777 (-3.887957) | 3.750437 / 3.745712 (0.004725) | 2.105875 / 5.269862 (-3.163986) | 1.442026 / 4.565676 (-3.123650) | 0.085026 / 0.424275 (-0.339249) | 0.012239 / 0.007607 (0.004632) | 0.502613 / 0.226044 (0.276569) | 5.049016 / 2.268929 (2.780087) | 2.314499 / 55.444624 (-53.130126) | 1.967943 / 6.876477 (-4.908534) | 2.033507 / 2.142072 (-0.108565) | 0.861908 / 4.805227 (-3.943319) | 0.167784 / 6.500664 
(-6.332880) | 0.063022 / 0.075469 (-0.012447) |\n\n### Benchmark: benchmark_map_filter.json\n\n| metric | filter | map fast-tokenizer batched | map identity | map identity batched | map no-op batched | map no-op batched numpy | map no-op batched pandas | map no-op batched pytorch | map no-op batched tensorflow |\n|--------|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 1.210434 / 1.841788 (-0.631353) | 14.979319 / 8.074308 (6.905011) | 14.095263 / 10.191392 (3.903871) | 0.174203 / 0.680424 (-0.506221) | 0.028547 / 0.534201 (-0.505654) | 0.442509 / 0.579283 (-0.136774) | 0.445811 / 0.434364 (0.011447) | 0.531313 / 0.540337 (-0.009024) | 0.636541 / 1.386936 (-0.750395) |\n\n</details>\nPyArrow==latest\n\n<details>\n<summary>Show updated benchmarks!</summary>\n\n### Benchmark: benchmark_array_xd.json\n\n| metric | read_batch_formatted_as_numpy after write_array2d | read_batch_formatted_as_numpy after write_flattened_sequence | read_batch_formatted_as_numpy after write_nested_sequence | read_batch_unformated after write_array2d | read_batch_unformated after write_flattened_sequence | read_batch_unformated after write_nested_sequence | read_col_formatted_as_numpy after write_array2d | read_col_formatted_as_numpy after write_flattened_sequence | read_col_formatted_as_numpy after write_nested_sequence | read_col_unformated after write_array2d | read_col_unformated after write_flattened_sequence | read_col_unformated after write_nested_sequence | read_formatted_as_numpy after write_array2d | read_formatted_as_numpy after write_flattened_sequence | read_formatted_as_numpy after write_nested_sequence | read_unformated after write_array2d | read_unformated after write_flattened_sequence | read_unformated after write_nested_sequence | write_array2d | write_flattened_sequence | write_nested_sequence |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.007341 / 0.011353 (-0.004012) | 0.005197 / 
0.011008 (-0.005811) | 0.075413 / 0.038508 (0.036905) | 0.033261 / 0.023109 (0.010152) | 0.339596 / 0.275898 (0.063698) | 0.376051 / 0.323480 (0.052571) | 0.005827 / 0.007986 (-0.002159) | 0.005473 / 0.004328 (0.001144) | 0.074851 / 0.004250 (0.070600) | 0.049059 / 0.037052 (0.012007) | 0.357182 / 0.258489 (0.098693) | 0.384589 / 0.293841 (0.090748) | 0.037122 / 0.128546 (-0.091424) | 0.012298 / 0.075646 (-0.063348) | 0.088191 / 0.419271 (-0.331081) | 0.052002 / 0.043533 (0.008469) | 0.343216 / 0.255139 (0.088077) | 0.364534 / 0.283200 (0.081334) | 0.105462 / 0.141683 (-0.036221) | 1.486717 / 1.452155 (0.034562) | 1.584725 / 1.492716 (0.092009) |\n\n### Benchmark: benchmark_getitem\\_100B.json\n\n| metric | get_batch_of\\_1024\\_random_rows | get_batch_of\\_1024\\_rows | get_first_row | get_last_row |\n|--------|---|---|---|---|\n| new / old (diff) | 0.199210 / 0.018006 (0.181203) | 0.439069 / 0.000490 (0.438580) | 0.000436 / 0.000200 (0.000236) | 0.000059 / 0.000054 (0.000005) |\n\n### Benchmark: benchmark_indices_mapping.json\n\n| metric | select | shard | shuffle | sort | train_test_split |\n|--------|---|---|---|---|---|\n| new / old (diff) | 0.029931 / 0.037411 (-0.007480) | 0.109564 / 0.014526 (0.095038) | 0.122284 / 0.176557 (-0.054273) | 0.170819 / 0.737135 (-0.566317) | 0.125886 / 0.296338 (-0.170452) |\n\n### Benchmark: benchmark_iterating.json\n\n| metric | read 5000 | read 50000 | read_batch 50000 10 | read_batch 50000 100 | read_batch 50000 1000 | read_formatted numpy 5000 | read_formatted pandas 5000 | read_formatted tensorflow 5000 | read_formatted torch 5000 | read_formatted_batch numpy 5000 10 | read_formatted_batch numpy 5000 1000 | shuffled read 5000 | shuffled read 50000 | shuffled read_batch 50000 10 | shuffled read_batch 50000 100 | shuffled read_batch 50000 1000 | shuffled read_formatted numpy 5000 | shuffled read_formatted_batch numpy 5000 10 | shuffled read_formatted_batch numpy 5000 1000 
|\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.422724 / 0.215209 (0.207515) | 4.210304 / 2.077655 (2.132650) | 2.001481 / 1.504120 (0.497361) | 1.810818 / 1.541195 (0.269623) | 1.901367 / 1.468490 (0.432877) | 0.686004 / 4.584777 (-3.898773) | 3.768850 / 3.745712 (0.023138) | 2.079501 / 5.269862 (-3.190360) | 1.326970 / 4.565676 (-3.238706) | 0.085991 / 0.424275 (-0.338284) | 0.012298 / 0.007607 (0.004690) | 0.526878 / 0.226044 (0.300833) | 5.267241 / 2.268929 (2.998312) | 2.451781 / 55.444624 (-52.992843) | 2.109143 / 6.876477 (-4.767333) | 2.185426 / 2.142072 (0.043353) | 0.830165 / 4.805227 (-3.975063) | 0.166167 / 6.500664 (-6.334497) | 0.064077 / 0.075469 (-0.011392) |\n\n### Benchmark: benchmark_map_filter.json\n\n| metric | filter | map fast-tokenizer batched | map identity | map identity batched | map no-op batched | map no-op batched numpy | map no-op batched pandas | map no-op batched pytorch | map no-op batched tensorflow |\n|--------|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 1.270430 / 1.841788 (-0.571358) | 14.844852 / 8.074308 (6.770544) | 13.196672 / 10.191392 (3.005280) | 0.162853 / 0.680424 (-0.517571) | 0.017727 / 0.534201 (-0.516474) | 0.424803 / 0.579283 (-0.154480) | 0.439970 / 0.434364 (0.005606) | 0.530691 / 0.540337 (-0.009647) | 0.630474 / 1.386936 (-0.756462) |\n\n</details>\n</details>\n\n\n"

] | 2023-03-02T16:42:39 | 2023-03-03T15:45:32 | 2023-03-03T15:38:28 | MEMBER | null | we only need them if there exists a `dataset_infos.json` | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/5603/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/5603/timeline | null | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/5603",

"html_url": "https://github.com/huggingface/datasets/pull/5603",

"diff_url": "https://github.com/huggingface/datasets/pull/5603.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/5603.patch",

"merged_at": "2023-03-03T15:38:28"

} | true |

https://api.github.com/repos/huggingface/datasets/issues/5602 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/5602/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/5602/comments | https://api.github.com/repos/huggingface/datasets/issues/5602/events | https://github.com/huggingface/datasets/pull/5602 | 1,607,054,110 | PR_kwDODunzps5LJGfa | 5,602 | Return dict structure if columns are lists - to_tf_dataset | {

"login": "amyeroberts",

"id": 22614925,

"node_id": "MDQ6VXNlcjIyNjE0OTI1",

"avatar_url": "https://avatars.githubusercontent.com/u/22614925?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/amyeroberts",

"html_url": "https://github.com/amyeroberts",

"followers_url": "https://api.github.com/users/amyeroberts/followers",

"following_url": "https://api.github.com/users/amyeroberts/following{/other_user}",

"gists_url": "https://api.github.com/users/amyeroberts/gists{/gist_id}",

"starred_url": "https://api.github.com/users/amyeroberts/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/amyeroberts/subscriptions",

"organizations_url": "https://api.github.com/users/amyeroberts/orgs",

"repos_url": "https://api.github.com/users/amyeroberts/repos",

"events_url": "https://api.github.com/users/amyeroberts/events{/privacy}",

"received_events_url": "https://api.github.com/users/amyeroberts/received_events",

"type": "User",

"site_admin": false

} | [] | open | false | null | [] | null | [

"The docs for this PR live [here](https://moon-ci-docs.huggingface.co/docs/datasets/pr_5602). All of your documentation changes will be reflected on that endpoint.",

"This is a great PR! Thinking about the UX though, maybe we could do it without the extra argument? Before this PR, the logic in `to_tf_dataset` was that if the user passed a single column name in either `columns` or `label_cols`, we converted it to a length-1 list. Then, later in the code, we convert output dicts with only one key to naked Tensors.\r\n\r\nWould it be easier if we removed the argument, but instead treated the cases differently? Passing a column name as a string could yield a single naked Tensor in the output as before, but passing a list of length 1 would yield a full dict? That way if you wanted dict output with a single key you could just say `columns=[col_name]`.\r\n\r\n(I'm not totally convinced this is a good idea yet, it just seems like it might be more intuitive)",

"@Rocketknight1 Happy to implement it that way - it's certainly cleaner to not have another arg. In this case, am I right in saying we'd effectively set `return_dict` [here](https://github.com/huggingface/datasets/blob/6569014a9948eab7d031a3587405e64ba92d6c59/src/datasets/arrow_dataset.py#L410) - where columns are made into a list if they were a string? \r\n\r\nThere only concern I have is this changes the default behaviour, which might break things for people who were happily using `columns=[\"my_col_str\"]` before. \r\n\r\n\r\n",

"@amyeroberts That's correct! Probably the simplest way to implement it would be to just add the flag there.\r\n\r\nAnd yeah, I'm aware this might be a slightly breaking change, but we've mostly tried to move users to `prepare_tf_dataset` in `transformers` at this point, so hopefully as long as that method doesn't break then most users won't be negatively affected by the change.",

"@lhoestq @Rocketknight1 - I've remove the `return_dict` argument and implemented @Rocketknight1 's suggestion. LMK what you think :) ",

"@lhoestq Of course :) I've opened a draft PR here for the updates needed in transformers examples and docs to keep the returned data structure consistent: https://github.com/huggingface/transformers/pull/21935. Note: even with the different structure, `model.fit` can still successfully be called. \r\n\r\nFor the [link you shared](https://github.com/huggingface/datasets/pull/url) - for me it returns a 404 error. Is there another link I could follow to see how to run the transformers CI with this branch? \r\n\r\nCurrently looking into the failing tests 😭 ",

"Oh sorry - I fixed the URL: https://github.com/huggingface/transformers/commit/4eb55bbd593adf2e49362613ee32a11ddc4a854d",

"The error shows `There appear to be 80 leaked shared_memory objects to clean up at shutdown`. IIRC to_tf_dataset does some shared memory stuff for multiprocessing - maybe @Rocketknight1 you know what's going on ?"

] | 2023-03-02T15:51:12 | 2023-03-03T21:21:39 | null | CONTRIBUTOR | null | This PR introduces new logic to `to_tf_dataset` affecting the returned data structure, enabling a dictionary structure to be returned, even if only one feature column is selected.

If the `columns` or `label_cols` argument passed to `to_tf_dataset` is a list, the corresponding features or labels are returned as a dictionary keyed by column name, even when the list has only one element. If it is a string, a bare tensor is returned.

An outline of the behaviour:

```python
dataset.to_tf_dataset(columns=["col_1"], label_cols="col_2")
# ({'col_1': col_1}, col_2)

dataset.to_tf_dataset(columns="col_1", label_cols="col_2")
# (col_1, col_2)

dataset.to_tf_dataset(columns="col_1")
# col_1

dataset.to_tf_dataset(columns=["col_1"], label_cols=["col_2"])
# ({'col_1': col_1}, {'col_2': col_2})

dataset.to_tf_dataset(columns="col_1", label_cols=["col_2"])
# (col_1, {'col_2': col_2})
```
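The selection rule above can be sketched in plain Python. `select_structure` and `to_output` are hypothetical helpers written only for illustration; they are not the actual `datasets` implementation, and plain lists stand in for tensors so the sketch runs without TensorFlow.

```python
from typing import Any, Dict, List, Optional, Union

Columns = Union[str, List[str]]


def select_structure(batch: Dict[str, Any], columns: Columns) -> Any:
    """Hypothetical helper: a string yields the bare value,
    a list (even of length 1) yields a dict keyed by column name."""
    if isinstance(columns, str):
        return batch[columns]
    return {name: batch[name] for name in columns}


def to_output(batch: Dict[str, Any], columns: Columns,
              label_cols: Optional[Columns] = None) -> Any:
    """Build a (features, labels) tuple, or features alone when no labels are selected."""
    features = select_structure(batch, columns)
    if label_cols is None:
        return features
    return features, select_structure(batch, label_cols)


# Plain Python lists stand in for tensors here.
batch = {"col_1": [1, 2], "col_2": [0, 1]}
print(to_output(batch, ["col_1"], "col_2"))    # ({'col_1': [1, 2]}, [0, 1])
print(to_output(batch, "col_1", "col_2"))      # ([1, 2], [0, 1])
print(to_output(batch, "col_1"))               # [1, 2]
print(to_output(batch, ["col_1"], ["col_2"]))  # ({'col_1': [1, 2]}, {'col_2': [0, 1]})
```

Keying the decision on the argument's type (string vs. list) is what lets a caller request a single-key dictionary with `columns=[col_name]` without an extra flag.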

## Motivation

Currently, when `to_tf_dataset` is called with a single feature column, the returned dataset never uses a dictionary structure for the features; the column is returned as a bare tensor. This can cause issues when calling `model.fit` on models that train without labels, e.g. [TFVitMAEForPreTraining](https://github.com/huggingface/transformers/blob/b6f47b539377ac1fd845c7adb4ccaa5eb514e126/src/transformers/models/vit_mae/modeling_vit_mae.py#L849). Specifically, [this line](https://github.com/huggingface/transformers/blob/d9e28d91a8b2d09b51a33155d3a03ad9fcfcbd1f/src/transformers/modeling_tf_utils.py#L1521) assumes that the input `x` is a dictionary when there is no label.

## Example

Previous behaviour

```python
In [1]: import tensorflow as tf
   ...: from datasets import load_dataset
   ...:
   ...:
   ...: def transform(batch):
   ...:     def _transform_img(img):
   ...:         img = img.convert("RGB")
   ...:         img = tf.keras.utils.img_to_array(img)
   ...:         img = tf.image.resize(img, (224, 224))
   ...:         img /= 255.0
   ...:         img = tf.transpose(img, perm=[2, 0, 1])
   ...:         return img
   ...:     batch['pixel_values'] = [_transform_img(pil_img) for pil_img in batch['img']]
   ...:     return batch
   ...:
   ...:
   ...: def collate_fn(examples):
   ...:     pixel_values = tf.stack([example["pixel_values"] for example in examples])
   ...:     return {"pixel_values": pixel_values}
   ...:
   ...:
   ...: dataset = load_dataset('cifar10')['train']
   ...: dataset = dataset.with_transform(transform)
   ...: dataset.to_tf_dataset(batch_size=8, columns=['pixel_values'], collate_fn=collate_fn)
Out[1]: <PrefetchDataset element_spec=TensorSpec(shape=(None, 3, 224, 224), dtype=tf.float32, name=None)>
```

New behaviour

```python
In [1]: import tensorflow as tf
   ...: from datasets import load_dataset
   ...:
   ...:
   ...: def transform(batch):
   ...:     def _transform_img(img):
   ...:         img = img.convert("RGB")
   ...:         img = tf.keras.utils.img_to_array(img)
   ...:         img = tf.image.resize(img, (224, 224))
   ...:         img /= 255.0
   ...:         img = tf.transpose(img, perm=[2, 0, 1])
   ...:         return img
   ...:     batch['pixel_values'] = [_transform_img(pil_img) for pil_img in batch['img']]
   ...:     return batch
   ...:
   ...:
   ...: def collate_fn(examples):
   ...:     pixel_values = tf.stack([example["pixel_values"] for example in examples])
   ...:     return {"pixel_values": pixel_values}
   ...:
   ...:
   ...: dataset = load_dataset('cifar10')['train']
   ...: dataset = dataset.with_transform(transform)
   ...: dataset.to_tf_dataset(batch_size=8, columns=['pixel_values'], collate_fn=collate_fn)
Out[1]: <PrefetchDataset element_spec={'pixel_values': TensorSpec(shape=(None, 3, 224, 224), dtype=tf.float32, name=None)}>
``` | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/5602/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/5602/timeline | null | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/5602",

"html_url": "https://github.com/huggingface/datasets/pull/5602",

"diff_url": "https://github.com/huggingface/datasets/pull/5602.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/5602.patch",

"merged_at": null

} | true |

https://api.github.com/repos/huggingface/datasets/issues/5601 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/5601/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/5601/comments | https://api.github.com/repos/huggingface/datasets/issues/5601/events | https://github.com/huggingface/datasets/issues/5601 | 1,606,685,976 | I_kwDODunzps5fxBUY | 5,601 | Authorization error | {

"login": "OleksandrKorovii",

"id": 107404835,

"node_id": "U_kgDOBmbeIw",

"avatar_url": "https://avatars.githubusercontent.com/u/107404835?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/OleksandrKorovii",

"html_url": "https://github.com/OleksandrKorovii",

"followers_url": "https://api.github.com/users/OleksandrKorovii/followers",

"following_url": "https://api.github.com/users/OleksandrKorovii/following{/other_user}",

"gists_url": "https://api.github.com/users/OleksandrKorovii/gists{/gist_id}",

"starred_url": "https://api.github.com/users/OleksandrKorovii/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/OleksandrKorovii/subscriptions",

"organizations_url": "https://api.github.com/users/OleksandrKorovii/orgs",

"repos_url": "https://api.github.com/users/OleksandrKorovii/repos",

"events_url": "https://api.github.com/users/OleksandrKorovii/events{/privacy}",

"received_events_url": "https://api.github.com/users/OleksandrKorovii/received_events",

"type": "User",

"site_admin": false

} | [] | open | false | null | [] | null | [] | 2023-03-02T12:08:39 | 2023-03-03T07:32:54 | null | NONE | null | ### Describe the bug

I get an `Authorization error` when I try to push data to the Hugging Face datasets Hub.

### Steps to reproduce the bug

I followed all the steps in the [tutorial](https://huggingface.co/docs/datasets/share):

1. `huggingface-cli login` with a WRITE token

2. `git lfs install`

3. `git clone https://huggingface.co/datasets/namespace/your_dataset_name`

4. Copy the JSON files, track them with Git LFS, and commit:

```shell
cp /somewhere/data/*.json .
git lfs track *.json
git add .gitattributes
git add *.json
git commit -m "add json files"
```

But when I run `git push`, I get the following error:

```
Uploading LFS objects: 0% (0/1), 0 B | 0 B/s, done.
batch response: Authorization error.
error: failed to push some refs to 'https://huggingface.co/datasets/zeusfsx/ukrainian-news'
```

The data is about 100 GB in total, split into five JSON files (different parts).

### Expected behavior

All my data is pushed to the Hub.

### Environment info

- `datasets` version: 2.10.1

- Platform: macOS-13.2.1-arm64-arm-64bit

- Python version: 3.10.10

- PyArrow version: 11.0.0

- Pandas version: 1.5.3 | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/5601/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/5601/timeline | null | null | null | null | false |

https://api.github.com/repos/huggingface/datasets/issues/5600 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/5600/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/5600/comments | https://api.github.com/repos/huggingface/datasets/issues/5600/events | https://github.com/huggingface/datasets/issues/5600 | 1,606,585,596 | I_kwDODunzps5fwoz8 | 5,600 | Dataloader getitem not working for DreamboothDatasets | {

"login": "salahiguiliz",

"id": 76955987,

"node_id": "MDQ6VXNlcjc2OTU1OTg3",

"avatar_url": "https://avatars.githubusercontent.com/u/76955987?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/salahiguiliz",

"html_url": "https://github.com/salahiguiliz",

"followers_url": "https://api.github.com/users/salahiguiliz/followers",

"following_url": "https://api.github.com/users/salahiguiliz/following{/other_user}",

"gists_url": "https://api.github.com/users/salahiguiliz/gists{/gist_id}",

"starred_url": "https://api.github.com/users/salahiguiliz/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/salahiguiliz/subscriptions",

"organizations_url": "https://api.github.com/users/salahiguiliz/orgs",

"repos_url": "https://api.github.com/users/salahiguiliz/repos",

"events_url": "https://api.github.com/users/salahiguiliz/events{/privacy}",

"received_events_url": "https://api.github.com/users/salahiguiliz/received_events",

"type": "User",

"site_admin": false

} | [] | open | false | null | [] | null | [] | 2023-03-02T11:00:27 | 2023-03-02T11:00:27 | null | NONE | null | ### Describe the bug

Dataloader `__getitem__` no longer works as it did before (see the DreamBoothDataset example).

Switching `datasets` to version 2.8.0 solved the issue.

### Steps to reproduce the bug

1. Use DreamBoothDataset to load some images.

2. After loading, an error occurs when trying to visualise the images.

### Expected behavior

I expected to get a NumPy array of the image.

### Environment info

- Platform: Linux-5.10.147+-x86_64-with-glibc2.29

- Python version: 3.8.10

- PyArrow version: 9.0.0

- Pandas version: 1.3.5 | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/5600/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/5600/timeline | null | null | null | null | false |

https://api.github.com/repos/huggingface/datasets/issues/5598 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/5598/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/5598/comments | https://api.github.com/repos/huggingface/datasets/issues/5598/events | https://github.com/huggingface/datasets/pull/5598 | 1,605,018,478 | PR_kwDODunzps5LCMiX | 5,598 | Fix push_to_hub with no dataset_infos | {

"login": "lhoestq",

"id": 42851186,

"node_id": "MDQ6VXNlcjQyODUxMTg2",

"avatar_url": "https://avatars.githubusercontent.com/u/42851186?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/lhoestq",

"html_url": "https://github.com/lhoestq",

"followers_url": "https://api.github.com/users/lhoestq/followers",

"following_url": "https://api.github.com/users/lhoestq/following{/other_user}",

"gists_url": "https://api.github.com/users/lhoestq/gists{/gist_id}",

"starred_url": "https://api.github.com/users/lhoestq/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/lhoestq/subscriptions",

"organizations_url": "https://api.github.com/users/lhoestq/orgs",

"repos_url": "https://api.github.com/users/lhoestq/repos",

"events_url": "https://api.github.com/users/lhoestq/events{/privacy}",

"received_events_url": "https://api.github.com/users/lhoestq/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | null | [

"_The documentation is not available anymore as the PR was closed or merged._",

"<details>\n<summary>Show benchmarks</summary>\n\nPyArrow==6.0.0\n\n<details>\n<summary>Show updated benchmarks!</summary>\n\n### Benchmark: benchmark_array_xd.json\n\n| metric | read_batch_formatted_as_numpy after write_array2d | read_batch_formatted_as_numpy after write_flattened_sequence | read_batch_formatted_as_numpy after write_nested_sequence | read_batch_unformated after write_array2d | read_batch_unformated after write_flattened_sequence | read_batch_unformated after write_nested_sequence | read_col_formatted_as_numpy after write_array2d | read_col_formatted_as_numpy after write_flattened_sequence | read_col_formatted_as_numpy after write_nested_sequence | read_col_unformated after write_array2d | read_col_unformated after write_flattened_sequence | read_col_unformated after write_nested_sequence | read_formatted_as_numpy after write_array2d | read_formatted_as_numpy after write_flattened_sequence | read_formatted_as_numpy after write_nested_sequence | read_unformated after write_array2d | read_unformated after write_flattened_sequence | read_unformated after write_nested_sequence | write_array2d | write_flattened_sequence | write_nested_sequence |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.008823 / 0.011353 (-0.002529) | 0.004738 / 0.011008 (-0.006270) | 0.102338 / 0.038508 (0.063830) | 0.030603 / 0.023109 (0.007494) | 0.302995 / 0.275898 (0.027097) | 0.362080 / 0.323480 (0.038600) | 0.007096 / 0.007986 (-0.000889) | 0.003493 / 0.004328 (-0.000835) | 0.079129 / 0.004250 (0.074878) | 0.037966 / 0.037052 (0.000914) | 0.310412 / 0.258489 (0.051923) | 0.346740 / 0.293841 (0.052899) | 0.033795 / 0.128546 (-0.094751) | 0.011595 / 0.075646 (-0.064051) | 0.325189 / 0.419271 (-0.094083) | 0.041679 / 0.043533 (-0.001854) | 0.302339 / 0.255139 (0.047200) | 0.322519 / 0.283200 (0.039319) | 0.089058 / 0.141683 (-0.052625) | 1.496223 / 1.452155 (0.044068) | 1.512562 / 1.492716 (0.019845) 
|\n\n### Benchmark: benchmark_getitem\\_100B.json\n\n| metric | get_batch_of\\_1024\\_random_rows | get_batch_of\\_1024\\_rows | get_first_row | get_last_row |\n|--------|---|---|---|---|\n| new / old (diff) | 0.009298 / 0.018006 (-0.008709) | 0.406726 / 0.000490 (0.406236) | 0.003753 / 0.000200 (0.003553) | 0.000082 / 0.000054 (0.000028) |\n\n### Benchmark: benchmark_indices_mapping.json\n\n| metric | select | shard | shuffle | sort | train_test_split |\n|--------|---|---|---|---|---|\n| new / old (diff) | 0.023327 / 0.037411 (-0.014084) | 0.098175 / 0.014526 (0.083649) | 0.106040 / 0.176557 (-0.070516) | 0.151934 / 0.737135 (-0.585201) | 0.108465 / 0.296338 (-0.187873) |\n\n### Benchmark: benchmark_iterating.json\n\n| metric | read 5000 | read 50000 | read_batch 50000 10 | read_batch 50000 100 | read_batch 50000 1000 | read_formatted numpy 5000 | read_formatted pandas 5000 | read_formatted tensorflow 5000 | read_formatted torch 5000 | read_formatted_batch numpy 5000 10 | read_formatted_batch numpy 5000 1000 | shuffled read 5000 | shuffled read 50000 | shuffled read_batch 50000 10 | shuffled read_batch 50000 100 | shuffled read_batch 50000 1000 | shuffled read_formatted numpy 5000 | shuffled read_formatted_batch numpy 5000 10 | shuffled read_formatted_batch numpy 5000 1000 |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.419073 / 0.215209 (0.203864) | 4.188012 / 2.077655 (2.110358) | 1.857667 / 1.504120 (0.353547) | 1.664124 / 1.541195 (0.122929) | 1.704341 / 1.468490 (0.235851) | 0.699671 / 4.584777 (-3.885106) | 3.391110 / 3.745712 (-0.354602) | 1.871136 / 5.269862 (-3.398725) | 1.176794 / 4.565676 (-3.388882) | 0.083322 / 0.424275 (-0.340953) | 0.012450 / 0.007607 (0.004843) | 0.525058 / 0.226044 (0.299014) | 5.265425 / 2.268929 (2.996497) | 2.320672 / 55.444624 (-53.123952) | 1.964806 / 6.876477 (-4.911671) | 2.027055 / 2.142072 (-0.115017) | 0.819768 / 4.805227 (-3.985459) | 0.149638 / 6.500664 
(-6.351026) | 0.064774 / 0.075469 (-0.010695) |\n\n### Benchmark: benchmark_map_filter.json\n\n| metric | filter | map fast-tokenizer batched | map identity | map identity batched | map no-op batched | map no-op batched numpy | map no-op batched pandas | map no-op batched pytorch | map no-op batched tensorflow |\n|--------|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 1.204575 / 1.841788 (-0.637212) | 13.651878 / 8.074308 (5.577570) | 13.751973 / 10.191392 (3.560581) | 0.154781 / 0.680424 (-0.525643) | 0.028887 / 0.534201 (-0.505314) | 0.404905 / 0.579283 (-0.174379) | 0.411320 / 0.434364 (-0.023043) | 0.485026 / 0.540337 (-0.055311) | 0.579690 / 1.386936 (-0.807246) |\n\n</details>\nPyArrow==latest\n\n<details>\n<summary>Show updated benchmarks!</summary>\n\n### Benchmark: benchmark_array_xd.json\n\n| metric | read_batch_formatted_as_numpy after write_array2d | read_batch_formatted_as_numpy after write_flattened_sequence | read_batch_formatted_as_numpy after write_nested_sequence | read_batch_unformated after write_array2d | read_batch_unformated after write_flattened_sequence | read_batch_unformated after write_nested_sequence | read_col_formatted_as_numpy after write_array2d | read_col_formatted_as_numpy after write_flattened_sequence | read_col_formatted_as_numpy after write_nested_sequence | read_col_unformated after write_array2d | read_col_unformated after write_flattened_sequence | read_col_unformated after write_nested_sequence | read_formatted_as_numpy after write_array2d | read_formatted_as_numpy after write_flattened_sequence | read_formatted_as_numpy after write_nested_sequence | read_unformated after write_array2d | read_unformated after write_flattened_sequence | read_unformated after write_nested_sequence | write_array2d | write_flattened_sequence | write_nested_sequence |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.006615 / 0.011353 (-0.004737) | 0.004606 / 
0.011008 (-0.006402) | 0.076099 / 0.038508 (0.037591) | 0.027247 / 0.023109 (0.004137) | 0.360731 / 0.275898 (0.084833) | 0.393688 / 0.323480 (0.070208) | 0.005079 / 0.007986 (-0.002906) | 0.003345 / 0.004328 (-0.000984) | 0.077184 / 0.004250 (0.072934) | 0.037850 / 0.037052 (0.000797) | 0.379738 / 0.258489 (0.121249) | 0.400474 / 0.293841 (0.106633) | 0.031581 / 0.128546 (-0.096966) | 0.011508 / 0.075646 (-0.064138) | 0.084966 / 0.419271 (-0.334306) | 0.041740 / 0.043533 (-0.001793) | 0.349887 / 0.255139 (0.094748) | 0.384405 / 0.283200 (0.101205) | 0.089022 / 0.141683 (-0.052661) | 1.503448 / 1.452155 (0.051293) | 1.564870 / 1.492716 (0.072154) |\n\n### Benchmark: benchmark_getitem\\_100B.json\n\n| metric | get_batch_of\\_1024\\_random_rows | get_batch_of\\_1024\\_rows | get_first_row | get_last_row |\n|--------|---|---|---|---|\n| new / old (diff) | 0.233581 / 0.018006 (0.215574) | 0.413819 / 0.000490 (0.413330) | 0.000398 / 0.000200 (0.000198) | 0.000060 / 0.000054 (0.000006) |\n\n### Benchmark: benchmark_indices_mapping.json\n\n| metric | select | shard | shuffle | sort | train_test_split |\n|--------|---|---|---|---|---|\n| new / old (diff) | 0.024805 / 0.037411 (-0.012607) | 0.101348 / 0.014526 (0.086822) | 0.108701 / 0.176557 (-0.067856) | 0.160011 / 0.737135 (-0.577124) | 0.111696 / 0.296338 (-0.184642) |\n\n### Benchmark: benchmark_iterating.json\n\n| metric | read 5000 | read 50000 | read_batch 50000 10 | read_batch 50000 100 | read_batch 50000 1000 | read_formatted numpy 5000 | read_formatted pandas 5000 | read_formatted tensorflow 5000 | read_formatted torch 5000 | read_formatted_batch numpy 5000 10 | read_formatted_batch numpy 5000 1000 | shuffled read 5000 | shuffled read 50000 | shuffled read_batch 50000 10 | shuffled read_batch 50000 100 | shuffled read_batch 50000 1000 | shuffled read_formatted numpy 5000 | shuffled read_formatted_batch numpy 5000 10 | shuffled read_formatted_batch numpy 5000 1000 
|\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.436303 / 0.215209 (0.221094) | 4.368684 / 2.077655 (2.291029) | 2.082366 / 1.504120 (0.578247) | 1.888108 / 1.541195 (0.346913) | 1.958295 / 1.468490 (0.489804) | 0.700858 / 4.584777 (-3.883919) | 3.408321 / 3.745712 (-0.337391) | 1.872960 / 5.269862 (-3.396902) | 1.165116 / 4.565676 (-3.400560) | 0.083556 / 0.424275 (-0.340719) | 0.012348 / 0.007607 (0.004741) | 0.536551 / 0.226044 (0.310506) | 5.359974 / 2.268929 (3.091045) | 2.539043 / 55.444624 (-52.905581) | 2.200314 / 6.876477 (-4.676162) | 2.222051 / 2.142072 (0.079979) | 0.808567 / 4.805227 (-3.996661) | 0.151222 / 6.500664 (-6.349442) | 0.066351 / 0.075469 (-0.009118) |\n\n### Benchmark: benchmark_map_filter.json\n\n| metric | filter | map fast-tokenizer batched | map identity | map identity batched | map no-op batched | map no-op batched numpy | map no-op batched pandas | map no-op batched pytorch | map no-op batched tensorflow |\n|--------|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 1.265502 / 1.841788 (-0.576286) | 13.692066 / 8.074308 (5.617758) | 13.124507 / 10.191392 (2.933115) | 0.129545 / 0.680424 (-0.550879) | 0.016827 / 0.534201 (-0.517374) | 0.380326 / 0.579283 (-0.198957) | 0.387268 / 0.434364 (-0.047096) | 0.463722 / 0.540337 (-0.076616) | 0.553681 / 1.386936 (-0.833255) |\n\n</details>\n</details>\n\n\n"

] | 2023-03-01T13:54:06 | 2023-03-02T13:47:13 | 2023-03-02T13:40:17 | MEMBER | null | As reported in https://github.com/vijaydwivedi75/lrgb/issues/10, `push_to_hub` fails if the remote repository already exists and has a README.md without `dataset_info` in the YAML tags

cc @clefourrier | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/5598/reactions",

"total_count": 1,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 1,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/5598/timeline | null | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/5598",

"html_url": "https://github.com/huggingface/datasets/pull/5598",

"diff_url": "https://github.com/huggingface/datasets/pull/5598.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/5598.patch",

"merged_at": "2023-03-02T13:40:17"

} | true |

https://api.github.com/repos/huggingface/datasets/issues/5597 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/5597/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/5597/comments | https://api.github.com/repos/huggingface/datasets/issues/5597/events | https://github.com/huggingface/datasets/issues/5597 | 1,604,928,721 | I_kwDODunzps5fqUTR | 5,597 | in-place dataset update | {

"login": "speedcell4",

"id": 3585459,

"node_id": "MDQ6VXNlcjM1ODU0NTk=",

"avatar_url": "https://avatars.githubusercontent.com/u/3585459?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/speedcell4",

"html_url": "https://github.com/speedcell4",

"followers_url": "https://api.github.com/users/speedcell4/followers",

"following_url": "https://api.github.com/users/speedcell4/following{/other_user}",

"gists_url": "https://api.github.com/users/speedcell4/gists{/gist_id}",

"starred_url": "https://api.github.com/users/speedcell4/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/speedcell4/subscriptions",

"organizations_url": "https://api.github.com/users/speedcell4/orgs",

"repos_url": "https://api.github.com/users/speedcell4/repos",

"events_url": "https://api.github.com/users/speedcell4/events{/privacy}",

"received_events_url": "https://api.github.com/users/speedcell4/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1935892913,

"node_id": "MDU6TGFiZWwxOTM1ODkyOTEz",

"url": "https://api.github.com/repos/huggingface/datasets/labels/wontfix",

"name": "wontfix",

"color": "ffffff",

"default": true,

"description": "This will not be worked on"

}

] | closed | false | null | [] | null | [

"We won't support in-place modifications since `datasets` is based on the Apache Arrow format, which doesn't support in-place modifications.\r\n\r\nIn your case the old dataset is garbage collected pretty quickly, so you won't have memory issues.\r\n\r\nNote that datasets loaded from disk (memory-mapped) are not loaded into memory, and therefore the new dataset actually uses the same buffers as the old one.",

"Thank you for your detailed reply.\r\n\r\n> In your case the old dataset is garbage collected pretty quickly so you won't have memory issues.\r\n\r\nI understand this, but it still copies the old dataset to create the new one; is that correct? So maybe it is not memory-consuming, but time-consuming?",

"Indeed, and because of that it is more efficient to add multiple rows at once rather than one by one, for example using `concatenate_datasets`."