id | title | description | collection_id | published_timestamp | canonical_url | tag_list | body_markdown | user_username |

|---|---|---|---|---|---|---|---|---|

1,926,167 | The Ultimate Guide to Custom Theming with React Native Paper, Expo and Expo Router | React Native Paper is an excellent and user-friendly UI library for React Native, especially for... | 0 | 2024-07-17T04:29:40 | https://dev.to/hemanshum/the-ultimate-guide-to-custom-theming-with-react-native-paper-expo-and-expo-router-3hjl | reactnative, exporouter, beginners, tutorial | React Native Paper is an excellent and user-friendly UI library for React Native, especially for customizing dark and light themes with its [Dynamic Theme Colors Tool](https://callstack.github.io/react-native-paper/docs/guides/theming/#creating-dynamic-theme-colors). However, configuring it with Expo and Expo Router can be tricky. Additionally, creating a toggle button for theme switching without a central state management system can be challenging. Expo Router can help with this.

In this article, we will learn how to:

- Create custom light and dark themes.

- Configure these themes for use with React Native Paper, Expo and Expo Router.

- Implement a toggle button to switch between light and dark modes within the app.

If you prefer video, you can watch the tutorial here: {% embed https://youtu.be/JkepeUIrwUs %}

**Set Up an Expo Project**

Open your terminal and type:

`npx create-expo-app@latest`

It will ask for a project name; I named mine “rnpPractice”.

After installation, go into the project directory and open it in your code editor.

Now let’s install React Native Paper and its required dependency in the project folder.

`npm install react-native-paper react-native-safe-area-context`

Open babel.config.js in your code editor and add the `env` block marked below, which enables the React Native Paper Babel plugin for production builds:

```

module.exports = function(api) {

api.cache(true);

return {

presets: ['babel-preset-expo'],

//ADD CODE START

env: {

production: {

plugins: ['react-native-paper/babel'],

},

},

//ADD CODE END

};

};

```

Let’s reset the project to remove the unnecessary starter files. Run the following:

`npm run reset-project`

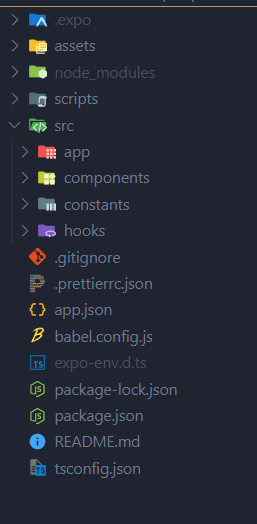

Create a new folder called ‘src’ in the root of the project and move the following folders into it:

- app

- components

- constants

- hooks

It will look like the following screenshot:

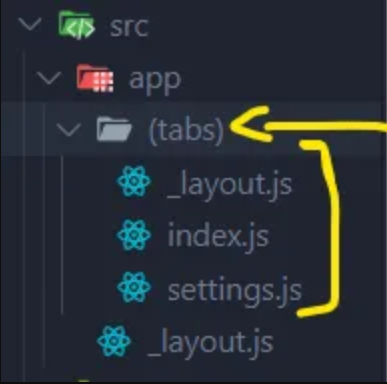

Now, in the app folder, create a folder named (tabs). The parentheses around the word tabs are required; that is how we set up bottom tab navigation in Expo Router. Move index.js from app (the parent folder) into (tabs) (the child folder), then create two more files in (tabs): “_layout.js” and “settings.js”. Your app folder should now look like the following screenshot:

Let’s fill settings.js with some boilerplate code:

```

import { StyleSheet, View, Text } from "react-native";

const Settings = () => {

return (

<View>

<Text>Settings</Text>

</View>

);

};

const styles = StyleSheet.create({});

export default Settings;

```

Now, for Expo Router to work properly, we need to update the “_layout.js” files in both the app and (tabs) folders.

Update _layout.js in the app folder with the following code:

```

import {Stack} from 'expo-router';

export default function RootLayout() {

return (

<Stack>

<Stack.Screen

name="(tabs)"

options={{

headerShown: false,

}}

/>

</Stack>

)

}

```

And update _layout.js in the (tabs) folder:

```

import { Tabs } from "expo-router";

import { Feather } from "@expo/vector-icons";

export default function TabLayout() {

return (

<Tabs>

<Tabs.Screen

name="index"

options={{

title: "Home",

tabBarIcon: ({ color }) => (

<Feather name="home" size={24} color={color} />

),

}}

/>

<Tabs.Screen

name="settings"

options={{

title: "Settings",

tabBarIcon: ({ color }) => (

<Feather name="settings" size={24} color={color} />

),

}}

/>

</Tabs>

);

}

```

Now let’s run the app and check that everything works.

`npm run start`

The project will start. You can run it on an emulator or simulator, or on your phone by installing the Expo Go app and scanning the QR code.

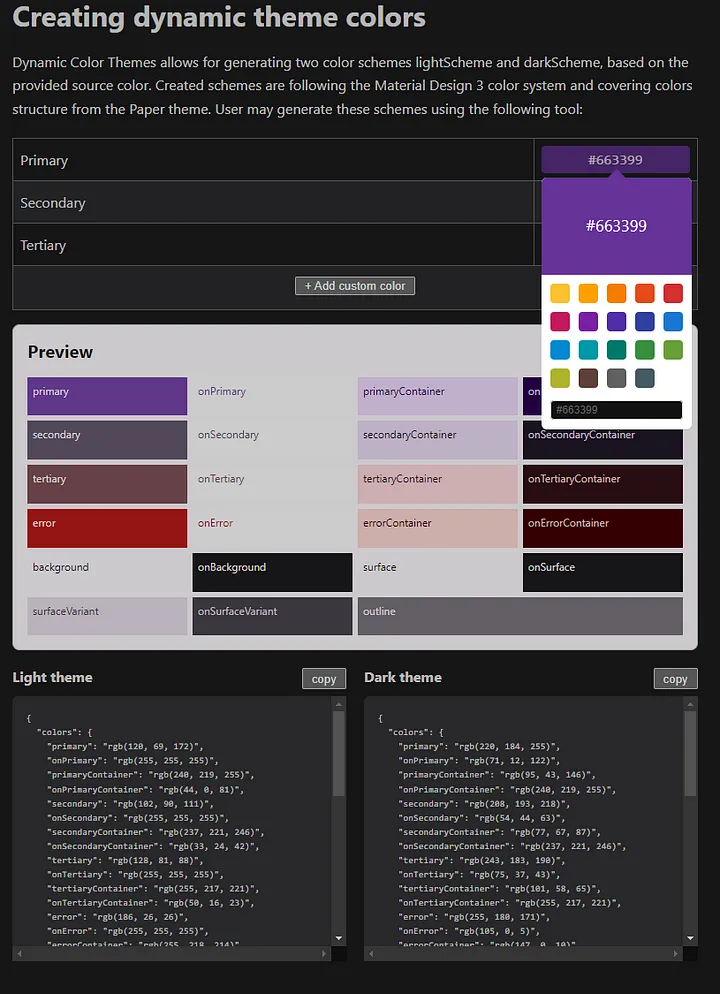

**Create and Configure the React Native Paper Theme**

Let’s create our custom light and dark themes first. To do that, [follow the link](https://callstack.github.io/react-native-paper/docs/guides/theming/#creating-dynamic-theme-colors). There you just select the Primary, Secondary and Tertiary colors, and the tool generates a light and a dark theme for you.

If you are using JavaScript rather than TypeScript, rename your Colors.ts file to Colors.js, then open it. Copy the light theme colors object from the website and paste it into the light object in Colors.js, then do the same for the dark theme. Your file will look like this:

```

export const Colors = {

light: {

primary: "rgb(176, 46, 0)",

onPrimary: "rgb(255, 255, 255)",

primaryContainer: "rgb(255, 219, 209)",

onPrimaryContainer: "rgb(59, 9, 0)",

secondary: "rgb(0, 99, 154)",

onSecondary: "rgb(255, 255, 255)",

secondaryContainer: "rgb(206, 229, 255)",

onSecondaryContainer: "rgb(0, 29, 50)",

tertiary: "rgb(121, 89, 0)",

onTertiary: "rgb(255, 255, 255)",

tertiaryContainer: "rgb(255, 223, 160)",

onTertiaryContainer: "rgb(38, 26, 0)",

error: "rgb(186, 26, 26)",

onError: "rgb(255, 255, 255)",

errorContainer: "rgb(255, 218, 214)",

onErrorContainer: "rgb(65, 0, 2)",

background: "rgb(255, 251, 255)",

onBackground: "rgb(32, 26, 24)",

surface: "rgb(255, 251, 255)",

onSurface: "rgb(32, 26, 24)",

surfaceVariant: "rgb(245, 222, 216)",

onSurfaceVariant: "rgb(83, 67, 63)",

outline: "rgb(133, 115, 110)",

outlineVariant: "rgb(216, 194, 188)",

shadow: "rgb(0, 0, 0)",

scrim: "rgb(0, 0, 0)",

inverseSurface: "rgb(54, 47, 45)",

inverseOnSurface: "rgb(251, 238, 235)",

inversePrimary: "rgb(255, 181, 160)",

elevation: {

level0: "transparent",

level1: "rgb(251, 241, 242)",

level2: "rgb(249, 235, 235)",

level3: "rgb(246, 229, 227)",

level4: "rgb(246, 226, 224)",

level5: "rgb(244, 222, 219)",

},

surfaceDisabled: "rgba(32, 26, 24, 0.12)",

onSurfaceDisabled: "rgba(32, 26, 24, 0.38)",

backdrop: "rgba(59, 45, 41, 0.4)",

},

dark: {

primary: "rgb(255, 181, 160)",

onPrimary: "rgb(96, 21, 0)",

primaryContainer: "rgb(135, 33, 0)",

onPrimaryContainer: "rgb(255, 219, 209)",

secondary: "rgb(150, 204, 255)",

onSecondary: "rgb(0, 51, 83)",

secondaryContainer: "rgb(0, 74, 117)",

onSecondaryContainer: "rgb(206, 229, 255)",

tertiary: "rgb(248, 189, 42)",

onTertiary: "rgb(64, 45, 0)",

tertiaryContainer: "rgb(92, 67, 0)",

onTertiaryContainer: "rgb(255, 223, 160)",

error: "rgb(255, 180, 171)",

onError: "rgb(105, 0, 5)",

errorContainer: "rgb(147, 0, 10)",

onErrorContainer: "rgb(255, 180, 171)",

background: "rgb(32, 26, 24)",

onBackground: "rgb(237, 224, 221)",

surface: "rgb(32, 26, 24)",

onSurface: "rgb(237, 224, 221)",

surfaceVariant: "rgb(83, 67, 63)",

onSurfaceVariant: "rgb(216, 194, 188)",

outline: "rgb(160, 140, 135)",

outlineVariant: "rgb(83, 67, 63)",

shadow: "rgb(0, 0, 0)",

scrim: "rgb(0, 0, 0)",

inverseSurface: "rgb(237, 224, 221)",

inverseOnSurface: "rgb(54, 47, 45)",

inversePrimary: "rgb(176, 46, 0)",

elevation: {

level0: "transparent",

level1: "rgb(43, 34, 31)",

level2: "rgb(50, 38, 35)",

level3: "rgb(57, 43, 39)",

level4: "rgb(59, 45, 40)",

level5: "rgb(63, 48, 43)",

},

surfaceDisabled: "rgba(237, 224, 221, 0.12)",

onSurfaceDisabled: "rgba(237, 224, 221, 0.38)",

backdrop: "rgba(59, 45, 41, 0.4)",

},

};

```
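Because both palettes are pasted in by hand, it can be worth checking that the light and dark objects define the same keys. The following is only a sketch: `missingKeys` is a hypothetical helper (not part of React Native Paper), and the two sample palettes are shortened stand-ins for `Colors.light` and `Colors.dark`.

```javascript
// Hypothetical helper (not part of React Native Paper): lists the color keys
// that appear in one palette but not in the other.
function missingKeys(light, dark) {
  const lightKeys = new Set(Object.keys(light));
  const darkKeys = new Set(Object.keys(dark));
  return {
    missingInDark: [...lightKeys].filter((key) => !darkKeys.has(key)),
    missingInLight: [...darkKeys].filter((key) => !lightKeys.has(key)),
  };
}

// Shortened stand-in palettes for illustration only; in the app you would
// pass Colors.light and Colors.dark instead.
const sampleLight = {
  primary: "rgb(176, 46, 0)",
  onPrimary: "rgb(255, 255, 255)",
  backdrop: "rgba(59, 45, 41, 0.4)",
};
const sampleDark = {
  primary: "rgb(255, 181, 160)",
  onPrimary: "rgb(96, 21, 0)",
};

// "backdrop" was left out of the dark sample on purpose.
const report = missingKeys(sampleLight, sampleDark);
console.log(report);
```

If both arrays come back empty for your real palettes, every key you style against in light mode also exists in dark mode.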

Now let’s use our theme. Open the _layout.js file in the app folder and add these imports:

```

import {Stack} from 'expo-router';

//Import the code Start

import {

MD3DarkTheme,

MD3LightTheme,

PaperProvider,

} from "react-native-paper";

//Import the code End

export default function RootLayout() {

return (

<Stack>

<Stack.Screen

name="(tabs)"

options={{

headerShown: false,

}}

/>

</Stack>

)

}

```

Now let’s wrap our code in <PaperProvider> in the _layout.js file in the app folder, like this:

```

import {Stack} from 'expo-router';

import {

MD3DarkTheme,

MD3LightTheme,

PaperProvider,

} from "react-native-paper";

export default function RootLayout() {

return (

<PaperProvider>{/* Start here */}

<Stack>

<Stack.Screen

name="(tabs)"

options={{

headerShown: false,

}}

/>

</Stack>

</PaperProvider> //End here

)

}

```

👆 This will allow us to use React Native Paper components in our app.

Now, to apply our color theme, we need to import Colors from the Colors.js file and use it to overwrite the colors object of the current React Native Paper theme. Confusing? 🤔

Let’s write the code to understand 😁. Open the _layout.js file in the app folder:

```

import {Stack} from 'expo-router';

import {

MD3DarkTheme,

MD3LightTheme,

PaperProvider,

} from "react-native-paper";

//1. Import Our Colors

import { Colors } from "../constants/Colors";

//2. Overwrite it on the current theme

const customDarkTheme = { ...MD3DarkTheme, colors: Colors.dark };

const customLightTheme = { ...MD3LightTheme, colors: Colors.light };

export default function RootLayout() {

return (

// 3.Use any theme you like for your app

<PaperProvider theme={customDarkTheme}>

<Stack>

<Stack.Screen

name="(tabs)"

options={{

headerShown: false,

}}

/>

</Stack>

</PaperProvider>

)

}

```
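One detail of the spread syntax above: `colors: Colors.dark` replaces the base theme's `colors` object wholesale. That is fine when the generated palette is complete, as it is here. If you only wanted to override a few colors, a merge of the two `colors` objects would keep the rest. The sketch below illustrates the difference with plain objects; `baseDarkTheme` is a made-up stand-in for `MD3DarkTheme` with illustrative values, and `partialPalette` is hypothetical.

```javascript
// Made-up stand-in for MD3DarkTheme: only a few fields, illustrative values.
const baseDarkTheme = {
  dark: true,
  roundness: 4,
  colors: {
    primary: "rgb(200, 200, 255)",
    surface: "rgb(30, 30, 30)",
    outline: "rgb(150, 150, 150)",
  },
};

// Hypothetical palette that overrides only two of the three colors.
const partialPalette = {
  primary: "rgb(255, 181, 160)",
  surface: "rgb(32, 26, 24)",
};

// Same pattern as the article: the colors object is replaced as a whole.
const replaced = { ...baseDarkTheme, colors: partialPalette };

// Alternative: start from the base colors and override only what we supply.
const merged = {
  ...baseDarkTheme,
  colors: { ...baseDarkTheme.colors, ...partialPalette },
};

console.log(replaced.colors.outline); // undefined, the base value is gone
console.log(merged.colors.outline); // still the base theme's outline color
```

With the full palette from the theme tool, both approaches end up with the same colors, so the simpler replacement used in this tutorial is enough.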

Let’s make the app decide which theme to use based on the user’s device settings. For that we will use a hook provided by React Native called useColorScheme, which provides and subscribes to the color scheme preferred by the user’s device. [Read More.](https://reactnative.dev/docs/usecolorscheme)

Open the _layout.js file in the app folder:

```

import { Stack } from 'expo-router';

//1. Import the useColorScheme hook

import { useColorScheme } from 'react-native';

import {

MD3DarkTheme,

MD3LightTheme,

PaperProvider,

} from "react-native-paper";

import { Colors } from "../constants/Colors";

const customDarkTheme = { ...MD3DarkTheme, colors: Colors.dark };

const customLightTheme = { ...MD3LightTheme, colors: Colors.light };

export default function RootLayout() {

//2. Get the value in a const

const colorScheme = useColorScheme();

//3. Let's decide which theme to use

const paperTheme =

colorScheme === "dark" ? customDarkTheme : customLightTheme;

return (

//4. apply the theme

<PaperProvider theme={paperTheme}>

<Stack>

<Stack.Screen

name="(tabs)"

options={{

headerShown: false,

}}

/>

</Stack>

</PaperProvider>

)

}

```

Now it’s time to test. Go into the (tabs) folder, open index.js and paste in the following code:

```

import { View } from "react-native";

import { Avatar, Button, Card, Text } from "react-native-paper";

const LeftContent = (props) => <Avatar.Icon {...props} icon="folder" />;

export default function Index() {

return (

<View

style={{

flex: 1,

margin: 16,

}}

>

<Card>

<Card.Cover source={{ uri: "https://picsum.photos/700" }} />

<Card.Title

title="Card Title"

subtitle="Card Subtitle"

left={LeftContent}

/>

<Card.Content>

<Text variant="bodyMedium">

Lorem ipsum dolor sit amet consectetur adipisicing elit. Quisquam

tenetur odit eveniet inventore magnam officia quia nemo porro?

Dolore sapiente quos illo distinctio nisi incidunt? Eaque officiis

iusto exercitationem natus?

</Text>

</Card.Content>

<Card.Actions>

<Button>Open</Button>

</Card.Actions>

</Card>

</View>

);

}

```

You can see that your theme is applied, but only to the card you imported from React Native Paper; the navigation is still using the default theme provided by Expo Router.

Now let’s merge the Expo Router theme into the React Native Paper theme and use the same theme for both. First install deepmerge (`npm install deepmerge`), then go back to the _layout.js file in the app folder and make the following changes:

```

import { Stack } from 'expo-router';

import { useColorScheme } from 'react-native';

import {

MD3DarkTheme,

MD3LightTheme,

PaperProvider,

adaptNavigationTheme, //1. Import this function

} from "react-native-paper";

//2. Import Router Theme

import {

DarkTheme as NavigationDarkTheme,

DefaultTheme as NavigationDefaultTheme,

ThemeProvider,

} from "@react-navigation/native";

//3. Install deepmerge first and import it

import merge from "deepmerge";

import { Colors } from "../constants/Colors";

const customDarkTheme = { ...MD3DarkTheme, colors: Colors.dark };

const customLightTheme = { ...MD3LightTheme, colors: Colors.light };

//4. The adaptNavigationTheme function takes an existing React Navigation

// theme and returns a React Navigation theme using the colors from

// Material Design 3.

const { LightTheme, DarkTheme } = adaptNavigationTheme({

reactNavigationLight: NavigationDefaultTheme,

reactNavigationDark: NavigationDarkTheme,

});

//5.We will merge React Native Paper Theme and Expo Router Theme

// using deepmerge

const CombinedLightTheme = merge(LightTheme, customLightTheme);

const CombinedDarkTheme = merge(DarkTheme, customDarkTheme);

export default function RootLayout() {

const colorScheme = useColorScheme();

//6. Let's use the merged themes

const paperTheme =

colorScheme === "dark" ? CombinedDarkTheme : CombinedLightTheme;

return (

<PaperProvider theme={paperTheme}>

{/* 7. We need to use the ThemeProvider from React Navigation */}

{/* to apply our theme to the navigation components */}

<ThemeProvider value={paperTheme}>

<Stack>

<Stack.Screen

name="(tabs)"

options={{

headerShown: false,

}}

/>

</Stack>

</ThemeProvider>

</PaperProvider>

)

}

```
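To see why a deep merge is used here rather than a plain spread, consider what happens to the nested `colors` object. The sketch below uses `mergeDeep`, a simplified stand-in written for this illustration (it is not the deepmerge package and handles plain objects only), plus two tiny made-up theme objects. Like `merge(x, y)` from deepmerge, values from the second argument win when both sides define the same key.

```javascript
// True for plain nested objects, false for arrays, null and primitives.
function isPlainObject(value) {
  return value !== null && typeof value === "object" && !Array.isArray(value);
}

// Simplified stand-in for deepmerge (plain objects only, no array handling).
// Returns a new object; neither input is mutated.
function mergeDeep(target, source) {
  const result = { ...target };
  for (const [key, value] of Object.entries(source)) {
    const bothObjects = isPlainObject(value) && isPlainObject(result[key]);
    result[key] = bothObjects ? mergeDeep(result[key], value) : value;
  }
  return result;
}

// Made-up stand-ins: a navigation theme and a Paper theme, values illustrative.
const navigationTheme = {
  dark: false,
  colors: { primary: "rgb(0, 122, 255)", card: "rgb(255, 255, 255)", border: "rgb(216, 216, 216)" },
};
const paperTheme = {
  roundness: 4,
  colors: {
    primary: "rgb(176, 46, 0)",
    onPrimary: "rgb(255, 255, 255)",
    elevation: { level1: "rgb(251, 241, 242)" },
  },
};

const combined = mergeDeep(navigationTheme, paperTheme);

console.log(combined.colors.card); // kept from the navigation theme
console.log(combined.colors.primary); // overridden by the Paper theme
console.log(combined.colors.elevation.level1); // nested Paper value survives
```

A shallow spread such as `{ ...navigationTheme, ...paperTheme }` would instead drop `card` and `border`, because the whole `colors` object from the second theme would replace the first.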

That's it, guys. This should apply our theme to both React Native Paper components and navigation components such as the header and the bottom tab navigation.

Find the source code here:

https://github.com/hemanshum/React-Native-Paper-Practic-App

**Wrapping Up**

We’ve covered a lot of ground in this blog post, from creating custom light and dark themes to configuring them for use with React Native Paper, Expo and Expo Router. By now, you should have a solid foundation for implementing theming in your Expo projects. For those looking to add a toggle button to switch between these themes within your app, I’ve created a detailed video tutorial. Check out the video, with a convenient timestamp for the relevant section, here: [Video Tutorial.](https://youtu.be/JkepeUIrwUs)

Happy coding, and may your apps always look great — in light and in dark! 🌓✨

| hemanshum |

1,926,175 | Building Microservices with nodejs nestjs #series | The video series "Building Microservices and Deploying for SAAS Product" covers all about building... | 0 | 2024-07-17T04:18:45 | https://dev.to/tkssharma/building-microservices-with-nodejs-nestjs-series-2j7b | nestjs, node, microservices, javascript | The video series "Building Microservices and Deploying for SAAS Product" covers building microservices for the enterprise world with the Node.js ecosystem.

{% embed https://www.youtube.com/watch?v=aP55ZJNBM38&list=PLIGDNOJWiL19tboY7wTzz6_RY6h2gpNrH %}

Link - https://www.youtube.com/playlist?list=PLIGDNOJWiL19tboY7wTzz6_RY6h2gpNrH

Old GitHub Links

Github: https://github.com/tkssharma/12-factor-app-microservices

https://github.com/tkssharma/nestjs-graphql-microservices

https://github.com/tkssharma/nodejs-microservices-patterns

In this playlist, we will talk about different types of microservices development with Node.js, such as:

- Express/Nest JS with Typescript with ORM (TypeORM, knex, Prisma)

- Deploying Services with AWS CDK Constructs with RDS/Dynamodb

- Building Different Microservice Architecture

- Using Event-Driven Arch, CQRS, Event Sourcing based Arch

- Deploying services using AWS ECS or Lambda using AWS CDK

Here are some common microservices architecture patterns and best practices when using Node.js:

1. Single Service Microservice Architecture:

2. Layered Microservice Architecture:

3. Event-Driven Microservice Architecture:

4. API Gateway Microservice Architecture:

5. Service Mesh Microservice Architecture:

6. Serverless Microservices:

7. Containerized Microservices:

8. Event Sourcing and CQRS:

9. BFF (Backend For Frontend) Microservice Architecture:

10. Database Microservice Architecture:

I’m Tarun, a publisher, trainer and developer working on enterprise and open-source technologies and JavaScript frameworks (React, Angular, SvelteKit, Next.js). I work with client-side and server-side JavaScript, including Node.js and other frameworks, and I am currently working with React and Node.js 🚀 with GraphQL 🎉 and on developer publications.

I am a passionate JavaScript developer writing end-to-end applications using React, Angular 🅰️, and Vue JS with Node JS. I publish video tutorials and write about everything I know. I aim to create a beautiful corner of the web free of ads, sponsored posts, | tkssharma |

1,926,169 | Unlocking the Power of C++: A Fun Journey into Game Development | Unlocking the Power of C++: A Fun Journey into Game Development ... | 0 | 2024-07-17T04:07:17 | https://dev.to/isamarsoftwareengineer/unlocking-the-power-of-c-a-fun-journey-into-game-development-2d6f | cpp, c, gamedev |

## Unlocking the Power of C++: A Fun Journey into Game Development

## Introduction

C++ is a programming language that has stood the test of time. Known for its performance and efficiency, C++ is a favorite among game developers. Whether you're a beginner or a seasoned programmer, learning C++ can open up a world of possibilities in game development. This blog will take you through the journey of learning C++ and the thrill of using it to create gaming applications.

## Why Learn C++?

### 1. Performance and Efficiency

C++ is known for its high performance and efficiency. Unlike some other programming languages, C++ gives you control over system resources, memory management, and hardware interaction. This control is crucial in game development, where performance can make or break the gaming experience.

### 2. Industry Standard

C++ is widely used in the gaming industry. Major game engines like Unreal Engine are built using C++. Learning C++ equips you with skills that are in high demand in the game development industry.

### 3. Versatility

C++ is versatile and can be used for developing a wide range of applications. From system software to game engines, C++'s versatility makes it an invaluable tool in a programmer's toolkit.

## Getting Started with C++

### 1. Setting Up Your Environment

Before you can start coding in C++, you need to set up your development environment. For macOS users, you can use Xcode or other text editors like Visual Studio Code or Sublime Text. For Windows users, Visual Studio is a popular choice.

### 2. Writing Your First Program

Start with a simple "Hello, World!" program. This basic program will give you a feel for the syntax and structure of C++.

```cpp

#include <iostream>

int main() {

std::cout << "Hello, World!" << std::endl;

return 0;

}

```

Compile and run your program to see the output.

### 3. Understanding the Basics

Learn the fundamental concepts of C++ such as variables, data types, control structures, functions, and object-oriented programming. These basics are the building blocks for more complex programs.

## **The Fun Part: Game Development**

### 1. Creating Simple Games

Start with simple games like Tic-Tac-Toe or a text-based adventure game. These projects will help you apply the basics of C++ in a fun and engaging way.

### 2. Exploring Game Engines

Once you're comfortable with the basics, dive into game engines like Unreal Engine, which is programmed in C++, or Unity, which is scripted primarily in C# but can call C++ code through native plugins. These engines provide powerful tools and libraries that simplify the game development process.

### 3. Building Your Own Game

Challenge yourself by building your own game from scratch. This project will push your C++ skills to the limit and give you a sense of accomplishment.

### 4. Optimizing for Performance

One of the most exciting aspects of using C++ in game development is optimizing your game for performance. Fine-tuning your code to run efficiently on different hardware can be incredibly satisfying.

## **The Joy of Creating**

### 1. Seeing Your Ideas Come to Life

There's nothing quite like seeing your ideas come to life in the form of a game. The process of designing, coding, and testing your game can be a deeply rewarding experience.

### 2. Sharing with Others

Share your games with friends, family, or the gaming community. Getting feedback and seeing others enjoy your creation can be incredibly motivating.

### 3. Continuous Learning

Game development with C++ is a continuous learning journey. There's always something new to learn, whether it's a new algorithm, a design pattern, or a cutting-edge graphics technique.

## Conclusion

Learning C++ and using it for game development can be a fun and rewarding experience. The combination of C++'s performance, control, and industry relevance makes it an excellent choice for aspiring game developers. So, dive in, start coding, and unlock the power of C++ in your gaming projects. | isamarsoftwareengineer |

1,926,170 | Python Beginneris-01 | 1.What is python? 2.How many types of languages are there in python? 3.Is python is interpreted... | 0 | 2024-07-17T04:13:06 | https://dev.to/04d5lakshmi_prasannapras/python-beginneris-01-17i7 | 1. What is Python?

2. How many types of languages are there in Python?

3. Is Python an interpreted language or not?

4. What is interpretation, and what is a compiler? | 04d5lakshmi_prasannapras |

|

1,926,171 | Sick of managing docker objects? This tool is for you... | Consider this scenario, you are working on a project with your team and you use docker for... | 0 | 2024-07-17T16:52:19 | https://dev.to/ajaydsan/sick-of-managing-docker-objects-this-tool-is-for-you-1ffe | docker, programming, devops, go | Consider this scenario: you are working on a project with your team and you use docker for containerization for easy collaboration, great. You are focused, working at peak efficiency, and are in THE ZONE. Now, you realize you have to stop the current container (for whatever reason), but it is not that straightforward...you first do `docker ps` and DRAG YOUR MOUSE across the screen to select and copy the ID of the container, and then you do `docker container stop {ID}` to stop the container. And then, when you think you have accomplished your goal, you realize you copied the wrong ID and stopped the wrong container.

There is a decent chance you've previously had this kind of experience, at least once. And if you are like me, you do not want to spin up a whole browser (psst..electron) and drag your mouse across the screen to manage your docker objects inefficiently (i.e. docker desktop).

My point is that the `docker` CLI, though great, is annoying to use repeatedly. And god forbid you do not use docker often enough: you drop everything and check the docker docs on how to delete a container (I'm guilty of this), which takes you out of your workflow and introduces more distraction. There has to be a better way to do this. That's when I decided to write this TUI in Golang, and it's named `goManageDocker` (get it? 🤭)

TLDR:

gmd is a TUI tool that lets you manage your docker objects quickly and efficiently using sensible keybindings. It has VIM keybinds as well (for all you VIM nerds).

I know what you are thinking, "This is so cool, just give me the repo link already!!". [This is it](https://github.com/ajayd-san/gomanagedocker)

This post aims to give a brief tour of the tool.

## Navigation

As I mentioned before this tool focuses on efficiency and speed ( and nothing can be faster than VIM, ofc 🙄), so you have VIM keybinds at your disposal for MAX SPEED!

## Easy object management:

With this tool, you can easily delete, prune, start, and stop with a single keystroke.

I'll demonstrate the container delete function:

You can also press `D` to force delete (this does not show the prompt).

## Cleanest way to exec into a container:

Instead of typing two different commands to exec, this is a cooler way to exec:

Just press `x` on the container you want!!

You can also exec directly from images (this starts a container and then execs into it).

## Blazing fast fuzzy search:

I know this isn't rust 🦀, but regardless this is a BLAZING FAST way to search for an object. Just press `/` and search away!

## Let gmd scout for you

If you like performing `docker scout quickview`, `gmd` has your back. Just press `s` on an image and you'll see a neatly formatted table.

Isn't this cool!?

And this marks the end of my post. There are a lot of things that I haven't shown because I want to keep this brief. But if this interests you, you can check out the project [here](https://github.com/ajayd-san/gomanagedocker). There is a pretty detailed readme, so you'll find everything over there (including config options).

I'm considering adding more features to this project in the future. If you have any suggestions, be sure to open a new issue and contributions to existing issues are welcome!

Feel free to post your queries in comments.

Thanks for reading so far!

You have a great day ahead 😸! | ajaydsan |

1,926,173 | Tailwind CSS: Customizing Configuration | Introduction: Tailwind CSS is a popular open-source CSS framework that has gained immense popularity... | 0 | 2024-07-17T04:15:44 | https://dev.to/tailwine/tailwind-css-customizing-configuration-3a61 | Introduction:

Tailwind CSS is a popular open-source CSS framework that has gained immense popularity among web developers in recent years. It provides a unique customizable approach to creating beautiful and modern user interfaces. One of the key features that sets Tailwind CSS apart from other CSS frameworks is its customizable configuration. In this article, we will discuss the advantages and disadvantages of customizing configuration in Tailwind CSS, as well as its notable features.

Advantages:

Customizing configuration in Tailwind CSS allows developers to have full control over their website's design and styles. This eliminates the need for writing additional CSS code, reducing development time and improving overall efficiency. With Tailwind CSS, developers can customize colors, breakpoints, and even spacing between elements with ease. Its utility-first approach also makes it easy to make changes to specific elements without affecting the others. Additionally, customizing configuration can also result in a lightweight and optimized code base, improving website performance.
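As a rough illustration of the customization described above, here is a minimal sketch of a `tailwind.config.js`, assuming the JavaScript config file format used by Tailwind CSS v3. The content glob, the `brand` color, the `3xl` breakpoint and the `18` spacing value are all made up for this example.

```javascript
// tailwind.config.js (sketch; every custom name and value below is hypothetical)
module.exports = {
  // Files Tailwind scans for class names; adjust to your project layout.
  content: ["./src/**/*.{html,js,jsx}"],
  theme: {
    // "extend" adds to the default theme instead of replacing it.
    extend: {
      colors: {
        brand: "#b02e00", // enables classes such as bg-brand and text-brand
      },
      screens: {
        "3xl": "1920px", // extra breakpoint, used as the 3xl: prefix
      },
      spacing: {
        18: "4.5rem", // enables classes such as p-18 and mt-18
      },
    },
  },
  plugins: [],
};
```

Putting values under `theme.extend` keeps Tailwind's defaults available, whereas defining the same keys directly under `theme` would replace the defaults for that category.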

Disadvantages:

One of the main drawbacks of customizing configuration in Tailwind CSS is the steep learning curve for beginners. The plethora of options and utility classes may seem overwhelming at first, requiring some time to understand and master. It also may not be suitable for smaller, simple projects as the customizations may not be utilized to their full potential.

Features:

Tailwind CSS offers a range of features that make customization seamless and efficient. With responsive design in mind, it includes pre-defined breakpoints to create responsive layouts easily. Its extensive set of utility classes gives developers the ability to create any design they can imagine. Furthermore, these classes follow a consistent naming convention, making it easier to understand and remember. Moreover, Tailwind CSS provides real-time customization through its intuitive browser extension, enabling developers to see changes in real-time.

Conclusion:

Tailwind CSS's customizable configuration allows developers to create beautiful and modern websites with ease. Its rich features, along with its utility-first approach, make it a popular choice among developers. While it may have a steep learning curve, the end result is a lightweight, optimized code base that is easy to maintain. With Tailwind CSS, the possibilities for design and customization are endless. | tailwine |

|

1,926,174 | You can now Animate `height: auto` in CSS Without JavaScript!🚀 | Introduction Animating height: auto in CSS has been a long-standing challenge for web... | 0 | 2024-07-17T04:15:50 | https://dev.to/srijan_karki/you-can-now-animate-height-auto-in-css-without-javascript-4o20 | webdev, beginners, css, animation | ### Introduction

Animating `height: auto` in CSS has been a long-standing challenge for web developers. Traditionally, CSS requires a specific height value to animate, making it impossible to transition to/from `height: auto` directly. This limitation forced developers to resort to JavaScript for calculating and animating element heights. But now, CSS introduces the game-changing `calc-size()` function, making these animations not only possible but also straightforward.

### The Magic of `calc-size()`

The `calc-size()` function operates similarly to the `calc()` function but extends its capabilities to handle automatically calculated sizes by the browser, including:

- `auto`

- `min-content`

- `max-content`

- `fit-content`

- `stretch`

- `contain`

Essentially, `calc-size()` converts values like `auto` into specific pixel values, which can then be used in calculations with other values. This is particularly useful for animating elements with dynamic sizes.

#### Basic Usage

Consider this simple example:

```css

.element {

height: 0;

overflow: hidden;

transition: height 0.3s;

}

.element.open {

height: calc-size(auto);

}

```

By wrapping the `auto` value in the `calc-size()` function, we can now animate the height of an element from 0 to `auto` without any JavaScript. Here's how it looks in action:

- **Normal Expansion**

- **Animated Expansion Using `calc-size()`**

### Limitations and Workarounds

It's important to note that you cannot animate between two automatically calculated values, such as `auto` and `min-content`. However, you can use `calc-size()` on non-automatic values within animations, ensuring smooth transitions:

```css

.element {

height: calc-size(0px);

overflow: hidden;

transition: height 0.3s;

}

.element.open {

height: auto;

}

```

### Advanced Calculations

While the primary use case for `calc-size()` is animations, it also supports more complex calculations:

```css

.element {

width: calc-size(min-content, size + 50px);

}

```

In this example, the width of the element is set to the minimum content size plus 50px. The syntax involves two arguments: the size to be calculated and the operation to perform. The `size` keyword represents the size of the first argument passed to `calc-size()`.

You can even nest multiple `calc-size()` functions for more sophisticated calculations:

```css

.element {

width: calc-size(calc-size(min-content, size + 50px), size * 2);

}

```

This calculates the min-content size, adds 50px, and then doubles the result.

### Browser Support

Currently, `calc-size()` is only supported in Chrome Canary with the `#enable-experimental-web-platform-features` flag enabled. As it's a progressive enhancement, using it won't break your site in unsupported browsers—it simply won't animate.

Here's how you can implement it:

```css

.element {

height: 0;

overflow: hidden;

transition: height 0.3s;

}

.element.open {

height: auto;

height: calc-size(auto);

}

```

In supported browsers, the animation works seamlessly, while in others, the element will display without animation.
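If a script needs to know whether the animation will run, the standard `CSS.supports()` API can be used to check for `calc-size()`. This is only a sketch: `supportsCalcSize` and the `has-calc-size` class name are hypothetical, and the check uses the two-argument form of `calc-size()` shown earlier.

```javascript
// Hypothetical helper: reports whether the browser parses calc-size().
// Returns false outside a browser (for example in Node.js), where the
// CSS global is not defined.
function supportsCalcSize() {
  if (typeof CSS === "undefined" || typeof CSS.supports !== "function") {
    return false;
  }
  return CSS.supports("height", "calc-size(auto, size)");
}

// In a browser, expose the result as a class so styles or scripts can
// branch on it. The class name is made up for this example.
if (typeof document !== "undefined") {
  document.documentElement.classList.toggle("has-calc-size", supportsCalcSize());
}

console.log("calc-size() supported:", supportsCalcSize());
```

For the pure CSS fallback shown above this check is not required; it is only useful when JavaScript has to choose between the native animation and a scripted one.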

### Conclusion

The `calc-size()` function is a fantastic addition to CSS, simplifying animations involving dynamic sizes and enabling previously impossible calculations. Although it's currently in an experimental stage, its potential to enhance web development is immense. We eagerly await full support across all browsers!

Stay tuned and start experimenting with `calc-size()` to elevate your CSS animations to new heights! | srijan_karki |

1,926,196 | Creating Absolute Imports in a Vite React App: A Step-by-Step Guide | Creating Absolute Imports in a Vite React App: A Step-by-Step Guide Table of... | 0 | 2024-07-17T08:47:40 | https://dev.to/nagakumar_reddy_316f25396/creating-absolute-imports-in-a-vite-react-app-a-step-by-step-guide-31he |

**Creating Absolute Imports in a Vite React App: A Step-by-Step Guide**

### Table of Contents

1. Introduction

2. The Problem

3. Prerequisite

4. Setting up the Vite React Project for Absolute Imports

- Creating a Vite React App

- Configuring the Project for Absolute Imports

- Configuring VS Code IntelliSense

5. Practical Tips

6. Conclusion

### Introduction

Relative imports can be cumbersome in large projects. Absolute imports simplify locating and referencing source files. This guide will show you how to set up absolute imports in a Vite-powered React app, configure your project, and set up VS Code IntelliSense.

### The Problem

Relative imports can lead to confusing paths like `import Home from "../../../components/Home";`. Moving files requires updating all related import paths, which is time-consuming. Absolute imports fix this by providing a fixed path, like `import Home from "@/components/Home";`, making the code easier to manage.

### Prerequisite

- Node.js and npm installed (the scaffolding command below installs Vite for you)

- Familiarity with ES6 import/export syntax

- Basic knowledge of React

### Setting up the Vite React Project for Absolute Imports

#### Creating a Vite React App

1. Run the command to create a new React app:

```bash

npm create vite@latest absolute-imports -- --template react

```

2. Navigate to your project directory:

```bash

cd absolute-imports

```

3. Install dependencies:

```bash

npm install

```

4. Start the development server:

```bash

npm run dev

```

#### Configuring the Project for Absolute Imports

1. Open `vite.config.js` and add the following configuration to resolve absolute imports:

```javascript
import { defineConfig } from "vite";
import react from "@vitejs/plugin-react";
import path from "path";

export default defineConfig({
  resolve: {
    alias: {
      "@": path.resolve(__dirname, "./src"),
    },
  },
  plugins: [react()],
});
```
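
To make the effect of the alias concrete, here is a rough sketch in plain Node.js of how a string alias such as `"@"` gets applied to an import specifier. The `resolveAlias` helper and the `/project` root are hypothetical stand-ins for illustration only; Vite's real resolver handles many more cases.

```javascript
const path = require("node:path");

// Hypothetical helper, not a Vite API: mimics how a string alias is applied.
function resolveAlias(specifier, aliases) {
  for (const [key, target] of Object.entries(aliases)) {
    // A string alias matches the exact key, or the key followed by "/".
    if (specifier === key || specifier.startsWith(key + "/")) {
      return target + specifier.slice(key.length);
    }
  }
  return specifier; // not aliased: left to normal module resolution
}

// "/project" stands in for the directory that holds vite.config.js.
const aliases = { "@": path.posix.join("/project", "src") };

console.log(resolveAlias("@/components/Home", aliases)); // /project/src/components/Home
console.log(resolveAlias("react", aliases)); // react
```

In this sketch a scoped package such as `@vitejs/plugin-react` is left untouched, because the alias only matches `@` on its own or `@/` as a prefix.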

#### Configuring VS Code IntelliSense

1. Create or update `jsconfig.json` (or `tsconfig.json` for TypeScript) in the root of your project:

```json
{
  "compilerOptions": {
    "baseUrl": ".",
    "paths": {
      "@/*": ["src/*"]
    }
  }
}
```

2. Open your VS Code settings (`settings.json`) and add the following line to ensure IntelliSense uses non-relative imports:

```json

"javascript.preferences.importModuleSpecifier": "non-relative"

```

### Practical Tips

- Consistently use absolute imports to maintain a clean and manageable codebase.

- Regularly check and update your configurations to match project changes.

### Conclusion

Absolute imports simplify your project structure and make your codebase more maintainable. By following this guide, you can easily set up absolute imports in your Vite React app and enhance your development experience.

| nagakumar_reddy_316f25396 |

|

1,926,197 | Integrated Traffic Management System with Predictive Modeling and Visualization | Overview The Traffic Management System (TMS) presented here integrates predictive modeling... | 0 | 2024-07-17T04:22:09 | https://dev.to/ekemini_thompson/integrated-traffic-management-system-with-predictive-modeling-and-visualization-37ef | python, tinker, machinelearning | ## Overview

The Traffic Management System (TMS) presented here integrates predictive modeling and real-time visualization to facilitate efficient traffic control and incident management. Developed using Python and Tkinter for the graphical interface, this system leverages machine learning algorithms to forecast traffic volume based on weather conditions and rush hour dynamics. The application visualizes historical and predicted traffic data through interactive graphs, providing insights crucial for decision-making in urban traffic management.

## Key Features

- **Traffic Prediction:** Utilizes machine learning models (Linear Regression and Random Forest) to predict traffic volume based on temperature, precipitation, and rush hour indicators.

- **Graphical Visualization:** Displays historical traffic trends alongside predicted volumes on interactive graphs, enhancing understanding and monitoring capabilities.

- **Real-time Traffic Simulation:** Simulates traffic light changes to replicate real-world scenarios, aiding in assessing system responses under various conditions.

- **Incident Reporting:** Allows users to report incidents, capturing location and description for prompt management and response.

## Getting Started

### Prerequisites

Ensure Python 3.x is installed. Install dependencies using pip:

```bash

pip install pandas matplotlib scikit-learn

```

### Installation

1. **Clone the repository:**

```bash
git clone https://github.com/EkeminiThompson/traffic_management_system.git
cd traffic_management_system
```

2. **Install dependencies:**

```bash

pip install -r requirements.txt

```

3. **Run the application:**

```bash

python main.py

```

## Usage

1. **Traffic Prediction:**

- Select a location, date, and model (Linear Regression or Random Forest).

- Click "Predict Traffic" to see the predicted traffic volume.

- Clear the graph with the "Clear Graph" button.

2. **Graphical Visualization:**

- The graph shows historical traffic data and predicted volumes for the selected date.

- A red dashed line marks the prediction date, and a green dot shows the predicted traffic volume.

3. **Traffic Light Control:**

- Simulates changing traffic light colors (Red, Green, Yellow) to assess traffic flow dynamics.

4. **Incident Reporting:**

- Report traffic incidents by entering location and description.

- Click "Report Incident" to submit the report.

## Code Overview

### `main.py`

```python
# Main application using Tkinter for GUI
import tkinter as tk
from tkinter import messagebox, ttk
import pandas as pd
import matplotlib.pyplot as plt
from matplotlib.backends.backend_tkagg import FigureCanvasTkAgg
import random
from datetime import datetime
from sklearn.linear_model import LinearRegression
from sklearn.ensemble import RandomForestRegressor

# Mock data for demonstration
data = {
    'temperature': [25, 28, 30, 22, 20],
    'precipitation': [0, 0, 0.2, 0.5, 0],
    'hour': [8, 9, 10, 17, 18],
    'traffic_volume': [100, 200, 400, 300, 250]
}
df = pd.DataFrame(data)

# Feature engineering: flag morning (7-9) and evening (16-18) rush hours
df['is_rush_hour'] = df['hour'].apply(lambda x: 1 if (x >= 7 and x <= 9) or (x >= 16 and x <= 18) else 0)

# Model training
X = df[['temperature', 'precipitation', 'is_rush_hour']]
y = df['traffic_volume']

# Create models
linear_model = LinearRegression()
linear_model.fit(X, y)

forest_model = RandomForestRegressor(n_estimators=100, random_state=42)
forest_model.fit(X, y)


class TrafficManagementApp:
    def __init__(self, root):
        # Initialization of GUI
        # ...
        pass

    def on_submit(self):
        # Handling traffic prediction submission
        # ...
        pass

    def update_graph(self, location, date_str, prediction):
        # Updating graph with historical and predicted traffic data
        # ...
        pass

    # Other methods for GUI components and functionality


if __name__ == "__main__":
    root = tk.Tk()
    app = TrafficManagementApp(root)
    root.mainloop()
```

## Conclusion

The Traffic Management System is a sophisticated tool for urban planners and traffic controllers, combining advanced predictive analytics with intuitive graphical interfaces. By forecasting traffic patterns and visualizing data trends, the system enhances decision-making capabilities and facilitates proactive management of traffic resources. Its user-friendly design ensures accessibility and practicality, making it a valuable asset in modern urban infrastructure management. | ekemini_thompson |

1,926,199 | The Ultimate Guide to Download Workshop Manuals | In the modern age of digital convenience, downloading workshop manuals has become an essential... | 0 | 2024-07-17T04:24:45 | https://dev.to/org45ss/the-ultimate-guide-to-download-workshop-manuals-2kml | In the modern age of digital convenience, downloading workshop manuals has become an essential practice for mechanics, DIY enthusiasts, and vehicle owners alike. These comprehensive guides are invaluable tools that provide detailed instructions,**[Download Workshop Manuals](https://workshopmanuals.org/)** technical specifications, and troubleshooting tips for a wide range of vehicles. This article explores everything you need to know about workshop manuals, their benefits, and where to download them.

**What Are Workshop Manuals?**

Workshop manuals, also known as service manuals, are detailed instructional books created by vehicle manufacturers or third-party publishers. They cover all aspects of a vehicle's maintenance and repair, offering step-by-step guidance on various tasks, from basic maintenance to complex repairs.

**Key Components of a Workshop Manual**

Technical Specifications: Detailed descriptions of the vehicle's parts, including engine specs, fluid capacities, and torque settings.

Maintenance Procedures: Guides for routine maintenance tasks such as oil changes, tire rotations, and brake inspections.

Repair Procedures: Step-by-step instructions for diagnosing and repairing mechanical and electrical issues.

Wiring Diagrams: Comprehensive schematics of the vehicle's electrical system.

Troubleshooting Guides: Methods for identifying and resolving common vehicle problems.

**Benefits of Downloading Workshop Manuals**

Convenience

One of the primary benefits of downloading workshop manuals is the convenience they offer. Having a digital copy on your computer, tablet, or smartphone means you can access the information you need anytime, anywhere. This is especially useful when you're in the garage or on the road and need immediate guidance.

Cost-Effective

Downloading workshop manuals is often more cost-effective than purchasing physical copies. Many online sources offer these manuals at a lower price, and some are even available for free. This affordability makes it easier for vehicle owners to obtain the information they need without breaking the bank.

Up-to-Date Information

Manufacturers frequently update their digital workshop manuals to include the latest information and procedures. By downloading the latest versions, you ensure that you have the most current and accurate data for your vehicle.

Comprehensive Coverage

Digital workshop manuals provide the same comprehensive coverage as their printed counterparts. They include detailed instructions, diagrams, and specifications that cater to all levels of expertise, from novice DIYers to professional mechanics.

**How to Download Workshop Manuals**

Identify Your Vehicle

Before downloading a workshop manual, you need to know your vehicle’s make, model, and year. This information is crucial to ensure you download the correct manual for your specific vehicle.

Choose a Reputable Source

Not all sources for workshop manuals are created equal. It's important to choose a reputable site that offers accurate and reliable manuals. Look for websites that have positive reviews and a wide selection of manuals.

Verify the Manual

Once you find a manual for your vehicle, verify its contents to ensure it covers all necessary aspects of your vehicle. Check for sections on maintenance, repair procedures, and wiring diagrams to ensure you are getting a comprehensive guide.

Download and Save

After verifying the manual, download it and save it to a convenient location on your device. It’s also a good idea to create a backup copy on an external drive or cloud storage to ensure you don’t lose this valuable resource.

**Where to Download Workshop Manuals**

Official Manufacturer Websites

Many vehicle manufacturers provide digital versions of their workshop manuals on their official websites. These manuals are typically free to download for vehicle owners and are the most reliable source of information.

Specialized Websites

There are numerous specialized websites that offer downloadable workshop manuals for a wide range of makes and models. These sites often provide manuals for both common and rare vehicles, making them a valuable resource.

Online Marketplaces

Platforms like eBay and Amazon have sellers offering digital workshop manuals. Be sure to purchase from reputable sellers to avoid counterfeit or incomplete manuals.

Automotive Forums and Communities

Online automotive forums and communities can be excellent resources for finding workshop manuals. Members often share links to manuals or provide advice on where to find them.

**Using Workshop Manuals Effectively**

Familiarize Yourself with the Manual

Before starting any repair or maintenance task, take some time to familiarize yourself with the layout and structure of the manual. Understanding how the information is organized will help you quickly find the sections you need.

Follow Step-by-Step Instructions

When performing a repair or maintenance task, follow the step-by-step instructions provided in the manual carefully. Pay close attention to details and do not skip any steps to ensure the job is done correctly.

Utilize Diagrams and Illustrations

The diagrams and illustrations in the manual are invaluable for visualizing components and understanding how they fit together. Refer to these visual aids to ensure that you are correctly following the procedures.

Adhere to Safety Precautions

Always follow the safety precautions outlined in the manual. Using the right tools and wearing appropriate safety gear is crucial to prevent injuries and ensure a safe working environment.

**Choosing the Right Workshop Manual**

Verify the Source

Ensure that the manual you are downloading is from a reliable and reputable source. Manuals provided by the vehicle manufacturer or well-known publishers are more likely to be accurate and comprehensive.

Check for Completeness

Make sure that the manual covers all aspects of the vehicle you are working on. It should include detailed information on the engine, transmission, electrical systems, and other critical components.

Read Reviews

If you are purchasing a manual from an online marketplace or specialized website, read reviews from other buyers. Their feedback can provide insights into the quality and accuracy of the manual.

**Conclusion**

Downloading workshop manuals is a smart and convenient way to access essential information for vehicle maintenance and repair. These comprehensive guides provide detailed instructions, technical specifications, and troubleshooting tips that are invaluable for both professional mechanics and DIY enthusiasts.

By understanding how to use these manuals and where to find them, you can enhance your automotive skills, save on repair costs, and ensure that your vehicle remains in top condition. Whether you are performing routine maintenance or tackling a complex repair, having a reliable workshop manual at your fingertips is one of the best decisions you can make for your vehicle.

Embrace the digital age and take advantage of the convenience and cost savings offered by downloadable workshop manuals. Equip yourself with the knowledge and confidence needed to tackle any repair or maintenance task, ensuring that your vehicle runs smoothly and safely for years to come.

| org45ss |

|

1,926,200 | Mastering Microservices: Node.js 12 Factor App Development | The 12 Factor App is a methodology for building software-as-a-service apps that emphasizes... | 0 | 2024-07-17T04:25:30 | https://dev.to/tkssharma/mastering-microservices-nodejs-12-factor-app-development-23d5 | nextjs, node, javascript, microservices |

{% embed https://www.youtube.com/watch?v=GjyxQBy2cNo %}

The 12 Factor App is a methodology for building software-as-a-service apps that emphasizes portability, scalability, and maintainability. Developed by engineers at Heroku, the 12-factor methodology is intended to standardize and streamline app development and deployment. Below are the twelve factors in detail:

### 1. **Codebase**

- **One codebase tracked in revision control, many deploys**

- There should be a single codebase for a project, which is tracked in a version control system like Git. Multiple environments (e.g., production, staging, development) should be different deployments of the same codebase.

### 2. **Dependencies**

- **Explicitly declare and isolate dependencies**

- All dependencies should be declared explicitly in a dependency declaration file (e.g., `requirements.txt` for Python, `package.json` for Node.js). Use a dependency management tool to ensure these dependencies are isolated and versioned properly.

### 3. **Config**

- **Store config in the environment**

- Configuration that varies between deploys (such as credentials or resource handles) should be stored in the environment. This separates config from code, allowing for different configurations in different environments.
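
As a rough sketch of this factor in Node.js, the snippet below reads settings from environment variables and fails fast when a required one is missing. The `loadConfig` helper and the `PORT` and `DATABASE_URL` variable names are assumptions chosen for illustration; they are not prescribed by the methodology.

```javascript
// Hypothetical helper: builds the app's config from environment variables only.
function loadConfig(env = process.env) {
  const databaseUrl = env.DATABASE_URL; // assumed variable name
  if (!databaseUrl) {
    // Fail at startup rather than at the first query.
    throw new Error("DATABASE_URL must be set in the environment");
  }

  const port = Number.parseInt(env.PORT ?? "3000", 10);
  if (Number.isNaN(port)) {
    throw new Error(`PORT must be a number, got "${env.PORT}"`);
  }

  return { port, databaseUrl };
}

// The same code runs in every deploy; only the environment differs.
const config = loadConfig({ PORT: "8080", DATABASE_URL: "postgres://localhost/dev" });
console.log(config.port); // 8080
```

Passing the environment in as an argument keeps the function easy to test; in a real service you would call `loadConfig()` with no arguments so it reads `process.env`.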

### 4. **Backing Services**

- **Treat backing services as attached resources**

- Backing services (e.g., databases, messaging systems, caches) should be treated as attached resources that can be attached and detached as needed, without making changes to the app's code.

### 5. **Build, Release, Run**

- **Strictly separate build and run stages**

- The build stage converts a code repo into an executable bundle (e.g., compiling code). The release stage takes the build and combines it with the current config to create a release. The run stage runs the app in the execution environment.

### 6. **Processes**

- **Execute the app as one or more stateless processes**

- The app should run as stateless processes, with any persistent data stored in a stateful backing service. This allows for easy scaling and resilience.

### 7. **Port Binding**

- **Export services via port binding**

- The app should be self-contained and make services available by listening on a port. This makes the app independent of the execution environment and easy to run.

### 8. **Concurrency**

- **Scale out via the process model**

- The app should be designed to scale out by running multiple instances of its processes. Use a process management tool to manage these processes effectively.

### 9. **Disposability**

- **Maximize robustness with fast startup and graceful shutdown**

- The app's processes should start up quickly and shut down gracefully. This improves resilience and allows for rapid deployment of changes.
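
Port binding (factor 7) and disposability can be sketched together with Node's built-in `http` module. This is a minimal illustration under assumptions: the `createServer` and `start` helper names, the `PORT` variable, and the default of 3000 are made up for the example, and a production service would likely also add a shutdown timeout.

```javascript
const http = require("node:http");

// Build the server without starting it, so the caller decides when to bind.
function createServer() {
  return http.createServer((req, res) => {
    res.writeHead(200, { "Content-Type": "application/json" });
    res.end(JSON.stringify({ status: "ok" }));
  });
}

// Bind to the port from the environment and shut down gracefully on SIGTERM.
function start(env = process.env) {
  const port = Number.parseInt(env.PORT ?? "3000", 10); // assumed variable name
  const server = createServer();
  server.listen(port, () => console.log(`listening on ${port}`));

  process.on("SIGTERM", () => {
    // Stop accepting new connections; exit once in-flight requests finish.
    server.close(() => process.exit(0));
  });
  return server;
}

// start(); // uncomment to run the service
```

Keeping `createServer` separate from `start` means the process boots quickly and nothing binds a port until the entry point asks for it.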

### 10. **Dev/Prod Parity**

- **Keep development, staging, and production as similar as possible**

- Minimize the differences between development and production environments to catch issues early and ensure smoother deployments.

### 11. **Logs**

- **Treat logs as event streams**

- The app should not manage or write log files. Instead, it should treat logs as event streams that are sent to a centralized logging service for aggregation and analysis.
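
One possible way to follow this in Node.js is to write one JSON object per line to stdout and let the execution environment route the stream. The `logEvent` helper and its field names below are hypothetical, shown only as a sketch of the idea.

```javascript
// Hypothetical helper: emit one JSON object per line to a stream (stdout by default).
function logEvent(level, message, fields = {}, stream = process.stdout) {
  const entry = { time: new Date().toISOString(), level, message, ...fields };
  stream.write(JSON.stringify(entry) + "\n");
}

// The app only emits events; collecting and storing them is the platform's job.
logEvent("info", "order created", { orderId: 42 });
```

Because the app never opens a log file, the same code works unchanged whether the stream ends up in a terminal during development or in a log aggregator in production.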

### 12. **Admin Processes**

- **Run admin/management tasks as one-off processes**

- Administrative or management tasks (e.g., database migrations) should be run as one-off processes in the same environment as the app, using the same codebase and config.

Adhering to these principles helps developers create applications that are more scalable, maintainable, and portable across different environments, ensuring a smoother development and deployment process. | tkssharma |

1,926,201 | Spice Set With Spices | Elevate your culinary creations with our "Spice it Your Way" collection, featuring a variety of... | 0 | 2024-07-17T04:25:38 | https://dev.to/spiceityourway/spice-set-with-spices-3ge3 | spice, spiceset | Elevate your culinary creations with our "Spice it Your Way" collection, featuring a variety of premium spices tailored to your taste. Perfect for both seasoned chefs and home cooks, this set adds depth and complexity to any dish. Buy our [spice set with spices](https://www.spiceityourway.com/collections/spices) today and cook with confidence.

| spiceityourway |

1,926,202 | Deploy Microservice to AWS EC2 Instances | In this Video or Series of Videos, we are talking about service deployment to AWS EC2 using... | 0 | 2024-07-17T04:30:52 | https://dev.to/tkssharma/deploy-microservice-to-aws-ec2-instances-35o6 | microservices, node, nextjs, javascript |

{% embed https://www.youtube.com/watch?v=QuwBkPnSA3c&list=PLIGDNOJWiL19tboY7wTzz6_RY6h2gpNrH&index=32 %}

This video series covers deploying a service to AWS EC2 using GitLab CI or GitHub Actions:

- Creating a service

- Building Deploy Script

- Configure CI GitLab or GitHub actions

- Deploy applications using CI scripts

This is one section of the larger playlist "Advance Microservices".

The playlist covers building different kinds of microservices with Node.js and deploying them to EC2, ECS, or Lambda.

Topics covered include:

- Express/Nest JS with Typescript with ORM (TypeORM, knex, Prisma)

- Deploying Services with AWS CDK Constructs with RDS/Dynamodb

- Building Different Microservice Architecture

- Using Event-Driven Arch, CQRS, Event Sourcing based Arch

- Deploying services using AWS ECS or Lambda using AWS CDK

Here are some common microservices architecture patterns and best practices when using Node.js:

1. Single Service Microservice Architecture:

2. Layered Microservice Architecture:

3. Event-Driven Microservice Architecture:

4. API Gateway Microservice Architecture:

5. Service Mesh Microservice Architecture:

6. Serverless Microservices:

7. Containerized Microservices:

8. Event Sourcing and CQRS:

9. BFF (Backend For Frontend) Microservice Architecture:

10. Database Microservice Architecture:

| tkssharma |

1,926,203 | Why learn coding? | I believe coding languages are among the most remarkable creations of humanity. Learning to code not... | 0 | 2024-07-17T04:35:11 | https://dev.to/qbts_load_1d475b5619cf613/why-learn-coding-51cg | I believe coding languages are among the most remarkable creations of humanity. Learning to code not only develops your brain but also unlocks the potential to create unimaginable things. With coding, you can build websites, applications, drawings, animations, and charts to track your progress. And that's just the beginning.

A few years ago, a friend of mine reconnected with me just as I was starting my coding journey. I persuaded him to start coding with the ESP-32, and now he develops projects for greenhouses, dwellings, chicken coops, and more.

The best part of learning to code is that it keeps you engaged and constantly learning something new. You can create innovative solutions, like a home assistant robot or custom devices and tools for your kitchen or garden. It's all achievable with patience and dedication. Keep learning, stay persistent, and don't give up. | qbts_load_1d475b5619cf613 |

|

1,926,204 | Buy verified BYBIT account | https://dmhelpshop.com/product/buy-verified-bybit-account/ Buy verified BYBIT account In the... | 0 | 2024-07-17T04:40:56 | https://dev.to/tacarec183/buy-verified-bybit-account-45d1 | webdev, javascript, beginners, programming | https://dmhelpshop.com/product/buy-verified-bybit-account/

Buy verified BYBIT account

In the evolving landscape of cryptocurrency trading, the role of a dependable and protected platform cannot be overstated. Bybit, an esteemed crypto derivatives exchange, stands out as a platform that empowers traders to capitalize on their expertise and effectively maneuver the market.

This article sheds light on the concept of Buy Verified Bybit Accounts, emphasizing the importance of account verification, the benefits it offers, and its role in ensuring a secure and seamless trading experience for all individuals involved.

What is a Verified Bybit Account?

Ensuring the security of your trading experience entails furnishing personal identification documents and participating in a video verification call to validate your identity. This thorough process is designed to not only establish trust but also to provide a secure trading environment that safeguards against potential threats.

By rigorously verifying identities, we prioritize the protection and integrity of every individual’s trading interactions, cultivating a space where confidence and security are paramount. Buy verified BYBIT account

Verification on Bybit lies at the core of ensuring security and trust within the platform, going beyond mere regulatory requirements. By implementing robust verification processes, Bybit effectively minimizes risks linked to fraudulent activities and enhances identity protection, thus establishing a solid foundation for a safe trading environment.

Verified accounts not only represent a commitment to compliance but also unlock higher withdrawal limits, empowering traders to effectively manage their assets while upholding stringent safety standards.

Advantages of a Verified Bybit Account

Discover the multitude of advantages a verified Bybit account offers beyond just security. Verified users relish in heightened withdrawal limits, presenting them with the flexibility necessary to effectively manage their crypto assets. This is especially advantageous for traders aiming to conduct substantial transactions with confidence, ensuring a stress-free and efficient trading experience.

Procuring Verified Bybit Accounts

The concept of acquiring buy Verified Bybit Accounts is increasingly favored by traders looking to enhance their competitive advantage in the market. Well-established sources and platforms now offer authentic verified accounts, enabling users to enjoy a superior trading experience. Buy verified BYBIT account.

Just as one exercises diligence in their trading activities, it is vital to carefully choose a reliable source for obtaining a verified account to guarantee a smooth and reliable transition.

Conclusionhow to get around bybit kyc

Understanding the importance of Bybit’s KYC (Know Your Customer) process is crucial for all users. Bybit’s implementation of KYC is not just to comply with legal regulations but also to safeguard its platform against fraud.

Although the process might appear burdensome, it plays a pivotal role in ensuring the security and protection of your account and funds. Embracing KYC is a proactive step towards maintaining a safe and secure trading environment for everyone involved.

Ensuring the security of your account is crucial, even if the KYC process may seem burdensome. By verifying your identity through KYC and submitting necessary documentation, you are fortifying the protection of your personal information and assets against potential unauthorized breaches and fraudulent undertakings. Buy verified BYBIT account.

Safeguarding your account with these added security measures not only safeguards your own interests but also contributes to maintaining the overall integrity of the online ecosystem. Embrace KYC as a proactive step towards ensuring a safe and secure online experience for yourself and everyone around you.

How many Bybit users are there?

With over 2 million registered users, Bybit stands out as a prominent player in the cryptocurrency realm, showcasing its increasing influence and capacity to appeal to a wide spectrum of traders.

The rapid expansion of its user base highlights Bybit’s proactive approach to integrating innovative functionalities and prioritizing customer experience. This exponential growth mirrors the intensifying interest in digital assets, positioning Bybit as a leading platform in the evolving landscape of cryptocurrency trading.

With over 2 million registered users leveraging its platform for cryptocurrency trading, Buy Verified ByBiT Accounts has witnessed remarkable growth in its user base. Bybit’s commitment to security, provision of advanced trading tools, and top-tier customer support services have solidified its position as a prominent competitor within the cryptocurrency exchange market.

For those seeking a dependable and feature-rich platform to engage in digital asset trading, Bybit emerges as an excellent choice for both novice and experienced traders alike.

Enhancing Trading Across Borders

Leverage the power of buy verified Bybit accounts to unlock global trading prospects. Whether you reside in bustling financial districts or the most distant corners of the globe, a verified account provides you with the gateway to engage in safe and seamless cross-border transactions.

The credibility that comes with a verified account strengthens your trading activities, ensuring a secure and reliable trading environment for all your endeavors.

A Badge of Trust and Opportunity

By verifying your BYBIT account, you are making a prudent choice that underlines your dedication to safe trading practices while gaining access to an array of enhanced features and advantages on the platform. Buy verified BYBIT account.

With upgraded security measures in place, elevated withdrawal thresholds, and privileged access to exclusive opportunities, a verified BYBIT account equips you with the confidence to maneuver through the cryptocurrency trading realm effectively.

Why is Verification Important on Bybit?

Ensuring verification on Bybit is essential in creating a secure and trusted trading space for all users. It effectively reduces the potential threats linked to fraudulent behaviors, offers a shield for personal identities, and enables verified individuals to enjoy increased withdrawal limits, enhancing their ability to efficiently manage assets.

By undergoing the verification process, users safeguard their investments and contribute to a safer and more regulated ecosystem, promoting a more secure and reliable trading environment overall. Buy verified BYBIT account.

Conclusion

In the ever-evolving landscape of digital cryptocurrency trading, having a Verified Bybit Account is paramount in establishing trust and security. By offering elevated withdrawal limits, fortified security measures, and the assurance that comes with verification, traders are equipped with a robust foundation to navigate the complexities of the trading sphere with peace of mind.

Discover the power of ByBiT Accounts, the ultimate financial management solution offering a centralized platform to monitor your finances seamlessly. With a user-friendly interface, effortlessly monitor your income, expenses, and savings, empowering you to make well-informed financial decisions. Buy verified BYBIT account.

Whether you are aiming for a significant investment or securing your retirement fund, ByBiT Accounts is equipped with all the tools necessary to keep you organized and on the right financial path. Join today and take control of your financial future with ease.

Contact Us / 24 Hours Reply

Telegram:dmhelpshop

WhatsApp: +1 (980) 277-2786

Skype:dmhelpshop

Email:[email protected] | tacarec183 |

1,926,205 | Ubat Buasir Herba | Ubat Buasir Herba: Penyelesaian Semulajadi untuk Masalah Buasir Pengenalan kepada Buasir Buasir... | 0 | 2024-07-17T04:41:56 | https://dev.to/indah_indri_a299aff67faef/ubat-buasir-herba-5fm | webdev |

**Ubat Buasir Herba: Penyelesaian Semulajadi untuk Masalah Buasir

Pengenalan kepada Buasir**

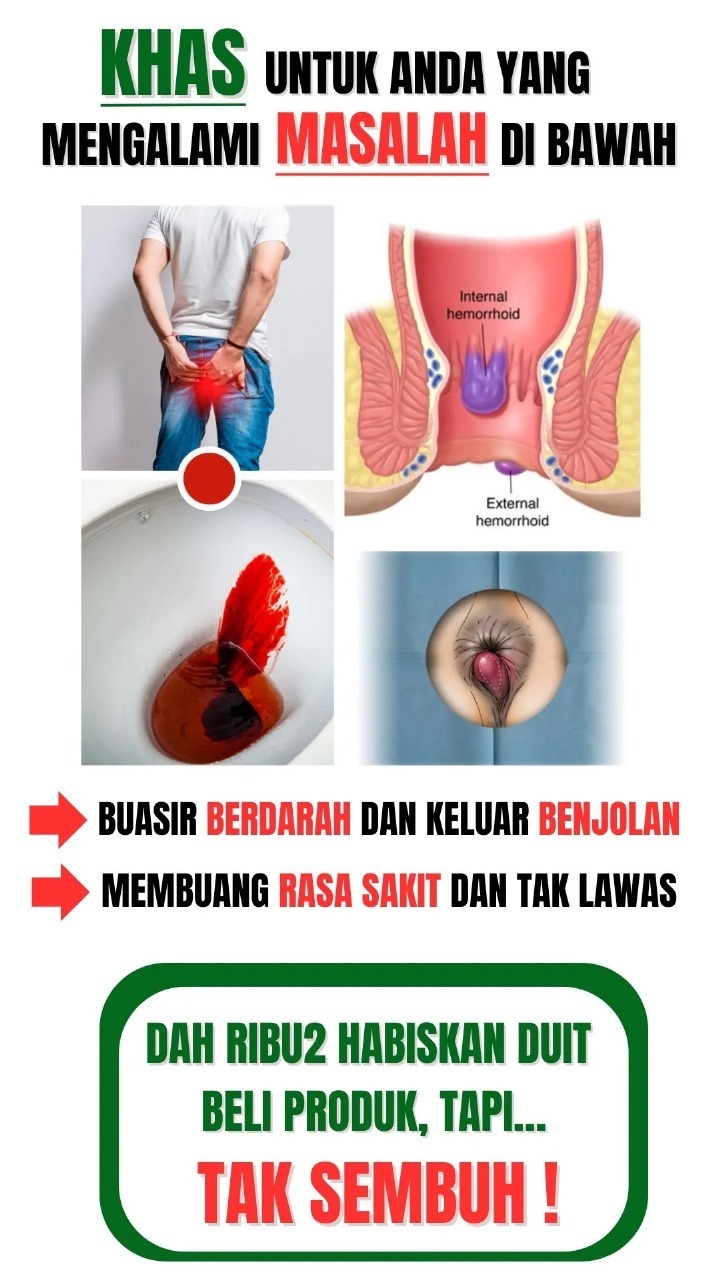

Buasir adalah pembengkakan atau keradangan saluran darah di sekitar dubur atau rektum bawah. Ini adalah masalah kesihatan yang biasa, terutama di kalangan orang dewasa.

**Punca Buasir**

Antara punca utama buasir termasuklah:

1. Sembelit kronik

2. Kehamilan

3. Obesiti

4. Duduk terlalu lama

5. Diet rendah serat

**Tanda-tanda Buasir**

1. Pendarahan semasa atau selepas membuang air besar

2. Kesakitan atau ketidakselesaan di kawasan dubur

3. Pembengkakan di sekitar dubur

4. Gatal-gatal atau iritasi di kawasan dubur

5. Ubat Buasir Herba

Perubatan herba telah lama digunakan untuk merawat pelbagai masalah kesihatan, termasuk buasir. Berikut adalah beberapa herba yang boleh membantu merawat buasir:

Aloe Vera

Use: Aloe vera has anti-inflammatory properties that help reduce inflammation and pain.

How to use: Apply fresh aloe vera gel to the affected area several times a day.

Witch Hazel

Use: Witch hazel is a natural astringent that helps shrink tissue and reduce bleeding and itching.

How to use: Apply liquid witch hazel to a cotton ball and place it on the affected area.

Betel Leaf (Daun Sireh)

Use: Betel leaf has antiseptic and anti-inflammatory properties that can help relieve hemorrhoids.

How to use: Boil betel leaves and use the resulting water to wash the anal area.

Turmeric

Use: Turmeric has anti-inflammatory and healing properties.

How to use: Mix turmeric powder with a little coconut oil and apply it to the hemorrhoid area.

Garlic

Use: Garlic is a natural antiseptic that can help reduce inflammation and kill bacteria.

How to use: Crush the garlic and mix it with a little coconut oil. Apply it to the affected area.

Pennywort Leaf (Gotu Kola)

Use: Pennywort leaf helps improve blood circulation and strengthen blood vessels.

How to use: It can be drunk as a tea or taken in capsule form.

Apple Cider Vinegar

Use: Apple cider vinegar helps shrink hemorrhoids and reduce inflammation.

How to use: Dip a cotton ball in undiluted apple cider vinegar and place it on the affected area.

How to Use Herbs for Hemorrhoids

Topical

Apply the appropriate herb directly to the affected area. Make sure the area is clean before use.

Oral

Some herbs can be taken orally as a tea, capsule, or tincture.

**Preventing Hemorrhoids**

1. Eat a high-fiber diet.

2. Drink enough water.

3. Avoid sitting for too long.

4. Do not hold back bowel movements.

5. Exercise regularly.

CONTACT US

CLICK HERE

SOURCE https://www.sembuhlah.com/ubat-buasir-herba/

| indah_indri_a299aff67faef |

1,926,206 | Python for Beginners-01 | 1. What is Python? 2. Is Python an interpreted language or not? 3. How many types of languages are there... | 0 | 2024-07-17T04:44:03 | https://dev.to/04d5lakshmi_prasannapras/python-for-begineer-01-2ifm | 1. What is Python?

2. Is Python an interpreted language or not?

3. How many types of languages are there in Python?

4. What is interpretation, and what is compilation? | 04d5lakshmi_prasannapras |

|

1,926,242 | Newbie | I am new here. Is anyone willing to give me a tour? | 0 | 2024-07-17T05:57:10 | https://dev.to/luke_manyamazi_14765e8475/newbie-5hi9 | newbie, python, softwareengineering | I am new here. Is anyone willing to give me a tour? | luke_manyamazi_14765e8475 |

1,926,207 | Digital Pioneer. | A post by Paul Fallon | 0 | 2024-07-17T04:45:24 | https://dev.to/faldesign/digital-pioneer-imc |

| faldesign |

|

1,926,208 | Digital Pioneer. | A post by Paul Fallon | 0 | 2024-07-17T04:49:19 | https://dev.to/faldesign/digital-pioneer-47ol | ai, opensource, machinelearning, career |

| faldesign |