---
language: it
license: afl-3.0
widget:
- text: Il <mask> ha chiesto revocarsi l'obbligo di pagamento
---

ITALIAN-LEGAL-BERT-SC

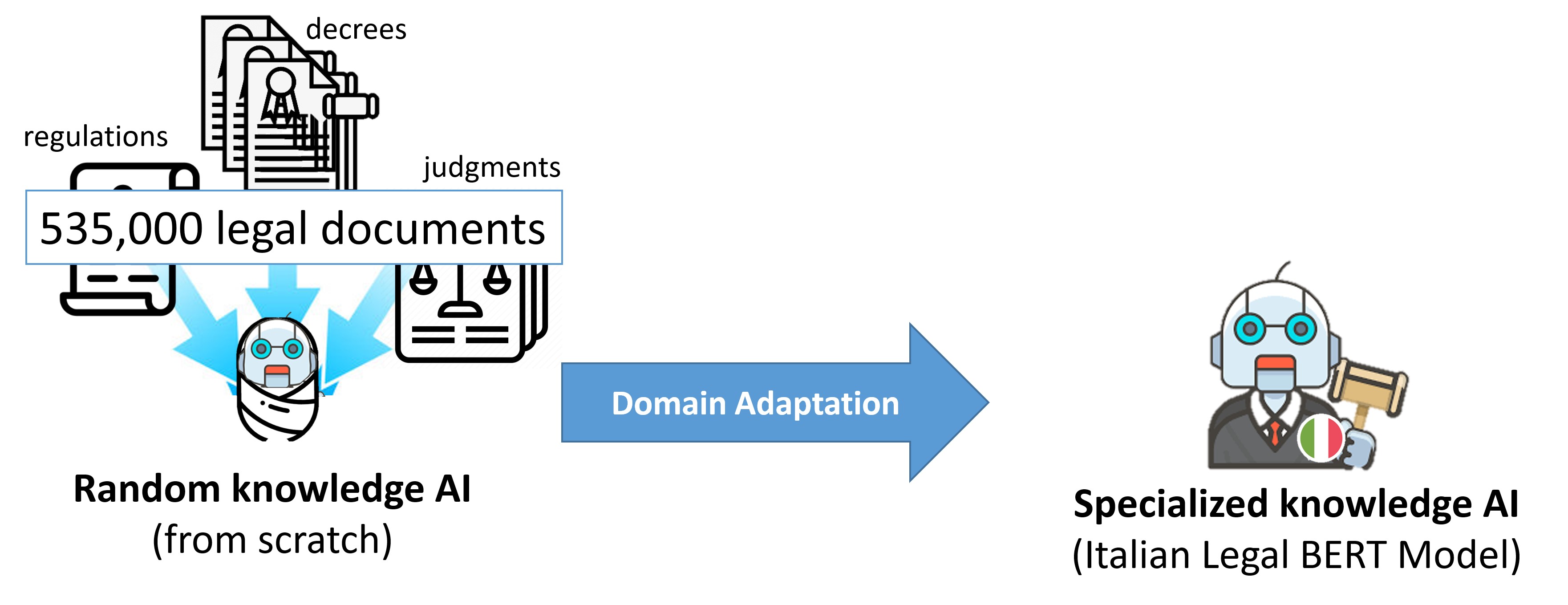

This is the ITALIAN-LEGAL-BERT variant pre-trained from scratch on Italian legal documents (ITA-LEGAL-BERT-SC). It is based on the CamemBERT architecture.

Training procedure

The model was trained from scratch on a larger training dataset: 6.6 GB of civil and criminal cases. We used the CamemBERT architecture with a masked language modeling head on top, the AdamW optimizer, an initial learning rate of 2e-5 with linear learning rate decay, a sequence length of 512, a batch size of 18, and 1 million training steps. Training ran on 8 NVIDIA A100 40GB GPUs using distributed data parallel, so each step processes 8 batches (one per GPU). Tokenization uses a SentencePiece model trained from scratch on a subset of the training set (5 million sentences), with a vocabulary size of 32,000.
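The hyperparameters above imply a few derived quantities. The following is a minimal Python sketch of that arithmetic, not the authors' training code. It assumes that "batch size 18" is per GPU (so one step covers 8 × 18 sequences) and that the linear decay runs from 2e-5 down to zero over the 1 million steps with no warmup; neither assumption is stated explicitly above, and all helper names are hypothetical.

```python
# Values taken from the model card; helper names are hypothetical.
PER_DEVICE_BATCH = 18      # sequences per batch (assumed to be per GPU)
NUM_GPUS = 8               # 8 x NVIDIA A100 40GB with distributed data parallel
SEQ_LEN = 512              # maximum sequence length in tokens
TOTAL_STEPS = 1_000_000    # training steps
PEAK_LR = 2e-5             # initial learning rate


def effective_batch_size(per_device: int = PER_DEVICE_BATCH, devices: int = NUM_GPUS) -> int:
    """Sequences consumed per optimizer step: one batch on each DDP worker."""
    return per_device * devices


def max_tokens_per_step(seq_len: int = SEQ_LEN) -> int:
    """Upper bound on tokens per step, assuming every sequence is full length."""
    return effective_batch_size() * seq_len


def linear_lr(step: int, peak: float = PEAK_LR, total: int = TOTAL_STEPS) -> float:
    """Linear decay from `peak` to 0 over `total` steps (assumes no warmup)."""
    step = min(max(step, 0), total)  # clamp to the training range
    return peak * (1 - step / total)


if __name__ == "__main__":
    print(effective_batch_size())                 # 144
    print(max_tokens_per_step())                  # 73728
    print(effective_batch_size() * TOTAL_STEPS)   # 144000000 sequences processed (repeats included)
    print(linear_lr(500_000))                     # 1e-05
```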