Upload README.md

#30 by AlexZheng opened

README.md CHANGED

@@ -4,14 +4,11 @@ tags:
 - stable-diffusion
 - text-to-image
 ---
-#
-This is the fine-tuned Stable Diffusion model trained on images from the
-
 
-
-[](https://patreon.com/user?u=79196446)
-
-### 🧨 Diffusers
 
 This model can be used just like any other Stable Diffusion model. For more information,
 please have a look at the [Stable Diffusion](https://huggingface.co/docs/diffusers/api/pipelines/stable_diffusion) documentation.

@@ -23,35 +20,51 @@ You can also export the model to [ONNX](https://huggingface.co/docs/diffusers/op
 from diffusers import StableDiffusionPipeline
 import torch
 
-model_id = "
 pipe = StableDiffusionPipeline.from_pretrained(model_id, torch_dtype=torch.float16)
 pipe = pipe.to("cuda")
 
-prompt = "
 image = pipe(prompt).images[0]
 
-image.save("./
 ```
 
-# Gradio & Colab
 
-We also support a [Gradio](https://github.com/gradio-app/gradio) Web UI and Colab with Diffusers to run fine-tuned Stable Diffusion models:
-[](https://huggingface.co/spaces/anzorq/finetuned_diffusion)
-[](https://colab.research.google.com/drive/1j5YvfMZoGdDGdj3O3xRU1m4ujKYsElZO?usp=sharing)
 
-


 
-
-
-
-### Sample images from the model:
-
-### Sample images used for training:
-
 
-**Version 3** (arcane-diffusion-v3): This version uses the new _train-text-encoder_ setting and greatly improves the quality and editability of the model. It was trained on 95 images from the show for 8000 steps.
 
-**Version 2** (arcane-diffusion-v2): This version uses diffusers-based DreamBooth training, and the prior-preservation loss is far more effective. The diffusers weights were then converted with a script to a ckpt file so that they work with AUTOMATIC1111's repo.
-Training was done with 5k steps for a direct comparison to v1, and the results show that the model needs more steps for a more prominent effect. Version 3 will be tested with 11k steps.
 
-**Version 1** (arcane-diffusion-5k): This model was trained using _Unfrozen Model Textual Inversion_ with the _Training with prior-preservation loss_ method. There is still a slight shift towards the style even when the arcane token is not used.
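The Version 1 and Version 2 notes both rely on prior-preservation loss. As a rough, framework-free sketch of how DreamBooth-style training typically combines the two loss terms (modeled loosely on the diffusers DreamBooth example, not taken from this repository): each batch holds the subject ("instance") examples followed by generic class ("prior") examples, and the loss is the instance MSE plus a weighted prior MSE. The function names, the plain-list vectors standing in for noise predictions and target noise, and the `prior_loss_weight` default of 1.0 are assumptions for illustration.

```python
from typing import List, Sequence


def mse(pred: Sequence[float], target: Sequence[float]) -> float:
    """Mean squared error between two equal-length vectors."""
    if len(pred) != len(target):
        raise ValueError("pred and target must have the same length")
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def prior_preservation_loss(
    preds: List[Sequence[float]],
    targets: List[Sequence[float]],
    prior_loss_weight: float = 1.0,  # assumed default, tune per run
) -> float:
    """DreamBooth-style loss for a batch laid out as [instance..., prior...].

    preds/targets are hypothetical stand-ins for the UNet's noise predictions
    and the sampled noise. The first half of the batch is the subject data,
    the second half the class images used to preserve the model's prior.
    """
    if len(preds) != len(targets) or len(preds) % 2:
        raise ValueError("expected an even batch with matching preds and targets")
    half = len(preds) // 2
    # Average the per-example MSE within each half of the batch.
    instance_loss = sum(mse(p, t) for p, t in zip(preds[:half], targets[:half])) / half
    prior_loss = sum(mse(p, t) for p, t in zip(preds[half:], targets[half:])) / half
    # The prior term keeps the model from drifting away from the generic class.
    return instance_loss + prior_loss_weight * prior_loss
```

Lowering `prior_loss_weight` lets the subject style dominate; raising it keeps outputs closer to the base model, which is one plausible reading of the "slight shift towards the style" noted above.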


 - stable-diffusion
 - text-to-image
 ---
+# Galactic Diffusion
+This is a fine-tuned Stable Diffusion model trained on images from <b>Entergalactic</b> on Netflix.
+No trigger tokens are needed in prompts.
 
+### Diffusers
 
 This model can be used just like any other Stable Diffusion model. For more information,
 please have a look at the [Stable Diffusion](https://huggingface.co/docs/diffusers/api/pipelines/stable_diffusion) documentation.

|

|

|

|

| 20 |

from diffusers import StableDiffusionPipeline

|

| 21 |

import torch

|

| 22 |

|

| 23 |

+

model_id = "alexzheng/"

|

| 24 |

pipe = StableDiffusionPipeline.from_pretrained(model_id, torch_dtype=torch.float16)

|

| 25 |

pipe = pipe.to("cuda")

|

| 26 |

|

| 27 |

+

prompt = "a beautiful young female with long dark hair, clothed in full dress"

|

| 28 |

image = pipe(prompt).images[0]

|

| 29 |

|

| 30 |

+

image.save("./samples/0_0.png")

|

| 31 |

```

|

| 32 |

|

|

|

|

| 33 |

|

|

|

|

|

|

|

|

|

|

| 34 |

|

| 35 |

+

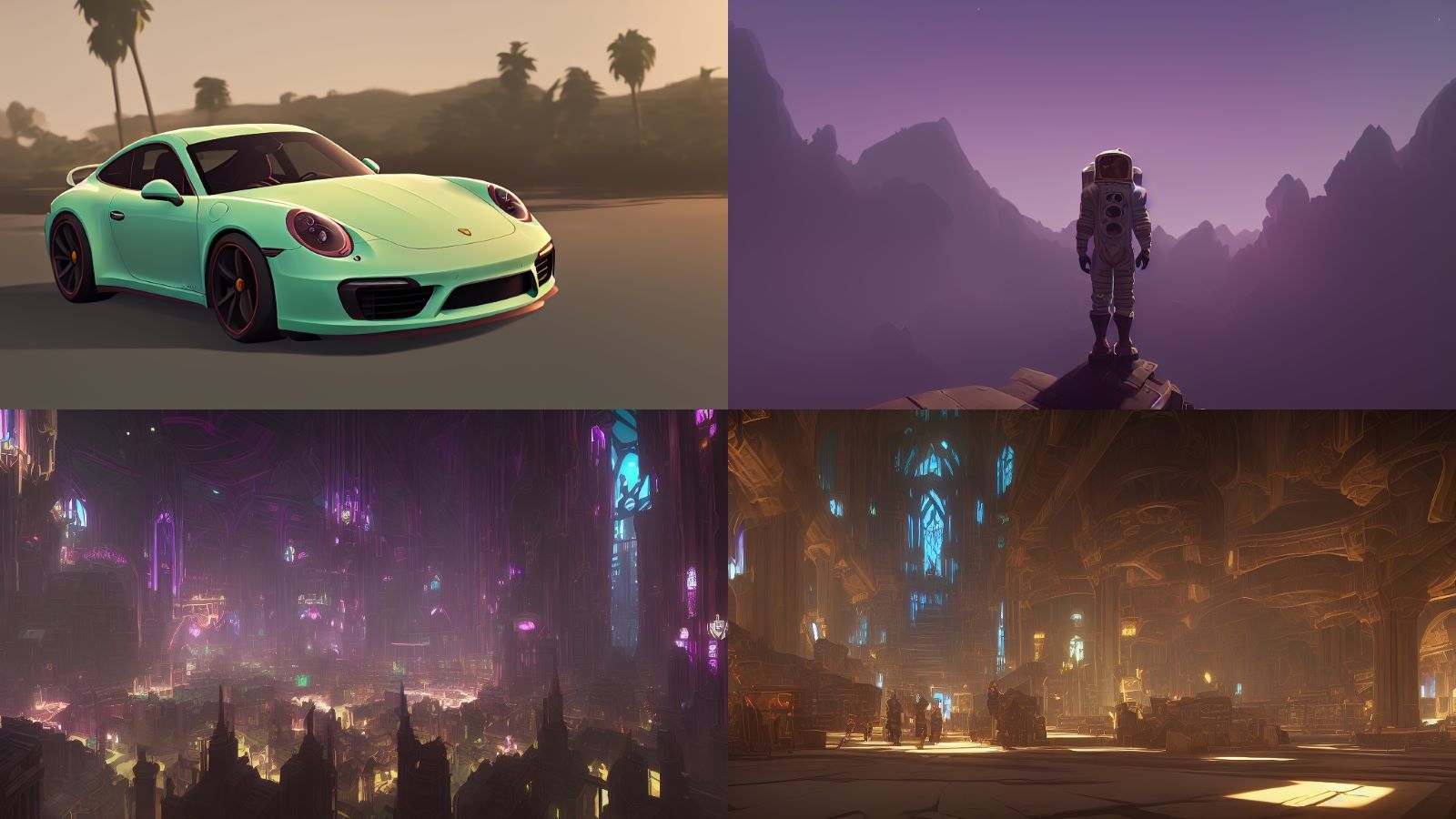

### Sample images

|

| 36 |

+

|

| 37 |

+

"a beautiful young female with long dark hair, clothed in full dress"

|

| 38 |

+

|

| 39 |

+

|

| 40 |

+

|

| 41 |

+

"a strong handsome young male clothed in metal armors"

|

| 42 |

+

|

| 43 |

+

|

| 44 |

+

|

| 45 |

+

"a British shorthair cat sitting on the floor"

|

| 46 |

+

|

| 47 |

+

|

| 48 |

+

|

| 49 |

+

"a golden retriever running in the park"

|

| 50 |

+

|

| 51 |

+

|

| 52 |

+

|

| 53 |

+

"a blue shining Porsche sports car"

|

| 54 |

+

|

| 55 |

+

|

| 56 |

+

|

| 57 |

+

"a modern concept house, two stories, no people"

|

| 58 |

+

|

| 59 |

+

|

| 60 |

+

|

| 61 |

+

"a warm and sweet living room, a TV, no people"

|

| 62 |

+

|

| 63 |

+

|

| 64 |

+

|

| 65 |

+

"a beautiful city night scene, no people"

|
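The usage snippet above generates one image. A minimal sketch of regenerating the whole sample set follows, assuming a CUDA GPU and that the caller supplies the full repository id (it is elided in the diff as `"alexzheng/"`). `load_pipeline` mirrors the README snippet; `render_samples` and `SAMPLE_PROMPTS` are hypothetical helpers, not part of the model repo, and the `<prompt index>_<image index>.png` naming is only a guess extrapolated from `./samples/0_0.png`.

```python
import os
from typing import Any, Callable, List

# Prompts copied from the "Sample images" section of this README.
SAMPLE_PROMPTS = [
    "a beautiful young female with long dark hair, clothed in full dress",
    "a strong handsome young male clothed in metal armors",
    "a British shorthair cat sitting on the floor",
    "a golden retriever running in the park",
    "a blue shining Porsche sports car",
    "a modern concept house, two stories, no people",
    "a warm and sweet living room, a TV, no people",
    "a beautiful city night scene, no people",
]


def load_pipeline(model_id: str) -> Any:
    """Load the model in fp16 on CUDA, as the README snippet does.

    torch and diffusers are imported lazily so render_samples below can be
    used (and tested) without them installed.
    """
    import torch
    from diffusers import StableDiffusionPipeline

    pipe = StableDiffusionPipeline.from_pretrained(model_id, torch_dtype=torch.float16)
    return pipe.to("cuda")


def render_samples(
    pipe: Callable[..., Any],
    prompts: List[str],
    out_dir: str = "./samples",
    images_per_prompt: int = 1,
    **pipe_kwargs: Any,
) -> List[str]:
    """Generate images for every prompt and save them as <prompt>_<image>.png.

    Extra keyword arguments (e.g. num_inference_steps, guidance_scale,
    generator) are forwarded unchanged to the pipeline call.
    """
    os.makedirs(out_dir, exist_ok=True)
    saved: List[str] = []
    for i, prompt in enumerate(prompts):
        result = pipe(prompt, num_images_per_prompt=images_per_prompt, **pipe_kwargs)
        for j, image in enumerate(result.images):
            path = os.path.join(out_dir, f"{i}_{j}.png")
            image.save(path)  # a PIL image when a real pipeline is used
            saved.append(path)
    return saved
```

With the full repository id substituted for the elided one, `render_samples(load_pipeline(model_id), SAMPLE_PROMPTS)` would write one image per prompt. For repeatable results, a seeded generator can be forwarded, for example `generator=torch.Generator("cuda").manual_seed(0)`.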
