Update README.md

README.md

@@ -20,7 +20,7 @@ metrics:

---

- > [!TIP]

+

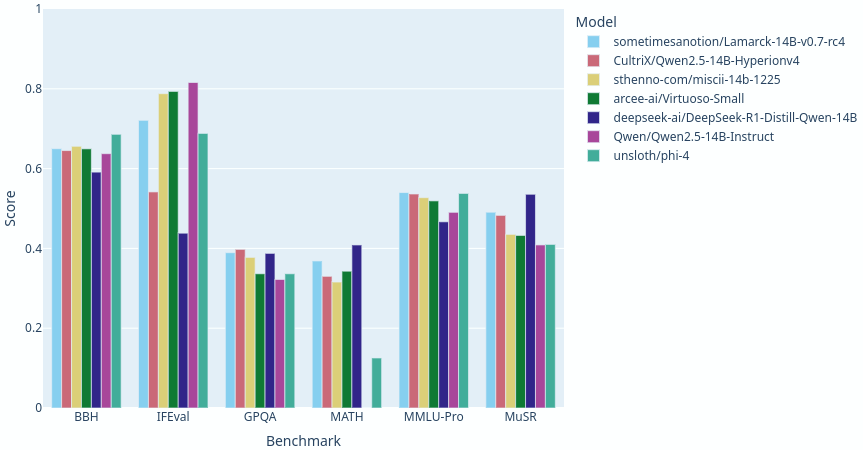

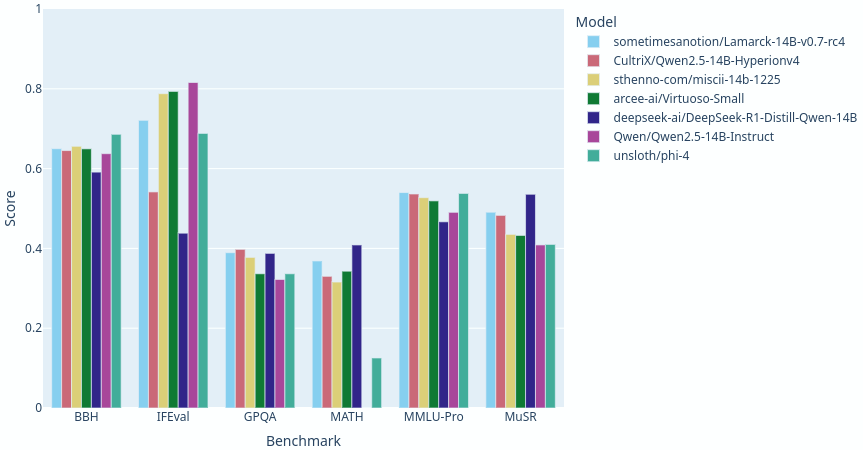

> [!TIP] With no regressions, only gains over the previous release, this version of Lamarck has [broken the 41.0 average](https://shorturl.at/jUqEk) maximum for 14B parameter models, and as of this writing, ranks #8 among models under 70B parameters on the Open LLM Leaderboard. Given the respectable performance in the 32B range, I think Lamarck deserves his shades. A little layer analysis in the 14B range goes a long, long way.

Lamarck 14B v0.7: A generalist merge with emphasis on multi-step reasoning, prose, and multi-language ability. The 14B parameter model class has a lot of strong performers, and Lamarck strives to be well-rounded and solid:
