url | repository_url | labels_url | comments_url | events_url | html_url | id | node_id | number | title | user | labels | state | locked | assignee | assignees | milestone | comments | created_at | updated_at | closed_at | author_association | active_lock_reason | draft | pull_request | body | reactions | timeline_url | performed_via_github_app | state_reason | is_pull_request |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

https://api.github.com/repos/huggingface/datasets/issues/3346 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3346/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3346/comments | https://api.github.com/repos/huggingface/datasets/issues/3346/events | https://github.com/huggingface/datasets/issues/3346 | 1,067,632,365 | I_kwDODunzps4_osbt | 3,346 | Failed to convert `string` with pyarrow for QED since 1.15.0 | {

"avatar_url": "https://avatars.githubusercontent.com/u/4812544?v=4",

"events_url": "https://api.github.com/users/tianjianjiang/events{/privacy}",

"followers_url": "https://api.github.com/users/tianjianjiang/followers",

"following_url": "https://api.github.com/users/tianjianjiang/following{/other_user}",

"gists_url": "https://api.github.com/users/tianjianjiang/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/tianjianjiang",

"id": 4812544,

"login": "tianjianjiang",

"node_id": "MDQ6VXNlcjQ4MTI1NDQ=",

"organizations_url": "https://api.github.com/users/tianjianjiang/orgs",

"received_events_url": "https://api.github.com/users/tianjianjiang/received_events",

"repos_url": "https://api.github.com/users/tianjianjiang/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/tianjianjiang/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/tianjianjiang/subscriptions",

"type": "User",

"url": "https://api.github.com/users/tianjianjiang"

} | [

{

"color": "d73a4a",

"default": true,

"description": "Something isn't working",

"id": 1935892857,

"name": "bug",

"node_id": "MDU6TGFiZWwxOTM1ODkyODU3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/bug"

}

] | closed | false | {

"avatar_url": "https://avatars.githubusercontent.com/u/47462742?v=4",

"events_url": "https://api.github.com/users/mariosasko/events{/privacy}",

"followers_url": "https://api.github.com/users/mariosasko/followers",

"following_url": "https://api.github.com/users/mariosasko/following{/other_user}",

"gists_url": "https://api.github.com/users/mariosasko/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/mariosasko",

"id": 47462742,

"login": "mariosasko",

"node_id": "MDQ6VXNlcjQ3NDYyNzQy",

"organizations_url": "https://api.github.com/users/mariosasko/orgs",

"received_events_url": "https://api.github.com/users/mariosasko/received_events",

"repos_url": "https://api.github.com/users/mariosasko/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/mariosasko/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/mariosasko/subscriptions",

"type": "User",

"url": "https://api.github.com/users/mariosasko"

} | [

{

"avatar_url": "https://avatars.githubusercontent.com/u/47462742?v=4",

"events_url": "https://api.github.com/users/mariosasko/events{/privacy}",

"followers_url": "https://api.github.com/users/mariosasko/followers",

"following_url": "https://api.github.com/users/mariosasko/following{/other_user}",

"gists_url": "https://api.github.com/users/mariosasko/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/mariosasko",

"id": 47462742,

"login": "mariosasko",

"node_id": "MDQ6VXNlcjQ3NDYyNzQy",

"organizations_url": "https://api.github.com/users/mariosasko/orgs",

"received_events_url": "https://api.github.com/users/mariosasko/received_events",

"repos_url": "https://api.github.com/users/mariosasko/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/mariosasko/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/mariosasko/subscriptions",

"type": "User",

"url": "https://api.github.com/users/mariosasko"

}

] | null | [

"Scratch that, probably the old and incompatible usage of dataset builder from promptsource.",

"Actually, re-opening this issue cause the error persists\r\n\r\n```python\r\n>>> load_dataset(\"qed\")\r\nDownloading and preparing dataset qed/qed (download: 13.43 MiB, generated: 9.70 MiB, post-processed: Unknown size, total: 23.14 MiB) to /home/victor_huggingface_co/.cache/huggingface/datasets/qed/qed/1.0.0/47d8b6f033393aa520a8402d4baf2d6bdc1b2fbde3dc156e595d2ef34caf7d75...\r\n100%|███████████████████████████████████████████████████████████████████████| 2/2 [00:00<00:00, 2228.64it/s]\r\nTraceback (most recent call last): \r\n File \"<stdin>\", line 1, in <module>\r\n File \"/home/victor_huggingface_co/miniconda3/envs/promptsource/lib/python3.7/site-packages/datasets/load.py\", line 1669, in load_dataset\r\n use_auth_token=use_auth_token,\r\n File \"/home/victor_huggingface_co/miniconda3/envs/promptsource/lib/python3.7/site-packages/datasets/builder.py\", line 594, in download_and_prepare\r\n dl_manager=dl_manager, verify_infos=verify_infos, **download_and_prepare_kwargs\r\n File \"/home/victor_huggingface_co/miniconda3/envs/promptsource/lib/python3.7/site-packages/datasets/builder.py\", line 681, in _download_and_prepare\r\n self._prepare_split(split_generator, **prepare_split_kwargs)\r\n File \"/home/victor_huggingface_co/miniconda3/envs/promptsource/lib/python3.7/site-packages/datasets/builder.py\", line 1083, in _prepare_split\r\n num_examples, num_bytes = writer.finalize()\r\n File \"/home/victor_huggingface_co/miniconda3/envs/promptsource/lib/python3.7/site-packages/datasets/arrow_writer.py\", line 468, in finalize\r\n self.write_examples_on_file()\r\n File \"/home/victor_huggingface_co/miniconda3/envs/promptsource/lib/python3.7/site-packages/datasets/arrow_writer.py\", line 339, in write_examples_on_file\r\n pa_array = pa.array(typed_sequence)\r\n File \"pyarrow/array.pxi\", line 229, in pyarrow.lib.array\r\n File \"pyarrow/array.pxi\", line 110, in pyarrow.lib._handle_arrow_array_protocol\r\n File 
\"/home/victor_huggingface_co/miniconda3/envs/promptsource/lib/python3.7/site-packages/datasets/arrow_writer.py\", line 125, in __arrow_array__\r\n out = pa.array(cast_to_python_objects(self.data, only_1d_for_numpy=True), type=type)\r\n File \"pyarrow/array.pxi\", line 315, in pyarrow.lib.array\r\n File \"pyarrow/array.pxi\", line 39, in pyarrow.lib._sequence_to_array\r\n File \"pyarrow/error.pxi\", line 143, in pyarrow.lib.pyarrow_internal_check_status\r\n File \"pyarrow/error.pxi\", line 99, in pyarrow.lib.check_status\r\npyarrow.lib.ArrowInvalid: Could not convert 'in' with type str: tried to convert to boolean\r\n```\r\n\r\nEnvironment (datasets and pyarrow):\r\n\r\n```bash\r\n(promptsource) victor_huggingface_co@victor-dev:~/promptsource$ datasets-cli env\r\n\r\nCopy-and-paste the text below in your GitHub issue.\r\n\r\n- `datasets` version: 1.16.1\r\n- Platform: Linux-5.0.0-1020-gcp-x86_64-with-debian-buster-sid\r\n- Python version: 3.7.11\r\n- PyArrow version: 6.0.1\r\n```\r\n```bash\r\n(promptsource) victor_huggingface_co@victor-dev:~/promptsource$ pip show pyarrow\r\nName: pyarrow\r\nVersion: 6.0.1\r\nSummary: Python library for Apache Arrow\r\nHome-page: https://arrow.apache.org/\r\nAuthor: \r\nAuthor-email: \r\nLicense: Apache License, Version 2.0\r\nLocation: /home/victor_huggingface_co/miniconda3/envs/promptsource/lib/python3.7/site-packages\r\nRequires: numpy\r\nRequired-by: streamlit, datasets\r\n```"

] | "2021-11-30T20:11:42Z" | "2021-12-14T14:39:05Z" | "2021-12-14T14:39:05Z" | CONTRIBUTOR | null | null | null | ## Describe the bug

Loading QED was fine until 1.15.0.

related: bigscience-workshop/promptsource#659, bigscience-workshop/promptsource#670

Not sure where the root cause is, but here are some candidates:

- #3158

- #3120

- #3196

- #2891

## Steps to reproduce the bug

```python

load_dataset("qed")

```

## Expected results

Loading completed.

## Actual results

```shell

ArrowInvalid: Could not convert in with type str: tried to convert to boolean

Traceback:

File "/Users/s0s0cr3/Library/Python/3.9/lib/python/site-packages/streamlit/script_runner.py", line 354, in _run_script

exec(code, module.__dict__)

File "/Users/s0s0cr3/Documents/GitHub/promptsource/promptsource/app.py", line 260, in <module>

dataset = get_dataset(dataset_key, str(conf_option.name) if conf_option else None)

File "/Users/s0s0cr3/Library/Python/3.9/lib/python/site-packages/streamlit/caching.py", line 543, in wrapped_func

return get_or_create_cached_value()

File "/Users/s0s0cr3/Library/Python/3.9/lib/python/site-packages/streamlit/caching.py", line 527, in get_or_create_cached_value

return_value = func(*args, **kwargs)

File "/Users/s0s0cr3/Documents/GitHub/promptsource/promptsource/utils.py", line 49, in get_dataset

builder_instance.download_and_prepare()

File "/Users/s0s0cr3/Library/Python/3.9/lib/python/site-packages/datasets/builder.py", line 607, in download_and_prepare

self._download_and_prepare(

File "/Users/s0s0cr3/Library/Python/3.9/lib/python/site-packages/datasets/builder.py", line 697, in _download_and_prepare

self._prepare_split(split_generator, **prepare_split_kwargs)

File "/Users/s0s0cr3/Library/Python/3.9/lib/python/site-packages/datasets/builder.py", line 1106, in _prepare_split

num_examples, num_bytes = writer.finalize()

File "/Users/s0s0cr3/Library/Python/3.9/lib/python/site-packages/datasets/arrow_writer.py", line 456, in finalize

self.write_examples_on_file()

File "/Users/s0s0cr3/Library/Python/3.9/lib/python/site-packages/datasets/arrow_writer.py", line 325, in write_examples_on_file

pa_array = pa.array(typed_sequence)

File "pyarrow/array.pxi", line 222, in pyarrow.lib.array

File "pyarrow/array.pxi", line 110, in pyarrow.lib._handle_arrow_array_protocol

File "/Users/s0s0cr3/Library/Python/3.9/lib/python/site-packages/datasets/arrow_writer.py", line 121, in __arrow_array__

out = pa.array(cast_to_python_objects(self.data, only_1d_for_numpy=True), type=type)

File "pyarrow/array.pxi", line 305, in pyarrow.lib.array

File "pyarrow/array.pxi", line 39, in pyarrow.lib._sequence_to_array

File "pyarrow/error.pxi", line 122, in pyarrow.lib.pyarrow_internal_check_status

File "pyarrow/error.pxi", line 84, in pyarrow.lib.check_status

```

## Environment info

<!-- You can run the command `datasets-cli env` and copy-and-paste its output below. -->

- `datasets` version: 1.15.0, 1.16.1

- Platform: macOS 10.15.7 or above

- Python version: 3.7.12 and 3.9

- PyArrow version: 3.0.0, 5.0.0, 6.0.1

| {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/3346/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/3346/timeline | null | completed | false |

https://api.github.com/repos/huggingface/datasets/issues/1408 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/1408/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/1408/comments | https://api.github.com/repos/huggingface/datasets/issues/1408/events | https://github.com/huggingface/datasets/pull/1408 | 760,590,589 | MDExOlB1bGxSZXF1ZXN0NTM1Mzk3MTAw | 1,408 | adding fake-news-english | {

"avatar_url": "https://avatars.githubusercontent.com/u/15351802?v=4",

"events_url": "https://api.github.com/users/MisbahKhan789/events{/privacy}",

"followers_url": "https://api.github.com/users/MisbahKhan789/followers",

"following_url": "https://api.github.com/users/MisbahKhan789/following{/other_user}",

"gists_url": "https://api.github.com/users/MisbahKhan789/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/MisbahKhan789",

"id": 15351802,

"login": "MisbahKhan789",

"node_id": "MDQ6VXNlcjE1MzUxODAy",

"organizations_url": "https://api.github.com/users/MisbahKhan789/orgs",

"received_events_url": "https://api.github.com/users/MisbahKhan789/received_events",

"repos_url": "https://api.github.com/users/MisbahKhan789/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/MisbahKhan789/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/MisbahKhan789/subscriptions",

"type": "User",

"url": "https://api.github.com/users/MisbahKhan789"

} | [] | closed | false | null | [] | null | [

"also don't forget to format your code using `make style` to fix the CI"

] | "2020-12-09T19:02:07Z" | "2020-12-13T00:49:19Z" | "2020-12-13T00:49:19Z" | CONTRIBUTOR | null | 0 | {

"diff_url": "https://github.com/huggingface/datasets/pull/1408.diff",

"html_url": "https://github.com/huggingface/datasets/pull/1408",

"merged_at": null,

"patch_url": "https://github.com/huggingface/datasets/pull/1408.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/1408"

} | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/1408/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/1408/timeline | null | null | true |

|

https://api.github.com/repos/huggingface/datasets/issues/6136 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/6136/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/6136/comments | https://api.github.com/repos/huggingface/datasets/issues/6136/events | https://github.com/huggingface/datasets/issues/6136 | 1,844,887,866 | I_kwDODunzps5t9sE6 | 6,136 | CI check_code_quality error: E721 Do not compare types, use `isinstance()` | {

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova"

} | [

{

"color": "d4c5f9",

"default": false,

"description": "Maintenance tasks",

"id": 4296013012,

"name": "maintenance",

"node_id": "LA_kwDODunzps8AAAABAA_01A",

"url": "https://api.github.com/repos/huggingface/datasets/labels/maintenance"

}

] | closed | false | {

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova"

} | [

{

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova"

}

] | null | [] | "2023-08-10T10:19:50Z" | "2023-08-10T11:22:58Z" | "2023-08-10T11:22:58Z" | MEMBER | null | null | null | After the latest release of `ruff` (https://pypi.org/project/ruff/0.0.284/), we get the following CI error:

```

src/datasets/utils/py_utils.py:689:12: E721 Do not compare types, use `isinstance()`

``` | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/6136/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/6136/timeline | null | completed | false |

https://api.github.com/repos/huggingface/datasets/issues/1491 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/1491/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/1491/comments | https://api.github.com/repos/huggingface/datasets/issues/1491/events | https://github.com/huggingface/datasets/pull/1491 | 762,920,920 | MDExOlB1bGxSZXF1ZXN0NTM3NDIxMTc3 | 1,491 | added opus GNOME data | {

"avatar_url": "https://avatars.githubusercontent.com/u/22396042?v=4",

"events_url": "https://api.github.com/users/rkc007/events{/privacy}",

"followers_url": "https://api.github.com/users/rkc007/followers",

"following_url": "https://api.github.com/users/rkc007/following{/other_user}",

"gists_url": "https://api.github.com/users/rkc007/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/rkc007",

"id": 22396042,

"login": "rkc007",

"node_id": "MDQ6VXNlcjIyMzk2MDQy",

"organizations_url": "https://api.github.com/users/rkc007/orgs",

"received_events_url": "https://api.github.com/users/rkc007/received_events",

"repos_url": "https://api.github.com/users/rkc007/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/rkc007/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/rkc007/subscriptions",

"type": "User",

"url": "https://api.github.com/users/rkc007"

} | [] | closed | false | null | [] | null | [

"merging since the CI is fixed on master"

] | "2020-12-11T21:21:51Z" | "2020-12-17T14:20:23Z" | "2020-12-17T14:20:23Z" | CONTRIBUTOR | null | 0 | {

"diff_url": "https://github.com/huggingface/datasets/pull/1491.diff",

"html_url": "https://github.com/huggingface/datasets/pull/1491",

"merged_at": "2020-12-17T14:20:23Z",

"patch_url": "https://github.com/huggingface/datasets/pull/1491.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/1491"

} | Dataset : http://opus.nlpl.eu/GNOME.php | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/1491/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/1491/timeline | null | null | true |

https://api.github.com/repos/huggingface/datasets/issues/707 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/707/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/707/comments | https://api.github.com/repos/huggingface/datasets/issues/707/events | https://github.com/huggingface/datasets/issues/707 | 713,954,666 | MDU6SXNzdWU3MTM5NTQ2NjY= | 707 | Requirements should specify pyarrow<1 | {

"avatar_url": "https://avatars.githubusercontent.com/u/918541?v=4",

"events_url": "https://api.github.com/users/mathcass/events{/privacy}",

"followers_url": "https://api.github.com/users/mathcass/followers",

"following_url": "https://api.github.com/users/mathcass/following{/other_user}",

"gists_url": "https://api.github.com/users/mathcass/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/mathcass",

"id": 918541,

"login": "mathcass",

"node_id": "MDQ6VXNlcjkxODU0MQ==",

"organizations_url": "https://api.github.com/users/mathcass/orgs",

"received_events_url": "https://api.github.com/users/mathcass/received_events",

"repos_url": "https://api.github.com/users/mathcass/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/mathcass/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/mathcass/subscriptions",

"type": "User",

"url": "https://api.github.com/users/mathcass"

} | [] | closed | false | null | [] | null | [

"Hello @mathcass I would want to work on this issue. May I do the same? ",

"@punitaojha, certainly. Feel free to work on this. Let me know if you need any help or clarity.",

"Hello @mathcass \r\n1. I did fork the repository and clone the same on my local system. \r\n\r\n2. Then learnt about how we can publish our package on pypi.org. Also, found some instructions on same in setup.py documentation.\r\n\r\n3. Then I Perplexity document link that you shared above. I created a colab link from there keep both tensorflow and pytorch means a mixed option and tried to run it in colab but I encountered no errors at a point where you mentioned. Can you help me to figure out the issue. \r\n\r\n4.Here is the link of the colab file with my saved responses. \r\nhttps://colab.research.google.com/drive/1hfYz8Ira39FnREbxgwa_goZWpOojp2NH?usp=sharing",

"Also, please share some links which made you conclude that pyarrow < 1 would help. ",

"Access granted for the colab link. ",

"Thanks for looking at this @punitaojha and thanks for sharing the notebook. \r\n\r\nI just tried to reproduce this on my own (based on the environment where I had this issue) and I can't reproduce it somehow. If I run into this again, I'll include some steps to reproduce it. I'll close this as invalid. \r\n\r\nThanks again. ",

"I am sorry for hijacking this closed issue, but I believe I was able to reproduce this very issue. Strangely enough, it also turned out that running `pip install \"pyarrow<1\" --upgrade` did indeed fix the issue (PyArrow was installed in version `0.14.1` in my case).\r\n\r\nPlease see the Colab below:\r\n\r\nhttps://colab.research.google.com/drive/15QQS3xWjlKW2aK0J74eEcRFuhXUddUST\r\n\r\nThanks!"

] | "2020-10-02T23:39:39Z" | "2020-12-04T08:22:39Z" | "2020-10-04T20:50:28Z" | NONE | null | null | null | I was looking at the docs on [Perplexity](https://huggingface.co/transformers/perplexity.html) via GPT2. When you load datasets and try to load Wikitext, you get the error,

```

module 'pyarrow' has no attribute 'PyExtensionType'

```

I traced it back to datasets having installed PyArrow 1.0.1, but there's no pinning in the setup file.

https://github.com/huggingface/datasets/blob/e86a2a8f869b91654e782c9133d810bb82783200/setup.py#L68

Downgrading by installing `pip install "pyarrow<1"` resolved the issue. | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/707/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/707/timeline | null | completed | false |

https://api.github.com/repos/huggingface/datasets/issues/1395 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/1395/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/1395/comments | https://api.github.com/repos/huggingface/datasets/issues/1395/events | https://github.com/huggingface/datasets/pull/1395 | 760,448,255 | MDExOlB1bGxSZXF1ZXN0NTM1Mjc4MTQ2 | 1,395 | Add WikiSource Dataset | {

"avatar_url": "https://avatars.githubusercontent.com/u/1183441?v=4",

"events_url": "https://api.github.com/users/abhishekkrthakur/events{/privacy}",

"followers_url": "https://api.github.com/users/abhishekkrthakur/followers",

"following_url": "https://api.github.com/users/abhishekkrthakur/following{/other_user}",

"gists_url": "https://api.github.com/users/abhishekkrthakur/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/abhishekkrthakur",

"id": 1183441,

"login": "abhishekkrthakur",

"node_id": "MDQ6VXNlcjExODM0NDE=",

"organizations_url": "https://api.github.com/users/abhishekkrthakur/orgs",

"received_events_url": "https://api.github.com/users/abhishekkrthakur/received_events",

"repos_url": "https://api.github.com/users/abhishekkrthakur/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/abhishekkrthakur/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/abhishekkrthakur/subscriptions",

"type": "User",

"url": "https://api.github.com/users/abhishekkrthakur"

} | [] | closed | false | null | [] | null | [

"@lhoestq fixed :) "

] | "2020-12-09T15:52:06Z" | "2020-12-14T10:24:14Z" | "2020-12-14T10:24:13Z" | MEMBER | null | 0 | {

"diff_url": "https://github.com/huggingface/datasets/pull/1395.diff",

"html_url": "https://github.com/huggingface/datasets/pull/1395",

"merged_at": "2020-12-14T10:24:13Z",

"patch_url": "https://github.com/huggingface/datasets/pull/1395.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/1395"

} | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/1395/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/1395/timeline | null | null | true |

|

https://api.github.com/repos/huggingface/datasets/issues/3725 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3725/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3725/comments | https://api.github.com/repos/huggingface/datasets/issues/3725/events | https://github.com/huggingface/datasets/pull/3725 | 1,138,835,625 | PR_kwDODunzps4y3bOG | 3,725 | Pin pandas to avoid bug in streaming mode | {

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova"

} | [] | closed | false | null | [] | null | [] | "2022-02-15T15:21:00Z" | "2022-02-15T15:52:38Z" | "2022-02-15T15:52:37Z" | MEMBER | null | 0 | {

"diff_url": "https://github.com/huggingface/datasets/pull/3725.diff",

"html_url": "https://github.com/huggingface/datasets/pull/3725",

"merged_at": "2022-02-15T15:52:37Z",

"patch_url": "https://github.com/huggingface/datasets/pull/3725.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3725"

} | Temporarily pin pandas version to avoid bug in streaming mode (patching no longer works).

Related to #3724. | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/3725/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/3725/timeline | null | null | true |

https://api.github.com/repos/huggingface/datasets/issues/4405 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/4405/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/4405/comments | https://api.github.com/repos/huggingface/datasets/issues/4405/events | https://github.com/huggingface/datasets/issues/4405 | 1,248,574,087 | I_kwDODunzps5Ka7qH | 4,405 | [TypeError: Couldn't cast array of type] Cannot process dataset in v2.2.2 | {

"avatar_url": "https://avatars.githubusercontent.com/u/39762734?v=4",

"events_url": "https://api.github.com/users/jiangwangyi/events{/privacy}",

"followers_url": "https://api.github.com/users/jiangwangyi/followers",

"following_url": "https://api.github.com/users/jiangwangyi/following{/other_user}",

"gists_url": "https://api.github.com/users/jiangwangyi/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/jiangwangyi",

"id": 39762734,

"login": "jiangwangyi",

"node_id": "MDQ6VXNlcjM5NzYyNzM0",

"organizations_url": "https://api.github.com/users/jiangwangyi/orgs",

"received_events_url": "https://api.github.com/users/jiangwangyi/received_events",

"repos_url": "https://api.github.com/users/jiangwangyi/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/jiangwangyi/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/jiangwangyi/subscriptions",

"type": "User",

"url": "https://api.github.com/users/jiangwangyi"

} | [

{

"color": "d73a4a",

"default": true,

"description": "Something isn't working",

"id": 1935892857,

"name": "bug",

"node_id": "MDU6TGFiZWwxOTM1ODkyODU3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/bug"

}

] | closed | false | null | [] | null | [

"And if the problem is that the way I am to construct the {Entity Type: list of spans} makes entity types without any spans hard to handle, is there a better way to meet the demand? Although I have verified that to make entity types without any spans to behave like `entity_chunk[label] = [[\"\"]]` can perform normally, I still wonder if there is a more elegant way?"

] | "2022-05-25T18:56:43Z" | "2022-06-07T14:27:20Z" | "2022-06-07T14:27:20Z" | NONE | null | null | null | ## Describe the bug

I am trying to process the [conll2012_ontonotesv5](https://huggingface.co/datasets/conll2012_ontonotesv5) dataset in `datasets` v2.2.2 and am running into a type error when casting the features.

## Steps to reproduce the bug

```python
import os
from typing import (
    List,
    Dict,
)
from collections import (
    defaultdict,
)
from dataclasses import (
    dataclass,
)
from datasets import (
    load_dataset,
)


@dataclass
class ConllConverter:
    path: str
    name: str
    cache_dir: str

    def __post_init__(
        self,
    ):
        self.dataset = load_dataset(
            path=self.path,
            name=self.name,
            cache_dir=self.cache_dir,
        )

    def convert(
        self,
    ):
        class_label = self.dataset["train"].features["sentences"][0]["named_entities"].feature
        # label_set = list(set([
        #     label.split("-")[1] if label != "O" else label for label in class_label.names
        # ]))

        def prepare_chunk(token, entity):
            assert len(token) == len(entity)
            # Sequence length
            length = len(token)
            # Variable used
            entity_chunk = defaultdict(list)
            idx = flag = 0
            # While loop
            while idx < length:
                if entity[idx] == "O":
                    flag += 1
                    idx += 1
                else:
                    iob_tp, lab_tp = entity[idx].split("-")
                    assert iob_tp == "B"
                    idx += 1
                    while idx < length and entity[idx].startswith("I-"):
                        idx += 1
                    entity_chunk[lab_tp].append(token[flag: idx])
                    flag = idx
            entity_chunk = dict(entity_chunk)
            # for label in label_set:
            #     if label != "O" and label not in entity_chunk.keys():
            #         entity_chunk[label] = None
            return entity_chunk

        def prepare_features(
            batch: Dict[str, List],
        ) -> Dict[str, List]:
            sentence = [
                sent for doc_sent in batch["sentences"] for sent in doc_sent
            ]
            feature = {
                "sentence": list(),
            }
            for sent in sentence:
                token = sent["words"]
                entity = class_label.int2str(sent["named_entities"])
                entity_chunk = prepare_chunk(token, entity)
                sent_feat = {
                    "token": token,
                    "entity": entity,
                    "entity_chunk": entity_chunk,
                }
                feature["sentence"].append(sent_feat)
            return feature

        column_names = self.dataset.column_names["train"]
        dataset = self.dataset.map(
            function=prepare_features,
            with_indices=False,
            batched=True,
            batch_size=3,
            remove_columns=column_names,
            num_proc=1,
        )
        dataset.save_to_disk(
            dataset_dict_path=os.path.join("data", self.path, self.name)
        )


if __name__ == "__main__":
    converter = ConllConverter(
        path="conll2012_ontonotesv5",
        name="english_v4",
        cache_dir="cache",
    )
    converter.convert()
```

## Expected results

I want to use the dataset for an NER task and convert the label list into a {Entity Type: list of spans} format.

## Actual results

<details>

<summary>Traceback</summary>

```python

Traceback (most recent call last): | 0/81 [00:00<?, ?ba/s]

File "/home2/jiangwangyi/miniconda3/lib/python3.9/site-packages/multiprocess/pool.py", line 125, in worker

result = (True, func(*args, **kwds))

File "/home2/jiangwangyi/miniconda3/lib/python3.9/site-packages/datasets/arrow_dataset.py", line 532, in wrapper

out: Union["Dataset", "DatasetDict"] = func(self, *args, **kwargs)

File "/home2/jiangwangyi/miniconda3/lib/python3.9/site-packages/datasets/arrow_dataset.py", line 499, in wrapper

out: Union["Dataset", "DatasetDict"] = func(self, *args, **kwargs)

File "/home2/jiangwangyi/miniconda3/lib/python3.9/site-packages/datasets/fingerprint.py", line 458, in wrapper

out = func(self, *args, **kwargs)

File "/home2/jiangwangyi/miniconda3/lib/python3.9/site-packages/datasets/arrow_dataset.py", line 2751, in _map_single

writer.write_batch(batch)

File "/home2/jiangwangyi/miniconda3/lib/python3.9/site-packages/datasets/arrow_writer.py", line 503, in write_batch

arrays.append(pa.array(typed_sequence))

File "pyarrow/array.pxi", line 230, in pyarrow.lib.array

File "pyarrow/array.pxi", line 110, in pyarrow.lib._handle_arrow_array_protocol

File "/home2/jiangwangyi/miniconda3/lib/python3.9/site-packages/datasets/arrow_writer.py", line 198, in __arrow_array__

out = cast_array_to_feature(out, type, allow_number_to_str=not self.trying_type)

File "/home2/jiangwangyi/miniconda3/lib/python3.9/site-packages/datasets/table.py", line 1675, in wrapper

return func(array, *args, **kwargs)

File "/home2/jiangwangyi/miniconda3/lib/python3.9/site-packages/datasets/table.py", line 1793, in cast_array_to_feature

arrays = [_c(array.field(name), subfeature) for name, subfeature in feature.items()]

File "/home2/jiangwangyi/miniconda3/lib/python3.9/site-packages/datasets/table.py", line 1793, in <listcomp>

arrays = [_c(array.field(name), subfeature) for name, subfeature in feature.items()]

File "/home2/jiangwangyi/miniconda3/lib/python3.9/site-packages/datasets/table.py", line 1675, in wrapper

return func(array, *args, **kwargs)

File "/home2/jiangwangyi/miniconda3/lib/python3.9/site-packages/datasets/table.py", line 1844, in cast_array_to_feature

raise TypeError(f"Couldn't cast array of type\n{array.type}\nto\n{feature}")

TypeError: Couldn't cast array of type

struct<CARDINAL: list<item: list<item: string>>, DATE: list<item: list<item: string>>, EVENT: list<item: list<item: string>>, FAC: list<item: list<item: string>>, GPE: list<item: list<item: string>>, LANGUAGE: list<item: list<item: string>>, LAW: list<item: list<item: string>>, LOC: list<item: list<item: string>>, MONEY: list<item: list<item: string>>, NORP: list<item: list<item: string>>, ORDINAL: list<item: list<item: string>>, ORG: list<item: list<item: string>>, PERCENT: list<item: list<item: string>>, PERSON: list<item: list<item: string>>, QUANTITY: list<item: list<item: string>>, TIME: list<item: list<item: string>>, WORK_OF_ART: list<item: list<item: string>>>

to

{'CARDINAL': Sequence(feature=Sequence(feature=Value(dtype='string', id=None), length=-1, id=None), length=-1, id=None), 'DATE': Sequence(feature=Sequence(feature=Value(dtype='string', id=None), length=-1, id=None), length=-1, id=None), 'EVENT': Sequence(feature=Sequence(feature=Value(dtype='string', id=None), length=-1, id=None), length=-1, id=None), 'FAC': Sequence(feature=Sequence(feature=Value(dtype='string', id=None), length=-1, id=None), length=-1, id=None), 'GPE': Sequence(feature=Sequence(feature=Value(dtype='string', id=None), length=-1, id=None), length=-1, id=None), 'LAW': Sequence(feature=Sequence(feature=Value(dtype='string', id=None), length=-1, id=None), length=-1, id=None), 'LOC': Sequence(feature=Sequence(feature=Value(dtype='string', id=None), length=-1, id=None), length=-1, id=None), 'MONEY': Sequence(feature=Sequence(feature=Value(dtype='string', id=None), length=-1, id=None), length=-1, id=None), 'NORP': Sequence(feature=Sequence(feature=Value(dtype='string', id=None), length=-1, id=None), length=-1, id=None), 'ORDINAL': Sequence(feature=Sequence(feature=Value(dtype='string', id=None), length=-1, id=None), length=-1, id=None), 'ORG': Sequence(feature=Sequence(feature=Value(dtype='string', id=None), length=-1, id=None), length=-1, id=None), 'PERCENT': Sequence(feature=Sequence(feature=Value(dtype='string', id=None), length=-1, id=None), length=-1, id=None), 'PERSON': Sequence(feature=Sequence(feature=Value(dtype='string', id=None), length=-1, id=None), length=-1, id=None), 'PRODUCT': Sequence(feature=Sequence(feature=Value(dtype='string', id=None), length=-1, id=None), length=-1, id=None), 'QUANTITY': Sequence(feature=Sequence(feature=Value(dtype='string', id=None), length=-1, id=None), length=-1, id=None), 'TIME': Sequence(feature=Sequence(feature=Value(dtype='string', id=None), length=-1, id=None), length=-1, id=None), 'WORK_OF_ART': Sequence(feature=Sequence(feature=Value(dtype='string', id=None), length=-1, id=None), length=-1, id=None)}

"""

The above exception was the direct cause of the following exception:

Traceback (most recent call last):

File "/home2/jiangwangyi/workspace/work/Entity/dataconverter.py", line 110, in <module>

converter.convert()

File "/home2/jiangwangyi/workspace/work/Entity/dataconverter.py", line 91, in convert

dataset = self.dataset.map(

File "/home2/jiangwangyi/miniconda3/lib/python3.9/site-packages/datasets/dataset_dict.py", line 770, in map

{

File "/home2/jiangwangyi/miniconda3/lib/python3.9/site-packages/datasets/dataset_dict.py", line 771, in <dictcomp>

k: dataset.map(

File "/home2/jiangwangyi/miniconda3/lib/python3.9/site-packages/datasets/arrow_dataset.py", line 2459, in map

transformed_shards[index] = async_result.get()

File "/home2/jiangwangyi/miniconda3/lib/python3.9/site-packages/multiprocess/pool.py", line 771, in get

raise self._value

TypeError: Couldn't cast array of type

struct<CARDINAL: list<item: list<item: string>>, DATE: list<item: list<item: string>>, EVENT: list<item: list<item: string>>, FAC: list<item: list<item: string>>, GPE: list<item: list<item: string>>, LANGUAGE: list<item: list<item: string>>, LAW: list<item: list<item: string>>, LOC: list<item: list<item: string>>, MONEY: list<item: list<item: string>>, NORP: list<item: list<item: string>>, ORDINAL: list<item: list<item: string>>, ORG: list<item: list<item: string>>, PERCENT: list<item: list<item: string>>, PERSON: list<item: list<item: string>>, QUANTITY: list<item: list<item: string>>, TIME: list<item: list<item: string>>, WORK_OF_ART: list<item: list<item: string>>>

to

{'CARDINAL': Sequence(feature=Sequence(feature=Value(dtype='string', id=None), length=-1, id=None), length=-1, id=None), 'DATE': Sequence(feature=Sequence(feature=Value(dtype='string', id=None), length=-1, id=None), length=-1, id=None), 'EVENT': Sequence(feature=Sequence(feature=Value(dtype='string', id=None), length=-1, id=None), length=-1, id=None), 'FAC': Sequence(feature=Sequence(feature=Value(dtype='string', id=None), length=-1, id=None), length=-1, id=None), 'GPE': Sequence(feature=Sequence(feature=Value(dtype='string', id=None), length=-1, id=None), length=-1, id=None), 'LAW': Sequence(feature=Sequence(feature=Value(dtype='string', id=None), length=-1, id=None), length=-1, id=None), 'LOC': Sequence(feature=Sequence(feature=Value(dtype='string', id=None), length=-1, id=None), length=-1, id=None), 'MONEY': Sequence(feature=Sequence(feature=Value(dtype='string', id=None), length=-1, id=None), length=-1, id=None), 'NORP': Sequence(feature=Sequence(feature=Value(dtype='string', id=None), length=-1, id=None), length=-1, id=None), 'ORDINAL': Sequence(feature=Sequence(feature=Value(dtype='string', id=None), length=-1, id=None), length=-1, id=None), 'ORG': Sequence(feature=Sequence(feature=Value(dtype='string', id=None), length=-1, id=None), length=-1, id=None), 'PERCENT': Sequence(feature=Sequence(feature=Value(dtype='string', id=None), length=-1, id=None), length=-1, id=None), 'PERSON': Sequence(feature=Sequence(feature=Value(dtype='string', id=None), length=-1, id=None), length=-1, id=None), 'PRODUCT': Sequence(feature=Sequence(feature=Value(dtype='string', id=None), length=-1, id=None), length=-1, id=None), 'QUANTITY': Sequence(feature=Sequence(feature=Value(dtype='string', id=None), length=-1, id=None), length=-1, id=None), 'TIME': Sequence(feature=Sequence(feature=Value(dtype='string', id=None), length=-1, id=None), length=-1, id=None), 'WORK_OF_ART': Sequence(feature=Sequence(feature=Value(dtype='string', id=None), length=-1, id=None), length=-1, id=None)}

```

</details>
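In the traceback above, the array being cast has a `LANGUAGE` field and no `PRODUCT` field, while the target features have `PRODUCT` and no `LANGUAGE`. This suggests that different batches produce dictionaries with different sets of keys. The following is a minimal sketch, not a confirmed fix, of a chunking helper that always emits every entity type, using an empty list when a sentence has no span of that type. The function name `chunk_entities` and the `label_set` argument are hypothetical and are not part of `datasets`.

```python
from typing import Dict, List, Sequence


def chunk_entities(
    tokens: Sequence[str],
    tags: Sequence[str],
    label_set: Sequence[str],
) -> Dict[str, List[List[str]]]:
    """Group IOB-tagged tokens into spans keyed by entity type.

    Every label in `label_set` is present in the result, so all rows
    share the same keys even when some entity type has no span.
    """
    assert len(tokens) == len(tags)
    # Start from a fixed set of keys instead of a defaultdict.
    chunks: Dict[str, List[List[str]]] = {label: [] for label in label_set}
    idx = 0
    while idx < len(tags):
        if tags[idx] == "O":
            idx += 1
            continue
        # Split only on the first "-" so labels such as WORK_OF_ART stay whole.
        prefix, label = tags[idx].split("-", 1)
        assert prefix == "B"
        start = idx
        idx += 1
        # Consume the continuation tags of the current span.
        while idx < len(tags) and tags[idx].startswith("I-"):
            idx += 1
        chunks.setdefault(label, []).append(list(tokens[start:idx]))
    return chunks


example = chunk_entities(
    tokens=["Barack", "Obama", "visited", "Paris"],
    tags=["B-PERSON", "I-PERSON", "O", "B-GPE"],
    label_set=["GPE", "PERSON", "PRODUCT"],
)
```

Whether empty lists alone are enough for type inference in `datasets` is not verified here. Passing an explicit schema through the `features` argument of `map` may still be necessary.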

## Environment info

<!-- You can run the command `datasets-cli env` and copy-and-paste its output below. -->

- `datasets` version: 2.2.2

- Platform: Ubuntu 18.04

- Python version: 3.9.7

- PyArrow version: 7.0.0

| {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/4405/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/4405/timeline | null | completed | false |

https://api.github.com/repos/huggingface/datasets/issues/3952 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3952/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3952/comments | https://api.github.com/repos/huggingface/datasets/issues/3952/events | https://github.com/huggingface/datasets/issues/3952 | 1,171,895,531 | I_kwDODunzps5F2bTr | 3,952 | Checksum error for glue sst2, stsb, rte etc datasets | {

"avatar_url": "https://avatars.githubusercontent.com/u/22090962?v=4",

"events_url": "https://api.github.com/users/ravindra-ut/events{/privacy}",

"followers_url": "https://api.github.com/users/ravindra-ut/followers",

"following_url": "https://api.github.com/users/ravindra-ut/following{/other_user}",

"gists_url": "https://api.github.com/users/ravindra-ut/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/ravindra-ut",

"id": 22090962,

"login": "ravindra-ut",

"node_id": "MDQ6VXNlcjIyMDkwOTYy",

"organizations_url": "https://api.github.com/users/ravindra-ut/orgs",

"received_events_url": "https://api.github.com/users/ravindra-ut/received_events",

"repos_url": "https://api.github.com/users/ravindra-ut/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/ravindra-ut/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/ravindra-ut/subscriptions",

"type": "User",

"url": "https://api.github.com/users/ravindra-ut"

} | [

{

"color": "d73a4a",

"default": true,

"description": "Something isn't working",

"id": 1935892857,

"name": "bug",

"node_id": "MDU6TGFiZWwxOTM1ODkyODU3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/bug"

}

] | closed | false | null | [] | null | [

"Hi, @ravindra-ut.\r\n\r\nI'm sorry but I can't reproduce your problem:\r\n```python\r\nIn [1]: from datasets import load_dataset\r\n\r\nIn [2]: ds = load_dataset(\"glue\", \"sst2\")\r\nDownloading builder script: 28.8kB [00:00, 11.6MB/s] \r\nDownloading metadata: 28.7kB [00:00, 12.9MB/s] \r\nDownloading and preparing dataset glue/sst2 (download: 7.09 MiB, generated: 4.81 MiB, post-processed: Unknown size, total: 11.90 MiB) to .../.cache/huggingface/datasets/glue/sst2/1.0.0/dacbe3125aa31d7f70367a07a8a9e72a5a0bfeb5fc42e75c9db75b96da6053ad...\r\nDownloading data: 100%|███████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 7.44M/7.44M [00:01<00:00, 5.82MB/s]\r\nDataset glue downloaded and prepared to .../.cache/huggingface/datasets/glue/sst2/1.0.0/dacbe3125aa31d7f70367a07a8a9e72a5a0bfeb5fc42e75c9db75b96da6053ad. Subsequent calls will reuse this data. \r\n100%|███████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 3/3 [00:00<00:00, 895.96it/s]\r\n\r\nIn [3]: ds\r\nOut[2]: \r\nDatasetDict({\r\n train: Dataset({\r\n features: ['sentence', 'label', 'idx'],\r\n num_rows: 67349\r\n })\r\n validation: Dataset({\r\n features: ['sentence', 'label', 'idx'],\r\n num_rows: 872\r\n })\r\n test: Dataset({\r\n features: ['sentence', 'label', 'idx'],\r\n num_rows: 1821\r\n })\r\n})\r\n``` \r\n\r\nMoreover, I see in your traceback that your error was for an URL at https://firebasestorage.googleapis.com\r\nHowever, the URLs were updated on Sep 16, 2020 (`datasets` version 1.0.2) to https://dl.fbaipublicfiles.com: https://github.com/huggingface/datasets/commit/2f03041a21c03abaececb911760c3fe4f420c229\r\n\r\nCould you please try to update `datasets`\r\n```shell\r\npip install -U datasets\r\n```\r\nand then force redownload\r\n```python\r\nds = load_dataset(\"glue\", \"sst2\", 
download_mode=\"force_redownload\")\r\n```\r\nto update the cache?\r\n\r\nPlease, feel free to reopen this issue if the problem persists."

] | "2022-03-17T03:45:47Z" | "2022-03-17T07:10:15Z" | "2022-03-17T07:10:14Z" | NONE | null | null | null | ## Describe the bug

Checksum error for glue sst2, stsb, rte etc datasets

## Steps to reproduce the bug

```python

>>> nlp.load_dataset('glue', 'sst2')

Downloading and preparing dataset glue/sst2 (download: 7.09 MiB, generated: 4.81 MiB, post-processed: Unknown sizetotal: 11.90 MiB) to

Downloading: 100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 73.0/73.0 [00:00<00:00, 18.2kB/s]

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "/Library/Python/3.8/lib/python/site-packages/nlp/load.py", line 548, in load_dataset

builder_instance.download_and_prepare(

File "/Library/Python/3.8/lib/python/site-packages/nlp/builder.py", line 462, in download_and_prepare

self._download_and_prepare(

File "/Library/Python/3.8/lib/python/site-packages/nlp/builder.py", line 521, in _download_and_prepare

verify_checksums(

File "/Library/Python/3.8/lib/python/site-packages/nlp/utils/info_utils.py", line 38, in verify_checksums

raise NonMatchingChecksumError(error_msg + str(bad_urls))

nlp.utils.info_utils.NonMatchingChecksumError: Checksums didn't match for dataset source files:

['https://firebasestorage.googleapis.com/v0/b/mtl-sentence-representations.appspot.com/o/data%2FSST-2.zip?alt=media&token=aabc5f6b-e466-44a2-b9b4-cf6337f84ac8']

```

## Expected results

Loading the dataset should succeed without a checksum error.

## Actual results

```

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "/Library/Python/3.8/lib/python/site-packages/nlp/load.py", line 548, in load_dataset

builder_instance.download_and_prepare(

File "/Library/Python/3.8/lib/python/site-packages/nlp/builder.py", line 462, in download_and_prepare

self._download_and_prepare(

File "/Library/Python/3.8/lib/python/site-packages/nlp/builder.py", line 521, in _download_and_prepare

verify_checksums(

File "/Library/Python/3.8/lib/python/site-packages/nlp/utils/info_utils.py", line 38, in verify_checksums

raise NonMatchingChecksumError(error_msg + str(bad_urls))

nlp.utils.info_utils.NonMatchingChecksumError: Checksums didn't match for dataset source files:

['https://firebasestorage.googleapis.com/v0/b/mtl-sentence-representations.appspot.com/o/data%2FSST-2.zip?alt=media&token=aabc5f6b-e466-44a2-b9b4-cf6337f84ac8']

```

## Environment info

- `datasets` version: '1.18.3'

- Platform: Mac OS

- Python version: Python 3.8.9

- PyArrow version: '7.0.0'

| {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/3952/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/3952/timeline | null | completed | false |

https://api.github.com/repos/huggingface/datasets/issues/5815 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/5815/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/5815/comments | https://api.github.com/repos/huggingface/datasets/issues/5815/events | https://github.com/huggingface/datasets/issues/5815 | 1,693,701,743 | I_kwDODunzps5k89Zv | 5,815 | Easy way to create a Kaggle dataset from a Huggingface dataset? | {

"avatar_url": "https://avatars.githubusercontent.com/u/5355286?v=4",

"events_url": "https://api.github.com/users/hrbigelow/events{/privacy}",

"followers_url": "https://api.github.com/users/hrbigelow/followers",

"following_url": "https://api.github.com/users/hrbigelow/following{/other_user}",

"gists_url": "https://api.github.com/users/hrbigelow/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/hrbigelow",

"id": 5355286,

"login": "hrbigelow",

"node_id": "MDQ6VXNlcjUzNTUyODY=",

"organizations_url": "https://api.github.com/users/hrbigelow/orgs",

"received_events_url": "https://api.github.com/users/hrbigelow/received_events",

"repos_url": "https://api.github.com/users/hrbigelow/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/hrbigelow/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/hrbigelow/subscriptions",

"type": "User",

"url": "https://api.github.com/users/hrbigelow"

} | [] | open | false | null | [] | null | [

"Hi @hrbigelow , I'm no expert for such a question so I'll ping @lhoestq from the `datasets` library (also this issue could be moved there if someone with permission can do it :) )",

"Hi ! Many datasets are made of several files, and how they are parsed often requires a python script. Because of that, datasets like wmt14 are not available as a single file on HF. Though you can create this file using `datasets`:\r\n\r\n```python\r\nfrom datasets import load_dataset\r\n\r\nds = load_dataset(\"wmt14\", \"de-en\", split=\"train\")\r\n\r\nds.to_json(\"wmt14-train.json\")\r\n# OR to parquet, which is compressed:\r\n# ds.to_parquet(\"wmt14-train.parquet\")\r\n```\r\n\r\nWe are also working on providing parquet exports for all datasets, but wmt14 is not supported yet (we're rolling it out for datasets <1GB first). They're usually available in the `refs/convert/parquet` branch (empty for wmt14):\r\n\r\n<img width=\"267\" alt=\"image\" src=\"https://user-images.githubusercontent.com/42851186/235878909-7339f5a4-be19-4ada-85d8-8a50d23acf35.png\">\r\n",

"also cc @nateraw for visibility on this (and cc @osanseviero too)",

"I've requested support for creating a Kaggle dataset from an imported HF dataset repo on their \"forum\" here: https://www.kaggle.com/discussions/product-feedback/427142 (upvotes appreciated 🙂)"

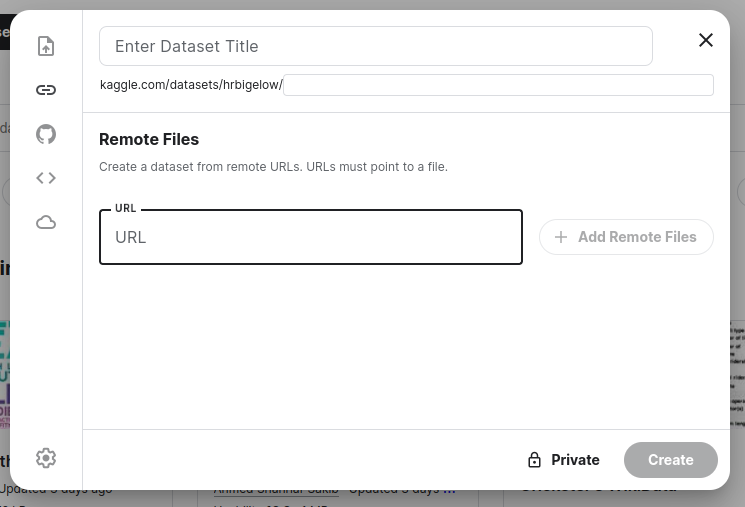

] | "2023-05-02T21:43:33Z" | "2023-07-26T16:13:31Z" | null | NONE | null | null | null | I'm not sure whether this is more appropriately addressed with HuggingFace or Kaggle. I would like to somehow directly create a Kaggle dataset from a HuggingFace Dataset.

While Kaggle does provide the option to create a dataset from a URI, that URI must point to a single file. For example:

Is there some mechanism in HuggingFace to represent a dataset (such as the one returned by `load_dataset('wmt14', 'de-en', split='train')`) as a single file? Or is there some other way to get it into a Kaggle dataset so that I can use the HuggingFace `datasets` module to process and consume it inside a Kaggle notebook?

Thanks in advance!

| {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/5815/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/5815/timeline | null | null | false |

https://api.github.com/repos/huggingface/datasets/issues/2115 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/2115/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/2115/comments | https://api.github.com/repos/huggingface/datasets/issues/2115/events | https://github.com/huggingface/datasets/issues/2115 | 841,283,974 | MDU6SXNzdWU4NDEyODM5NzQ= | 2,115 | The datasets.map() implementation modifies the datatype of os.environ object | {

"avatar_url": "https://avatars.githubusercontent.com/u/19983848?v=4",

"events_url": "https://api.github.com/users/leleamol/events{/privacy}",

"followers_url": "https://api.github.com/users/leleamol/followers",

"following_url": "https://api.github.com/users/leleamol/following{/other_user}",

"gists_url": "https://api.github.com/users/leleamol/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/leleamol",

"id": 19983848,

"login": "leleamol",

"node_id": "MDQ6VXNlcjE5OTgzODQ4",

"organizations_url": "https://api.github.com/users/leleamol/orgs",

"received_events_url": "https://api.github.com/users/leleamol/received_events",

"repos_url": "https://api.github.com/users/leleamol/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/leleamol/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/leleamol/subscriptions",

"type": "User",

"url": "https://api.github.com/users/leleamol"

} | [] | closed | false | null | [] | null | [] | "2021-03-25T20:29:19Z" | "2021-03-26T15:13:52Z" | "2021-03-26T15:13:52Z" | NONE | null | null | null | In our testing, we noticed that the `datasets.map()` implementation changes the type of Python's `os.environ` object from `_Environ` to `dict`.

This causes subsequent calls such as the following to fail:

`

x = os.environ.get("TEST_ENV_VARIABLE_AFTER_dataset_map", default=None)

TypeError: get() takes no keyword arguments

`

It looks like the following line in the `datasets.map` implementation introduced this behavior.

https://github.com/huggingface/datasets/blob/0cb1ac06acb0df44a1cf4128d03a01865faa2504/src/datasets/arrow_dataset.py#L1421

Here is the test script to reproduce this error.

```
from datasets import load_dataset
from transformers import AutoTokenizer
import os


def test_train():
    model_checkpoint = "distilgpt2"
    datasets = load_dataset('wikitext', 'wikitext-2-raw-v1')
    tokenizer = AutoTokenizer.from_pretrained(model_checkpoint, use_fast=True)
    tokenizer.pad_token = tokenizer.eos_token

    def tokenize_function(examples):
        y = tokenizer(examples['text'], truncation=True, max_length=64)
        return y

    x = os.environ.get("TEST_ENV_VARIABLE_BEFORE_dataset_map", default=None)
    print(f"Testing environment variable: TEST_ENV_VARIABLE_BEFORE_dataset_map {x}")
    print(f"Data type of os.environ before datasets.map = {os.environ.__class__.__name__}")
    datasets.map(tokenize_function, batched=True, num_proc=2, remove_columns=["text"])
    print(f"Data type of os.environ after datasets.map = {os.environ.__class__.__name__}")
    x = os.environ.get("TEST_ENV_VARIABLE_AFTER_dataset_map", default=None)
    print(f"Testing environment variable: TEST_ENV_VARIABLE_AFTER_dataset_map {x}")


if __name__ == "__main__":
    test_train()
```
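For context, `os.environ.get(key, default=None)` works on the original `_Environ` mapping, whereas the built-in `dict.get` accepts its default only positionally, which matches the `TypeError` reported above. The following is a minimal sketch, using only the standard library, of one way to override an environment variable temporarily without rebinding `os.environ` itself. The helper `temporary_env` and the variable name `HYPOTHETICAL_FLAG` are illustrative assumptions and do not reflect how `datasets` implements this.

```python
import os
from contextlib import contextmanager


@contextmanager
def temporary_env(name, value):
    """Set os.environ[name] inside the block, then restore the old state."""
    missing = object()  # sentinel: the variable was not set before
    previous = os.environ.get(name, missing)
    os.environ[name] = value  # mutate in place; os.environ is never rebound
    try:
        yield
    finally:
        if previous is missing:
            os.environ.pop(name, None)
        else:
            os.environ[name] = previous


env_type_before = type(os.environ)
with temporary_env("HYPOTHETICAL_FLAG", "false"):
    inside = os.environ.get("HYPOTHETICAL_FLAG", default=None)
env_type_after = type(os.environ)
```

Because the mapping is only mutated and restored key by key, code that runs afterwards still sees the original `os.environ` object and can keep calling `get` with a keyword default.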

| {

"+1": 1,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 1,

"url": "https://api.github.com/repos/huggingface/datasets/issues/2115/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/2115/timeline | null | completed | false |

https://api.github.com/repos/huggingface/datasets/issues/652 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/652/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/652/comments | https://api.github.com/repos/huggingface/datasets/issues/652/events | https://github.com/huggingface/datasets/pull/652 | 705,390,850 | MDExOlB1bGxSZXF1ZXN0NDkwMTI3MjIx | 652 | handle connection error in download_prepared_from_hf_gcs | {

"avatar_url": "https://avatars.githubusercontent.com/u/42851186?v=4",

"events_url": "https://api.github.com/users/lhoestq/events{/privacy}",

"followers_url": "https://api.github.com/users/lhoestq/followers",

"following_url": "https://api.github.com/users/lhoestq/following{/other_user}",

"gists_url": "https://api.github.com/users/lhoestq/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/lhoestq",

"id": 42851186,

"login": "lhoestq",

"node_id": "MDQ6VXNlcjQyODUxMTg2",

"organizations_url": "https://api.github.com/users/lhoestq/orgs",

"received_events_url": "https://api.github.com/users/lhoestq/received_events",

"repos_url": "https://api.github.com/users/lhoestq/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/lhoestq/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/lhoestq/subscriptions",

"type": "User",

"url": "https://api.github.com/users/lhoestq"

} | [] | closed | false | null | [] | null | [] | "2020-09-21T08:21:11Z" | "2020-09-21T08:28:43Z" | "2020-09-21T08:28:42Z" | MEMBER | null | 0 | {

"diff_url": "https://github.com/huggingface/datasets/pull/652.diff",

"html_url": "https://github.com/huggingface/datasets/pull/652",

"merged_at": "2020-09-21T08:28:42Z",

"patch_url": "https://github.com/huggingface/datasets/pull/652.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/652"

} | Fix #647 | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/652/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/652/timeline | null | null | true |

https://api.github.com/repos/huggingface/datasets/issues/5087 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/5087/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/5087/comments | https://api.github.com/repos/huggingface/datasets/issues/5087/events | https://github.com/huggingface/datasets/pull/5087 | 1,400,487,967 | PR_kwDODunzps5AW-N9 | 5,087 | Fix filter with empty indices | {

"avatar_url": "https://avatars.githubusercontent.com/u/23029765?v=4",

"events_url": "https://api.github.com/users/Mouhanedg56/events{/privacy}",

"followers_url": "https://api.github.com/users/Mouhanedg56/followers",

"following_url": "https://api.github.com/users/Mouhanedg56/following{/other_user}",

"gists_url": "https://api.github.com/users/Mouhanedg56/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/Mouhanedg56",

"id": 23029765,

"login": "Mouhanedg56",

"node_id": "MDQ6VXNlcjIzMDI5NzY1",

"organizations_url": "https://api.github.com/users/Mouhanedg56/orgs",

"received_events_url": "https://api.github.com/users/Mouhanedg56/received_events",

"repos_url": "https://api.github.com/users/Mouhanedg56/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/Mouhanedg56/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/Mouhanedg56/subscriptions",

"type": "User",

"url": "https://api.github.com/users/Mouhanedg56"

} | [] | closed | false | null | [] | null | [

"_The documentation is not available anymore as the PR was closed or merged._"

] | "2022-10-07T01:07:00Z" | "2022-10-07T18:43:03Z" | "2022-10-07T18:40:26Z" | CONTRIBUTOR | null | 0 | {

"diff_url": "https://github.com/huggingface/datasets/pull/5087.diff",

"html_url": "https://github.com/huggingface/datasets/pull/5087",

"merged_at": "2022-10-07T18:40:26Z",

"patch_url": "https://github.com/huggingface/datasets/pull/5087.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/5087"

} | Fix #5085 | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/5087/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/5087/timeline | null | null | true |

https://api.github.com/repos/huggingface/datasets/issues/3735 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3735/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3735/comments | https://api.github.com/repos/huggingface/datasets/issues/3735/events | https://github.com/huggingface/datasets/issues/3735 | 1,140,087,891 | I_kwDODunzps5D9FxT | 3,735 | Performance of `datasets` at scale | {

"avatar_url": "https://avatars.githubusercontent.com/u/8264887?v=4",

"events_url": "https://api.github.com/users/lvwerra/events{/privacy}",

"followers_url": "https://api.github.com/users/lvwerra/followers",

"following_url": "https://api.github.com/users/lvwerra/following{/other_user}",

"gists_url": "https://api.github.com/users/lvwerra/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/lvwerra",

"id": 8264887,

"login": "lvwerra",

"node_id": "MDQ6VXNlcjgyNjQ4ODc=",

"organizations_url": "https://api.github.com/users/lvwerra/orgs",

"received_events_url": "https://api.github.com/users/lvwerra/received_events",

"repos_url": "https://api.github.com/users/lvwerra/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/lvwerra/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/lvwerra/subscriptions",

"type": "User",

"url": "https://api.github.com/users/lvwerra"

} | [] | open | false | null | [] | null | [

"> using command line git-lfs - [...] 300MB/s!\r\n\r\nwhich server location did you upload from?",

"From GCP region `us-central1-a`.",

"The most surprising part to me is the saving time. Wondering if it could be due to compression (`ParquetWriter` uses SNAPPY compression by default; it can be turned off with `to_parquet(..., compression=None)`). ",

"+1 to what @mariosasko mentioned. Also, @lvwerra did you parallelize `to_parquet` using similar approach in #2747? (we used multiprocessing at the shard level). I'm working on a similar PR to add multi_proc in `to_parquet` which might give you further speed up. \r\nStas benchmarked his approach and mine in this [gist](https://gist.github.com/stas00/dc1597a1e245c5915cfeefa0eee6902c) for `lama` dataset when we were working on adding multi_proc support for `to_json`.",

"@mariosasko I did not turn it off but I can try the next time - I have to run the pipeline again, anyway. \r\n\r\n@bhavitvyamalik Yes, I also sharded the dataset and used multiprocessing to save each shard. I'll have a closer look at your approach, too."

] | "2022-02-16T14:23:32Z" | "2022-03-15T09:15:29Z" | null | MEMBER | null | null | null | # Performance of `datasets` at 1TB scale

## What is this?

While processing a large dataset I monitored the performance of the `datasets` library to see whether there are any bottlenecks. The insights from this analysis could guide decisions on how to improve the performance of the library.

## Dataset

The dataset is a 1.1TB extract from GitHub with 120M code files, stored as 5000 `.json.gz` files. The goal of the preprocessing is to remove duplicates and filter files based on their stats. While calculating the hashes for deduplication and the stats for filtering can be parallelized, the filtering itself is run with a single process. After processing, the files are pushed to the hub.
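
To illustrate why the hashing step parallelizes while the filtering step does not, here is a minimal sketch of hash-based deduplication. It is not the pipeline's actual code: the choice of MD5, the whitespace normalization, the `content` column name, and the function names are all assumptions made for the example.

```python
import hashlib
import re

_WHITESPACE = re.compile(r"\s+")


def content_hash(text):
    """Hash a file's content after stripping whitespace (assumed normalization).

    Each call is independent of the others, so this step can be spread
    over many workers.
    """
    normalized = _WHITESPACE.sub("", text)
    return hashlib.md5(normalized.encode("utf-8")).hexdigest()


def deduplicate(examples, key="content"):
    """Keep the first example for every distinct content hash.

    The `seen` set is shared state that every example must be checked
    against in order, which is why this step runs in a single process here.
    """
    seen = set()
    unique = []
    for example in examples:
        digest = content_hash(example[key])
        if digest not in seen:
            seen.add(digest)
            unique.append(example)
    return unique


files = [{"content": "a = 1"}, {"content": "a=1"}, {"content": "b = 2"}]
unique_files = deduplicate(files)
```

In this sketch `"a = 1"` and `"a=1"` collapse to the same hash, so only the first of the two is kept.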

## Machine

The experiment was run on a `m1` machine on GCP with 96 CPU cores and 1.3TB RAM.

## Performance breakdown

- Loading the data **3.5h** (_30sec_ from cache)

- **1h57min** single core loading (not sure what is going on here, corresponds to second progress bar)

- **1h10min** multi core json reading

- **20min** remaining time before and after the two main processes mentioned above

- Process the data **2h** (_20min_ from cache)

- **20min** Getting ready for processing

- **40min** Hashing and files stats (96 workers)

- **58min** Deduplication filtering (single worker)

- Save parquet files **5h**

- Saving 1000 parquet files (16 workers)

- Push to hub **37min**

- **34min** git add

- **3min** git push (several hours with `Repository.git_push()`)

## Conclusion

It appears that loading and saving the data are the main bottleneck at that scale (**8.5h**), whereas processing (**2h**) and pushing the data to the hub (**0.5h**) are relatively fast. To optimize the performance at this scale it would make sense to consider such an end-to-end example and target the bottlenecks, which seem to be loading from and saving to disk.
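
The figures in this conclusion can be rechecked with a few lines of arithmetic. This is only a sketch that plugs in the uncached stage times from the breakdown above; the dictionary keys are labels chosen for the example.

```python
# Uncached wall-clock time per stage, in hours, taken from the breakdown above.
stage_hours = {
    "load": 3.5,
    "process": 2.0,
    "save": 5.0,
    "push": 37 / 60,
}

total_hours = sum(stage_hours.values())
io_hours = stage_hours["load"] + stage_hours["save"]
io_share = io_hours / total_hours

print(f"disk I/O: {io_hours:.1f}h of {total_hours:.1f}h ({io_share:.0%})")
```

Loading and saving together account for roughly three quarters of the end-to-end run.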

## Notes

- map operation on a 1TB dataset with 96 workers requires >1TB RAM

- map operation does not maintain 100% CPU utilization with 96 workers

- sometimes when the script crashes, all the data files end up with a corresponding `*.lock` file in the data folder (or several, e.g. `*.lock.lock`, when it has happened several times). This causes the cache **not** to be triggered (which is significant at that scale), I guess because there are new data files

- parallelizing `to_parquet` decreased the saving time from 17h to 5h; however, adding more workers at this point had almost no effect. I am not sure whether this is:

a) a bug in my parallelization logic,

b) an I/O limit when loading data from disk to memory, or

c) an I/O limit when writing from memory to disk.

- Using `Repository.git_push()` was much slower than using command line `git-lfs` - 10-20MB/s vs. 300MB/s! The `Dataset.push_to_hub()` function is even slower as it only uploads one file at a time with only a few MB/s, whereas `Repository.git_push()` pushes files in parallel (each at a similar speed).
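
For reference, the shard-level parallelization of `to_parquet` mentioned in the notes can be sketched roughly as follows. This is an illustration under assumptions, not the exact code that was run: `save_shard`, `save_all`, and the file naming scheme are hypothetical, while `Dataset.shard` and `Dataset.to_parquet` are the existing `datasets` methods. Extra keyword arguments (for example `compression=None`) are forwarded to `to_parquet`.

```python
from functools import partial
from multiprocessing import Pool
from pathlib import Path


def save_shard(index, ds, num_shards, out_dir, **writer_kwargs):
    """Write shard `index` of `ds` to its own parquet file and return the path."""
    shard = ds.shard(num_shards=num_shards, index=index, contiguous=True)
    # Hypothetical naming scheme, chosen only for this example.
    path = Path(out_dir) / f"shard-{index:05d}-of-{num_shards:05d}.parquet"
    shard.to_parquet(str(path), **writer_kwargs)
    return str(path)


def save_all(ds, out_dir, num_shards=1000, num_proc=16, **writer_kwargs):
    """Save every shard with a pool of worker processes."""
    Path(out_dir).mkdir(parents=True, exist_ok=True)
    worker = partial(
        save_shard, ds=ds, num_shards=num_shards, out_dir=out_dir, **writer_kwargs
    )
    with Pool(num_proc) as pool:
        # Shards finish in arbitrary order, so sort the returned paths.
        return sorted(pool.imap_unordered(worker, range(num_shards)))
```

Timing `save_all` once with `compression=None` and once with the default would be one way to separate compression cost from raw disk throughput, although it does not by itself tell options a) to c) apart.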

cc @lhoestq @julien-c @LysandreJik @SBrandeis

| {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 4,

"heart": 12,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 16,

"url": "https://api.github.com/repos/huggingface/datasets/issues/3735/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/3735/timeline | null | null | false |

https://api.github.com/repos/huggingface/datasets/issues/4019 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/4019/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/4019/comments | https://api.github.com/repos/huggingface/datasets/issues/4019/events | https://github.com/huggingface/datasets/pull/4019 | 1,180,628,293 | PR_kwDODunzps41AlFk | 4,019 | Make yelp_polarity streamable | {

"avatar_url": "https://avatars.githubusercontent.com/u/42851186?v=4",

"events_url": "https://api.github.com/users/lhoestq/events{/privacy}",

"followers_url": "https://api.github.com/users/lhoestq/followers",

"following_url": "https://api.github.com/users/lhoestq/following{/other_user}",

"gists_url": "https://api.github.com/users/lhoestq/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/lhoestq",

"id": 42851186,

"login": "lhoestq",

"node_id": "MDQ6VXNlcjQyODUxMTg2",

"organizations_url": "https://api.github.com/users/lhoestq/orgs",

"received_events_url": "https://api.github.com/users/lhoestq/received_events",

"repos_url": "https://api.github.com/users/lhoestq/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/lhoestq/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/lhoestq/subscriptions",

"type": "User",

"url": "https://api.github.com/users/lhoestq"

} | [] | closed | false | null | [] | null | [

"_The documentation is not available anymore as the PR was closed or merged._",

"The CI is failing because of the incomplete dataset card - this is unrelated to the goal of this PR so we can ignore it"

] | "2022-03-25T10:42:51Z" | "2022-03-25T15:02:19Z" | "2022-03-25T14:57:16Z" | MEMBER | null | 0 | {

"diff_url": "https://github.com/huggingface/datasets/pull/4019.diff",

"html_url": "https://github.com/huggingface/datasets/pull/4019",

"merged_at": "2022-03-25T14:57:15Z",

"patch_url": "https://github.com/huggingface/datasets/pull/4019.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/4019"

} | It was using `dl_manager.download_and_extract` on a TAR archive, which is not supported in streaming mode. I replaced this by `dl_manager.iter_archive` | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/4019/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/4019/timeline | null | null | true |

https://api.github.com/repos/huggingface/datasets/issues/5315 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/5315/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/5315/comments | https://api.github.com/repos/huggingface/datasets/issues/5315/events | https://github.com/huggingface/datasets/issues/5315 | 1,470,026,797 | I_kwDODunzps5XntQt | 5,315 | Adding new splits to a dataset script with existing old splits info in metadata's `dataset_info` fails | {

"avatar_url": "https://avatars.githubusercontent.com/u/16348744?v=4",

"events_url": "https://api.github.com/users/polinaeterna/events{/privacy}",

"followers_url": "https://api.github.com/users/polinaeterna/followers",

"following_url": "https://api.github.com/users/polinaeterna/following{/other_user}",

"gists_url": "https://api.github.com/users/polinaeterna/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/polinaeterna",

"id": 16348744,

"login": "polinaeterna",

"node_id": "MDQ6VXNlcjE2MzQ4NzQ0",

"organizations_url": "https://api.github.com/users/polinaeterna/orgs",

"received_events_url": "https://api.github.com/users/polinaeterna/received_events",

"repos_url": "https://api.github.com/users/polinaeterna/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/polinaeterna/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/polinaeterna/subscriptions",

"type": "User",

"url": "https://api.github.com/users/polinaeterna"

} | [

{

"color": "d73a4a",

"default": true,

"description": "Something isn't working",

"id": 1935892857,

"name": "bug",

"node_id": "MDU6TGFiZWwxOTM1ODkyODU3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/bug"

}

] | open | false | {

"avatar_url": "https://avatars.githubusercontent.com/u/16348744?v=4",

"events_url": "https://api.github.com/users/polinaeterna/events{/privacy}",

"followers_url": "https://api.github.com/users/polinaeterna/followers",

"following_url": "https://api.github.com/users/polinaeterna/following{/other_user}",

"gists_url": "https://api.github.com/users/polinaeterna/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/polinaeterna",

"id": 16348744,

"login": "polinaeterna",

"node_id": "MDQ6VXNlcjE2MzQ4NzQ0",

"organizations_url": "https://api.github.com/users/polinaeterna/orgs",

"received_events_url": "https://api.github.com/users/polinaeterna/received_events",

"repos_url": "https://api.github.com/users/polinaeterna/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/polinaeterna/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/polinaeterna/subscriptions",

"type": "User",

"url": "https://api.github.com/users/polinaeterna"

} | [

{

"avatar_url": "https://avatars.githubusercontent.com/u/16348744?v=4",

"events_url": "https://api.github.com/users/polinaeterna/events{/privacy}",

"followers_url": "https://api.github.com/users/polinaeterna/followers",

"following_url": "https://api.github.com/users/polinaeterna/following{/other_user}",

"gists_url": "https://api.github.com/users/polinaeterna/gists{/gist_id}",

"gravatar_id": "",